Adrian Basarab

Efficient Convolutional Forward Model for Passive Acoustic Mapping and Temporal Monitoring

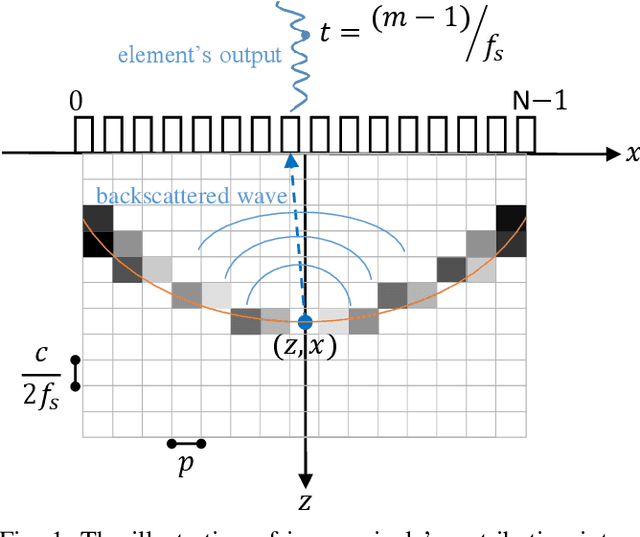

Jan 12, 2026

Abstract:Passive acoustic mapping (PAM) is a key imaging technique for characterizing cavitation activity in therapeutic ultrasound applications. Recent model-based beamforming algorithms offer high reconstruction quality and strong physical interpretability. However, their computational burden and limited temporal resolution restrict their use in applications with time-evolving cavitation. To address these challenges, we introduce a PAM beamforming framework based on a novel convolutional formulation in the time domain, which enables efficient computation. In this framework, PAM is formulated as an inverse problem in which the forward operator maps spatiotemporal cavitation activity to recorded radio-frequency signals accounting for time-of-flight delays defined by the acquisition geometry. We then formulate a regularized inversion algorithm that incorporates prior knowledge on cavitation activity. Experimental results demonstrate that our framework outperforms classical beamforming methods, providing higher temporal resolution than frequency-domain techniques while substantially reducing computational burden compared with iterative time-domain formulations.
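
The forward operator described in this abstract can be illustrated with a deliberately simplified shift-and-sum model. This is a sketch only, not the paper's convolutional formulation: it assumes integer sample delays, unit amplitudes, and no attenuation, and the names `forward`, `adjoint`, `activity`, and `delays` are hypothetical. Each pixel-to-sensor path acts as a convolution with a shifted impulse, and the adjoint is a plain delay-and-sum.

```python
def forward(activity, delays, n_samples):
    """Map source activity to sensor signals (illustrative model only).

    activity[p][t] is the emission of pixel p at time sample t.
    delays[m][p] is an assumed integer time-of-flight (in samples)
    from pixel p to sensor m.
    """
    rf = [[0.0] * n_samples for _ in range(len(delays))]
    for m in range(len(delays)):
        for p, source in enumerate(activity):
            d = delays[m][p]
            for t, value in enumerate(source):
                if t + d < n_samples:
                    # Convolution with a delta shifted by d samples.
                    rf[m][t + d] += value
    return rf


def adjoint(rf, delays, n_times):
    """Adjoint of `forward`: delay-and-sum back onto the pixel grid."""
    n_pixels = len(delays[0])
    activity = [[0.0] * n_times for _ in range(n_pixels)]
    for m, channel in enumerate(rf):
        for p in range(n_pixels):
            d = delays[m][p]
            for t in range(n_times):
                if t + d < len(channel):
                    activity[p][t] += channel[t + d]
    return activity


def inner(a, b):
    """Inner product of two nested lists with the same shape."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# Two pixels, two sensors, three emission samples, five recorded samples.
x = [[1, 2, 0], [0, 1, 3]]
tof = [[0, 1], [2, 1]]
y = forward(x, tof, n_samples=5)
back = adjoint(y, tof, n_times=3)
```

A regularized inversion would iterate with such a forward/adjoint pair; the check `inner(forward(x), y) == inner(x, adjoint(y))` confirms that the two functions are consistent with each other.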

Ultrasound Image Generation using Latent Diffusion Models

Feb 12, 2025

Abstract:Diffusion models for image generation have been a subject of increasing interest due to their ability to generate diverse, high-quality images. Image generation has immense potential in medical imaging because open-source medical images are difficult to obtain compared to natural images, especially for rare conditions. The generated images can be used later to train classification and segmentation models. In this paper, we propose simulating realistic ultrasound (US) images by successive fine-tuning of large diffusion models on different publicly available databases. To do so, we fine-tuned Stable Diffusion, a state-of-the-art latent diffusion model, on BUSI (Breast US Images), a breast ultrasound image dataset. We successfully generated high-quality US images of the breast using simple prompts that specify the organ and pathology, which appeared realistic to three experienced US scientists and a US radiologist. Additionally, we provided user control by conditioning the model with segmentations through ControlNet. We will release the source code at http://code.sonography.ai/ to allow fast US image generation to the scientific community.

A Weighted Hankel Approach and Cramér-Rao Bound Analysis for Quantitative Acoustic Microscopy Imaging

Dec 10, 2024

Abstract:Quantitative acoustic microscopy (QAM) is a cutting-edge imaging modality that leverages very high-frequency ultrasound to characterize the acoustic and mechanical properties of biological tissues at microscopic resolutions. Radio-frequency echo signals are digitized and processed to yield two-dimensional maps. This paper introduces a weighted Hankel-based spectral method with a reweighting strategy to enhance robustness with regard to noise and reduce unreliable acoustic parameter estimates. Additionally, we derive, for the first time in QAM, Cramér-Rao bounds to establish theoretical performance benchmarks for acoustic parameter estimation. Simulations and experimental results demonstrate that the proposed method consistently outperforms the standard autoregressive approach, particularly under challenging conditions. These advancements promise to improve the accuracy and reliability of tissue characterization, enhancing the potential of QAM for biomedical applications.

Denoising Plane Wave Ultrasound Images Using Diffusion Probabilistic Models

Aug 20, 2024

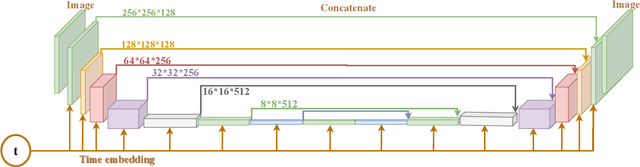

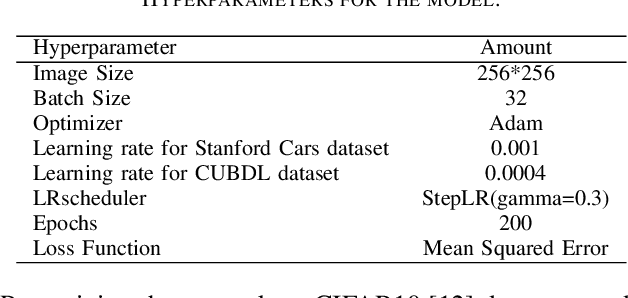

Abstract:Ultrasound plane wave imaging is a cutting-edge technique that enables high frame-rate imaging. However, one challenge of high frame-rate ultrasound imaging is the high noise level of the resulting images, which hinders its wider adoption. Therefore, the development of a denoising method becomes imperative to augment the quality of plane wave images. Drawing inspiration from Denoising Diffusion Probabilistic Models (DDPMs), our proposed solution aims to enhance plane wave image quality. Specifically, the method considers the distinction between low-angle and high-angle compounding plane waves as noise and effectively eliminates it by adapting a DDPM to beamformed radiofrequency (RF) data. The method underwent training using only 400 simulated images. In addition, our approach employs natural image segmentation masks as intensity maps for the generated images, resulting in accurate denoising for various anatomy shapes. The proposed method was assessed across simulation, phantom, and in vivo images. The results of the evaluations indicate that our approach not only enhances image quality on simulated data but also demonstrates effectiveness on phantom and in vivo data in terms of image quality. Comparative analysis with other methods underscores the superiority of our proposed method across various evaluation metrics. The source code and trained model will be released along with the dataset at: http://code.sonography.ai

Quantum Algorithm for Signal Denoising

Dec 24, 2023

Abstract:This letter presents a novel *quantum algorithm* for signal denoising, which performs a thresholding in the frequency domain through amplitude amplification and using an adaptive threshold determined by local mean values. The proposed algorithm is able to process *both classical and quantum* signals. It is parametrically faster than previous classical and quantum denoising algorithms. Numerical results show that it is efficient at removing noise of both classical and quantum origin, significantly outperforming existing quantum algorithms in this respect, especially in the presence of quantum noise.
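
For readers unfamiliar with frequency-domain thresholding, the following is a purely classical sketch of the general idea; it is not the quantum algorithm of the letter and involves no amplitude amplification. It assumes that the "local mean" is the average spectral magnitude over neighbouring frequency bins, and the function name, `half_window`, and `factor` are hypothetical choices made for illustration.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (O(n^2), fine for short signals)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)) / n
            for t in range(n)]


def local_mean_threshold_denoise(signal, half_window=4, factor=2.0):
    """Keep a frequency bin only if its magnitude exceeds `factor` times
    the mean magnitude of its circular neighbourhood (assumed rule)."""
    spectrum = dft(signal)
    mags = [abs(c) for c in spectrum]
    n = len(mags)
    kept = []
    for k in range(n):
        window = [mags[(k + d) % n]
                  for d in range(-half_window, half_window + 1)]
        local_mean = sum(window) / len(window)
        kept.append(spectrum[k] if mags[k] > factor * local_mean else 0j)
    # The input is real, so the imaginary residue is numerical noise.
    return [v.real for v in idft(kept)]


# A clean sinusoid plus a weak broadband disturbance (a small impulse).
clean = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
disturbed = list(clean)
disturbed[0] += 0.1
restored = local_mean_threshold_denoise(disturbed)
```

In this toy case the impulse spreads evenly over all frequency bins, so only the two bins that carry the sinusoid survive the threshold and most of the disturbance is removed.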

* 6 pages, 3 figures

Mask-guided Data Augmentation for Multiparametric MRI Generation with a Rare Hepatocellular Carcinoma

Jul 30, 2023

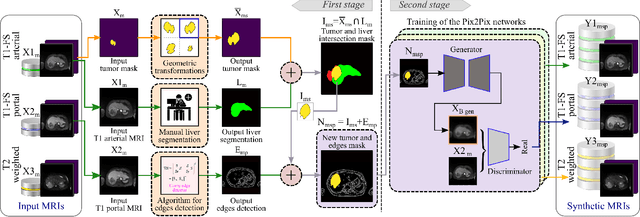

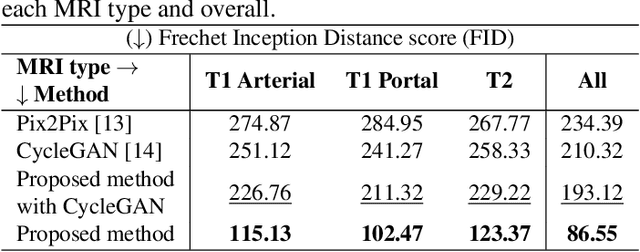

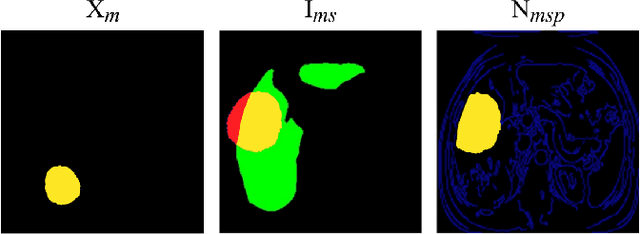

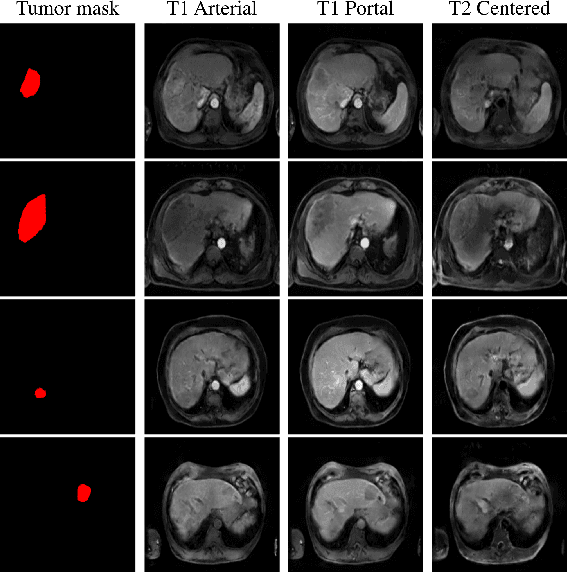

Abstract:Data augmentation is classically used to improve the overall performance of deep learning models. It is, however, challenging in the case of medical applications, and in particular for multiparametric datasets. For example, traditional geometric transformations used in several applications to generate synthetic images can modify the patients' anatomy in a non-realistic manner. Therefore, dedicated image generation techniques are necessary in the medical field to, for example, mimic a given pathology realistically. This paper introduces a new data augmentation architecture that generates synthetic multiparametric (T1 arterial, T1 portal, and T2) magnetic resonance images (MRI) of massive macrotrabecular subtype hepatocellular carcinoma with their corresponding tumor masks through a generative deep learning approach. The proposed architecture creates liver tumor masks and abdominal edges used as input in a Pix2Pix network for synthetic data creation. The method's efficiency is demonstrated by training it on a limited multiparametric dataset of MRI triplets from 89 patients with liver lesions to generate 1,000 synthetic triplets and their corresponding liver tumor masks. The resulting Fréchet Inception Distance score was 86.55. The proposed approach was among the winners of the 2021 data augmentation challenge organized by the French Society of Radiology.
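
For context on the reported score, the Fréchet Inception Distance is commonly defined as the Fréchet distance between two Gaussians fitted to Inception-network features of real and generated images. The standard definition is given below; the symbols $\mu_r, \Sigma_r$ (real) and $\mu_g, \Sigma_g$ (generated) are notation introduced here, not taken from the abstract. Lower values indicate closer feature distributions.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g
  - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```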

Deep Ultrasound Denoising Using Diffusion Probabilistic Models

Jun 12, 2023

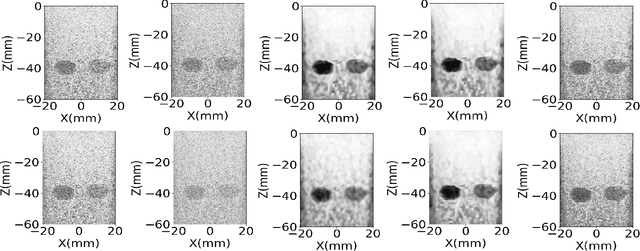

Abstract:Ultrasound images are widespread in medical diagnosis for musculoskeletal, cardiac, and obstetrical imaging due to the efficiency and non-invasiveness of the acquisition methodology. However, the acquired images are degraded by acoustic (e.g. reverberation and clutter) and electronic sources of noise. To improve the Peak Signal to Noise Ratio (PSNR) of the images, previous denoising methods often remove the speckles, which could be informative for radiologists and also for quantitative ultrasound. Herein, a method based on the recent Denoising Diffusion Probabilistic Models (DDPM) is proposed. It iteratively enhances the image quality by eliminating the noise while preserving the speckle texture. It is worth noting that the proposed method is trained in a completely unsupervised manner, and no annotated data is required. The experimental blind test results show that our method outperforms the previous nonlocal means denoising methods in terms of PSNR and Generalized Contrast to Noise Ratio (GCNR) while preserving speckles.
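
The two metrics named in this abstract can be sketched as follows, using their usual textbook definitions rather than the authors' evaluation code. PSNR compares an estimate with a reference image, and gCNR is one minus the overlap between the intensity histograms of two regions. The function names, the flat-list image representation, and the number of histogram bins are assumptions.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB for two equal-length pixel lists."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def gcnr(inside, outside, bins=64):
    """Generalized contrast-to-noise ratio: 1 - histogram overlap.

    `inside` and `outside` are pixel values from two regions, for
    example a cyst and the surrounding speckle.
    """
    lo = min(min(inside), min(outside))
    hi = max(max(inside), max(outside))
    if hi == lo:
        return 0.0  # all pixels equal, regions indistinguishable
    width = (hi - lo) / bins

    def histogram(values):
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value falls in the last bin.
            counts[min(int((v - lo) / width), bins - 1)] += 1
        return [c / len(values) for c in counts]

    p_in, p_out = histogram(inside), histogram(outside)
    return 1.0 - sum(min(a, b) for a, b in zip(p_in, p_out))
```

A gCNR of 1 means the two regions are perfectly separable by intensity, while 0 means their histograms coincide.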

DIVA: Deep Unfolded Network from Quantum Interactive Patches for Image Restoration

Dec 31, 2022

Abstract:This paper presents a deep neural network called DIVA unfolding a baseline adaptive denoising algorithm (De-QuIP), relying on the theory of quantum many-body physics. Furthermore, it is shown that with very slight modifications, this network can be enhanced to solve more challenging image restoration tasks such as image deblurring, super-resolution and inpainting. Despite a compact and interpretable (from a physical perspective) architecture, the proposed deep learning network outperforms several recent algorithms from the literature, designed specifically for each task. The key ingredients of the proposed method are, on the one hand, its ability to handle non-local image structures through the patch-interaction term and the quantum-based Hamiltonian operator, and, on the other hand, its flexibility to adapt the hyperparameters patch-wise, due to the training process.

Inverse Problem of Ultrasound Beamforming with Denoising-Based Regularized Solutions

Jun 16, 2022

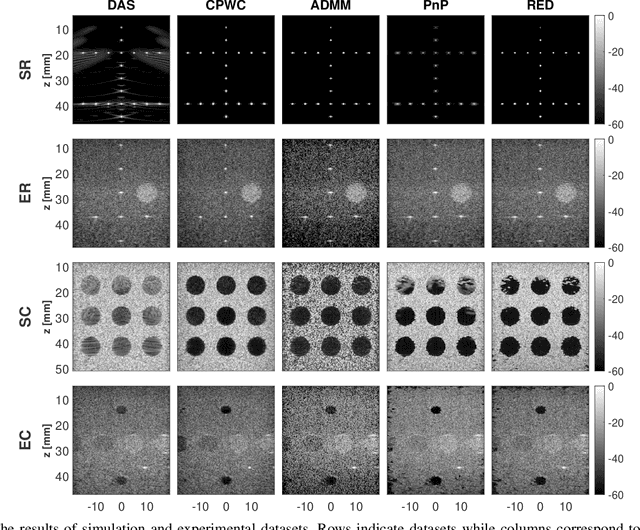

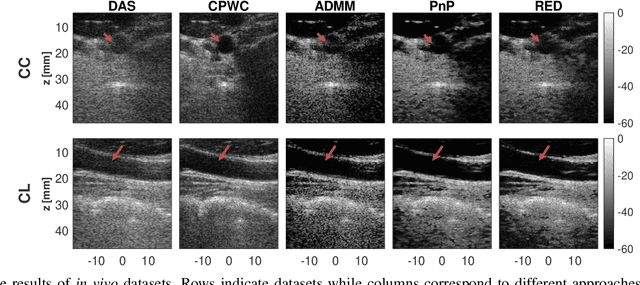

Abstract:During the past few years, inverse problem formulations of ultrasound beamforming have attracted a growing interest. They usually pose beamforming as a minimization problem of a fidelity term resulting from the measurement model plus a regularization term that enforces a certain class on the resulting image. Herein, we take advantage of the alternating direction method of multipliers to propose a flexible framework in which each term is optimized separately. Furthermore, the proposed beamforming formulation is extended to replace the regularization term by a denoising algorithm, based on the recent approaches called plug-and-play (PnP) and regularization by denoising (RED). Such regularizations are shown in this work to better preserve speckle texture, an important feature in ultrasound imaging, than sparsity-based approaches previously proposed in the literature. The efficiency of the proposed methods is evaluated on simulations, real phantoms, and *in vivo* data available from a plane-wave imaging challenge in medical ultrasound. Furthermore, a comprehensive comparison with existing ultrasound beamforming methods is also provided. These results show that the RED algorithm gives the best image quality in terms of contrast index while preserving the speckle statistics.
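
The splitting idea in this abstract can be sketched with a toy plug-and-play ADMM loop. This is a minimal illustration under strong assumptions, not the authors' beamformer: the measurement model is a hypothetical element-wise gain (`gains`) so that the data-fidelity step has a closed form, and the "denoiser" is simple soft-thresholding, which makes the loop coincide with classical ADMM for an L1 penalty. Any other denoiser could be passed in its place.

```python
import math


def soft_threshold(values, tau):
    """Shrink each value toward zero by tau (proximal map of tau*|x|)."""
    return [math.copysign(max(abs(v) - tau, 0.0), v) for v in values]


def pnp_admm(y, gains, denoiser, rho=1.0, iterations=100):
    """Toy plug-and-play ADMM for 0.5*||gains*x - y||^2 + regularizer.

    x-update: closed-form least squares (valid for the diagonal model).
    z-update: the regularizer's proximal step, replaced by `denoiser`.
    u-update: scaled dual variable.
    """
    n = len(y)
    z = [0.0] * n
    u = [0.0] * n
    for _ in range(iterations):
        x = [(a * yi + rho * (zi - ui)) / (a * a + rho)
             for a, yi, zi, ui in zip(gains, y, z, u)]
        z = denoiser([xi + ui for xi, ui in zip(x, u)])
        u = [ui + xi - zi for ui, xi, zi in zip(u, x, z)]
    return z


# With unit gains and threshold 1.0 the loop converges to soft_threshold(y, 1.0).
measurements = [3.0, -0.5, 1.5]
estimate = pnp_admm(measurements, [1.0, 1.0, 1.0],
                    lambda v: soft_threshold(v, 1.0))
```

Because the fidelity and regularization steps are decoupled, swapping the lambda for a learned or nonlocal denoiser changes only the z-update, which is the flexibility the abstract refers to.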

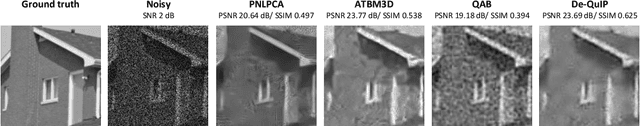

A Novel Image Denoising Algorithm Using Concepts of Quantum Many-Body Theory

Dec 16, 2021

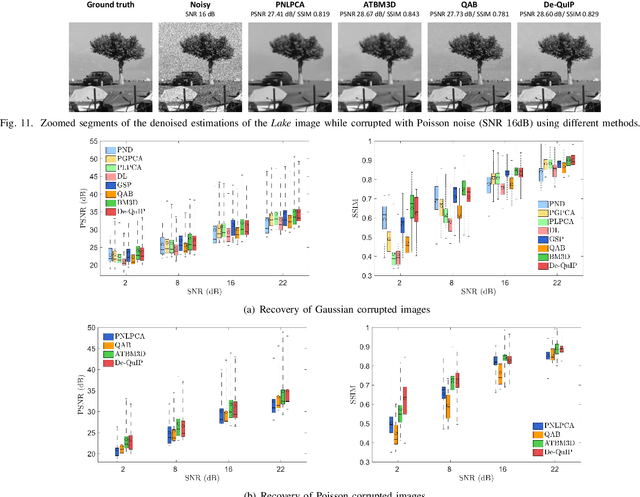

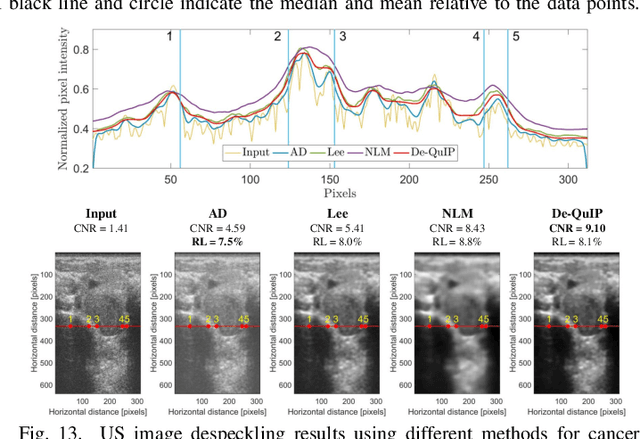

Abstract:Sparse representation of real-life images is a very effective approach in imaging applications, such as denoising. In recent years, with the growth of computing power, data-driven strategies exploiting the redundancy within patches extracted from one or several images to increase sparsity have become more prominent. This paper presents a novel image denoising algorithm exploiting such an image-dependent basis inspired by the quantum many-body theory. Based on patch analysis, the similarity measures in a local image neighborhood are formalized through a term akin to interaction in quantum mechanics that can efficiently preserve the local structures of real images. The versatile nature of this adaptive basis extends the scope of its application to image-independent or image-dependent noise scenarios without any adjustment. We carry out a rigorous comparison with contemporary methods to demonstrate the denoising capability of the proposed algorithm regardless of the image characteristics, noise statistics and intensity. We illustrate the properties of the hyperparameters and their respective effects on the denoising performance, together with automated rules for selecting their values close to the optimal one in experimental setups where ground truth is not available. Finally, we show the ability of our approach to deal with practical image denoising problems such as medical ultrasound image despeckling applications.
