Adam J. Thorpe

Learning Generalizable Neural Operators for Inverse Problems

Dec 19, 2025

Abstract: Inverse problems challenge existing neural operator architectures because ill-posed inverse maps violate continuity, uniqueness, and stability assumptions. We introduce B2B$^{-1}$, an inverse basis-to-basis neural operator framework that addresses this limitation. Our key innovation is to decouple function representation from the inverse map. We learn neural basis functions for the input and output spaces, then train inverse models that operate on the resulting coefficient space. This structure allows us to learn deterministic, invertible, and probabilistic models within a single framework, and to choose models based on the degree of ill-posedness. We evaluate our approach on six inverse PDE benchmarks, including two novel datasets, and compare against existing invertible neural operator baselines. We learn probabilistic models that capture uncertainty and input variability, and remain robust to measurement noise due to implicit denoising in the coefficient calculation. Our results show consistent re-simulation performance across varying levels of ill-posedness. By separating representation from inversion, our framework enables scalable surrogate models for inverse problems that generalize across instances, domains, and degrees of ill-posedness.

Zero to Autonomy in Real-Time: Online Adaptation of Dynamics in Unstructured Environments

Sep 15, 2025

Abstract: Autonomous robots must go from zero prior knowledge to safe control within seconds to operate in unstructured environments. Abrupt terrain changes, such as a sudden transition to ice, create dynamics shifts that can destabilize planners unless the model adapts in real-time. We present a method for online adaptation that combines function encoders with recursive least squares, treating the function encoder coefficients as latent states updated from streaming odometry. This yields constant-time coefficient estimation without gradient-based inner-loop updates, enabling adaptation from only a few seconds of data. We evaluate our approach on a Van der Pol system to highlight algorithmic behavior, in a Unity simulator for high-fidelity off-road navigation, and on a Clearpath Jackal robot, including on a challenging terrain at a local ice rink. Across these settings, our method improves model accuracy and downstream planning, reducing collisions compared to static and meta-learning baselines.
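
The coefficient update described in this abstract can be illustrated with a textbook recursive least squares (RLS) filter. The sketch below is not the authors' implementation: the `basis` function is a hypothetical hand-written stand-in for learned neural basis functions, the output is scalar, and the forgetting factor and initial covariance are assumed values chosen for the demo.

```python
import numpy as np

def basis(x):
    """Hypothetical stand-in for k learned basis functions g_1..g_k."""
    return np.array([1.0, x, x**2, np.sin(x)])

class RecursiveLeastSquares:
    """Standard RLS estimate of c in y ~ basis(x) @ c, one sample at a time."""

    def __init__(self, k, forgetting=1.0, init_cov=1e3):
        self.c = np.zeros(k)            # current coefficient estimate
        self.P = init_cov * np.eye(k)   # inverse-information ("covariance") matrix
        self.lam = forgetting           # values below 1.0 discount old data

    def update(self, phi, y):
        # Each update costs O(k^2) regardless of how much data has been seen.
        P_phi = self.P @ phi
        gain = P_phi / (self.lam + phi @ P_phi)
        self.c = self.c + gain * (y - phi @ self.c)
        self.P = (self.P - np.outer(gain, P_phi)) / self.lam
        return self.c

def run_demo(seed=0, steps=200):
    """Stream noisy samples of a function in the span of the basis."""
    rng = np.random.default_rng(seed)
    true_c = np.array([0.5, -1.0, 0.25, 2.0])
    rls = RecursiveLeastSquares(k=4)
    for _ in range(steps):
        x = rng.uniform(-2.0, 2.0)
        y = basis(x) @ true_c + 0.01 * rng.standard_normal()
        rls.update(basis(x), y)
    return true_c, rls.c

if __name__ == "__main__":
    true_c, estimate = run_demo()
    print("true coefficients:     ", true_c)
    print("estimated coefficients:", estimate)
```

Setting `forgetting` slightly below 1.0 is one common way to let such a filter track an abrupt change in the underlying function, at the cost of noisier estimates.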

Online Adaptation of Terrain-Aware Dynamics for Planning in Unstructured Environments

Jun 04, 2025

Abstract: Autonomous mobile robots operating in remote, unstructured environments must adapt to new, unpredictable terrains that can change rapidly during operation. In such scenarios, a critical challenge becomes estimating the robot's dynamics on changing terrain in order to enable reliable, accurate navigation and planning. We present a novel online adaptation approach for terrain-aware dynamics modeling and planning using function encoders. Our approach efficiently adapts to new terrains at runtime using limited online data without retraining or fine-tuning. By learning a set of neural network basis functions that span the robot dynamics on diverse terrains, we enable rapid online adaptation to new, unseen terrains and environments as a simple least-squares calculation. We demonstrate our approach for terrain adaptation in a Unity-based robotics simulator and show that the downstream controller has better empirical performance due to higher accuracy of the learned model. This leads to fewer collisions with obstacles while navigating in cluttered environments as compared to a neural ODE baseline.
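
To make the "simple least-squares calculation" concrete, here is a minimal sketch of fitting coefficients for a fixed set of basis functions from a small batch of data. The three-element `basis` is a hypothetical placeholder for the learned neural network basis, and the small ridge term is an assumption added for numerical stability; neither comes from the paper.

```python
import numpy as np

def basis(x):
    """Hypothetical fixed basis: maps inputs of shape (n,) to features of shape (n, 3)."""
    return np.stack([np.ones_like(x), x, np.cos(x)], axis=1)

def fit_coefficients(x, y, ridge=1e-8):
    """Solve the regularized normal equations (G^T G + ridge I) c = G^T y."""
    G = basis(x)
    gram = G.T @ G + ridge * np.eye(G.shape[1])
    return np.linalg.solve(gram, G.T @ y)

def predict(x, coeffs):
    """Evaluate the adapted model at new inputs."""
    return basis(x) @ coeffs

def run_demo():
    # A "new terrain" is simulated as an unseen function in the span of the basis.
    true_c = np.array([2.0, -0.5, 3.0])
    x_online = np.linspace(-1.0, 1.0, 20)   # limited online data
    y_online = basis(x_online) @ true_c
    return true_c, fit_coefficients(x_online, y_online)

if __name__ == "__main__":
    true_c, coeffs = run_demo()
    print("recovered coefficients:", coeffs)
    print("prediction at x=0.3:", predict(np.array([0.3]), coeffs))
```

Because the basis is held fixed, adapting to new data only requires solving a k-by-k linear system, with no gradient steps.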

Function Encoders: A Principled Approach to Transfer Learning in Hilbert Spaces

Jan 30, 2025

Abstract: A central challenge in transfer learning is designing algorithms that can quickly adapt and generalize to new tasks without retraining. Yet the conditions under which algorithms can effectively transfer to new tasks are poorly characterized. We introduce a geometric characterization of transfer in Hilbert spaces and define three types of inductive transfer: interpolation within the convex hull, extrapolation to the linear span, and extrapolation outside the span. We propose a method grounded in the theory of function encoders to achieve all three types of transfer. Specifically, we introduce a novel training scheme for function encoders using least-squares optimization, prove a universal approximation theorem for function encoders, and provide a comprehensive comparison with existing approaches such as transformers and meta-learning on four diverse benchmarks. Our experiments demonstrate that the function encoder outperforms state-of-the-art methods on four benchmark tasks and on all three types of transfer.
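
The three transfer types named in this abstract have a simple geometric reading that can be checked numerically. The sketch below is an illustration under stated assumptions, not the paper's procedure: functions are represented by their samples on a shared grid, the source functions are assumed linearly independent (so the least-squares weights are unique), and the function name `transfer_type` and the tolerance are hypothetical.

```python
import numpy as np

def transfer_type(sources, target, tol=1e-8):
    """Classify a target function relative to a set of source functions.

    sources: array of shape (m, n), each row a source function sampled on a grid
    target:  array of shape (n,), the target function on the same grid
    Assumes the rows of `sources` are linearly independent.
    """
    # Best linear combination of the sources in the least-squares sense.
    weights, *_ = np.linalg.lstsq(sources.T, target, rcond=None)
    residual = np.linalg.norm(sources.T @ weights - target)
    if residual > tol * max(1.0, np.linalg.norm(target)):
        return "outside span"
    # Inside the span: convex hull iff weights are nonnegative and sum to one.
    if np.all(weights >= -tol) and abs(weights.sum() - 1.0) <= tol:
        return "convex hull"
    return "linear span"

if __name__ == "__main__":
    x = np.linspace(0.0, 1.0, 50)
    sources = np.stack([np.sin(2 * np.pi * x), x])
    print(transfer_type(sources, 0.3 * sources[0] + 0.7 * sources[1]))
    print(transfer_type(sources, 2.0 * sources[0] - 1.0 * sources[1]))
    print(transfer_type(sources, x**2))
```

With linearly dependent sources, convex hull membership would instead require solving a small linear program, since the least-squares weights are no longer unique.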

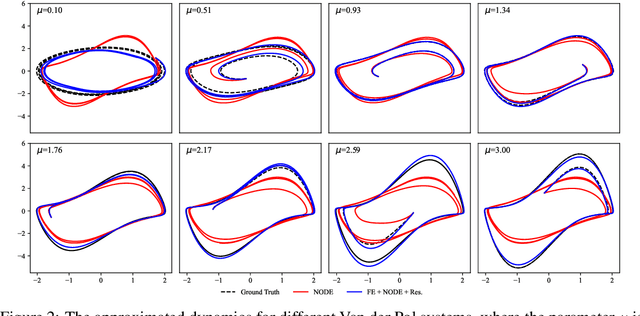

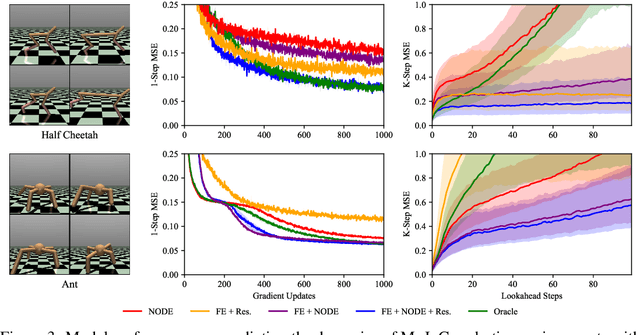

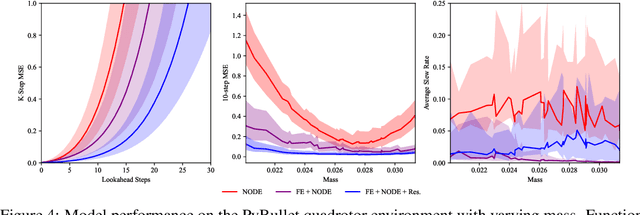

Zero-Shot Transfer of Neural ODEs

May 14, 2024

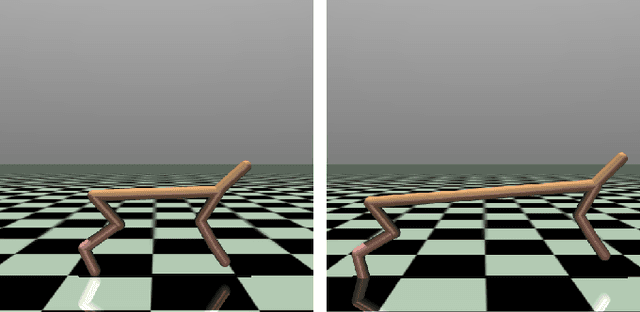

Abstract: Autonomous systems often encounter environments and scenarios beyond the scope of their training data, which underscores a critical challenge: the need to generalize and adapt to unseen scenarios in real time. This challenge necessitates new mathematical and algorithmic tools that enable adaptation and zero-shot transfer. To this end, we leverage the theory of function encoders, which enables zero-shot transfer by combining the flexibility of neural networks with the mathematical principles of Hilbert spaces. Using this theory, we first present a method for learning a space of dynamics spanned by a set of neural ODE basis functions. After training, the proposed approach can rapidly identify dynamics in the learned space using an efficient inner product calculation. Critically, this calculation requires no gradient calculations or retraining during the online phase. This method enables zero-shot transfer for autonomous systems at runtime and opens the door for a new class of adaptable control algorithms. We demonstrate state-of-the-art system modeling accuracy for two MuJoCo robot environments and show that the learned models can be used for more efficient MPC control of a quadrotor.
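
The inner product calculation mentioned above can be sketched for the simplest case, in which the basis functions are orthonormal and the coefficient of each one is the inner product of the target function with that basis function. The cosine basis below is a hypothetical stand-in for learned neural ODE basis functions, and the inner product over [0, 1] is approximated by an average over midpoint samples; both are assumptions made for illustration.

```python
import numpy as np

def basis(x):
    """Hypothetical orthonormal basis on [0, 1]; returns an array of shape (n, 3)."""
    return np.stack([
        np.ones_like(x),
        np.sqrt(2.0) * np.cos(np.pi * x),
        np.sqrt(2.0) * np.cos(2.0 * np.pi * x),
    ], axis=1)

def inner_product_coefficients(x, fx):
    """Approximate c_j = <f, g_j> by the sample mean of f(x_i) * g_j(x_i)."""
    return basis(x).T @ fx / len(x)

def run_demo(n=64):
    x = (np.arange(n) + 0.5) / n              # midpoint samples on [0, 1]
    true_c = np.array([1.5, -0.5, 0.2])
    fx = basis(x) @ true_c                    # observations of the unseen function
    return true_c, inner_product_coefficients(x, fx)

if __name__ == "__main__":
    true_c, estimate = run_demo()
    print("true coefficients:     ", true_c)
    print("estimated coefficients:", estimate)
```

The calculation is a single matrix-vector product over the observed data, which is why no gradient computation is needed at this stage. When the basis is not orthonormal, a least-squares solve would be needed in place of the plain inner product.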

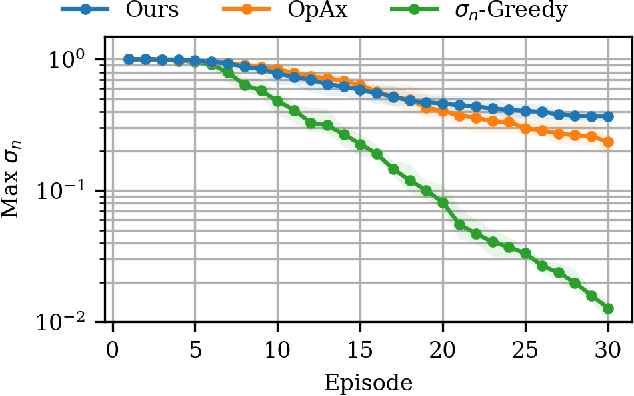

Active Learning of Dynamics Using Prior Domain Knowledge in the Sampling Process

Mar 25, 2024

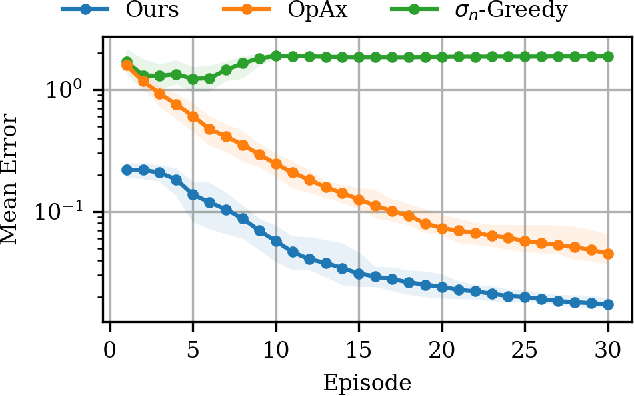

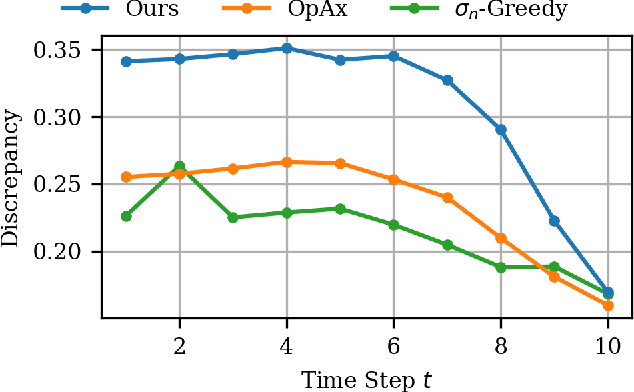

Abstract: We present an active learning algorithm for learning dynamics that leverages side information by explicitly incorporating prior domain knowledge into the sampling process. Our proposed algorithm guides the exploration toward regions that demonstrate high empirical discrepancy between the observed data and an imperfect prior model of the dynamics derived from side information. Through numerical experiments, we demonstrate that this strategy explores regions of high discrepancy and accelerates learning while simultaneously reducing model uncertainty. We rigorously prove that our active learning algorithm yields a consistent estimate of the underlying dynamics by providing an explicit rate of convergence for the maximum predictive variance. We demonstrate the efficacy of our approach on an under-actuated pendulum system and on the half-cheetah MuJoCo environment.

Refining Human-Centered Autonomy Using Side Information

May 09, 2023

Abstract: Data-driven algorithms for human-centered autonomy use observed data to compute models of human behavior in order to ensure safety and correctness and to avoid potential errors that arise at runtime. However, such algorithms often neglect useful a priori knowledge, known as side information, that can improve the quality of data-driven models. We identify several key challenges in human-centered autonomy, as well as possible approaches to incorporating side information in data-driven models of human behavior.

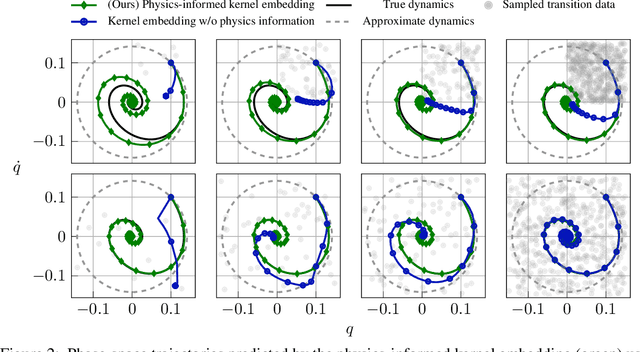

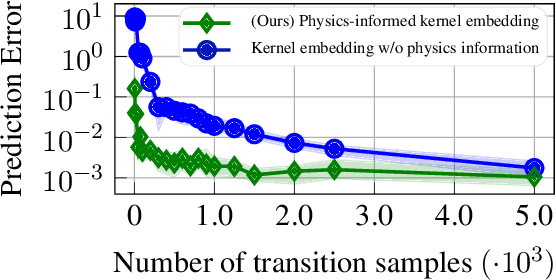

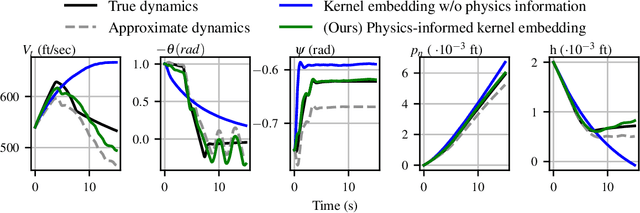

Physics-Informed Kernel Embeddings: Integrating Prior System Knowledge with Data-Driven Control

Jan 09, 2023

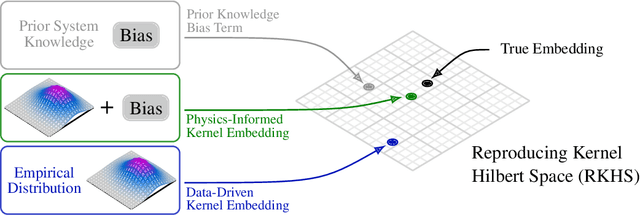

Abstract: Data-driven control algorithms use observations of system dynamics to construct an implicit model for the purpose of control. However, in practice, data-driven techniques often require excessive sample sizes, which may be infeasible in real-world scenarios where only limited observations of the system are available. Furthermore, purely data-driven methods often neglect useful a priori knowledge, such as approximate models of the system dynamics. We present a method to incorporate such prior knowledge into data-driven control algorithms using kernel embeddings, a nonparametric machine learning technique grounded in the theory of reproducing kernel Hilbert spaces. Our proposed approach incorporates prior knowledge of the system dynamics as a bias term in the kernel learning problem. We formulate the biased learning problem as a least-squares problem with a regularization term informed by the dynamics, which has an efficiently computable, closed-form solution. Through numerical experiments, we empirically demonstrate the improved sample efficiency and out-of-sample generalization of our approach over a purely data-driven baseline. We demonstrate an application of our method to control through a target tracking problem with nonholonomic dynamics, and on spring-mass-damper and F-16 aircraft state prediction tasks.
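
One standard way to bias a kernel least-squares problem toward a prior model is to penalize the distance from the prior rather than from zero, which reduces to ordinary kernel ridge regression on the residuals between the data and the prior. The sketch below shows that construction; it is an assumption about the general shape of such a method, not the paper's exact formulation, and the Gaussian kernel, its bandwidth, the regularization value, and the function names are all hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, sigma=0.5):
    """Gaussian kernel matrix between 1-D input arrays a (n,) and b (m,)."""
    return np.exp(-((a[:, None] - b[None, :]) ** 2) / (2.0 * sigma**2))

def fit_biased_krr(x, y, prior, lam=1e-3):
    """Kernel ridge regression regularized toward `prior` instead of toward zero.

    Closed form: f(x*) = prior(x*) + k(x*, X) (K + n*lam*I)^{-1} (y - prior(X)).
    """
    n = len(x)
    K = rbf_kernel(x, x)
    alpha = np.linalg.solve(K + n * lam * np.eye(n), y - prior(x))

    def predict(x_new):
        return prior(x_new) + rbf_kernel(x_new, x) @ alpha

    return predict

if __name__ == "__main__":
    prior = lambda t: 0.5 * t                   # approximate model of the system
    x_train = np.linspace(0.0, 1.0, 8)
    y_train = np.sin(3.0 * x_train) + 0.5 * x_train
    predict = fit_biased_krr(x_train, y_train, prior)
    print("near the data:    ", predict(np.array([0.5])))
    print("far from the data:", predict(np.array([100.0])))
```

Far from the training inputs the kernel terms vanish, so the prediction falls back to the prior model instead of to zero, which is one intuition for why prior knowledge can help out-of-sample generalization.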

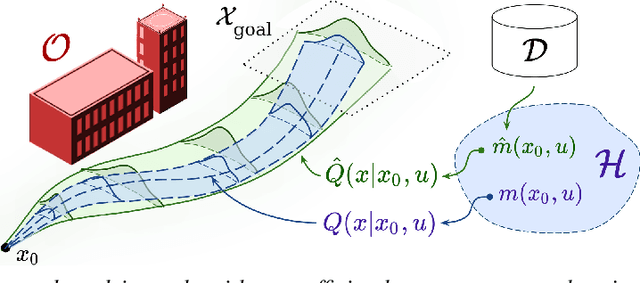

SOCKS: A Stochastic Optimal Control and Reachability Toolbox Using Kernel Methods

Mar 12, 2022

Abstract: We present SOCKS, a data-driven stochastic optimal control toolbox based on kernel methods. SOCKS is a collection of data-driven algorithms that compute approximate solutions to stochastic optimal control problems with arbitrary cost and constraint functions, including stochastic reachability, which seeks to determine the likelihood that a system will reach a desired target set while respecting a set of pre-defined safety constraints. Our approach relies upon a class of machine learning algorithms based on kernel methods, a nonparametric technique which can be used to represent probability distributions in a high-dimensional space of functions known as a reproducing kernel Hilbert space. As a nonparametric technique, kernel methods are inherently data-driven, meaning that they do not place prior assumptions on the system dynamics or the structure of the uncertainty. This makes the toolbox amenable to a wide variety of systems, including those with nonlinear dynamics, black-box elements, and poorly characterized stochastic disturbances. We present the main features of SOCKS and demonstrate its capabilities on several benchmarks.

Data-Driven Chance Constrained Control using Kernel Distribution Embeddings

Feb 08, 2022

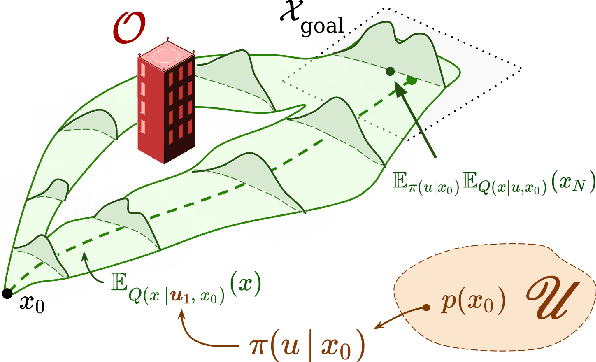

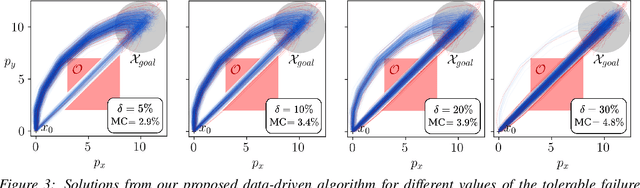

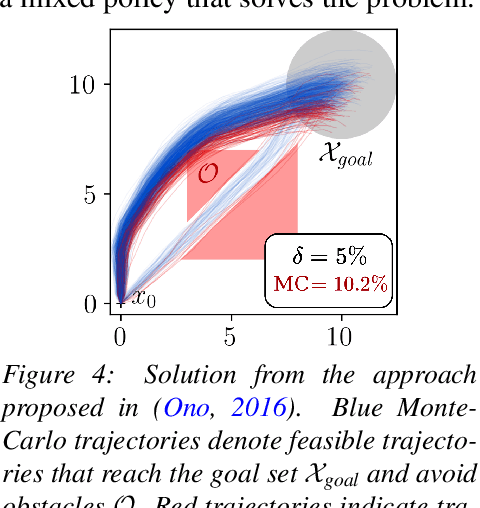

Abstract: We present a data-driven algorithm for efficiently computing stochastic control policies for general joint chance constrained optimal control problems. Our approach leverages the theory of kernel distribution embeddings, which allows representing expectation operators as inner products in a reproducing kernel Hilbert space. This framework enables approximately reformulating the original problem using a dataset of observed trajectories from the system without imposing prior assumptions on the parameterization of the system dynamics or the structure of the uncertainty. By optimizing over a finite subset of stochastic open-loop control trajectories, we relax the original problem to a linear program over the control parameters that can be efficiently solved using standard convex optimization techniques. We demonstrate our proposed approach in simulation on a system with nonlinear non-Markovian dynamics navigating in a cluttered environment.
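
The idea of representing an expectation as an inner product can be illustrated with the standard empirical conditional distribution embedding, in which E[g(Y) | X = x] is approximated by a weighted sum of g evaluated at the observed outputs. The sketch below uses that textbook estimator on a toy scalar system; the dynamics, kernel bandwidth, regularization value, safe set, and function names are all hypothetical, and the linear program over control trajectories described in the abstract is not reproduced here.

```python
import numpy as np

def rbf_kernel(a, b, sigma=0.3):
    """Gaussian kernel matrix between 1-D input arrays a (n,) and b (m,)."""
    return np.exp(-((a[:, None] - b[None, :]) ** 2) / (2.0 * sigma**2))

def conditional_expectation(x_data, y_data, g, x_query, lam=1e-4):
    """Estimate E[g(Y) | X = x_query] as g(Y)^T (K + n*lam*I)^{-1} k(X, x_query)."""
    n = len(x_data)
    K = rbf_kernel(x_data, x_data)
    k_query = rbf_kernel(x_data, np.atleast_1d(x_query))        # shape (n, q)
    weights = np.linalg.solve(K + n * lam * np.eye(n), k_query)  # shape (n, q)
    return g(y_data) @ weights                                   # shape (q,)

def run_demo(seed=0, n=300):
    # Toy stochastic system (assumed for illustration): y = 0.9 x + noise.
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, n)
    y = 0.9 * x + 0.05 * rng.standard_normal(n)
    # Expected next state from x = 0.5; the exact value is 0.45.
    mean_next = conditional_expectation(x, y, lambda v: v, 0.5)[0]
    # Estimated probability of landing in the safe set |y| <= 0.8,
    # written as the expectation of an indicator function.
    safe = lambda v: (np.abs(v) <= 0.8).astype(float)
    p_safe = conditional_expectation(x, y, safe, 0.5)[0]
    return mean_next, p_safe

if __name__ == "__main__":
    mean_next, p_safe = run_demo()
    print("estimated E[Y | x=0.5]:", mean_next)
    print("estimated P(safe | x=0.5):", p_safe)
```

Because the estimate is linear in the values of g at the data points, a probabilistic constraint expressed as an expectation becomes a linear expression in precomputed weights, which is the kind of structure that makes a linear programming relaxation possible. Note that this estimator is not guaranteed to return values inside [0, 1] for indicator functions.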
