Extreme Multi Label Classification

Extreme multi-label classification is the task of assigning multiple labels to a single instance from an extremely large label space.

Papers and Code

Distributionally Robust Classification for Multi-source Unsupervised Domain Adaptation

Jan 29, 2026

Unsupervised domain adaptation (UDA) is a statistical learning problem in which the distribution of training (source) data differs from that of test (target) data. In this setting, one has access to labeled data only from the source domain and unlabeled data from the target domain. The central objective is to leverage the source data and the unlabeled target data to build models that generalize to the target domain. Despite its potential, existing UDA approaches often struggle in practice, particularly in scenarios where the target domain offers only limited unlabeled data or spurious correlations dominate the source domain. To address these challenges, we propose a novel distributionally robust learning framework that models uncertainty in both the covariate distribution and the conditional label distribution. Our approach is motivated by the multi-source domain adaptation setting but is also directly applicable to the single-source scenario, making it versatile in practice. We develop an efficient learning algorithm that can be seamlessly integrated with existing UDA methods. Extensive experiments under various distribution shift scenarios show that our method consistently outperforms strong baselines, especially when target data are extremely scarce.

Contrastive Bi-Encoder Models for Multi-Label Skill Extraction: Enhancing ESCO Ontology Matching with BERT and Attention Mechanisms

Jan 14, 2026

Fine-grained labor market analysis increasingly relies on mapping unstructured job advertisements to standardized skill taxonomies such as ESCO. This mapping is naturally formulated as an Extreme Multi-Label Classification (XMLC) problem, but supervised solutions are constrained by the scarcity and cost of large-scale, taxonomy-aligned annotations, especially in non-English settings where job-ad language diverges substantially from formal skill definitions. We propose a zero-shot skill extraction framework that eliminates the need for manually labeled job-ad training data. The framework uses a Large Language Model (LLM) to synthesize training instances from ESCO definitions, and introduces hierarchically constrained multi-skill generation based on ESCO Level-2 categories to improve semantic coherence in multi-label contexts. On top of the synthetic corpus, we train a contrastive bi-encoder that aligns job-ad sentences with ESCO skill descriptions in a shared embedding space; the encoder augments a BERT backbone with BiLSTM and attention pooling to better model long, information-dense requirement statements. An upstream RoBERTa-based binary filter removes non-skill sentences to improve end-to-end precision. Experiments show that (i) hierarchy-conditioned generation improves both fluency and discriminability relative to unconstrained pairing, and (ii) the resulting multi-label model transfers effectively to real-world Chinese job advertisements, achieving strong zero-shot retrieval performance (F1@5 = 0.72) and outperforming TF-IDF and standard BERT baselines. Overall, the proposed pipeline provides a scalable, data-efficient pathway for automated skill coding in labor economics and workforce analytics.

VisNet: Efficient Person Re-Identification via Alpha-Divergence Loss, Feature Fusion and Dynamic Multi-Task Learning

Jan 01, 2026

Person re-identification (ReID) is an extremely important area in both surveillance and mobile applications, requiring strong accuracy with minimal computational cost. State-of-the-art methods give good accuracy but with high computational budgets. To remedy this, this paper proposes VisNet, a computationally efficient and effective re-identification model suitable for real-world scenarios. It combines several conceptual contributions: feature fusion at multiple scales with automatic attention on each, semantic clustering with anatomical body partitioning, a dynamic weight averaging technique to balance classification semantic regularization, and the use of the FIDI loss function for improved metric learning. The multi-scale fusion combines ResNet50's stages 1 through 4 without parallel paths, and semantic clustering introduces spatial constraints through rule-based pseudo-labeling. VisNet achieves 87.05% Rank-1 and 77.65% mAP on the Market-1501 dataset with 32.41M parameters and 4.601 GFLOPs, making it a practical approach for real-time deployment in surveillance and mobile applications where computational resources are limited.

Fine-Tuning Causal LLMs for Text Classification: Embedding-Based vs. Instruction-Based Approaches

Dec 14, 2025

We explore efficient strategies to fine-tune decoder-only Large Language Models (LLMs) for downstream text classification under resource constraints. Two approaches are investigated: (1) attaching a classification head to a pre-trained causal LLM and fine-tuning on the task (using the LLM's final token embedding as a sequence representation), and (2) instruction-tuning the LLM in a prompt->response format for classification. To enable single-GPU fine-tuning of models up to 8B parameters, we combine 4-bit model quantization with Low-Rank Adaptation (LoRA) for parameter-efficient training. Experiments on two datasets, a proprietary single-label dataset and the public WIPO-Alpha patent dataset (extreme multi-label classification), show that the embedding-based method significantly outperforms the instruction-tuned method in F1-score, and is very competitive with, and even surpasses, fine-tuned domain-specific models (e.g. BERT) on the same tasks. These results demonstrate that directly leveraging the internal representations of causal LLMs, along with efficient fine-tuning techniques, yields impressive classification performance under limited computational resources. We discuss the advantages of each approach while outlining practical guidelines and future directions for optimizing LLM fine-tuning in classification scenarios.

BarcodeMamba+: Advancing State-Space Models for Fungal Biodiversity Research

Dec 17, 2025

Accurate taxonomic classification from DNA barcodes is a cornerstone of global biodiversity monitoring, yet fungi present extreme challenges due to sparse labelling and long-tailed taxa distributions. Conventional supervised learning methods often falter in this domain, struggling to generalize to unseen species and to capture the hierarchical nature of the data. To address these limitations, we introduce BarcodeMamba+, a foundation model for fungal barcode classification built on a powerful and efficient state-space model architecture. We employ a pretrain and fine-tune paradigm that utilizes partially labelled data, and we demonstrate that this is substantially more effective than traditional fully-supervised methods in this data-sparse environment. During fine-tuning, we systematically integrate and evaluate a suite of enhancements (including hierarchical label smoothing, a weighted loss function, and a multi-head output layer from MycoAI) to specifically tackle the challenges of fungal taxonomy. Our experiments show that each of these components yields significant performance gains. On a challenging fungal classification benchmark with distinct taxonomic distribution shifts from the broad training set, our final model outperforms a range of existing methods across all taxonomic levels. Our work provides a powerful new tool for genomics-based biodiversity research and establishes an effective and scalable training paradigm for this challenging domain. Our code is publicly available at https://github.com/bioscan-ml/BarcodeMamba.

Large Language Models Meet Extreme Multi-label Classification: Scaling and Multi-modal Framework

Nov 17, 2025

Foundation models have revolutionized artificial intelligence across numerous domains, yet their transformative potential remains largely untapped in Extreme Multi-label Classification (XMC). Queries in XMC are associated with relevant labels from extremely large label spaces, where it is critical to strike a balance between efficiency and performance. Therefore, many recent approaches efficiently pose XMC as a maximum inner product search between embeddings learned from small encoder-only transformer architectures. In this paper, we address two important aspects in XMC: how to effectively harness larger decoder-only models, and how to exploit visual information while maintaining computational efficiency. We demonstrate that both play a critical role in XMC separately and can be combined for improved performance. We show that a decoder with a few billion parameters can deliver substantial improvements while keeping computational overhead manageable. Furthermore, our Vision-enhanced eXtreme Multi-label Learning framework (ViXML) efficiently integrates foundation vision models by pooling a single embedding per image. This limits computational growth while unlocking multi-modal capabilities. Remarkably, ViXML with small encoders outperforms text-only decoders in most cases, showing that an image is worth billions of parameters. Finally, we present an extension of existing text-only datasets to exploit visual metadata and make them available for future benchmarking. Comprehensive experiments across four public text-only datasets and their corresponding image-enhanced versions validate our proposals' effectiveness, surpassing previous state-of-the-art by up to +8.21% in P@1 on the largest dataset. ViXML's code is available at https://github.com/DiegoOrtego/vixml.
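To make the retrieval formulation mentioned in this abstract concrete, here is a minimal sketch of label prediction as a maximum inner product search, followed by the P@k metric. It is not the ViXML implementation: the function names, the toy two-dimensional embeddings, and the exhaustive scan over labels are all assumptions for illustration (systems at extreme scale typically use an approximate nearest-neighbor index instead of a full scan).

```python
from typing import List, Sequence, Set


def inner_product(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def top_k_labels(query: Sequence[float],
                 label_embeddings: List[Sequence[float]],
                 k: int) -> List[int]:
    """Return indices of the k labels with the largest inner product.

    Exhaustive scan over all labels; ties are broken by lower index.
    """
    scored = [(inner_product(query, emb), idx)
              for idx, emb in enumerate(label_embeddings)]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [idx for _, idx in scored[:k]]


def precision_at_k(predicted: List[int], relevant: Set[int], k: int) -> float:
    """Fraction of the top-k predicted labels that are relevant."""
    hits = sum(1 for idx in predicted[:k] if idx in relevant)
    return hits / k


# Toy data (hypothetical): four labels embedded in 2-D, one query.
label_embeddings = [
    [1.0, 0.0],
    [0.0, 1.0],
    [0.7, 0.7],
    [-1.0, 0.0],
]
query = [0.9, 0.1]
relevant = {0, 1}

predicted = top_k_labels(query, label_embeddings, k=2)
print(predicted)                               # [0, 2]
print(precision_at_k(predicted, relevant, 1))  # 1.0
print(precision_at_k(predicted, relevant, 2))  # 0.5
```

In this toy case the top-ranked label is relevant, so P@1 is 1.0, while only one of the top two is relevant, so P@2 is 0.5.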

Optimizing Classification of Infrequent Labels by Reducing Variability in Label Distribution

Nov 07, 2025

This paper presents a novel solution, LEVER, designed to address the challenges posed by underperforming infrequent categories in Extreme Classification (XC) tasks. Infrequent categories, often characterized by sparse samples, suffer from high label inconsistency, which undermines classification performance. LEVER mitigates this problem by adopting a robust Siamese-style architecture, leveraging knowledge transfer to reduce label inconsistency and enhance the performance of One-vs-All classifiers. Comprehensive testing across multiple XC datasets reveals substantial improvements in the handling of infrequent categories, setting a new benchmark for the field. Additionally, the paper introduces two newly created multi-intent datasets, offering essential resources for future XC research.

Fine-grained auxiliary learning for real-world product recommendation

Oct 06, 2025

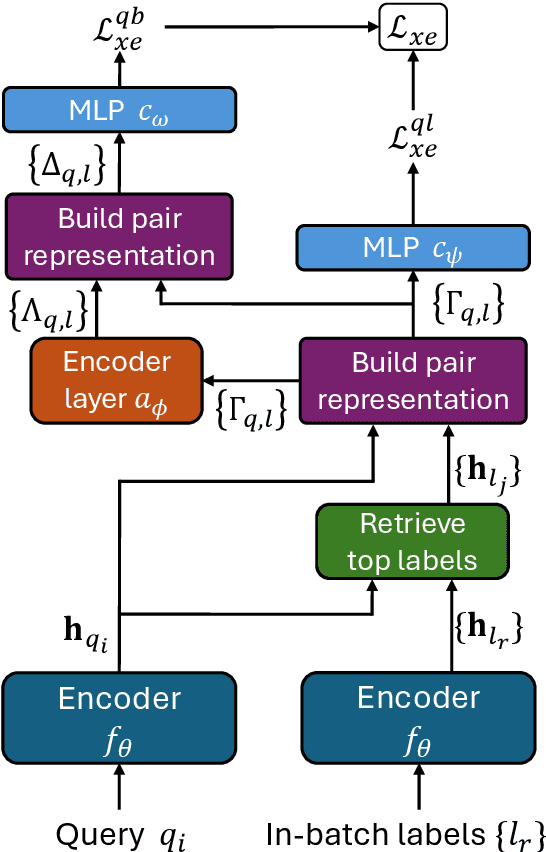

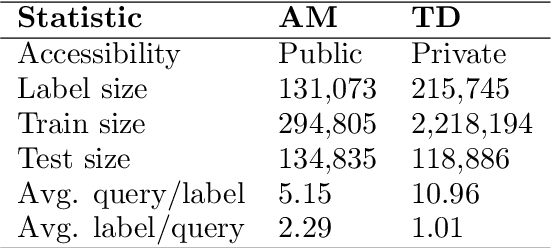

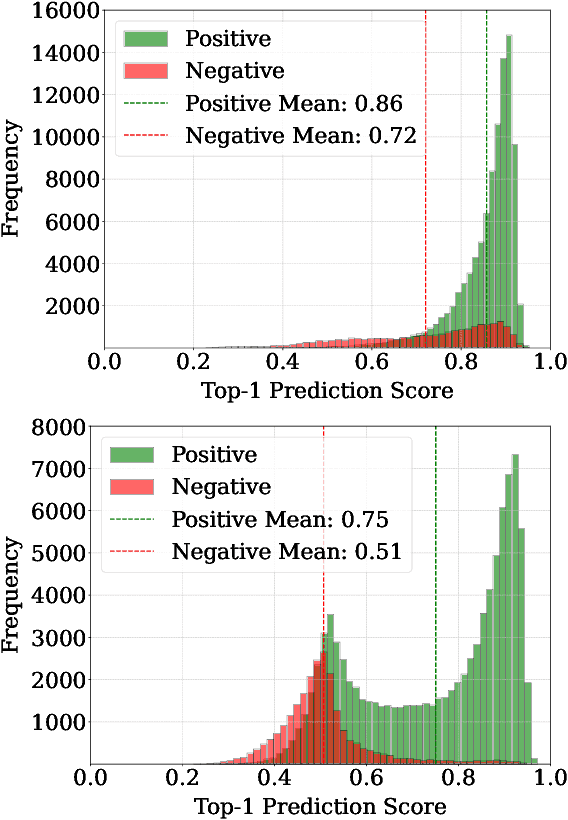

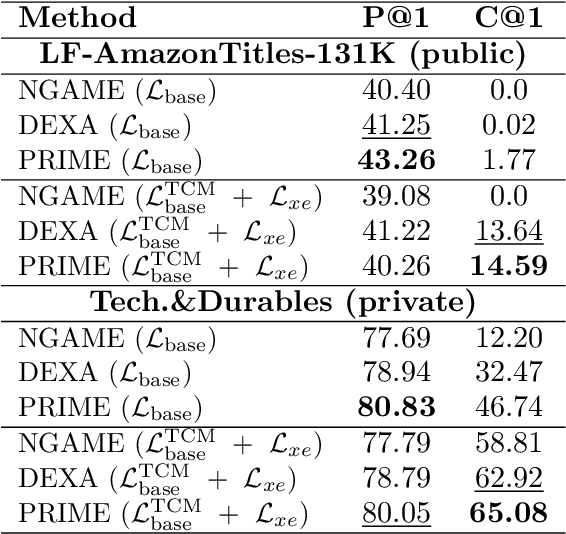

Product recommendation is the task of recovering the closest items to a given query within a large product corpus. Generally, one can determine if top-ranked products are related to the query by applying a similarity threshold; exceeding it deems the product relevant, otherwise manual revision is required. Despite being a well-known problem, the integration of these models in real-world systems is often overlooked. In particular, production systems have strong coverage requirements, i.e., a high proportion of recommendations must be automated. In this paper we propose ALC, an Auxiliary Learning strategy that boosts Coverage through learning fine-grained embeddings. Concretely, we introduce two training objectives that leverage the hardest negatives in the batch to build discriminative training signals between positives and negatives. We validate ALC using three extreme multi-label classification approaches on two product recommendation datasets, LF-AmazonTitles-131K and Tech and Durables (proprietary), demonstrating state-of-the-art coverage rates when combined with a recent threshold-consistent margin loss.
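The thresholding and coverage notions described in this abstract can be illustrated with a small sketch. It assumes coverage is measured as the fraction of queries whose top-ranked similarity strictly exceeds the threshold, so that the recommendation is accepted without manual revision; the function name, the scores, and the threshold value are hypothetical and not taken from the paper.

```python
from typing import Sequence


def coverage(top1_similarities: Sequence[float], threshold: float) -> float:
    """Fraction of queries handled automatically.

    A query counts as automated when the similarity of its top-ranked
    product strictly exceeds the threshold; all other queries would be
    sent to manual revision.
    """
    if not top1_similarities:
        raise ValueError("need at least one query")
    automated = sum(1 for s in top1_similarities if s > threshold)
    return automated / len(top1_similarities)


# Hypothetical top-1 similarity scores for five queries.
scores = [0.91, 0.42, 0.78, 0.66, 0.95]
print(coverage(scores, threshold=0.7))  # 0.6
```

Under this reading, raising coverage means pushing more relevant top-1 scores above the threshold while keeping irrelevant ones below it, which is why better separation between positives and negatives matters.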

Beyond one-hot encoding? Journey into compact encoding for large multi-class segmentation

Oct 01, 2025

This work presents novel methods to reduce computational and memory requirements for medical image segmentation with a large number of classes. We curiously observe challenges in maintaining state-of-the-art segmentation performance with all of the explored options. Standard learning-based methods typically employ one-hot encoding of class labels. The computational complexity and memory requirements thus increase linearly with the number of classes. We propose a family of binary encoding approaches instead of one-hot encoding to reduce the computational complexity and memory requirements to logarithmic in the number of classes. In addition to vanilla binary encoding, we investigate the effects of error-correcting output codes (ECOCs), class weighting, hard/soft decoding, class-to-codeword assignment, and label embedding trees. We apply the methods to the use case of whole brain parcellation with 108 classes based on 3D MRI images. While binary encodings have proven efficient in so-called extreme classification problems in computer vision, we faced challenges in reaching state-of-the-art segmentation quality with binary encodings. Compared to one-hot encoding (Dice Similarity Coefficient (DSC) = 82.4 (2.8)), we report reduced segmentation performance with the binary segmentation approaches, achieving DSCs in the range from 39.3 to 73.8. Informative negative results all too often go unpublished. We hope that this work inspires future research on compact encoding strategies for large multi-class segmentation tasks.
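As a rough illustration of why binary encoding scales logarithmically, the sketch below encodes a class index as a fixed-length bit vector and decodes per-bit probabilities with a hard 0.5 threshold. This is only the vanilla variant under simple assumptions; the function names and the example probabilities are hypothetical, and the ECOC, soft decoding, and codeword assignment schemes studied in the paper are not shown.

```python
from typing import List, Sequence


def code_length(num_classes: int) -> int:
    """Number of bits needed to give each class a distinct binary code."""
    return max(1, (num_classes - 1).bit_length())


def encode(class_index: int, n_bits: int) -> List[int]:
    """Binary code of a class index, most significant bit first."""
    return [(class_index >> i) & 1 for i in reversed(range(n_bits))]


def hard_decode(bit_probabilities: Sequence[float]) -> int:
    """Threshold each predicted bit at 0.5 and reassemble the class index.

    Note: the result can be an unused codeword (an index >= num_classes)
    when the number of classes is not a power of two.
    """
    index = 0
    for p in bit_probabilities:
        index = (index << 1) | (1 if p >= 0.5 else 0)
    return index


n_bits = code_length(108)  # 108 classes, as in the brain parcellation task
print(n_bits)              # 7 output channels instead of 108
print(encode(77, n_bits))  # [1, 0, 0, 1, 1, 0, 1]
print(hard_decode([0.9, 0.2, 0.1, 0.8, 0.7, 0.4, 0.6]))  # 77
```

With 108 classes, the output layer shrinks from 108 one-hot channels to 7 bit channels, at the cost that a single wrong bit maps the prediction to a different class.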

Efficient Text Encoders for Labor Market Analysis

May 30, 2025

Labor market analysis relies on extracting insights from job advertisements, which provide valuable yet unstructured information on job titles and corresponding skill requirements. While state-of-the-art methods for skill extraction achieve strong performance, they depend on large language models (LLMs), which are computationally expensive and slow. In this paper, we propose ConTeXT-match, a novel contrastive learning approach with token-level attention that is well-suited for the extreme multi-label classification task of skill classification. ConTeXT-match significantly improves skill extraction efficiency and performance, achieving state-of-the-art results with a lightweight bi-encoder model. To support robust evaluation, we introduce Skill-XL, a new benchmark with exhaustive, sentence-level skill annotations that explicitly address the redundancy in the large label space. Finally, we present JobBERT V2, an improved job title normalization model that leverages extracted skills to produce high-quality job title representations. Experiments demonstrate that our models are efficient, accurate, and scalable, making them ideal for large-scale, real-time labor market analysis.
