Ziye Wang

Advances and Innovations in the Multi-Agent Robotic System (MARS) Challenge

Jan 26, 2026

Abstract: Recent advancements in multimodal large language models and vision-language-action models have significantly driven progress in Embodied AI. As the field transitions toward more complex task scenarios, multi-agent system frameworks are becoming essential for achieving scalable, efficient, and collaborative solutions. This shift is fueled by three primary factors: increasing agent capabilities, enhancing system efficiency through task delegation, and enabling advanced human-agent interactions. To address the challenges posed by multi-agent collaboration, we propose the Multi-Agent Robotic System (MARS) Challenge, held at the NeurIPS 2025 Workshop on SpaVLE. The competition focuses on two critical areas, planning and control: participants explore multi-agent embodied planning, using vision-language models (VLMs) to coordinate tasks, and policy execution, performing robotic manipulation in dynamic environments. By evaluating solutions submitted by participants, the challenge provides valuable insights into the design and coordination of embodied multi-agent systems, contributing to the future development of advanced collaborative AI systems.

Semantic Discrepancy-aware Detector for Image Forgery Identification

Aug 17, 2025

Abstract: With the rapid advancement of image generation techniques, robust forgery detection has become increasingly imperative to ensure the trustworthiness of digital media. Recent research indicates that the learned semantic concepts of pre-trained models are critical for identifying fake images. However, the misalignment between the forgery and semantic concept spaces hinders the model's forgery detection performance. To address this problem, we propose a novel Semantic Discrepancy-aware Detector (SDD) that leverages reconstruction learning to align the two spaces at a fine-grained visual level. By exploiting the conceptual knowledge embedded in the pre-trained vision-language model, we design a semantic token sampling module to mitigate the space shifts caused by features irrelevant to both forgery traces and semantic concepts. A concept-level forgery discrepancy learning module, built upon a visual reconstruction paradigm, is proposed to strengthen the interaction between visual semantic concepts and forgery traces, effectively capturing discrepancies under the concepts' guidance. Finally, the low-level forgery feature enhancer integrates the learned concept-level forgery discrepancies to minimize redundant forgery information. Experiments conducted on two standard image forgery datasets demonstrate the efficacy of the proposed SDD, which achieves superior results compared to existing methods. The code is available at https://github.com/wzy1111111/SSD.

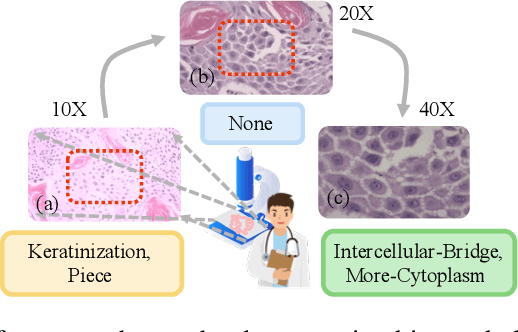

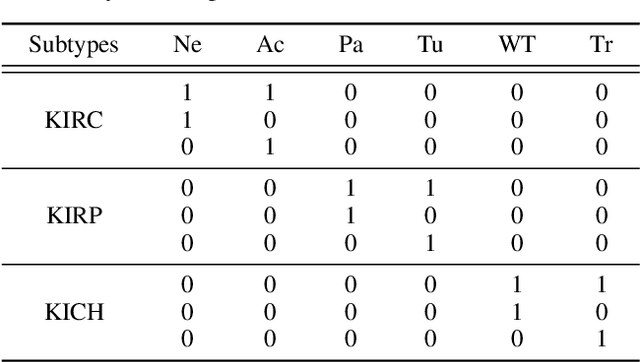

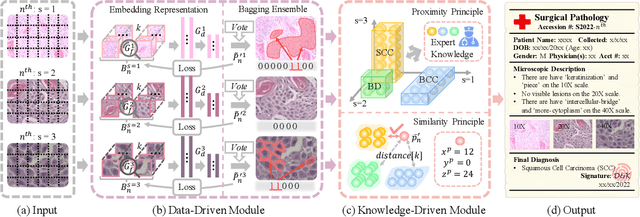

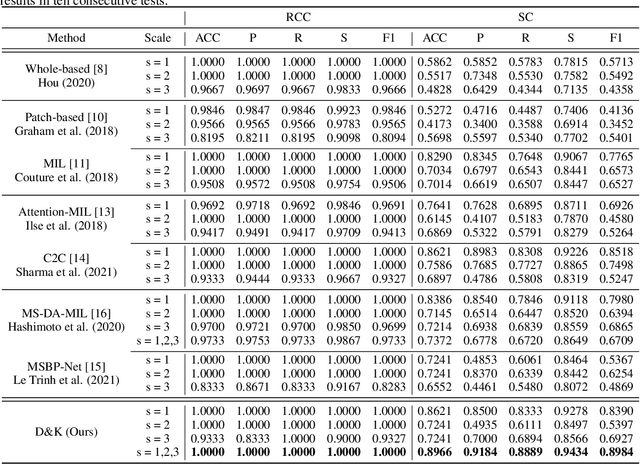

Data and Knowledge Co-driving for Cancer Subtype Classification on Multi-Scale Histopathological Slides

Apr 18, 2023

Abstract: Artificial intelligence-enabled histopathological data analysis has become a valuable assistant to the pathologist. However, existing models lack the representation and inference abilities of pathologists, especially in cancer subtype diagnosis, which makes their results unconvincing in clinical practice. For instance, pathologists typically observe the lesions on a slide from global to local, and then give a diagnosis based on their knowledge and experience. In this paper, we propose a Data and Knowledge Co-driving (D&K) model that replicates the way a pathologist classifies cancer subtypes on a histopathological slide. Specifically, in the data-driven module, the bagging mechanism in ensemble learning is leveraged to integrate the histological features from various bags extracted by the embedding representation unit. Furthermore, a knowledge-driven module is established based on the Gestalt principle in psychology to build a three-dimensional (3D) expert knowledge space and map histological features into this space for measurement. The diagnosis is then made according to the Euclidean distance between them. Extensive experimental results on both public and in-house datasets demonstrate that the D&K model achieves high performance and credible results compared with state-of-the-art methods for diagnosing histopathological subtypes. Code: https://github.com/Dennis-YB/Data-and-Knowledge-Co-driving-for-Cancer-Subtypes-Classification
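The distance-based decision step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify how features are mapped into the 3D knowledge space, so the example assumes the per-bag features are already 3D points, uses a plain average as a stand-in for the bagging-style integration, and invents the anchor points and the names `bag_average`, `euclidean`, and `nearest_subtype` purely for illustration.

```python
import math

def bag_average(bag_points):
    """Average per-bag 3D points (an assumed stand-in for bagging-style integration)."""
    n = len(bag_points)
    dim = len(bag_points[0])
    return tuple(sum(p[i] for p in bag_points) / n for i in range(dim))

def euclidean(p, q):
    """Euclidean distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def nearest_subtype(point, anchors):
    """Return the subtype whose anchor is closest to the point."""
    return min(anchors, key=lambda name: euclidean(point, anchors[name]))

# Hypothetical anchors in a 3D "expert knowledge space" (values are made up).
anchors = {
    "subtype_A": (0.0, 0.0, 0.0),
    "subtype_B": (1.0, 1.0, 1.0),
}

# Hypothetical per-bag points for one slide, assumed already mapped into the space.
bags = [(0.9, 0.8, 1.0), (0.7, 1.0, 0.9), (0.8, 0.9, 0.8)]

slide_point = bag_average(bags)
prediction = nearest_subtype(slide_point, anchors)
```

Here the slide is assigned to whichever anchor lies nearest, which is the simplest reading of "diagnosis according to the Euclidean distance"; the actual model may weight or learn these components differently.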
