Zhiqiang Kou

LoGoSeg: Integrating Local and Global Features for Open-Vocabulary Semantic Segmentation

Feb 05, 2026

Abstract: Open-vocabulary semantic segmentation (OVSS) extends traditional closed-set segmentation by enabling pixel-wise annotation for both seen and unseen categories using arbitrary textual descriptions. While existing methods leverage vision-language models (VLMs) like CLIP, their reliance on image-level pretraining often results in imprecise spatial alignment, leading to mismatched segmentations in ambiguous or cluttered scenes. Moreover, most existing approaches lack strong object priors and region-level constraints, which can lead to object hallucination or missed detections, further degrading performance. To address these challenges, we propose LoGoSeg, an efficient single-stage framework that integrates three key innovations: (i) an object existence prior that dynamically weights relevant categories through global image-text similarity, effectively reducing hallucinations; (ii) a region-aware alignment module that establishes precise region-level visual-textual correspondences; and (iii) a dual-stream fusion mechanism that optimally combines local structural information with global semantic context. Unlike prior works, LoGoSeg eliminates the need for external mask proposals, additional backbones, or extra datasets, ensuring efficiency. Extensive experiments on six benchmarks (A-847, PC-459, A-150, PC-59, PAS-20, and PAS-20b) demonstrate its competitive performance and strong generalization in open-vocabulary settings.

Positive-Unlabeled Reinforcement Learning Distillation for On-Premise Small Models

Jan 28, 2026

Abstract: Due to constraints on privacy, cost, and latency, on-premise deployment of small models is increasingly common. However, most practical pipelines stop at supervised fine-tuning (SFT) and fail to reach the reinforcement learning (RL) alignment stage. The main reason is that RL alignment typically requires either expensive human preference annotation or heavy reliance on high-quality reward models with large-scale API usage and ongoing engineering maintenance, both of which are ill-suited to on-premise settings. To bridge this gap, we propose a positive-unlabeled (PU) RL distillation method for on-premise small-model deployment. Without human-labeled preferences or a reward model, our method distills the teacher's preference-optimization capability from black-box generations into a locally trainable student. For each prompt, we query the teacher once to obtain an anchor response, locally sample multiple student candidates, and perform anchor-conditioned self-ranking to induce pairwise or listwise preferences, enabling a fully local training loop via direct preference optimization or group relative policy optimization. Theoretical analysis shows that the preference signal induced by our method is order-consistent and concentrates on near-optimal candidates, supporting its stability for preference optimization. Experiments demonstrate that our method achieves consistently strong performance under a low-cost setting.
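The data flow described above (one teacher anchor, several student candidates, preferences induced by ranking the candidates against the anchor) can be illustrated with a minimal sketch. The scoring function here is an assumption made purely for illustration: `token_overlap` is a hypothetical stand-in (Jaccard overlap of word sets), not the ranking criterion used in the paper, and the function names are invented for this example.

```python
from itertools import combinations
from typing import List, Tuple


def token_overlap(a: str, b: str) -> float:
    """Hypothetical stand-in score: Jaccard overlap between word sets."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def rank_by_anchor(anchor: str, candidates: List[str]) -> List[str]:
    """Order student candidates from most to least similar to the anchor."""
    return sorted(candidates, key=lambda c: token_overlap(anchor, c), reverse=True)


def preference_pairs(anchor: str, candidates: List[str]) -> List[Tuple[str, str]]:
    """Turn the ranking into (preferred, rejected) pairs, skipping ties."""
    ranked = rank_by_anchor(anchor, candidates)
    return [
        (winner, loser)
        for winner, loser in combinations(ranked, 2)
        if token_overlap(anchor, winner) > token_overlap(anchor, loser)
    ]


# One teacher query gives the anchor; candidates are sampled locally.
anchor = "paris is the capital of france"
candidates = [
    "the capital of france is paris",
    "france is a country in europe",
    "i do not know",
]
pairs = preference_pairs(anchor, candidates)
```

Pairs of this shape are what a pairwise objective such as direct preference optimization consumes; the full ranked list would serve a listwise or group-relative objective instead.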

Toward Efficient Agents: Memory, Tool learning, and Planning

Jan 20, 2026

Abstract: Recent years have witnessed increasing interest in extending large language models into agentic systems. While the effectiveness of agents has continued to improve, efficiency, which is crucial for real-world deployment, has often been overlooked. This paper therefore investigates efficiency from three core components of agents: memory, tool learning, and planning, considering costs such as latency, token usage, and number of steps. To study the efficiency of the agentic system itself comprehensively, we review a broad range of recent approaches that differ in implementation yet frequently converge on shared high-level principles, including but not limited to bounding context via compression and management, designing reinforcement learning rewards to minimize tool invocation, and employing controlled search mechanisms to enhance efficiency, each of which we discuss in detail. Accordingly, we characterize efficiency in two complementary ways: comparing effectiveness under a fixed cost budget, and comparing cost at a comparable level of effectiveness. This trade-off can also be viewed through the Pareto frontier between effectiveness and cost. From this perspective, we also examine efficiency-oriented benchmarks by summarizing evaluation protocols for these components and consolidating commonly reported efficiency metrics from both benchmark and methodological studies. Moreover, we discuss the key challenges and future directions, with the goal of providing useful insights.
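The two ways of characterizing efficiency, and the Pareto view that unifies them, can be made concrete with a small sketch. The agent names and the cost and effectiveness numbers below are invented for illustration and do not come from the survey; cost could stand for tokens, latency, or steps.

```python
from typing import List, Optional, Tuple

# (name, cost, effectiveness): lower cost and higher effectiveness are better.
Point = Tuple[str, float, float]


def pareto_frontier(points: List[Point]) -> List[str]:
    """Names of points that no other point dominates."""
    frontier = []
    for name, cost, eff in points:
        dominated = any(
            c <= cost and e >= eff and (c < cost or e > eff)
            for _, c, e in points
        )
        if not dominated:
            frontier.append(name)
    return frontier


def best_under_budget(points: List[Point], budget: float) -> Optional[str]:
    """Most effective method whose cost fits the budget."""
    feasible = [p for p in points if p[1] <= budget]
    return max(feasible, key=lambda p: p[2])[0] if feasible else None


def cheapest_at_level(points: List[Point], min_eff: float) -> Optional[str]:
    """Cheapest method reaching at least the given effectiveness."""
    good = [p for p in points if p[2] >= min_eff]
    return min(good, key=lambda p: p[1])[0] if good else None


# Hypothetical agents with made-up token costs and success rates.
agents = [
    ("A", 1000.0, 0.60),
    ("B", 2500.0, 0.72),
    ("C", 3000.0, 0.70),
    ("D", 5000.0, 0.80),
]
```

In this toy data, agent C is dominated by B (B is both cheaper and more effective), so it lies off the frontier; the fixed-budget and fixed-effectiveness comparisons each pick a point on the frontier.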

Object-level Correlation for Few-Shot Segmentation

Sep 09, 2025

Abstract: Few-shot semantic segmentation (FSS) aims to segment objects of novel categories in query images given only a few annotated support samples. Existing methods primarily build an image-level correlation between the support target object and the entire query image. However, this correlation contains hard pixel noise, i.e., irrelevant background objects, which is difficult to trace and suppress and leads to overfitting to the background. To address this limitation, we imitate the biological vision process and identify novel objects from object-level information. Identifying the target among general objects is more effective than identifying it in the entire image, especially in the low-data regime. Inspired by this, we design an Object-level Correlation Network (OCNet) that establishes an object-level correlation between the support target object and the query general objects. OCNet is mainly composed of the General Object Mining Module (GOMM) and the Correlation Construction Module (CCM). Specifically, GOMM constructs the query general object feature by learning saliency and high-level similarity cues, where the general objects include both the irrelevant background objects and the target foreground object. Then, CCM establishes the object-level correlation by allocating the target prototypes to match the general object feature. The resulting object-level correlation can mine the query target feature and suppress the hard pixel noise for the final prediction. Extensive experiments on PASCAL-$5^i$ and COCO-$20^i$ show that our model achieves state-of-the-art performance.

Label Distribution Learning with Biased Annotations by Learning Multi-Label Representation

Feb 03, 2025

Abstract: Multi-label learning (MLL) has gained attention for its ability to represent real-world data. Label Distribution Learning (LDL), an extension of MLL to learning from label distributions, faces challenges in collecting accurate label distributions. To address the issue of biased annotations, existing works rely on a low-rank assumption and recover true distributions from biased observations by exploring label correlations. However, recent evidence shows that the label distribution tends to be full-rank, and naively applying low-rank approximation to biased observations leads to inaccurate recovery and performance degradation. In this paper, we address the problem of LDL with biased annotations from a novel perspective, where we first degenerate the soft label distribution into a hard multi-hot label and then recover the true label information for each instance. This idea stems from the insight that assigning hard multi-hot labels is often easier than assigning a soft label distribution, and that it shows stronger immunity to noise disturbances, leading to smaller label bias. Moreover, assuming that the multi-label space for predicting label distributions is low-rank offers a more reasonable approach to capturing label correlations. Theoretical analysis and experiments confirm the effectiveness and robustness of our method on real-world datasets.

Towards Better Performance in Incomplete LDL: Addressing Data Imbalance

Oct 17, 2024

Abstract: Label Distribution Learning (LDL) is a novel machine learning paradigm that addresses the problem of label ambiguity and has found widespread applications. Obtaining complete label distributions in real-world scenarios is challenging, which has led to the emergence of Incomplete Label Distribution Learning (InLDL). However, the existing InLDL methods overlook a crucial aspect of LDL data: the inherent imbalance in label distributions. To address this limitation, we propose Incomplete and Imbalance Label Distribution Learning (I$^2$LDL), a framework that simultaneously handles incomplete labels and imbalanced label distributions. Our method decomposes the label distribution matrix into a low-rank component for frequent labels and a sparse component for rare labels, effectively capturing the structure of both head and tail labels. We optimize the model using the Alternating Direction Method of Multipliers (ADMM) and derive generalization error bounds via Rademacher complexity, providing strong theoretical guarantees. Extensive experiments on 15 real-world datasets demonstrate the effectiveness and robustness of our proposed framework compared to existing InLDL methods.

Addressing Skewed Heterogeneity via Federated Prototype Rectification with Personalization

Aug 15, 2024

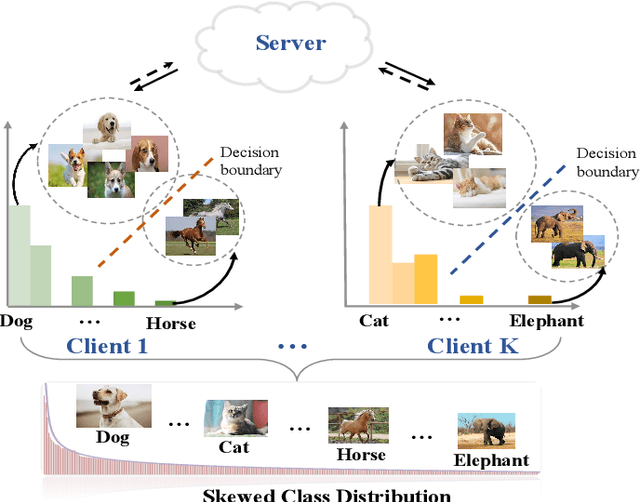

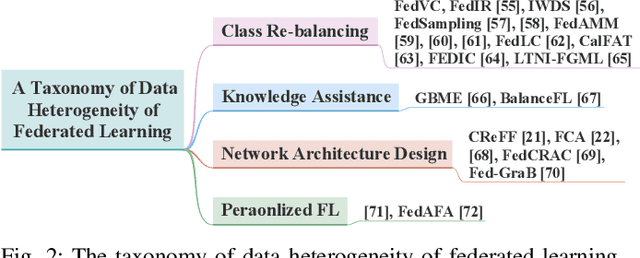

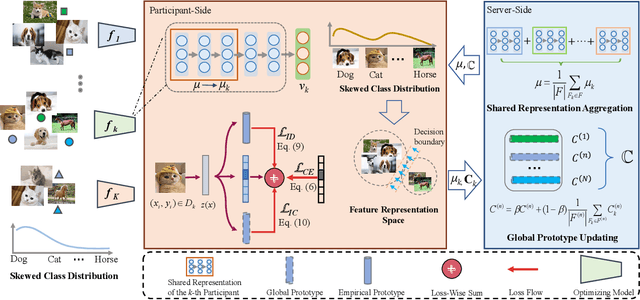

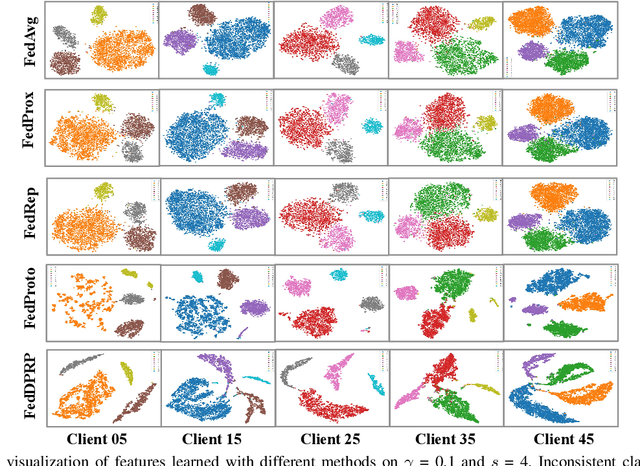

Abstract: Federated learning is an efficient framework designed to facilitate collaborative model training across multiple distributed devices while preserving user data privacy. A significant challenge of federated learning is data-level heterogeneity, i.e., skewed or long-tailed distribution of private data. Although various methods have been proposed to address this challenge, most of them assume that the underlying global data is uniformly distributed across all clients. This paper briefly reviews federated learning under data-level heterogeneity and defines a more practical and challenging setting called Skewed Heterogeneous Federated Learning (SHFL). Accordingly, we propose a novel Federated Prototype Rectification with Personalization, which consists of two parts: Federated Personalization and Federated Prototype Rectification. The former aims to construct balanced decision boundaries between dominant and minority classes based on private data, while the latter exploits both inter-class discrimination and intra-class consistency to rectify empirical prototypes. Experiments on three popular benchmarks show that the proposed approach outperforms current state-of-the-art methods and achieves balanced performance in both personalization and generalization.

Inaccurate Label Distribution Learning with Dependency Noise

May 26, 2024

Abstract: In this paper, we introduce the Dependent Noise-based Inaccurate Label Distribution Learning (DN-ILDL) framework to tackle the challenges posed by noise in label distribution learning, which arises from dependencies on instances and labels. We start by modeling the inaccurate label distribution matrix as a combination of the true label distribution and a noise matrix influenced by specific instances and labels. To address this, we develop a linear mapping from instances to their true label distributions, incorporating label correlations, and decompose the noise matrix using feature and label representations, applying group sparsity constraints to accurately capture the noise. Furthermore, we employ graph regularization to align the topological structures of the input and output spaces, ensuring accurate reconstruction of the true label distribution matrix. Using the Alternating Direction Method of Multipliers (ADMM) for efficient optimization, we validate our method's capability to recover true labels accurately and establish a generalization error bound. Extensive experiments demonstrate that DN-ILDL effectively addresses the ILDL problem and outperforms existing LDL methods.

Building Variable-sized Models via Learngene Pool

Dec 12, 2023

Abstract: Recently, Stitchable Neural Networks (SN-Net) were proposed to stitch together pre-trained networks for quickly building numerous networks with different complexity and performance trade-offs. In this way, the burden of designing or training variable-sized networks, which can be used in application scenarios with diverse resource constraints, is alleviated. However, SN-Net still faces a few challenges. 1) Stitching from multiple independently pre-trained anchors introduces high storage resource consumption. 2) SN-Net struggles to build smaller models for low resource constraints. 3) SN-Net uses an unlearned initialization method for stitch layers, limiting the final performance. To overcome these challenges, motivated by the recently proposed Learngene framework, we propose a novel method called Learngene Pool. Briefly, Learngene distills the critical knowledge from a large pre-trained model into a small part (termed the learngene) and then expands this small part into a few variable-sized models. In our proposed method, we distill one pre-trained large model into multiple small models whose network blocks are used as learngene instances to construct the learngene pool. Since only one large model is used, we do not need to store multiple large models as SN-Net does, and after distilling, smaller learngene instances can be created to build small models that satisfy low resource constraints. We also insert learnable transformation matrices between the instances to stitch them into variable-sized models and improve the performance of these models. Extensive experiments validate the effectiveness of the proposed Learngene Pool compared with SN-Net.

Data Augmentation For Label Enhancement

Mar 21, 2023

Abstract: Label distribution (LD) uses the description degree to describe instances, which provides more fine-grained supervision information when learning with label ambiguity. Nevertheless, LD is unavailable in many real-world applications. To obtain LD, label enhancement (LE) has emerged to recover LD from logical labels. Existing LE approaches have the following problems: (i) they use logical labels to train mappings to LD, but the supervision information is too loose, which can lead to inaccurate model prediction; (ii) they ignore feature redundancy and use the collected features directly. To solve (i), we use the topology of the feature space to generate more accurate label-confidence. To solve (ii), we propose a novel supervised LE dimensionality reduction approach, which projects the original data into a lower-dimensional feature space. Combining the two, we obtain the augmented data for LE. Further, we propose a novel nonlinear LE model based on the label-confidence and reduced features. Extensive experiments on 12 real-world datasets show that our method consistently outperforms the five compared approaches.
