Zhi Cao

Generalizable Machine Learning Models for Predicting Data Center Server Power, Efficiency, and Throughput

Mar 09, 2025

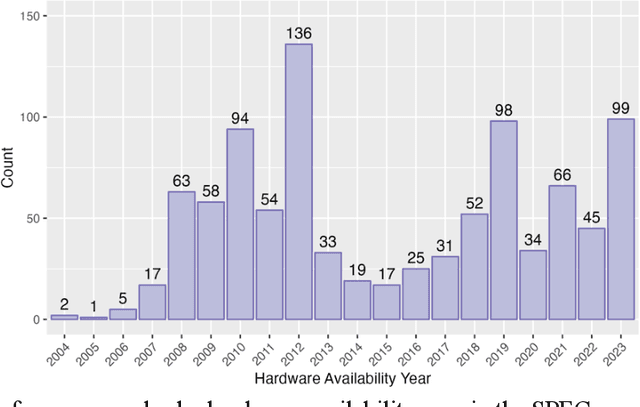

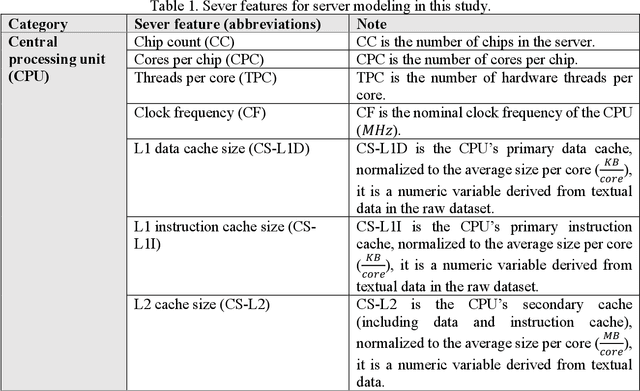

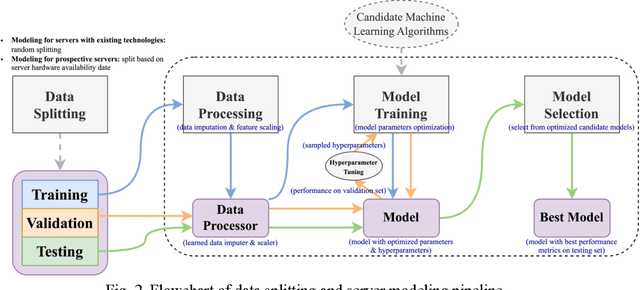

Abstract: In the rapidly evolving digital era, comprehending the intricate dynamics influencing server power consumption, efficiency, and performance is crucial for sustainable data center operations. However, existing models lack the ability to provide a detailed and reliable understanding of these intricate relationships. This study employs a machine learning-based approach, using the SPECPower_ssj2008 database, to facilitate user-friendly and generalizable server modeling. The resulting models demonstrate high accuracy, with errors falling within approximately 10% on the testing dataset, showcasing their practical utility and generalizability. Through meticulous analysis, predictive features related to hardware availability date, server workload level, and specifications are identified, providing insights into optimizing energy conservation, efficiency, and performance in server deployment and operation. By systematically measuring biases and uncertainties, the study underscores the need for caution when employing historical data for prospective server modeling, considering the dynamic nature of technology landscapes. Collectively, this work offers valuable insights into the sustainable deployment and operation of servers in data centers, paving the way for enhanced resource use efficiency and more environmentally conscious practices.

Active Sampling for Node Attribute Completion on Graphs

Jan 14, 2025

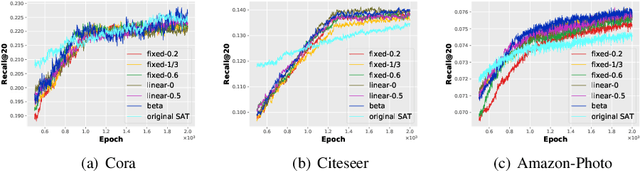

Abstract: Node attributes, a crucial type of information for graph analysis, may be partially or completely missing for certain nodes in real-world applications. Restoring the missing attributes is expected to benefit downstream graph learning. Few attempts have been made at node attribute completion, but a novel framework called Structure-attribute Transformer (SAT) was recently proposed, using a decoupled scheme to leverage structures and attributes. However, SAT ignores the differences among nodes in their contribution to the learning schedule, and finding a practical way to model the different importance of nodes with observed attributes is challenging. This paper proposes a novel AcTive Sampling algorithm (ATS) to restore missing node attributes. The representativeness and uncertainty of each node's information are first measured based on graph structure, representation similarity, and learning bias. To select nodes as training samples in the next optimization step, a weighting scheme controlled by a Beta distribution is then introduced to linearly combine the two properties. Extensive experiments on four public benchmark datasets and two downstream tasks have shown the superiority of ATS in node attribute completion.
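The selection step described in this abstract (a Beta-distributed weight that linearly combines representativeness and uncertainty) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the `alpha` and `beta` parameters, the top-k selection rule, and the assumption that both scores are already normalized to [0, 1] are all hypothetical choices made for illustration.

```python
import random


def combine_scores(representativeness, uncertainty, gamma):
    """Linearly combine two per-node scores with weight gamma in [0, 1]."""
    return [
        gamma * r + (1.0 - gamma) * u
        for r, u in zip(representativeness, uncertainty)
    ]


def select_nodes(representativeness, uncertainty, k, alpha=1.0, beta=1.0, rng=None):
    """Pick the k highest-scoring nodes under a Beta-sampled weight.

    Assumption: alpha and beta are free hyperparameters here; the paper may
    schedule them differently over the course of training.
    """
    rng = rng or random.Random()
    gamma = rng.betavariate(alpha, beta)  # weight drawn from Beta(alpha, beta)
    scores = combine_scores(representativeness, uncertainty, gamma)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k], gamma


if __name__ == "__main__":
    rep = [0.9, 0.1, 0.5]  # hypothetical representativeness scores
    unc = [0.8, 0.2, 0.4]  # hypothetical uncertainty scores
    chosen, weight = select_nodes(rep, unc, k=2, rng=random.Random(0))
    print(chosen, round(weight, 3))
```

Changing `alpha` and `beta` shifts the expected weight toward one property or the other, which is one plausible way such a scheme could trade representativeness against uncertainty.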

Instructional Video Generation

Dec 05, 2024

Abstract: Despite the recent strides in video generation, state-of-the-art methods still struggle with elements of visual detail. One particularly challenging case is the class of egocentric instructional videos in which the intricate motion of the hand coupled with a mostly stable and non-distracting environment is necessary to convey the appropriate visual action instruction. To address these challenges, we introduce a new method for instructional video generation. Our diffusion-based method incorporates two distinct innovations. First, we propose an automatic method to generate the expected region of motion, guided by both the visual context and the action text. Second, we introduce a critical hand structure loss to guide the diffusion model to focus on smooth and consistent hand poses. We evaluate our method on augmented instructional datasets based on EpicKitchens and Ego4D, demonstrating significant improvements over state-of-the-art methods in terms of instructional clarity, especially of the hand motion in the target region, across diverse environments and actions. Video results can be found on the project webpage: https://excitedbutter.github.io/Instructional-Video-Generation/

Enhancing Adversarial Training with Prior Knowledge Distillation for Robust Image Compression

Mar 16, 2024

Abstract: Deep neural network-based image compression (NIC) has achieved excellent performance, but NIC models have been shown to be susceptible to backdoor attacks. Adversarial training has been validated in image compression models as a common method to enhance model robustness. However, the improvement that adversarial training brings to model robustness is limited. In this paper, we propose a prior knowledge-guided adversarial training framework for image compression models. Specifically, we first propose a gradient regularization constraint for training robust teacher models. Subsequently, we design a knowledge distillation-based strategy to transfer prior knowledge from the teacher model to the student model for guiding adversarial training. Experimental results show that our method improves the reconstruction quality by about 9 dB when the Kodak dataset is selected as the backdoor attack object for the PSNR attack. Compared with Ma2023, our method has a 5 dB higher PSNR output at high bitrate points.
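For readers unfamiliar with the metric behind the reported dB gains, the following sketch computes PSNR under its standard definition, 10 · log10(MAX² / MSE). It assumes 8-bit pixel values (peak 255) supplied as flat lists; the function names are illustrative and not taken from the paper.

```python
import math


def mse(original, reconstructed):
    """Mean squared error between two equal-length pixel sequences."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)


def psnr(original, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    error = mse(original, reconstructed)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


if __name__ == "__main__":
    clean = [0, 0, 0, 0]      # hypothetical pixel values
    noisy = [10, 10, 10, 10]  # every pixel off by 10
    print(round(psnr(clean, noisy), 2))
```

Under this definition, a gain of 9 dB corresponds to the MSE shrinking by a factor of 10^0.9, roughly 7.9.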

3DRIMR: 3D Reconstruction and Imaging via mmWave Radar based on Deep Learning

Aug 05, 2021

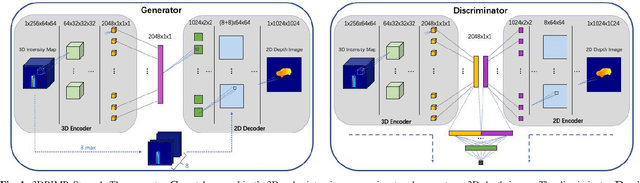

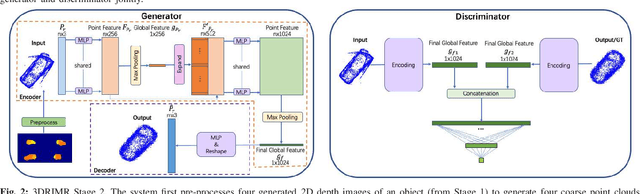

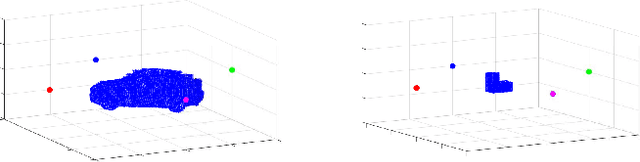

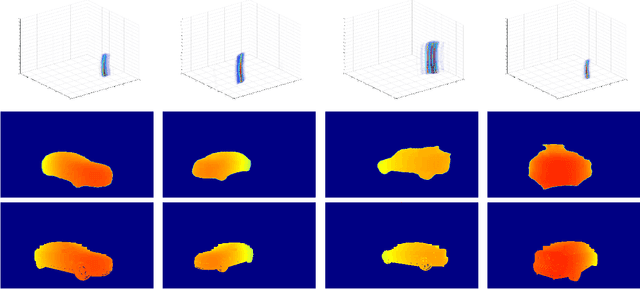

Abstract: mmWave radar has been shown to be an effective sensing technique in low-visibility, smoky, dusty, and densely foggy environments. However, tapping the potential of radar sensing to reconstruct 3D object shapes remains a great challenge, due to the characteristics of radar data such as sparsity, low resolution, specularity, high noise, and multi-path induced shadow reflections and artifacts. In this paper we propose 3D Reconstruction and Imaging via mmWave Radar (3DRIMR), a deep learning based architecture that reconstructs the 3D shape of an object in a dense, detailed point cloud format, based on sparse raw mmWave radar intensity data. The architecture consists of two back-to-back conditional GAN deep neural networks: the first generator network generates 2D depth images based on raw radar intensity data, and the second generator network outputs 3D point clouds based on the results of the first generator. The architecture exploits both the convolutional operation of convolutional neural networks (which extracts local structure neighborhood information) and the efficiency and detailed geometry capture capability of point clouds (rather than costly voxelization of 3D space or distance fields). Our experiments have demonstrated 3DRIMR's effectiveness in reconstructing 3D objects, and its performance improvement over standard techniques.

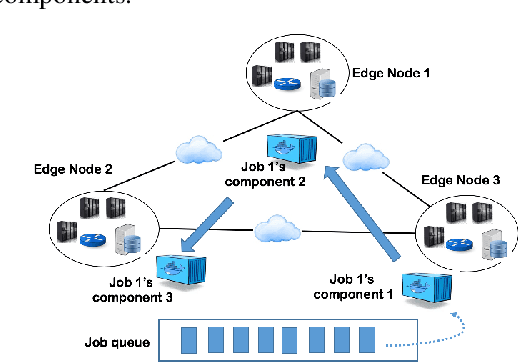

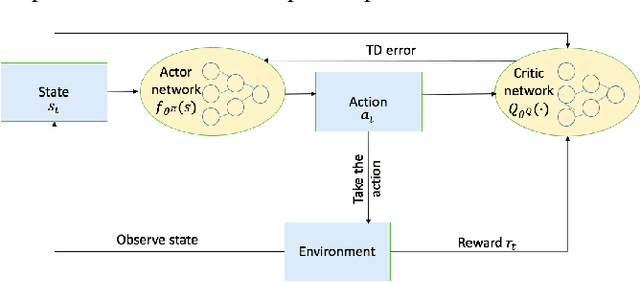

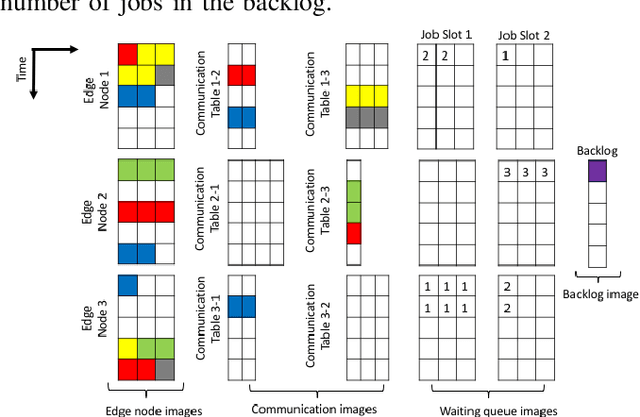

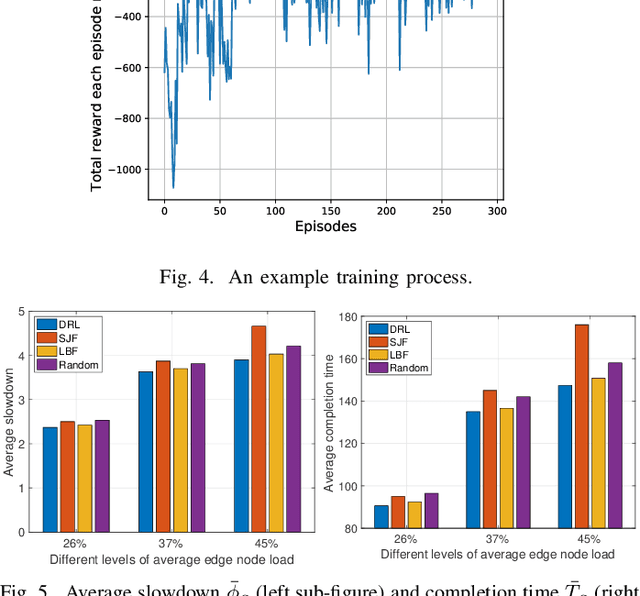

A Deep Reinforcement Learning Approach to Multi-component Job Scheduling in Edge Computing

Sep 01, 2019

Abstract: We are interested in the optimal scheduling of a collection of multi-component application jobs in an edge computing system that consists of geo-distributed edge computing nodes connected through a wide area network. The scheduling and placement of application jobs in an edge system is challenging due to the interdependence of multiple components of each job, and the communication delays between the geographically distributed data sources and edge nodes and their dynamic availability. In this paper we explore the feasibility of applying Deep Reinforcement Learning (DRL) based design to address these challenges. We introduce a DRL actor-critic algorithm that aims to find an optimal scheduling policy to minimize average job slowdown in the edge system. We have demonstrated through simulations that our design outperforms a few existing algorithms, based on both synthetic data and a Google cloud data trace.
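The abstract does not define "average job slowdown". A common definition in the scheduling literature, assumed here, is the time a job spends in the system (finish minus arrival) divided by its ideal service time, averaged over all jobs. The sketch below uses that assumed definition; the `Job` type and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    arrival: float  # time the job entered the system
    finish: float   # time the job completed
    service: float  # ideal run time with no waiting (must be > 0)


def slowdown(job):
    """Time in system divided by ideal service time (assumed definition)."""
    if job.service <= 0:
        raise ValueError("service time must be positive")
    return (job.finish - job.arrival) / job.service


def average_slowdown(jobs):
    """Mean slowdown over a non-empty collection of jobs."""
    jobs = list(jobs)
    if not jobs:
        raise ValueError("need at least one job")
    return sum(slowdown(j) for j in jobs) / len(jobs)


if __name__ == "__main__":
    trace = [Job(0.0, 10.0, 5.0), Job(2.0, 6.0, 4.0)]  # hypothetical trace
    print(average_slowdown(trace))
```

A slowdown of 1.0 means a job never waited, so a scheduling policy that minimizes this average is penalized more heavily for delaying short jobs than long ones.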
