Zetong Chen

SCFANet: Style Distribution Constraint Feature Alignment Network For Pathological Staining Translation

Apr 01, 2025

Abstract:Immunohistochemical (IHC) staining serves as a valuable technique for detecting specific antigens or proteins through antibody-mediated visualization. However, the IHC staining process is both time-consuming and costly. To address these limitations, the application of deep learning models for direct translation of cost-effective Hematoxylin and Eosin (H&E) stained images into IHC stained images has emerged as an efficient solution. Nevertheless, the conversion from H&E to IHC images presents significant challenges, primarily due to alignment discrepancies between image pairs and the inherent diversity in IHC staining style patterns. To overcome these challenges, we propose the Style Distribution Constraint Feature Alignment Network (SCFANet), which incorporates two innovative modules: the Style Distribution Constrainer (SDC) and Feature Alignment Learning (FAL). The SDC ensures consistency between the generated and target images' style distributions while integrating cycle consistency loss to maintain structural consistency. To mitigate the complexity of direct image-to-image translation, the FAL module decomposes the end-to-end translation task into two subtasks: image reconstruction and feature alignment. Furthermore, we ensure pathological consistency between generated and target images by maintaining pathological pattern consistency and Optical Density (OD) uniformity. Extensive experiments conducted on the Breast Cancer Immunohistochemical (BCI) dataset demonstrate that our SCFANet model outperforms existing methods, achieving precise transformation of H&E-stained images into their IHC-stained counterparts. The proposed approach not only addresses the technical challenges in H&E to IHC image translation but also provides a robust framework for accurate and efficient stain conversion in pathological analysis.
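
The abstract mentions Optical Density (OD) uniformity without saying how it is measured. As a rough illustration only, the sketch below uses the standard Beer-Lambert definition, OD = -log10(I / I0), and compares the mean OD of two small pixel lists. The function names (`optical_density`, `mean_od`, `od_gap`), the 8-bit background intensity of 255, and the use of an absolute difference are assumptions made for this example; they are not taken from the SCFANet paper.

```python
import math


def optical_density(intensity, background=255.0, eps=1.0):
    """Beer-Lambert optical density of one channel value: -log10(I / I0).

    `background` (I0) is assumed to be the 8-bit white level; `eps` keeps
    the logarithm finite for fully dark pixels.
    """
    clipped = min(max(float(intensity), eps), background)
    return -math.log10(clipped / background)


def mean_od(pixels, background=255.0):
    """Average optical density over an iterable of (R, G, B) pixels."""
    total = 0.0
    count = 0
    for pixel in pixels:
        for channel in pixel:
            total += optical_density(channel, background)
            count += 1
    return total / count if count else 0.0


def od_gap(generated, target):
    """Hypothetical uniformity measure: absolute difference in mean OD."""
    return abs(mean_od(generated) - mean_od(target))


if __name__ == "__main__":
    generated = [(200, 150, 120), (90, 60, 40)]
    target = [(210, 160, 110), (80, 70, 50)]
    print(f"mean OD gap: {od_gap(generated, target):.4f}")
```

A smaller gap means the two images absorb a similar amount of light on average, which is one plausible reading of "OD uniformity" between generated and target stains.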

DAFFNet: A Dual Attention Feature Fusion Network for Classification of White Blood Cells

May 25, 2024

Abstract:The precise categorization of white blood cells (WBCs) is crucial for diagnosing blood-related disorders. However, manual analysis in clinical settings is time-consuming, labor-intensive, and prone to errors. Numerous studies have employed machine learning and deep learning techniques to achieve objective WBC classification, yet these studies have not fully utilized the information in WBC images. Therefore, our motivation is to comprehensively utilize the morphological information and high-level semantic information of WBC images to achieve accurate WBC classification. In this study, we propose a novel dual-branch network, the Dual Attention Feature Fusion Network (DAFFNet), which for the first time integrates high-level semantic features with morphological features of WBCs to achieve accurate classification. Specifically, we introduce a dual attention mechanism, which enables the model to utilize the channel features and spatially localized features of the image more comprehensively. A Morphological Feature Extractor (MFE), comprising a Morphological Attributes Predictor (MAP) and a Morphological Attributes Encoder (MAE), is proposed to extract the morphological features of WBCs. We also implement Deep-supervised Learning (DSL) and Semi-supervised Learning (SSL) training strategies for MAE to enhance its performance. Our proposed network framework achieves 98.77%, 91.30%, 98.36%, 99.71%, 98.45%, and 98.85% overall accuracy on the six public datasets PBC, LISC, Raabin-WBC, BCCD, LDWBC, and Labelled, respectively, demonstrating superior effectiveness compared to existing studies. The results indicate that combining high-level semantic features with low-level morphological features is of great significance for WBC classification, laying the foundation for objective and accurate classification of WBCs in microscopic blood cell images.

Combining Radiomics and Machine Learning Approaches for Objective ASD Diagnosis: Verifying White Matter Associations with ASD

May 25, 2024

Abstract:Autism Spectrum Disorder is a condition characterized by atypical brain development leading to impairments in social skills, communication abilities, repetitive behaviors, and sensory processing. There have been many studies combining brain MRI images with machine learning algorithms to achieve objective diagnosis of autism, but the correlation between white matter and autism has not been fully utilized. To address this gap, we develop a computer-aided diagnostic model focusing on white matter regions in brain MRI by employing radiomics and machine learning methods. This study introduces a MultiUNet model for segmenting white matter, leveraging the UNet architecture and utilizing manually segmented MRI images as the training data. Subsequently, we extract white matter features using the Pyradiomics toolkit and apply different machine learning models, namely Support Vector Machine, Random Forest, Logistic Regression, and K-Nearest Neighbors, to predict autism. All of these models exceeded 80% accuracy on the prediction set. Additionally, we employed a Convolutional Neural Network to analyze segmented white matter images, achieving a prediction accuracy of 86.84%. Notably, Support Vector Machine demonstrated the highest prediction accuracy at 89.47%. These findings not only underscore the efficacy of the models but also establish a link between white matter abnormalities and autism. Our study contributes a comprehensive evaluation of various diagnostic models for autism and introduces a computer-aided diagnostic algorithm for early and objective autism diagnosis based on MRI white matter regions.

A better approach to diagnose retinal diseases: Combining our Segmentation-based Vascular Enhancement with deep learning features

May 25, 2024

Abstract:Abnormalities in retinal fundus images may indicate certain pathologies such as diabetic retinopathy, hypertension, stroke, glaucoma, retinal macular edema, venous occlusion, and atherosclerosis, making the study and analysis of retinal images of great significance. In conventional medicine, the diagnosis of retina-related diseases relies on a physician's subjective assessment of the retinal fundus images, which is time-consuming and whose accuracy depends heavily on the physician's experience. To this end, this paper proposes a fast, objective, and accurate method for the diagnosis of diseases related to retinal fundus images. This method is a multiclassification study of normal samples and 13 categories of disease samples on the STARE database, with a test set accuracy of 99.96%. Compared with other studies, our method achieved the highest accuracy. This study proposes Segmentation-based Vascular Enhancement (SVE). After comparing the classification performance of deep learning models on SVE images, original images, and Smooth Grad-CAM++ images, we extracted the deep learning features and traditional features of the SVE images and input them into nine meta learners for classification. The results show that our proposed UNet-SVE-VGG-MLP model has the best performance for classifying diseases related to retinal fundus images on the STARE database, with an overall accuracy of 99.96% and a weighted AUC of 99.98% for the 14 categories on the test dataset. This method can be used to realize rapid, objective, and accurate classification and diagnosis of retinal fundus image related diseases.

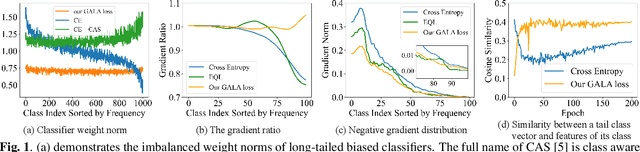

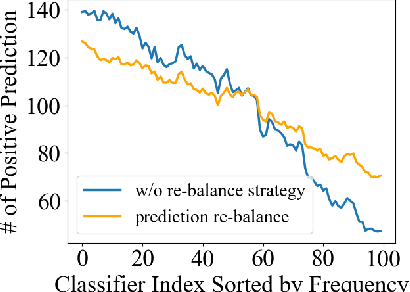

Gradient-Aware Logit Adjustment Loss for Long-tailed Classifier

Mar 14, 2024

Abstract:In real-world settings, data often follows a long-tailed distribution, where head classes contain significantly more training samples than tail classes. Consequently, models trained on such data tend to be biased toward head classes. The medium of this bias is imbalanced gradients, which include not only the ratio of scale between positive and negative gradients but also imbalanced gradients from different negative classes. Therefore, we propose the Gradient-Aware Logit Adjustment (GALA) loss, which adjusts the logits based on accumulated gradients to balance the optimization process. Additionally, we find that most solutions to long-tailed problems are still biased toward head classes in the end, and we propose a simple post hoc prediction re-balancing strategy to further mitigate the bias toward head classes. Extensive experiments are conducted on multiple popular long-tailed recognition benchmark datasets to evaluate the effectiveness of these two designs. Our approach achieves top-1 accuracy of 48.5%, 41.4%, and 73.3% on CIFAR100-LT, Places-LT, and iNaturalist, outperforming the state-of-the-art method GCL by a significant margin of 3.62%, 0.76%, and 1.2%, respectively. Code is available at https://github.com/lt-project-repository/lt-project.
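
The abstract does not give the GALA formula, so the sketch below does not implement it. It shows the simpler, widely used prior-based logit adjustment instead, where each logit is shifted by the log of its class frequency before the softmax cross-entropy is computed. GALA, as described above, derives its adjustment from accumulated gradients rather than class counts. The function names and the `tau` scaling parameter are assumptions made for this example.

```python
import math


def log_priors(class_counts):
    """Log of the empirical class frequencies (all counts assumed > 0)."""
    total = sum(class_counts)
    return [math.log(count / total) for count in class_counts]


def logit_adjusted_ce(logits, label, class_counts, tau=1.0):
    """Cross-entropy on logits shifted by tau * log(class prior).

    This is prior-based logit adjustment, NOT the gradient-aware GALA loss.
    """
    priors = log_priors(class_counts)
    adjusted = [z + tau * lp for z, lp in zip(logits, priors)]
    # Numerically stable log-sum-exp over the adjusted logits.
    peak = max(adjusted)
    log_norm = peak + math.log(sum(math.exp(a - peak) for a in adjusted))
    return log_norm - adjusted[label]


if __name__ == "__main__":
    counts = [90, 10]    # head class 0, tail class 1
    logits = [0.0, 0.0]  # model is undecided
    print("head-sample loss:", logit_adjusted_ce(logits, 0, counts))
    print("tail-sample loss:", logit_adjusted_ce(logits, 1, counts))
```

With equal raw logits, the tail-class sample incurs the larger loss, so training pushes the model toward a wider margin on rare classes. A gradient-based adjustment would aim for a similar balancing effect using statistics gathered during optimization.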

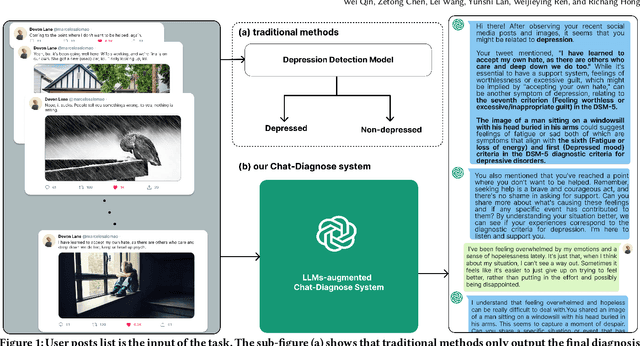

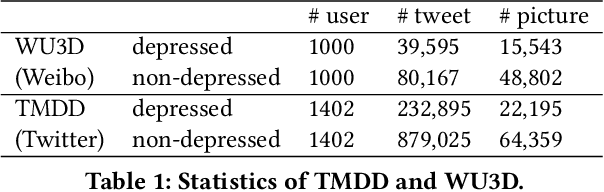

Read, Diagnose and Chat: Towards Explainable and Interactive LLMs-Augmented Depression Detection in Social Media

May 09, 2023

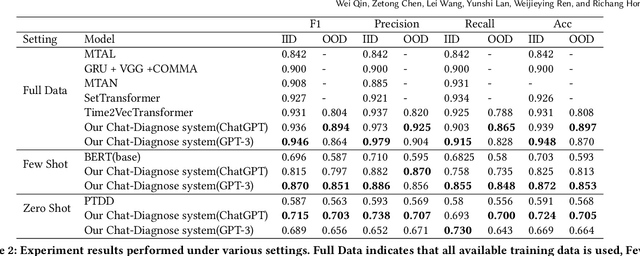

Abstract:This paper proposes a new depression detection system based on LLMs that is both interpretable and interactive. It not only provides a diagnosis, but also diagnostic evidence and personalized recommendations based on natural language dialogue with the user. We address challenges such as the processing of large amounts of text and integrate professional diagnostic criteria. Our system outperforms traditional methods across various settings and is demonstrated through case studies.
