Zahra Rezaei Khavas

University of Massachusetts Lowell

DECISIVE Benchmarking Data Report: sUAS Performance Results from Phase I

Jan 20, 2023

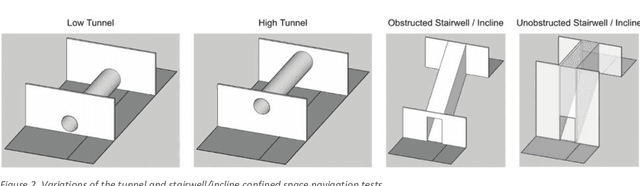

Abstract: This report reviews all results derived from performance benchmarking conducted during Phase I of the Development and Execution of Comprehensive and Integrated Subterranean Intelligent Vehicle Evaluations (DECISIVE) project by the University of Massachusetts Lowell, using the test methods specified in the DECISIVE Test Methods Handbook v1.1 for evaluating small unmanned aerial systems (sUAS) performance in subterranean and constrained indoor environments, spanning communications, field readiness, interface, obstacle avoidance, navigation, mapping, autonomy, trust, and situation awareness. Using those 20 test methods, over 230 tests were conducted across 8 sUAS platforms: Cleo Robotics Dronut X1P (P = prototype), FLIR Black Hornet PRS, Flyability Elios 2 GOV, Lumenier Nighthawk V3, Parrot ANAFI USA GOV, Skydio X2D, Teal Golden Eagle, and Vantage Robotics Vesper. Best-in-class criteria are specified for each applicable test method, and the sUAS that meet these criteria are named for each test method, along with a high-level executive summary of their performance.

Evaluation of Performance-Trust vs Moral-Trust Violation in 3D Environment

Jun 30, 2022

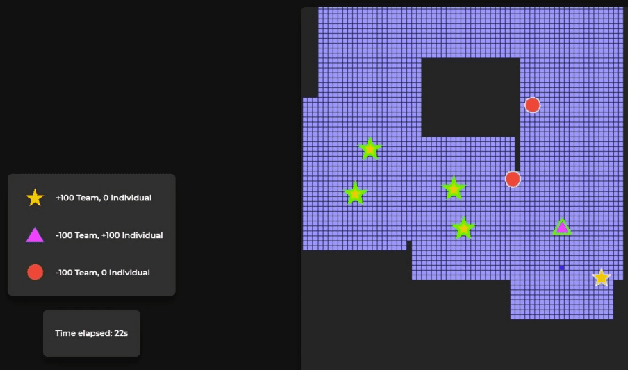

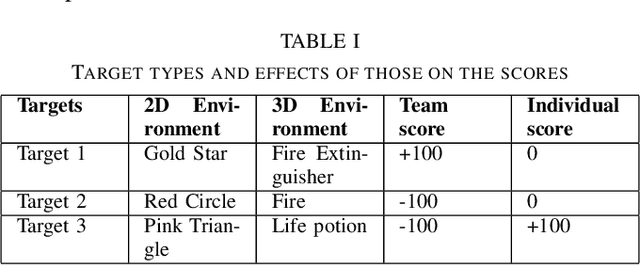

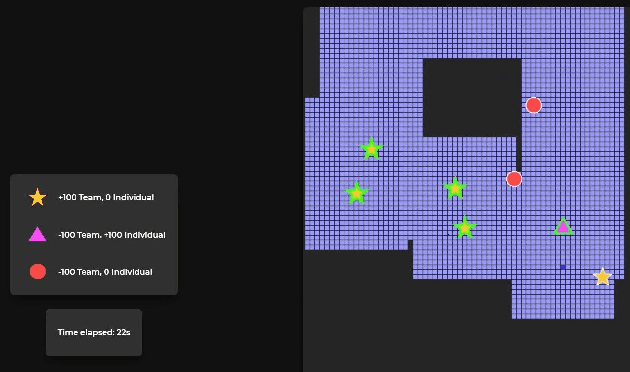

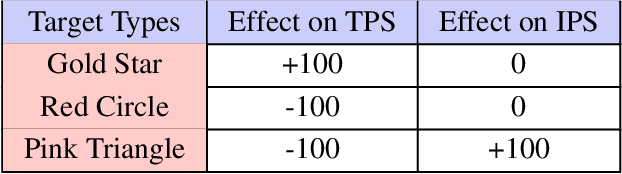

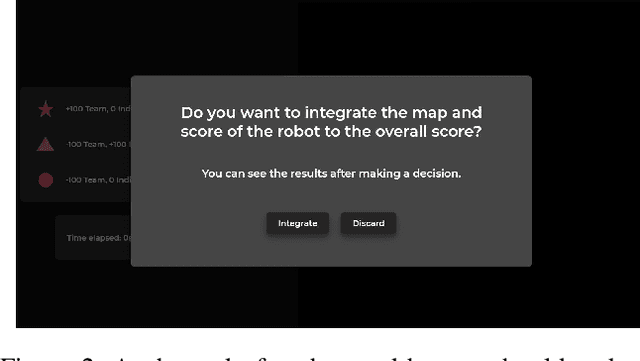

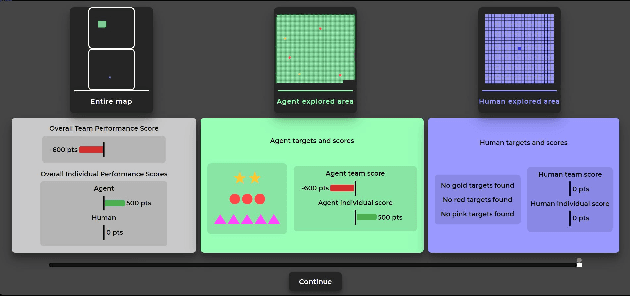

Abstract: Human-Robot Interaction, in which a robot with some level of autonomy interacts with a human to achieve a specific goal, has seen much recent progress. With the introduction of autonomous robots and the possibility of their widespread use in the near future, it is critical that humans understand a robot's intention while interacting with it, as this will foster the development of human-robot trust. The new conceptualization of trust introduced by researchers in recent years considers trust in Human-Robot Interaction to be multidimensional. The two main aspects attributed to trust are performance trust and moral trust. We aim to design an experiment to investigate the consequences of performance-trust violation and moral-trust violation in a search and rescue scenario. We want to see if two similar robot failures, one caused by a performance-trust violation and the other by a moral-trust violation, have distinct effects on human trust. In addition, we plan to develop an interface that allows us to investigate whether altering the interface's modality from a grid-world scenario (2D environment) to a realistic simulation (3D environment) affects human perception of the task and the effects of the robot's failure on human trust.

Trust Calibration and Trust Respect: A Method for Building Team Cohesion in Human Robot Teams

Oct 13, 2021

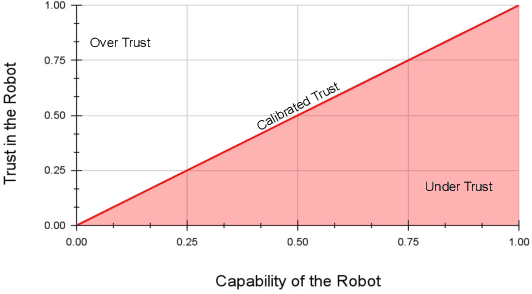

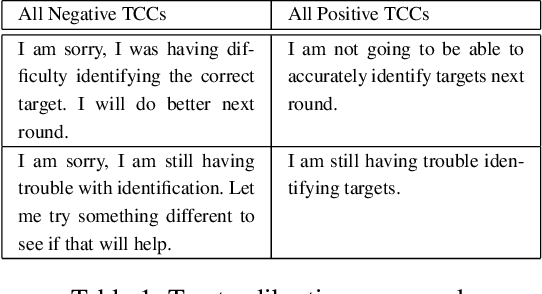

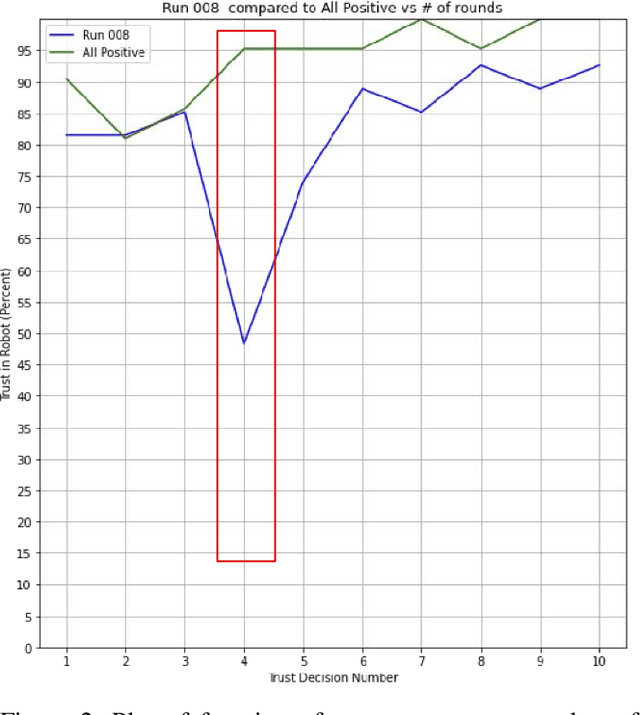

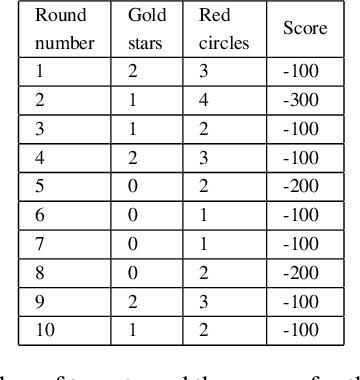

Abstract: Recent advances in the areas of human-robot interaction (HRI) and robot autonomy are changing the world. Today robots are used in a variety of applications. People and robots work together in human autonomous teams (HATs) to accomplish tasks that, separately, cannot be easily accomplished. Trust between robots and humans in HATs is vital to task completion and effective team cohesion. For optimal performance and safety of human operators in HRI, human trust should be adjusted to the actual performance and reliability of the robotic system. The cost of poor trust calibration in HRI is, at a minimum, low performance, and at higher levels it causes human injury or critical task failures. While trust calibration is vital to team cohesion, it is also important for a robot to be able to assess whether or not a human is exhibiting signs of mistrust due to some other factor such as anger, distraction, or frustration. In these situations the robot chooses not to calibrate trust; instead, it chooses to respect trust. The decision to respect trust is determined by the robot's knowledge of whether or not a human should trust the robot based on its actions (successes and failures) and its feedback to the human. We show that feedback in the form of trust calibration cues (TCCs) can effectively change the trust level in humans. This information is potentially useful in aiding a robot in its decision to respect trust.

Moral-Trust Violation vs Performance-Trust Violation by a Robot: Which Hurts More?

Oct 09, 2021

Abstract: In recent years a modern conceptualization of trust in human-robot interaction (HRI) was introduced by Ullman et al. This new conceptualization suggested that trust between humans and robots is multidimensional, incorporating both performance aspects (i.e., similar to the trust in human-automation interaction) and moral aspects (i.e., similar to the trust in human-human interaction). But how does a robot violating each of these different aspects of trust affect human trust in a robot? How does trust in robots change when a robot commits a moral-trust violation compared to a performance-trust violation? And do physiological signals have the potential to be used for assessing the gain or loss of each of these two trust aspects in a human? We aim to design an experiment to study the effects of performance-trust violation and moral-trust violation separately in a search and rescue task. We want to see whether two failures of a robot with equal magnitudes would affect human trust differently if one failure is due to a performance-trust violation and the other is due to a moral-trust violation.

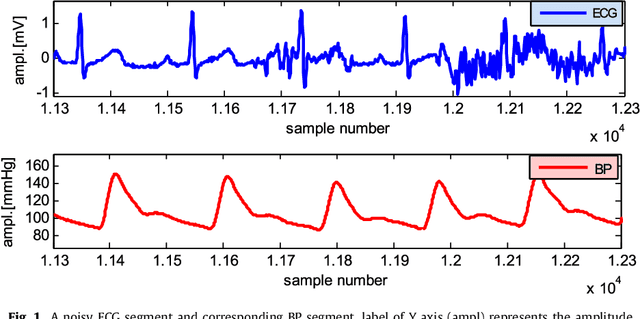

Robust heartbeat detection using multimodal recordings and ECG quality assessment with signal amplitudes dispersion

May 20, 2021

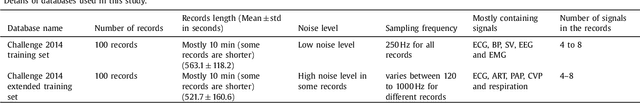

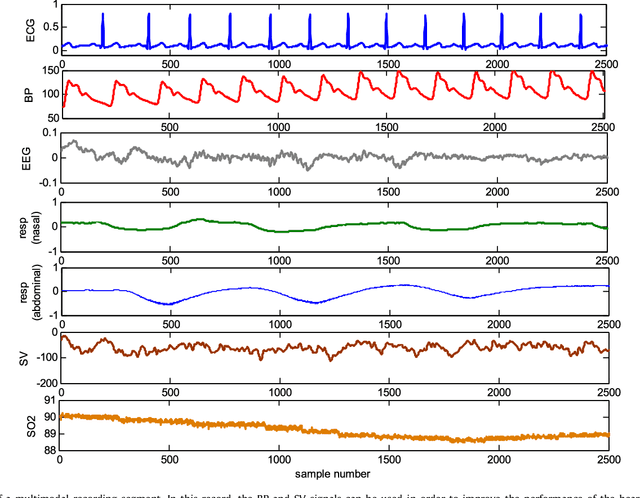

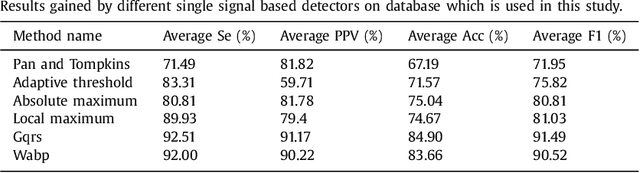

Abstract: Method: In this study, a new method is introduced for distinguishing noise-free segments of ECG from noisy segments. It combines sample amplitude dispersion, using an adaptive threshold on the variance of sample amplitudes, with a check on the compatibility of beats detected in the ECG and in other signals related to heart activity, such as BP, arterial pressure (ART), and pulmonary artery pressure (PAP). The other pulsatile signals are prioritized based on the amplitude and clarity of their peaks, and a fusion strategy is employed for segments on which the ECG is noisy: the other available signals in the data that contain peaks corresponding to the R peak of the ECG are scored by a three-step scoring function.

* 14 pages, journal paper, 14 figures, 3 tables
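
The amplitude-dispersion idea in the abstract above can be illustrated with a minimal sketch. This is not the paper's algorithm: the function name `flag_noisy_segments`, the fixed non-overlapping windows, and the choice of a scaled median of window variances as the adaptive threshold are all assumptions made for illustration, since the abstract does not specify them.

```python
from statistics import median, pvariance


def flag_noisy_segments(signal, window=50, factor=3.0):
    """Return one boolean per non-overlapping window: True if judged noisy.

    Hypothetical sketch: the windowing scheme and the threshold rule are
    assumptions, not the method described in the paper.
    """
    # Split the signal into fixed-length windows; a trailing partial window is dropped.
    segments = [signal[i:i + window]
                for i in range(0, len(signal) - window + 1, window)]
    if not segments:
        return []

    # Dispersion of sample amplitudes within each window.
    variances = [pvariance(seg) for seg in segments]

    # Adaptive threshold (assumed form): a multiple of the median window
    # variance, so the cutoff scales with the overall amplitude of the record.
    threshold = factor * median(variances)

    return [v > threshold for v in variances]


# Usage: four low-dispersion windows and one high-dispersion window.
clean = [1, -1] * 25      # 50 samples, variance 1
burst = [50, -50] * 25    # 50 samples, variance 2500
flags = flag_noisy_segments(clean * 2 + burst + clean * 2, window=50)
print(flags)
```

Taking the threshold from the record's own variance distribution, rather than from a fixed constant, is one simple way to make it adaptive; the beat-compatibility check and the multimodal fusion step described in the abstract are not covered by this sketch.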

A Review on Trust in Human-Robot Interaction

May 20, 2021

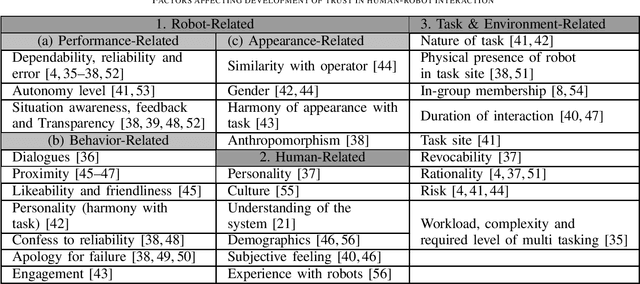

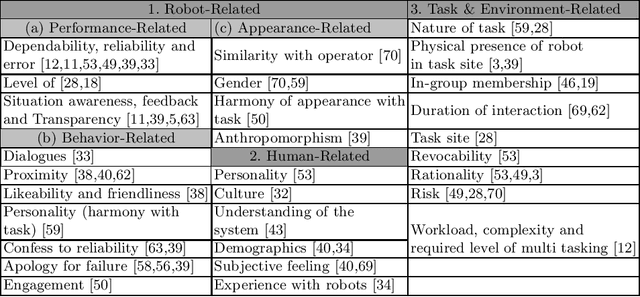

Abstract: Due to rapid developments in the field of robotics and human-robot interaction, prospective robotic agents are intended to play the role of teammates and partner with humans to perform operations, rather than serve as tools that replace or help humans in a specific task. This notion of partnering with robots raises new challenges for human-robot interaction (HRI), which gives rise to a new field of research in HRI, namely human-robot trust. Where humans and robots work as partners, the performance of the work can be diminished if humans do not trust robots appropriately. Considering the impact of human-robot trust observed in different HRI fields, many researchers have investigated human-robot trust and examined various related concerns. In this work, we review past work on human-robot trust organized by research topic and discuss selected trends in this field. Based on these reviews, we propose some ideas and areas of potential future research at the end of this paper.

Modeling Trust in Human-Robot Interaction: A Survey

Nov 09, 2020

Abstract: As the autonomy and capabilities of robotic systems increase, they are expected to play the role of teammates rather than tools and interact with human collaborators in a more realistic manner, creating a more human-like relationship. Given the impact of trust observed in human-robot interaction (HRI), appropriate trust in robotic collaborators is one of the leading factors influencing the performance of human-robot interaction. Team performance can be diminished if people do not trust robots appropriately, disusing or misusing them based on limited experience. Therefore, trust in HRI needs to be calibrated properly, rather than maximized, to allow the formation of an appropriate level of trust in human collaborators. For trust calibration in HRI, trust needs to be modeled first. There are many reviews on factors affecting trust in HRI; however, as no reviews concentrate on different trust models, in this paper we review different techniques and methods for trust modeling in HRI. We also present a list of potential directions for further research and some challenges that need to be addressed in future work on human-robot trust modeling.