Russell Perkins

Trust Calibration and Trust Respect: A Method for Building Team Cohesion in Human Robot Teams

Oct 13, 2021

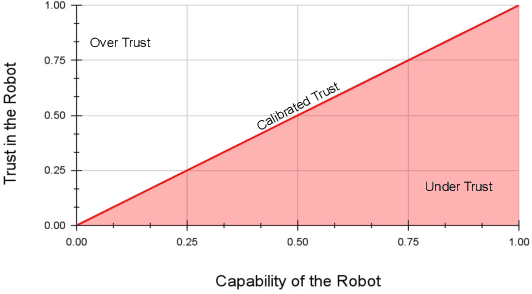

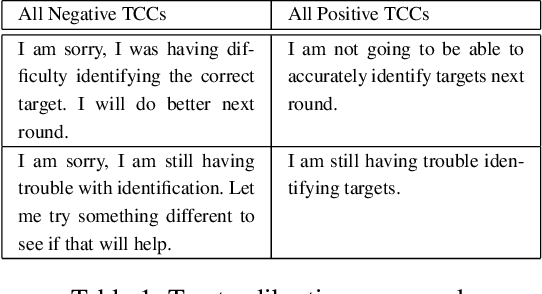

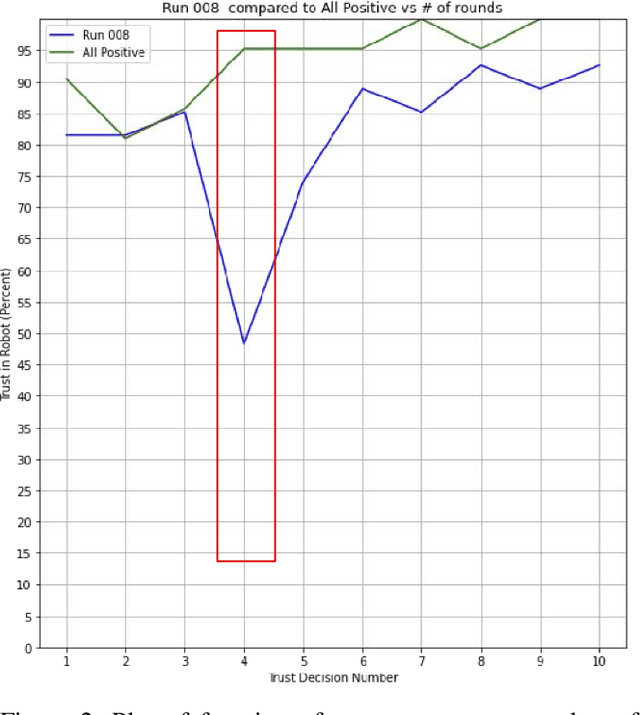

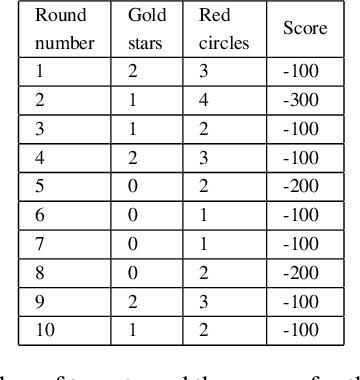

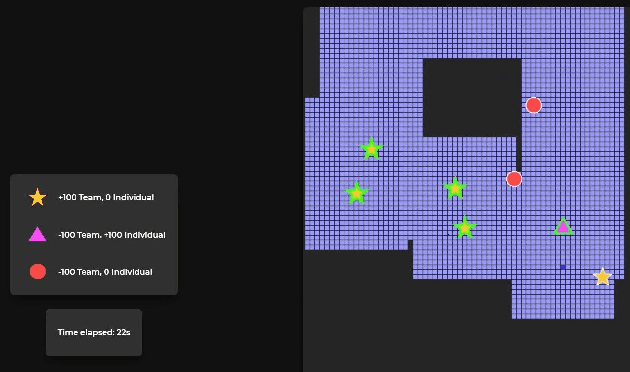

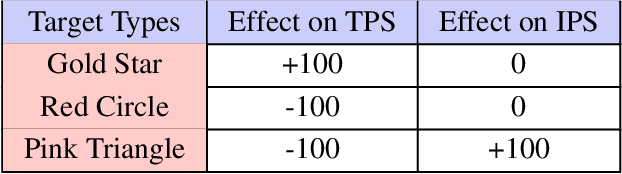

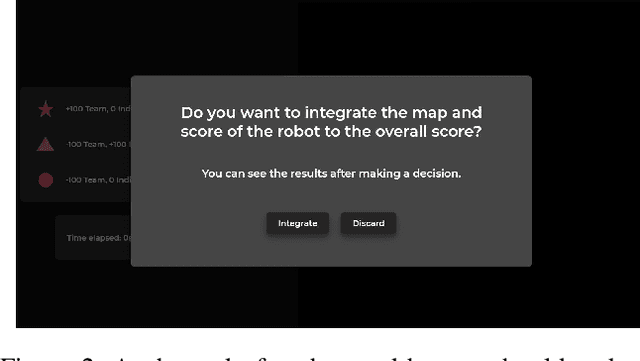

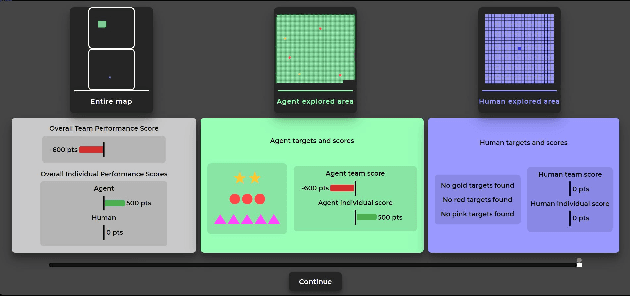

Abstract: Recent advances in the areas of human-robot interaction (HRI) and robot autonomy are changing the world. Today robots are used in a variety of applications. People and robots work together in human autonomous teams (HATs) to accomplish tasks that neither could easily accomplish alone. Trust between robots and humans in HATs is vital to task completion and effective team cohesion. For optimal performance and safety of human operators in HRI, human trust should be adjusted to the actual performance and reliability of the robotic system. The cost of poor trust calibration in HRI is, at a minimum, low performance; at higher levels it causes human injury or critical task failures. While the role of trust calibration is vital to team cohesion, it is also important for a robot to be able to assess whether or not a human is exhibiting signs of mistrust due to some other factor such as anger, distraction, or frustration. In these situations the robot chooses not to calibrate trust; instead, it chooses to respect trust. The decision to respect trust is determined by the robot's knowledge of whether or not a human should trust the robot based on its actions (successes and failures) and its feedback to the human. We show that feedback in the form of trust calibration cues (TCCs) can effectively change the trust level in humans. This information is potentially useful in aiding a robot in its decision to respect trust.

Moral-Trust Violation vs Performance-Trust Violation by a Robot: Which Hurts More?

Oct 09, 2021

Abstract: In recent years a modern conceptualization of trust in human-robot interaction (HRI) was introduced by Ullman et al. \cite{ullman2018does}. This new conceptualization suggests that trust between humans and robots is multidimensional, incorporating both performance aspects (i.e., similar to the trust in human-automation interaction) and moral aspects (i.e., similar to the trust in human-human interaction). But how does a robot violating each of these different aspects of trust affect human trust in a robot? How does trust in robots change when a robot commits a moral-trust violation compared to a performance-trust violation? And can physiological signals be used to assess the gain or loss of each of these two trust aspects in a human? We aim to design an experiment to study the effects of performance-trust violation and moral-trust violation separately in a search and rescue task. We want to see whether two robot failures of equal magnitude would affect human trust differently if one failure is due to a performance-trust violation and the other is due to a moral-trust violation.
