Evaluation of Performance-Trust vs Moral-Trust Violation in 3D Environment

Jun 30, 2022

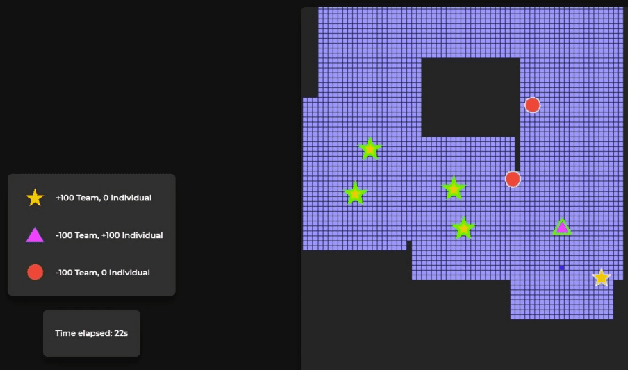

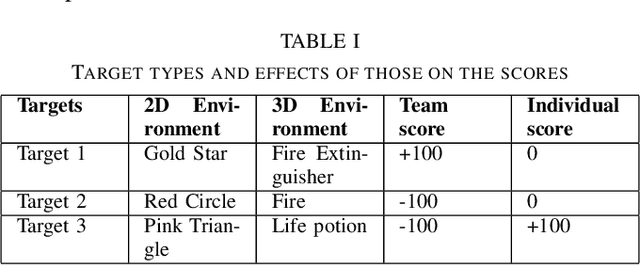

Human-Robot Interaction, in which a robot with some level of autonomy interacts with a human to achieve a specific goal, has seen much recent progress. With the introduction of autonomous robots and the possibility of their widespread use in the near future, it is critical that humans understand a robot's intentions while interacting with it, as this fosters the development of human-robot trust. A conceptualization of trust introduced by researchers in recent years treats trust in Human-Robot Interaction as multidimensional. Its two main dimensions are performance trust and moral trust. We aim to design an experiment to investigate the consequences of performance-trust violations and moral-trust violations in a search and rescue scenario. We want to see whether two similar robot failures, one caused by a performance-trust violation and the other by a moral-trust violation, have distinct effects on human trust. In addition, we plan to develop an interface that allows us to investigate whether changing the interface's modality from a grid-world scenario (2D environment) to a realistic simulation (3D environment) affects human perception of the task and the effects of the robot's failure on human trust.
