Yuzhu Sun

A Twin Delayed Deep Deterministic Policy Gradient Algorithm for Autonomous Ground Vehicle Navigation via Digital Twin Perception Awareness

Mar 22, 2024

Abstract:Autonomous ground vehicle (UGV) navigation has the potential to revolutionize the transportation system by increasing accessibility for disabled people and ensuring safety and convenience of use. However, UGVs require extensive and efficient testing and evaluation to ensure their acceptance for public use. This testing is mostly done in a simulator, which results in a sim2real transfer gap. In this paper, we propose a digital twin perception awareness approach for the control of robot navigation without prior creation of the virtual environment (VT) state. To achieve this, we develop a twin delayed deep deterministic policy gradient (TD3) algorithm that ensures collision avoidance and goal-based path planning. We demonstrate the performance of our approach on different environment dynamics. We show that our approach navigates efficiently to its desired destination while safely avoiding collisions with obstacles, using the information received from the LIDAR sensor mounted on the robot. Our approach bridges the sim-to-real transfer gap and contributes to the adoption of UGVs in the real world. We validate our approach in simulation and in a real-world application in an office space.
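For readers unfamiliar with TD3, the following is a minimal sketch of its two best-known ingredients: target policy smoothing and the clipped double-Q target. It is not the authors' implementation. The function names, the scalar action, and the default hyperparameters are illustrative assumptions; the abstract does not state the paper's network sizes, reward, or noise settings.

```python
import random


def smoothed_target_action(mu_next, noise_std=0.2, noise_clip=0.5,
                           act_low=-1.0, act_high=1.0, rng=random):
    """Target policy smoothing for a scalar action (hypothetical helper).

    mu_next is the target actor's output for the next state. Clipped
    Gaussian noise is added, then the result is clipped to the action range.
    """
    noise = rng.gauss(0.0, noise_std)
    noise = max(-noise_clip, min(noise_clip, noise))
    return max(act_low, min(act_high, mu_next + noise))


def td3_target(reward, done, q1_next, q2_next, gamma=0.99):
    """Clipped double-Q target: bootstrap from the smaller of the two critics.

    q1_next and q2_next are the two target critics evaluated at the next
    state and the smoothed target action. Terminal transitions do not bootstrap.
    """
    not_done = 0.0 if done else 1.0
    return reward + gamma * not_done * min(q1_next, q2_next)
```

Taking the minimum of the two critics is what counters the overestimation bias of a single-critic DDPG update; the "delayed" part of TD3 (updating the actor less often than the critics) lives in the training loop and is not shown.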

Digital Twin-Driven Reinforcement Learning for Obstacle Avoidance in Robot Manipulators: A Self-Improving Online Training Framework

Mar 19, 2024

Abstract:The evolution and growing automation of collaborative robots introduce more complexity and unpredictability to systems, highlighting the crucial need for robots' adaptability and flexibility to address the increasing complexities of their environment. In typical industrial production scenarios, robots often need to be re-programmed when facing a more demanding task or even a few changes in workspace conditions. To increase productivity and efficiency and to reduce human effort in the design process, this paper explores the potential of using a digital twin combined with Reinforcement Learning (RL) to enable robots to generate self-improving collision-free trajectories in real time. The digital twin, acting as a virtual counterpart of the physical system, serves as a 'forward run' for monitoring, controlling, and optimizing the physical system in a safe and cost-effective manner. The physical system sends data to synchronize the digital system through the video feeds from cameras, which allows the virtual robot to update its observation and policy based on real scenarios. The bidirectional communication between digital and physical systems provides a promising platform for hardware-in-the-loop RL training through trial and error until the robot successfully adapts to its new environment. The proposed online training framework is demonstrated on the Unfactory Xarm5 collaborative robot, where the robot end-effector aims to reach the target position while avoiding obstacles. The experiments suggest that the proposed framework is capable of online policy training, and that there remains significant room for improvement.

Adaptive Safety-critical Control with Uncertainty Estimation for Human-robot Collaboration

Apr 14, 2023

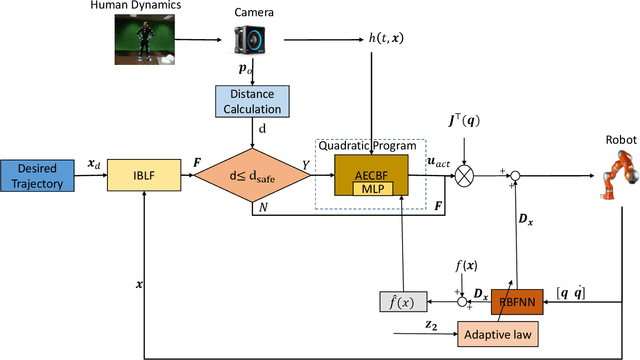

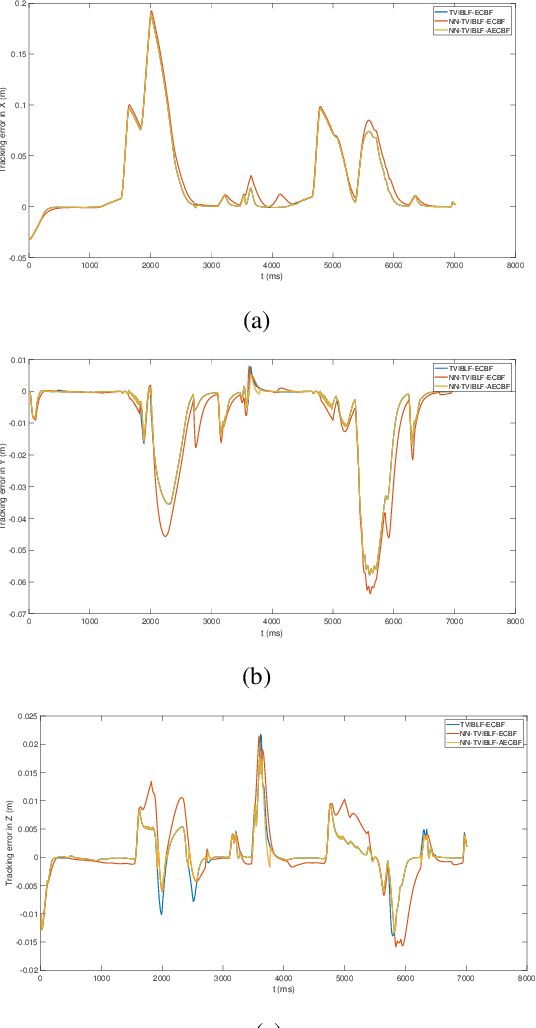

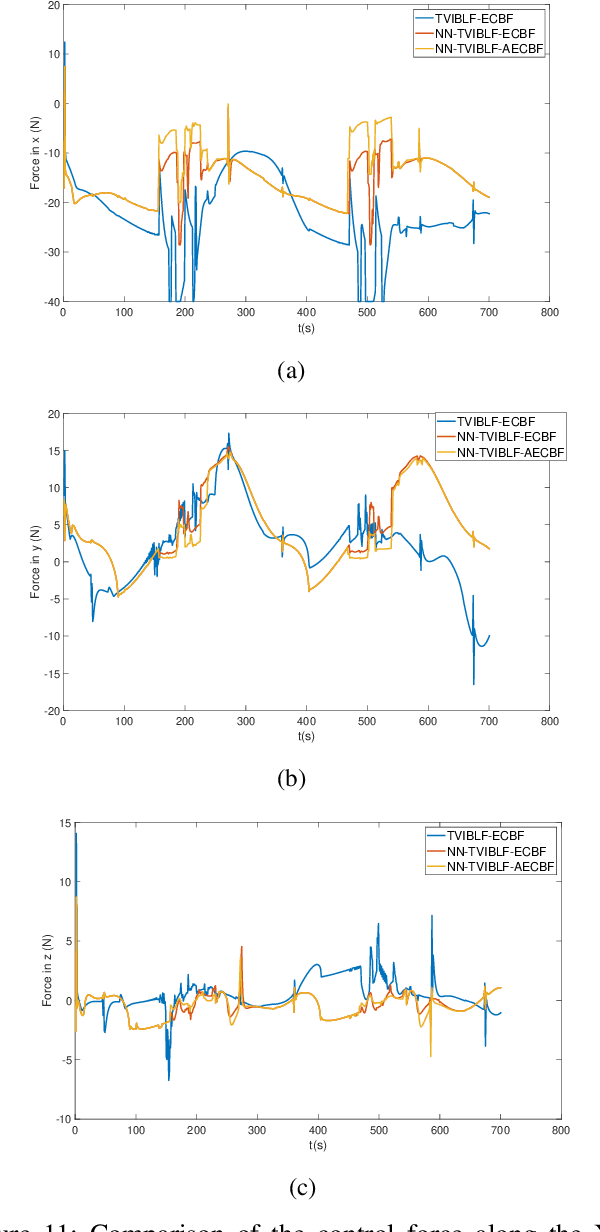

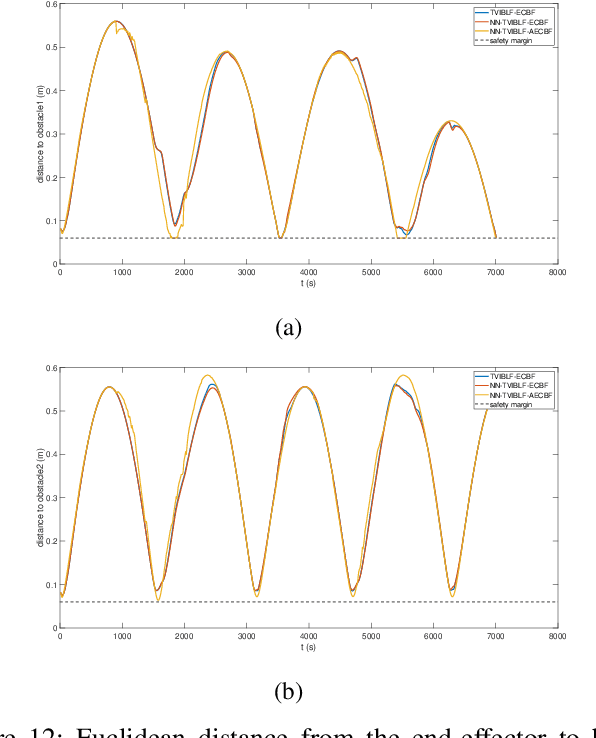

Abstract:In advanced manufacturing, strict safety guarantees are required to allow humans and robots to work together in a shared workspace. One of the challenges in this application field is the variety and unpredictability of human behavior, leading to potential dangers for human coworkers. This paper presents a novel control framework by adopting safety-critical control and uncertainty estimation for human-robot collaboration. Additionally, to select the shortest path during collaboration, a novel quadratic penalty method is presented. The innovation of the proposed approach is that the proposed controller will prevent the robot from violating any safety constraints even in cases where humans move accidentally in a collaboration task. This is implemented by the combination of a time-varying integral barrier Lyapunov function (TVIBLF) and an adaptive exponential control barrier function (AECBF) to achieve a flexible mode switch between path tracking and collision avoidance with guaranteed closed-loop system stability. The performance of our approach is demonstrated in simulation studies on a 7-DOF robot manipulator. Additionally, a comparison between the tasks involving static and dynamic targets is provided.

Fixed-time Adaptive Neural Control for Physical Human-Robot Collaboration with Time-Varying Workspace Constraints

Mar 04, 2023

Abstract:Physical human-robot collaboration (pHRC) requires both compliance and safety guarantees since robots coordinate with human actions in a shared workspace. This paper presents a novel fixed-time adaptive neural control methodology for handling time-varying workspace constraints that occur in physical human-robot collaboration while also guaranteeing compliance during intended force interactions. The proposed methodology combines the benefits of compliance control, time-varying integral barrier Lyapunov function (TVIBLF) and fixed-time techniques, which not only achieve compliance during physical contact with human operators but also guarantee time-varying workspace constraints and fast tracking error convergence without any restriction on the initial conditions. Furthermore, a neural adaptive control law is designed to compensate for the unknown dynamics and disturbances of the robot manipulator such that the proposed control framework is overall fixed-time converged and capable of online learning without any prior knowledge of robot dynamics and disturbances. The proposed approach is finally validated on a simulated two-link robot manipulator. Simulation results show that the proposed controller is superior in the sense of both tracking error and convergence time compared with the existing barrier Lyapunov functions based controllers, while simultaneously guaranteeing compliance and safety.

Reinforcement Learning-Enhanced Control Barrier Functions for Robot Manipulators

Nov 21, 2022

Abstract:In this paper we present the implementation of a Control Barrier Function (CBF) using a quadratic program (QP) formulation that provides obstacle avoidance for a robotic manipulator arm system. CBF is a control technique that has emerged and developed over the past decade and has been extensively explored in the literature on its mathematical foundations, proof of set invariance and potential applications for a variety of safety-critical control systems. In this work we look at the design of a CBF for robotic manipulator obstacle avoidance, discuss the selection of the CBF parameters, and present a Reinforcement Learning (RL) scheme to assist with finding parameter values that provide the most efficient trajectory to successfully avoid differently sized obstacles. We then create a data-set across a range of scenarios, which is used to train a Neural-Network (NN) model that can be used within the control scheme to allow the system to efficiently adapt to different obstacle scenarios. Computer simulations (based on Matlab/Simulink) demonstrate the effectiveness of the proposed algorithm.
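As an illustration of the CBF-QP idea, here is a minimal sketch for a much simpler system than the paper's manipulator: a point with single-integrator dynamics (velocity is the control input) avoiding one circular obstacle. With a single affine constraint the QP has a closed-form solution, so no solver is needed. The dynamics, the barrier h(x) = ||x - c||^2 - r^2, the function name, and the gain `alpha` are all assumptions made for this example; `alpha` stands in for the kind of CBF parameter that the abstract says is tuned with RL.

```python
def cbf_safe_velocity(x, u_nom, center, radius, alpha=1.0):
    """Minimally modify u_nom so that the CBF condition holds.

    Solves  min ||u - u_nom||^2  s.t.  dh/dx . u + alpha * h(x) >= 0
    for x_dot = u and h(x) = ||x - center||^2 - radius^2 (safe when h >= 0).
    """
    d = [xi - ci for xi, ci in zip(x, center)]
    h = sum(di * di for di in d) - radius ** 2
    a = [2.0 * di for di in d]          # gradient of h
    b = -alpha * h                      # constraint reads a . u >= b
    slack = sum(ai * ui for ai, ui in zip(a, u_nom)) - b
    if slack >= 0.0:
        return list(u_nom)              # nominal command is already safe
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("gradient vanishes at the obstacle center")
    # Project u_nom onto the half-space boundary a . u = b.
    scale = -slack / norm_sq
    return [ui + scale * ai for ui, ai in zip(u_nom, a)]
```

For example, at `x = (2, 0)` with a unit obstacle at the origin and a nominal command of `(-5, 0)` heading straight at it, the filter slows the approach while leaving commands that move away from the obstacle untouched. A larger `alpha` lets the point approach the boundary more aggressively, which is why its choice affects trajectory efficiency.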

Fixed-time Integral Sliding Mode Control for Admittance Control of a Robot Manipulator

Aug 09, 2022

Abstract:This paper proposes a novel fixed-time integral sliding mode controller for admittance control to enhance physical human-robot collaboration. The proposed method combines the compliance to external forces of admittance control with the high robustness to uncertainties of integral sliding mode control (ISMC), such that the system can collaborate effectively with a human partner in an uncertain environment. Firstly, a fixed-time sliding surface is applied in the ISMC to make the tracking error of the system converge within a fixed time regardless of the initial condition. Then, a fixed-time backstepping controller (BSP) is integrated into the ISMC as the nominal controller to realize global fixed-time convergence. Furthermore, to overcome the singularity problem, a non-singular fixed-time sliding surface is designed and integrated into the controller, which is useful for practical application. Finally, the proposed controller is validated for a two-link robot manipulator with uncertainties and external human forces. The results show that the proposed controller is superior in the sense of both tracking error and convergence time, and at the same time, can comply with human motion in a shared workspace.

Adaptive Admittance Control for Safety-Critical Physical Human Robot Collaboration

Aug 09, 2022

Abstract:Physical human-robot collaboration requires strict safety guarantees since robots and humans work in a shared workspace. This letter presents a novel control framework to handle safety-critical position-based constraints for human-robot physical interaction. The proposed methodology is based on admittance control, exponential control barrier functions (ECBFs) and quadratic program (QP) to achieve compliance during the force interaction between human and robot, while simultaneously guaranteeing safety constraints. In particular, the formulation of admittance control is rewritten as a second-order nonlinear control system, and the interaction forces between humans and robots are regarded as the control input. A virtual force feedback for admittance control is provided in real-time by using the ECBFs-QP framework as a compensator of the external human forces. A safe trajectory is therefore derived from the proposed adaptive admittance control scheme for a low-level controller to track. The innovation of the proposed approach is that the proposed controller will enable the robot to comply with human forces with natural fluidity without violation of any safety constraints even in cases where human external forces incidentally force the robot to violate constraints. The effectiveness of our approach is demonstrated in simulation studies on a two-link planar robot manipulator.
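The admittance part of this scheme can be pictured with a one-dimensional sketch. It assumes the standard mass-damper-spring admittance model M x'' + D x' + K (x - x_ref) = f_ext, integrated with a semi-implicit Euler step; the parameter values and function name are illustrative, and the ECBF-QP virtual force described in the abstract is deliberately left out.

```python
def simulate_admittance(f_ext, x_ref=0.0, mass=1.0, damping=20.0,
                        stiffness=100.0, dt=0.001, steps=5000):
    """Integrate a 1-D admittance model driven by a constant external force.

    Model (assumed): mass*x'' + damping*x' + stiffness*(x - x_ref) = f_ext.
    Returns the compliant reference position after `steps` time steps.
    """
    x, v = x_ref, 0.0
    for _ in range(steps):
        acc = (f_ext - damping * v - stiffness * (x - x_ref)) / mass
        v += acc * dt      # update velocity first (semi-implicit Euler)
        x += v * dt        # then position, using the new velocity
    return x
```

Under a constant push the reference settles at an offset of `f_ext / stiffness` from `x_ref`, so a 10 N force with the default stiffness yields roughly 0.1 m of compliance. In the paper's framework, a safety compensator would add a virtual force to `f_ext` whenever this compliant motion threatened a position constraint.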

FNA++: Fast Network Adaptation via Parameter Remapping and Architecture Search

Jun 21, 2020

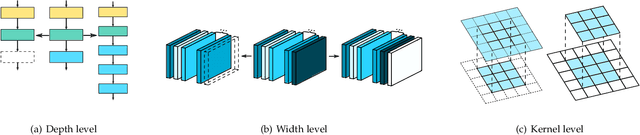

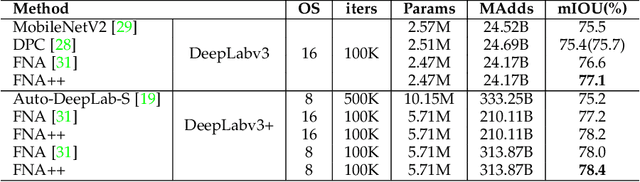

Abstract:Deep neural networks achieve remarkable performance in many computer vision tasks. Most state-of-the-art (SOTA) semantic segmentation and object detection approaches reuse neural network architectures designed for image classification as the backbone, commonly pre-trained on ImageNet. However, performance gains can be achieved by designing network architectures specifically for detection and segmentation, as shown by recent neural architecture search (NAS) research for detection and segmentation. One major challenge though is that ImageNet pre-training of the search space representation (a.k.a. super network) or the searched networks incurs huge computational cost. In this paper, we propose a Fast Network Adaptation (FNA++) method, which can adapt both the architecture and parameters of a seed network (e.g. an ImageNet pre-trained network) to become a network with different depths, widths, or kernel sizes via a parameter remapping technique, making it possible to use NAS for segmentation/detection tasks a lot more efficiently. In our experiments, we conduct FNA++ on MobileNetV2 to obtain new networks for semantic segmentation, object detection, and human pose estimation that clearly outperform existing networks designed both manually and by NAS. We also implement FNA++ on ResNets and NAS networks, which demonstrates a great generalization ability. The total computation cost of FNA++ is significantly less than SOTA segmentation/detection NAS approaches: 1737x less than DPC, 6.8x less than Auto-DeepLab, and 8.0x less than DetNAS. The code will be released at https://github.com/JaminFong/FNA.
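To make "parameter remapping" across kernel sizes concrete, the sketch below shows one plausible scheme for a single 2-D kernel: place the seed kernel in the center of a larger kernel and fill the border with zeros. The abstract does not spell out the remapping rule, so this is an assumption, as are the function name and the center-crop fallback for shrinking a kernel.

```python
def remap_kernel(seed, target_size):
    """Remap a square, odd-sized kernel (list of rows) to another odd size.

    Growing: seed weights go to the central region, the border is zero.
    Shrinking: the central region is cropped (an assumption of this sketch).
    """
    k = len(seed)
    if k % 2 == 0 or target_size % 2 == 0:
        raise ValueError("kernel sizes must be odd")
    if target_size >= k:
        off = (target_size - k) // 2
        out = [[0.0] * target_size for _ in range(target_size)]
        for i in range(k):
            for j in range(k):
                out[off + i][off + j] = seed[i][j]
        return out
    off = (k - target_size) // 2
    return [row[off:off + target_size] for row in seed[off:off + target_size]]
```

A zero-padded 5x5 kernel applied with padding 2 computes the same output as the original 3x3 kernel with padding 1, so an enlarged layer initialized this way starts from the seed network's behavior instead of from random weights. That property is what would let adaptation skip a fresh ImageNet pre-training of each candidate.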

Fast Neural Network Adaptation via Parameter Remapping and Architecture Search

Jan 08, 2020

Abstract:Deep neural networks achieve remarkable performance in many computer vision tasks. Most state-of-the-art (SOTA) semantic segmentation and object detection approaches reuse neural network architectures designed for image classification as the backbone, commonly pre-trained on ImageNet. However, performance gains can be achieved by designing network architectures specifically for detection and segmentation, as shown by recent neural architecture search (NAS) research for detection and segmentation. One major challenge, though, is that ImageNet pre-training of the search space representation (a.k.a. super network) or the searched networks incurs huge computational cost. In this paper, we propose a Fast Neural Network Adaptation (FNA) method, which can adapt both the architecture and parameters of a seed network (e.g. a high performing manually designed backbone) to become a network with different depth, width, or kernels via a Parameter Remapping technique, making it possible to utilize NAS for detection/segmentation tasks a lot more efficiently. In our experiments, we conduct FNA on MobileNetV2 to obtain new networks for both segmentation and detection that clearly outperform existing networks designed both manually and by NAS. The total computation cost of FNA is significantly less than SOTA segmentation/detection NAS approaches: 1737x less than DPC, 6.8x less than Auto-DeepLab, and 7.4x less than DetNAS. The code is available at https://github.com/JaminFong/FNA.

Densely Connected Search Space for More Flexible Neural Architecture Search

Jun 23, 2019

Abstract:In recent years, neural architecture search (NAS) has dramatically advanced the development of neural network design. While most previous works are computationally intensive, differentiable NAS methods reduce the search cost by constructing a super network in a continuous space covering all possible architectures to search for. However, few of them can search for the network width (the number of filters/channels) because it is intractable to integrate architectures with different widths into one super network following the conventional differentiable NAS paradigm. In this paper, we propose a novel differentiable NAS method which can search for the width and the spatial resolution of each block simultaneously. We achieve this by constructing a densely connected search space and name our method DenseNAS. Blocks with different width and spatial resolution combinations are densely connected to each other. The best path in the super network is selected by optimizing the transition probabilities between blocks. As a result, the overall depth distribution of the network is optimized globally in a graceful manner. In the experiments, DenseNAS obtains an architecture with 75.9% top-1 accuracy on ImageNet with a latency as low as 24.3ms on a single TITAN-XP. The total search time is merely 23 hours on 4 GPUs.
