Yunyi Yang

UBARv2: Towards Mitigating Exposure Bias in Task-Oriented Dialogs

Sep 15, 2022

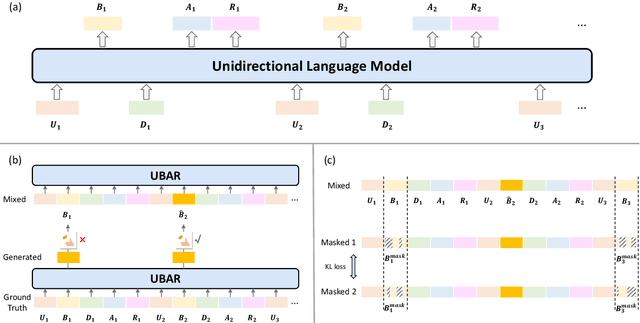

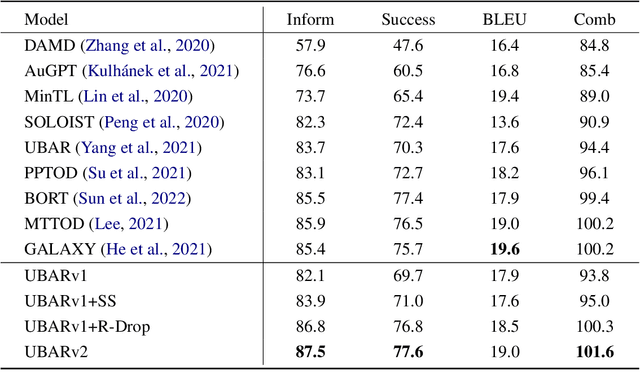

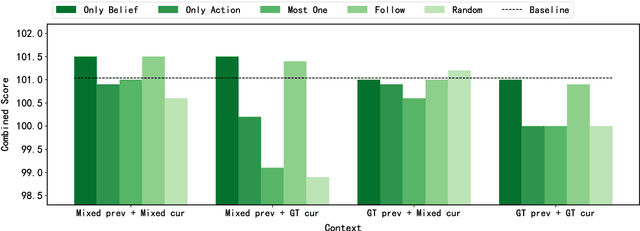

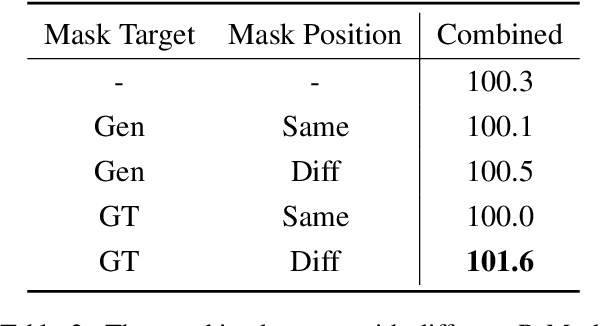

Abstract: This paper studies the exposure bias problem in task-oriented dialog systems, where the model's generated content over multiple turns drives the dialog context away from the ground-truth distribution at training time, introducing error propagation and damaging the robustness of the TOD system. To bridge the gap between training and inference for multi-turn task-oriented dialogs, we propose session-level sampling, which explicitly exposes the model to sampled generated content of dialog context during training. Additionally, we employ a dropout-based consistency regularization with the masking strategy R-Mask to further improve the robustness and performance of the model. The proposed UBARv2 achieves state-of-the-art performance on the standardized evaluation benchmark MultiWOZ, and extensive experiments show the effectiveness of the proposed methods.

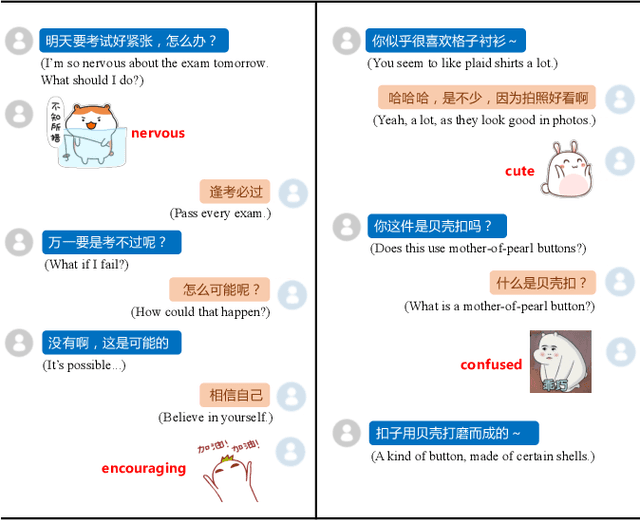

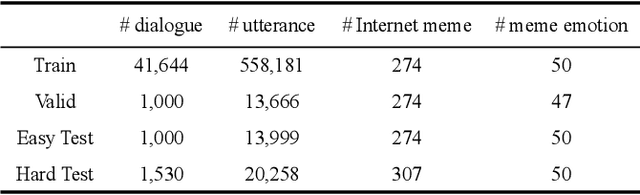

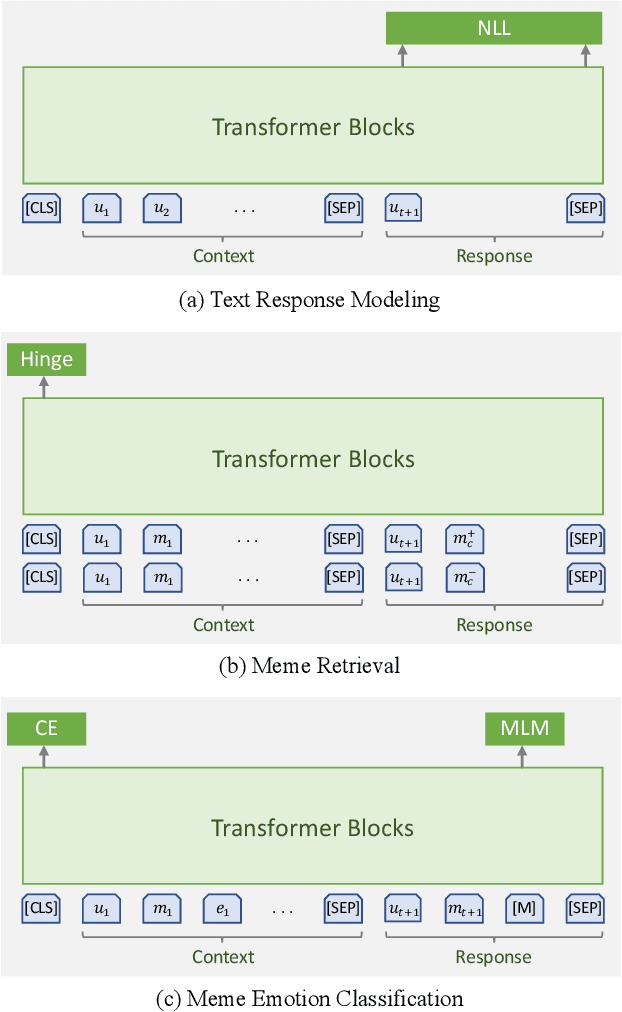

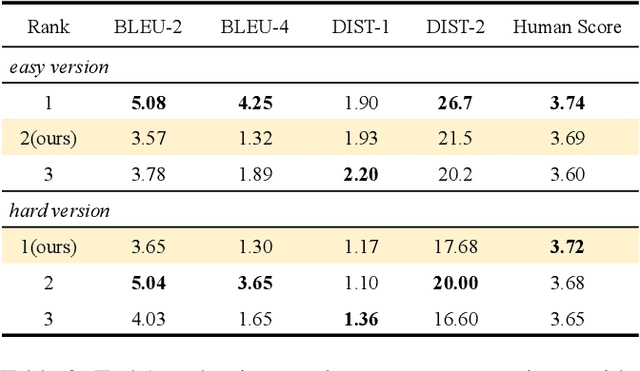

Towards Building an Open-Domain Dialogue System Incorporated with Internet Memes

Mar 08, 2022

Abstract: In recent years, Internet memes have been widely used in online chatting. Compared with text-based communication, conversations become more expressive and attractive when Internet memes are incorporated. This paper presents our solutions for the Meme incorporated Open-domain Dialogue (MOD) Challenge of DSTC10, where three tasks are involved: text response modeling, meme retrieval, and meme emotion classification. Firstly, we leverage a large-scale pre-trained dialogue model for coherent and informative response generation. Secondly, based on interaction-based text-matching, our approach can retrieve appropriate memes with good generalization ability. Thirdly, we propose to model the emotion flow (EF) in conversations and introduce an auxiliary task of emotion description prediction (EDP) to boost the performance of meme emotion classification. Experimental results on the MOD dataset demonstrate that our methods can incorporate Internet memes into dialogue systems effectively.

Amendable Generation for Dialogue State Tracking

Oct 29, 2021

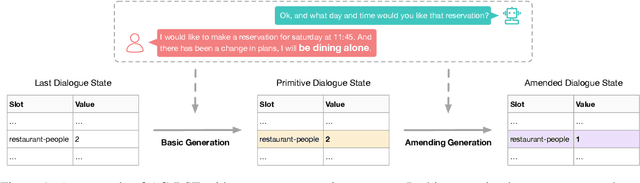

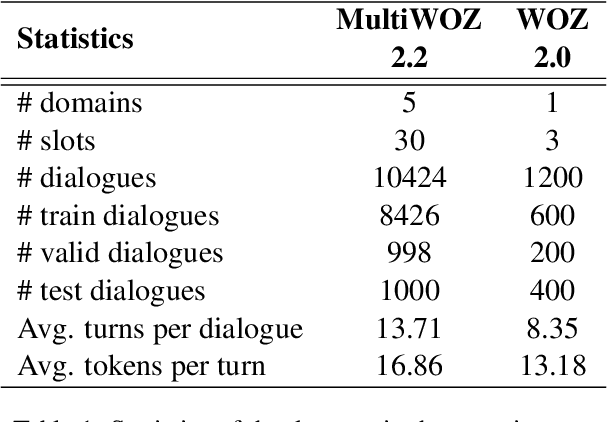

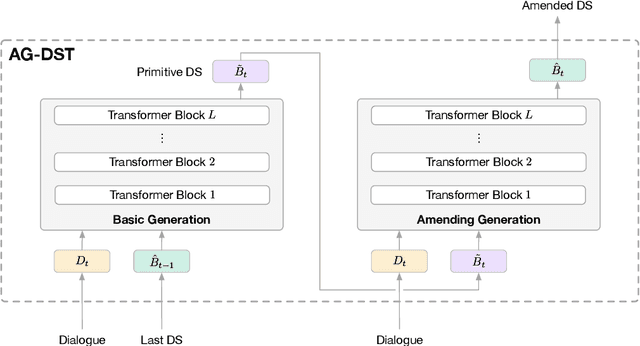

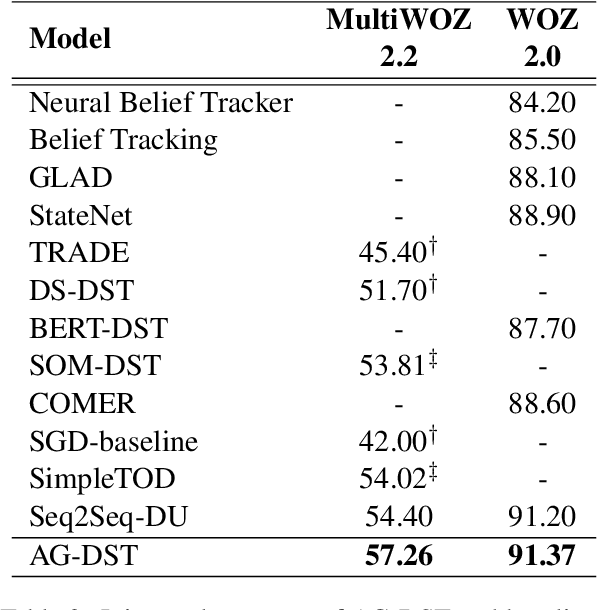

Abstract: In task-oriented dialogue systems, recent dialogue state tracking methods tend to perform one-pass generation of the dialogue state based on the previous dialogue state. Mistakes these models make at the current turn are prone to be carried over to the next turn, causing error propagation. In this paper, we propose a novel Amendable Generation for Dialogue State Tracking (AG-DST), which contains a two-pass generation process: (1) generating a primitive dialogue state based on the dialogue of the current turn and the previous dialogue state, and (2) amending the primitive dialogue state from the first pass. With the additional amending generation pass, our model is tasked to learn more robust dialogue state tracking by amending the errors that still exist in the primitive dialogue state. The amending pass plays the role of a reviser in a double-checking process and alleviates unnecessary error propagation. Experimental results show that AG-DST significantly outperforms previous works on two active DST datasets (MultiWOZ 2.2 and WOZ 2.0), achieving new state-of-the-art performance.
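
The two-pass process described in this abstract can be pictured with a minimal Python sketch. Everything named here is hypothetical: `track_turn`, `toy_basic_pass`, and `toy_amend_pass` are illustrative stand-ins rather than the AG-DST model, and passing the current-turn dialogue to the amending pass is an assumption the abstract does not spell out.

```python
from typing import Callable, Dict

State = Dict[str, str]                    # slot name -> slot value
PassFn = Callable[[str, State], State]    # (turn dialogue, state) -> new state


def track_turn(turn_dialogue: str, prev_state: State,
               basic_pass: PassFn, amend_pass: PassFn) -> State:
    """Run one turn of two-pass dialogue state tracking."""
    # Pass 1: primitive state from the current turn and the previous state.
    primitive = basic_pass(turn_dialogue, prev_state)
    # Pass 2: revise the primitive state (assumption: it also sees the turn).
    return amend_pass(turn_dialogue, primitive)


def toy_basic_pass(turn_dialogue: str, prev_state: State) -> State:
    # Hypothetical first pass that makes a deliberate spelling error.
    state = dict(prev_state)
    if "cheap" in turn_dialogue:
        state["hotel-pricerange"] = "cheep"
    return state


def toy_amend_pass(turn_dialogue: str, primitive: State) -> State:
    # Hypothetical second pass that corrects known slot-value errors.
    fixes = {"cheep": "cheap"}
    return {slot: fixes.get(value, value) for slot, value in primitive.items()}


state = track_turn("i want a cheap hotel", {"hotel-area": "north"},
                   toy_basic_pass, toy_amend_pass)
```

In this toy run the amending pass repairs the value produced by the first pass before the state is carried into the next turn, which is the error-propagation point the abstract raises.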

Retrieve & Memorize: Dialog Policy Learning with Multi-Action Memory

Jun 27, 2021

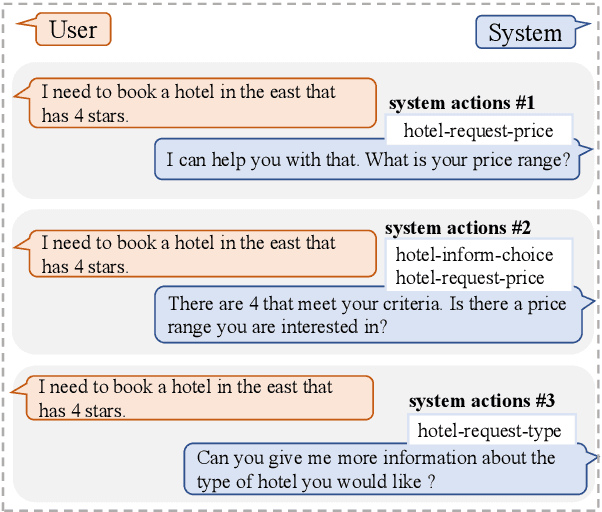

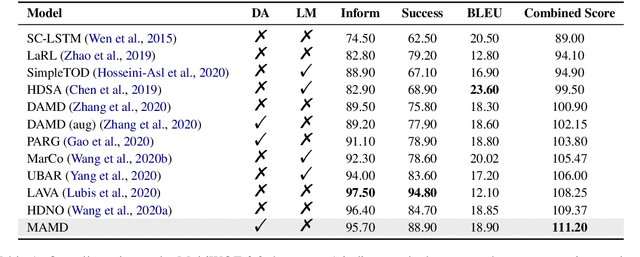

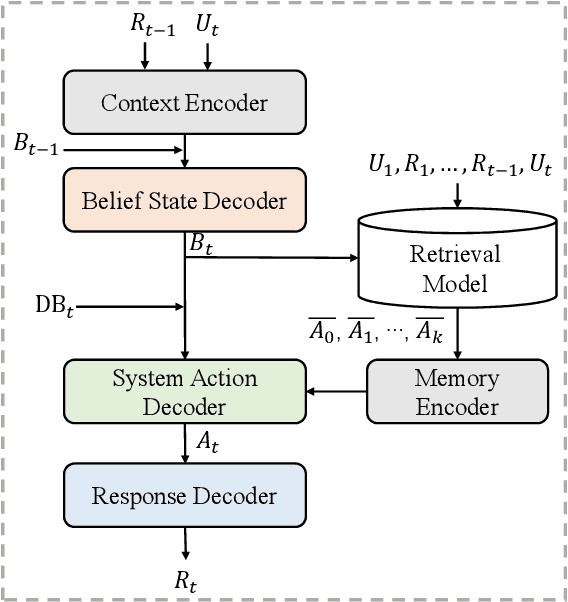

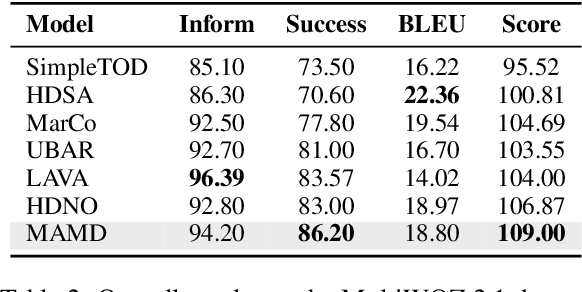

Abstract: Dialogue policy learning, a subtask that determines the content of system response generation and then the degree of task completion, is essential for task-oriented dialogue systems. However, the unbalanced distribution of system actions in dialogue datasets often causes difficulty in learning to generate desired actions and responses. In this paper, we propose a retrieve-and-memorize framework to enhance the learning of system actions. Specifically, we first design a neural context-aware retrieval module to retrieve multiple candidate system actions from the training set given a dialogue context. Then, we propose a memory-augmented multi-decoder network to generate the system actions conditioned on the candidate actions, which allows the network to adaptively select key information in the candidate actions and ignore noise. We conduct experiments on the large-scale multi-domain task-oriented dialogue datasets MultiWOZ 2.0 and MultiWOZ 2.1. Experimental results show that our method achieves competitive performance among several state-of-the-art models in the context-to-response generation task.

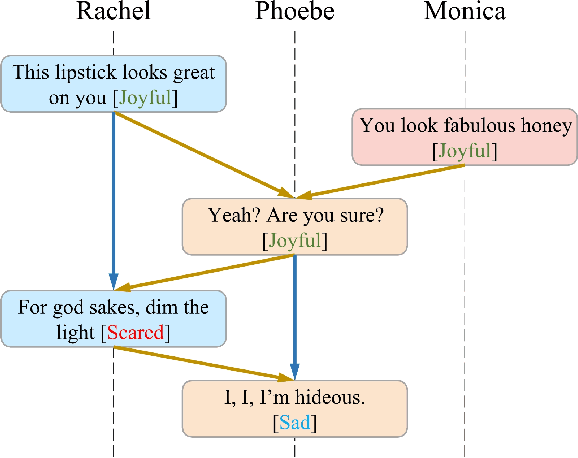

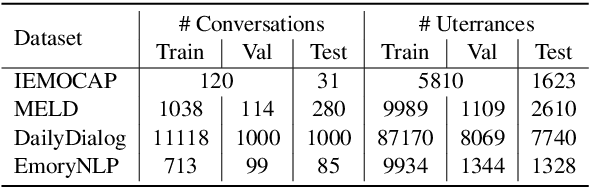

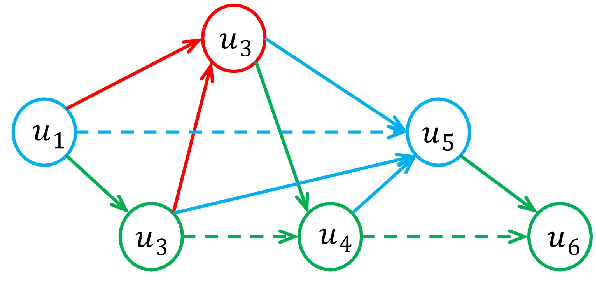

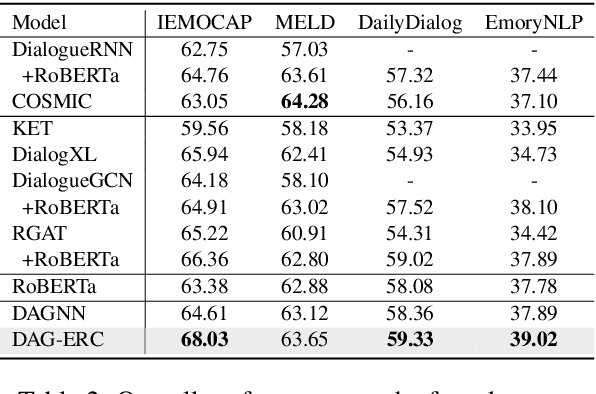

Directed Acyclic Graph Network for Conversational Emotion Recognition

May 27, 2021

Abstract: The modeling of conversational context plays a vital role in emotion recognition from conversation (ERC). In this paper, we put forward a novel idea of encoding the utterances with a directed acyclic graph (DAG) to better model the intrinsic structure within a conversation, and design a directed acyclic neural network, namely DAG-ERC, to implement this idea. In an attempt to combine the strengths of conventional graph-based neural models and recurrence-based neural models, DAG-ERC provides a more intuitive way to model the information flow between long-distance conversation background and nearby context. Extensive experiments are conducted on four ERC benchmarks with state-of-the-art models employed as baselines for comparison. The empirical results demonstrate the superiority of this new model and confirm the motivation of the directed acyclic graph architecture for ERC.

UBAR: Towards Fully End-to-End Task-Oriented Dialog Systems with GPT-2

Dec 07, 2020

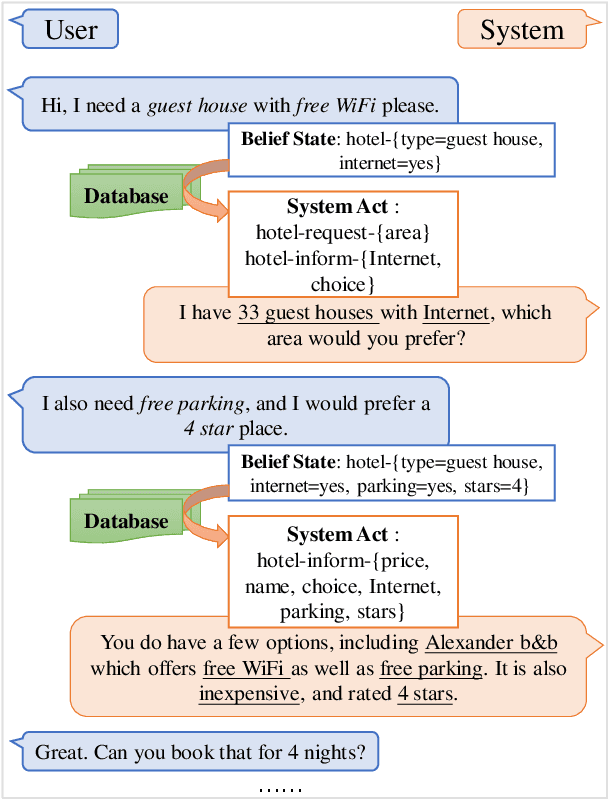

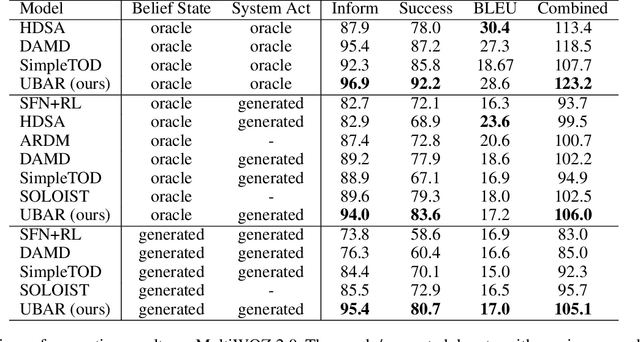

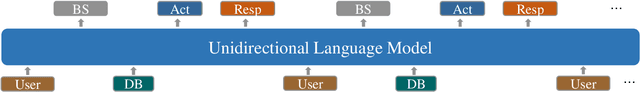

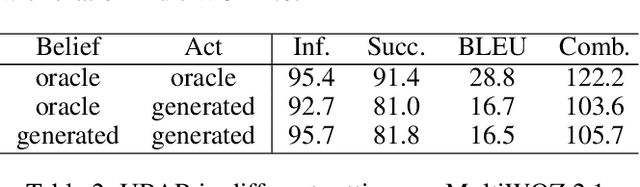

Abstract: This paper presents our task-oriented dialog system UBAR, which models task-oriented dialogs on a dialog session level. Specifically, UBAR is acquired by fine-tuning the large pre-trained unidirectional language model GPT-2 on the sequence of the entire dialog session, which is composed of user utterance, belief state, database result, system act, and system response of every dialog turn. Additionally, UBAR is evaluated in a more realistic setting, where its dialog context has access to user utterances and all content it generated such as belief states, system acts, and system responses. Experimental results on the MultiWOZ datasets show that UBAR achieves state-of-the-art performance in multiple settings, improving the combined score of response generation, policy optimization, and end-to-end modeling by 4.7, 3.5, and 9.4 points respectively. Thorough analyses demonstrate that the session-level training sequence formulation and the generated dialog context are essential for UBAR to operate as a fully end-to-end task-oriented dialog system in real life. We also examine the transfer ability of UBAR to new domains with limited data and provide visualization and a case study to illustrate the advantages of UBAR in modeling on a dialog session level.
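
As a rough illustration of the session-level sequence this abstract describes, the Python sketch below flattens every turn of a dialog into one string in the order user utterance, belief state, database result, system act, system response. The `Turn` class, the `flatten_session` helper, and the delimiter tokens such as `<user>` are assumptions made for illustration; they are not taken from the paper or its code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Turn:
    user: str       # user utterance
    belief: str     # belief state
    db: str         # database result
    act: str        # system act
    response: str   # system response


def flatten_session(turns: List[Turn]) -> str:
    """Concatenate all turns of a session into one training sequence."""
    parts: List[str] = []
    for t in turns:
        # Hypothetical delimiter tokens mark each component of the turn.
        parts += [
            f"<user> {t.user}",
            f"<belief> {t.belief}",
            f"<db> {t.db}",
            f"<act> {t.act}",
            f"<resp> {t.response}",
        ]
    return " ".join(parts)


session = [
    Turn("i need a cheap hotel", "hotel pricerange=cheap", "3 matches",
         "request area", "which area do you prefer?"),
    Turn("the north please", "hotel pricerange=cheap area=north", "1 match",
         "inform name", "i found one hotel in the north."),
]
sequence = flatten_session(session)
```

A language model fine-tuned on such a sequence sees earlier belief states, acts, and responses as part of its context, which is the session-level view the abstract contrasts with turn-level modeling.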

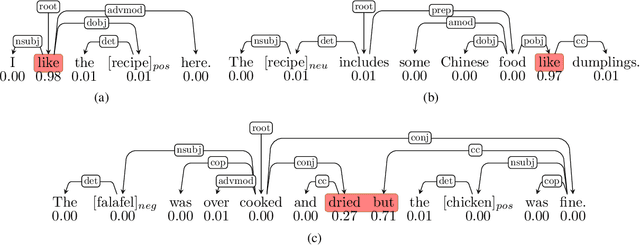

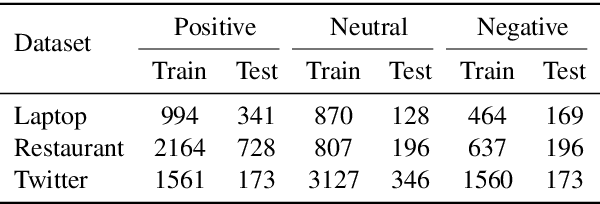

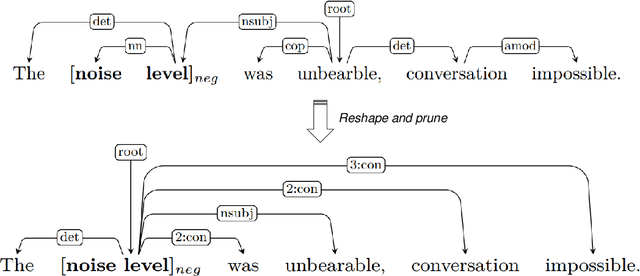

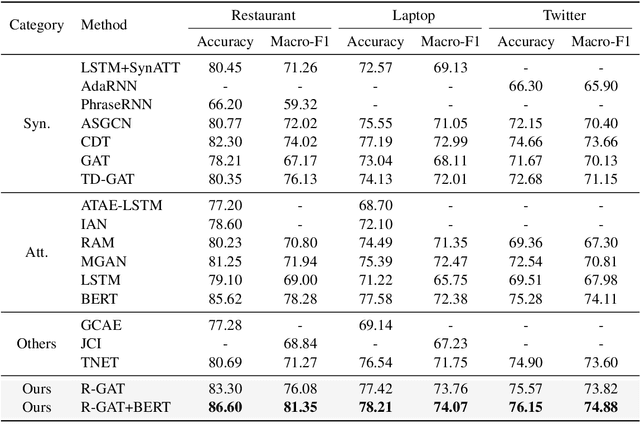

Relational Graph Attention Network for Aspect-based Sentiment Analysis

Apr 26, 2020

Abstract: Aspect-based sentiment analysis aims to determine the sentiment polarity towards a specific aspect in online reviews. Most recent efforts adopt attention-based neural network models to implicitly connect aspects with opinion words. However, due to the complexity of language and the existence of multiple aspects in a single sentence, these models often confuse the connections. In this paper, we address this problem by means of effective encoding of syntax information. Firstly, we define a unified aspect-oriented dependency tree structure rooted at a target aspect by reshaping and pruning an ordinary dependency parse tree. Then, we propose a relational graph attention network (R-GAT) to encode the new tree structure for sentiment prediction. Extensive experiments are conducted on the SemEval 2014 and Twitter datasets, and the experimental results confirm that the connections between aspects and opinion words can be better established with our approach, and the performance of the graph attention network (GAT) is significantly improved as a consequence.
