Yunyi Li

School of Computer Science and Engineering, Hunan University of Science and Technology

Bias-Aware Mislabeling Detection via Decoupled Confident Learning

Jul 09, 2025

Abstract: Reliable data is a cornerstone of modern organizational systems. A notable data integrity challenge stems from label bias: systematic errors in a label, a covariate central to a quantitative analysis, such that its quality differs across social groups. This type of bias has been explored conceptually and empirically and is widely recognized as a pressing issue across critical domains. However, effective methodologies for addressing it remain scarce. In this work, we propose Decoupled Confident Learning (DeCoLe), a principled machine-learning-based framework specifically designed to detect mislabeled instances in datasets affected by label bias, enabling bias-aware mislabeling detection and facilitating data quality improvement. We theoretically justify the effectiveness of DeCoLe and evaluate its performance in the context of hate speech detection, a domain where label bias is a well-documented challenge. Empirical results demonstrate that DeCoLe excels at bias-aware mislabeling detection, consistently outperforming alternative approaches for label error detection. Our work identifies and addresses the challenge of bias-aware mislabeling detection and offers guidance on how DeCoLe can be integrated into organizational data management practices as a tool to enhance data reliability.
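
The abstract does not spell out the algorithm, so the following is only a rough sketch of the general idea its name suggests: apply a confident-learning style rule separately within each social group instead of once over the pooled data. The function names, the thresholding rule (mean predicted probability of a class among the examples labeled with it), and the toy data are all assumptions for illustration, not the authors' implementation.

```python
from collections import defaultdict


def class_thresholds(probs, labels, n_classes):
    """Per-class threshold: mean predicted probability of class k over the
    examples whose observed label is k (a common confident-learning rule)."""
    sums = [0.0] * n_classes
    counts = [0] * n_classes
    for p, y in zip(probs, labels):
        sums[y] += p[y]
        counts[y] += 1
    # A class with no labeled examples gets threshold 1.0 (rarely exceeded).
    return [sums[k] / counts[k] if counts[k] else 1.0 for k in range(n_classes)]


def flag_mislabeled(probs, labels, n_classes=2):
    """Indices whose observed label disagrees with a confidently predicted class."""
    thr = class_thresholds(probs, labels, n_classes)
    flagged = []
    for i, (p, y) in enumerate(zip(probs, labels)):
        confident = [k for k in range(n_classes) if p[k] >= thr[k]]
        if confident and max(confident, key=lambda k: p[k]) != y:
            flagged.append(i)
    return flagged


def decoupled_flag_mislabeled(probs, labels, groups, n_classes=2):
    """Run the rule above separately per group (hypothetical 'decoupling' step)."""
    by_group = defaultdict(list)
    for i, g in enumerate(groups):
        by_group[g].append(i)
    flagged = []
    for idx in by_group.values():
        local = flag_mislabeled(
            [probs[i] for i in idx], [labels[i] for i in idx], n_classes
        )
        flagged.extend(idx[j] for j in local)
    return sorted(flagged)


# Toy data: [P(class 0), P(class 1)] per example; group "b" gets less extreme scores.
probs = [
    [0.10, 0.90], [0.20, 0.80], [0.90, 0.10], [0.05, 0.95],  # group "a"
    [0.40, 0.60], [0.45, 0.55], [0.80, 0.20], [0.42, 0.58],  # group "b"
]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(flag_mislabeled(probs, labels))                    # pooled thresholds: [3]
print(decoupled_flag_mislabeled(probs, labels, groups))  # per-group thresholds: [3, 7]
```

In this toy setup the pooled thresholds are dominated by group "a", so the suspicious label at index 7 in group "b" is only flagged when thresholds are computed per group.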

Mitigating Label Bias via Decoupled Confident Learning

Jul 18, 2023

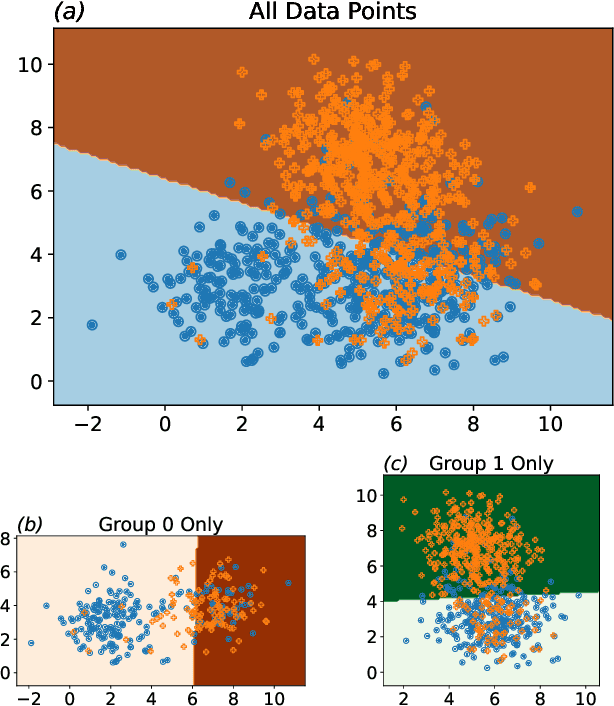

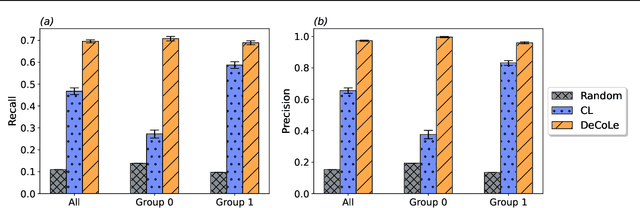

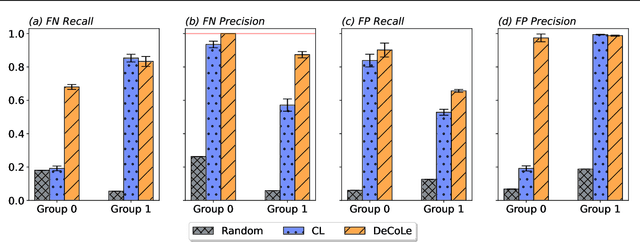

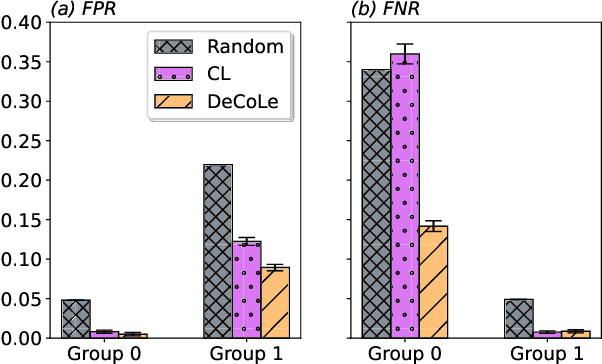

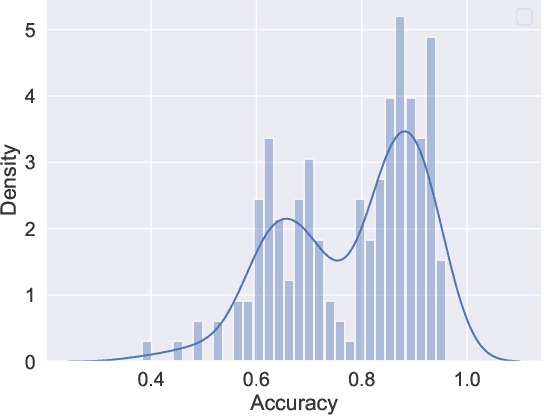

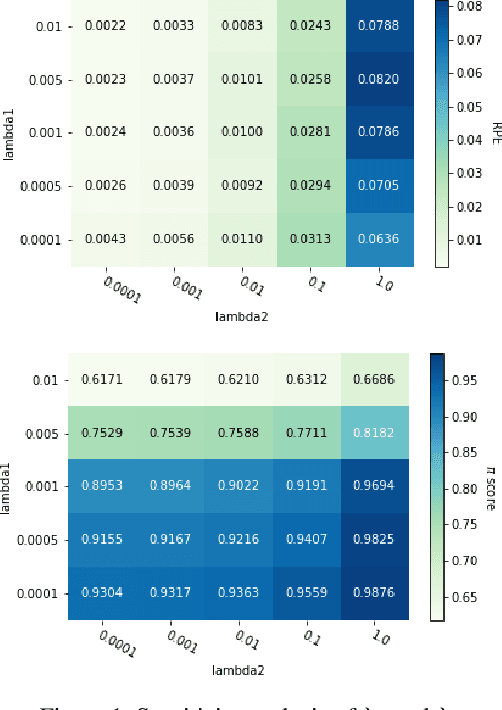

Abstract: Growing concerns regarding algorithmic fairness have led to a surge in methodologies to mitigate algorithmic bias. However, such methodologies largely assume that observed labels in training data are correct. This is problematic because bias in labels is pervasive across important domains, including healthcare, hiring, and content moderation. In particular, human-generated labels are prone to encoding societal biases. While the presence of labeling bias has been discussed conceptually, there is a lack of methodologies to address this problem. We propose a pruning method -- Decoupled Confident Learning (DeCoLe) -- specifically designed to mitigate label bias. After illustrating its performance on a synthetic dataset, we apply DeCoLe in the context of hate speech detection, where label bias has been recognized as an important challenge, and show that it successfully identifies biased labels and outperforms competing approaches.

More Data Can Lead Us Astray: Active Data Acquisition in the Presence of Label Bias

Jul 15, 2022

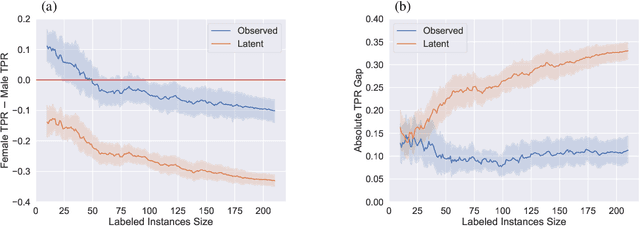

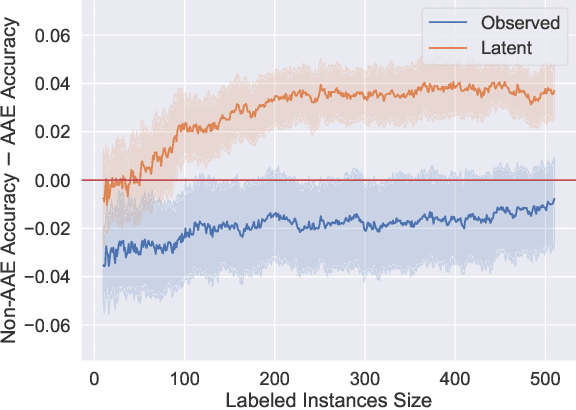

Abstract: An increased awareness of the risks of algorithmic bias has driven a surge of efforts around bias mitigation strategies. The vast majority of the proposed approaches fall under one of two categories: (1) imposing algorithmic fairness constraints on predictive models, and (2) collecting additional training samples. Most recently, at the intersection of these two categories, methods that propose active learning under fairness constraints have been developed. However, proposed bias mitigation strategies typically overlook the bias present in the observed labels. In this work, we study fairness considerations of active data collection strategies in the presence of label bias. We first present an overview of different types of label bias in the context of supervised learning systems. We then empirically show that, when label bias is overlooked, collecting more data can aggravate bias, and imposing fairness constraints that rely on the observed labels in the data collection process may not address the problem. Our results illustrate the unintended consequences of deploying a model that attempts to mitigate a single type of bias while neglecting others, emphasizing the importance of explicitly differentiating between the types of bias that fairness-aware algorithms aim to address, and highlighting the risks of neglecting label bias during data collection.

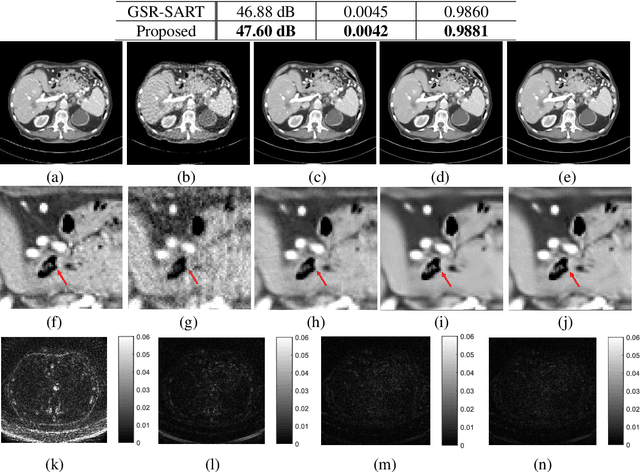

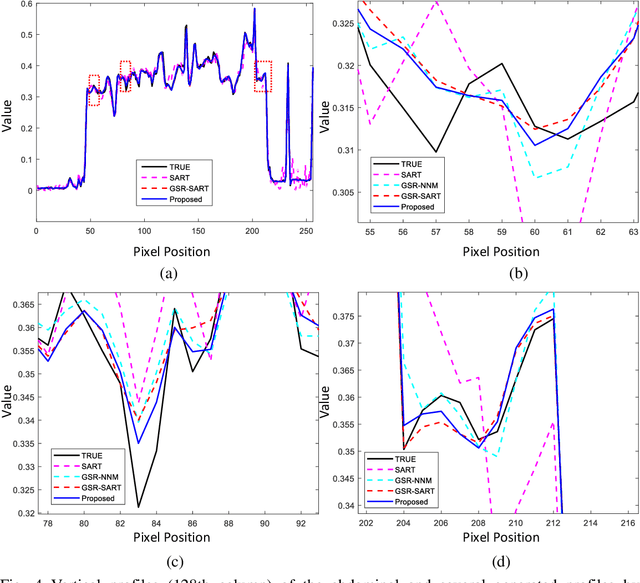

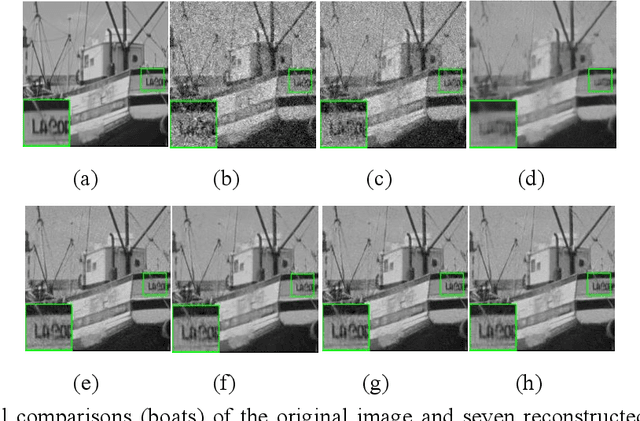

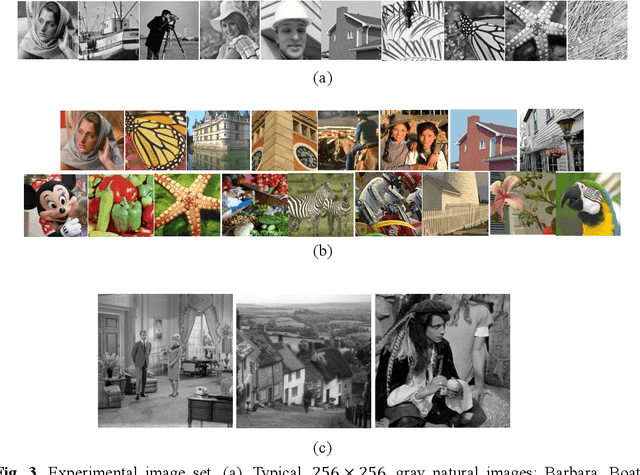

Nonconvex $L_{1/2}$-Regularized Nonlocal Self-similarity Denoiser for Compressive Sensing based CT Reconstruction

May 15, 2022

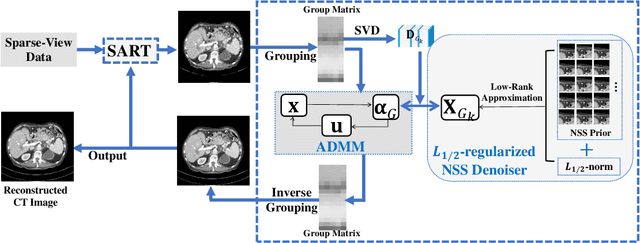

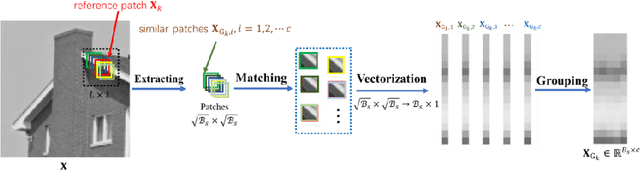

Abstract: Compressive sensing (CS) based computed tomography (CT) image reconstruction aims to reduce radiation risk by using sparse-view projection data. It is usually challenging to achieve satisfactory image quality from incomplete projections. Recently, the nonconvex $L_{1/2}$-norm has achieved promising performance in sparse recovery, but its applications to imaging have been unsatisfactory because of its nonconvexity. In this paper, we develop an $L_{1/2}$-regularized nonlocal self-similarity (NSS) denoiser for the CT reconstruction problem, which integrates low-rank approximation with the group sparse coding (GSC) framework. Concretely, we first split the CT reconstruction problem into two subproblems, and then further improve the CT image quality using our $L_{1/2}$-regularized NSS denoiser. Instead of optimizing the nonconvex problem from the perspective of GSC, we reconstruct the CT image via low-rank minimization based on two simple yet essential schemes, which establish the equivalence between the GSC-based denoiser and low-rank minimization. Furthermore, the weighted singular value thresholding (WSVT) operator is used to optimize the resulting nonconvex $L_{1/2}$ minimization problem. Following this, our proposed denoiser is integrated with the CT reconstruction problem via the alternating direction method of multipliers (ADMM) framework. Extensive experimental results on typical clinical CT images demonstrate that our approach achieves better performance than popular approaches.

Edge-Enhanced Global Disentangled Graph Neural Network for Sequential Recommendation

Nov 23, 2021

Abstract: Sequential recommendation has been a widely popular topic in recommender systems. Existing works have enhanced the prediction ability of sequential recommendation systems using various methods, such as recurrent networks and self-attention mechanisms. However, they fail to discover and distinguish the various relationships between items, which could be underlying factors that motivate user behaviors. In this paper, we propose an Edge-Enhanced Global Disentangled Graph Neural Network (EGD-GNN) model to capture the relation information between items for global item representation and local user intention learning. At the global level, we build a global-link graph over all sequences to model item relationships. Then a channel-aware disentangled learning layer is designed to decompose edge information into different channels, which can be aggregated to represent the target item from its neighbors. At the local level, we apply a variational auto-encoder framework to learn user intention over the current sequence. We evaluate our proposed method on three real-world datasets. Experimental results show that our model achieves a substantial improvement over state-of-the-art baselines and is able to distinguish item features.

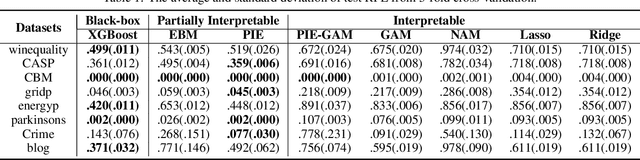

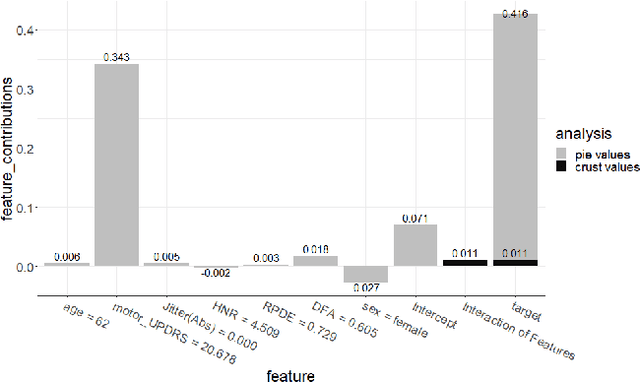

Partially Interpretable Estimators (PIE): Black-Box-Refined Interpretable Machine Learning

May 06, 2021

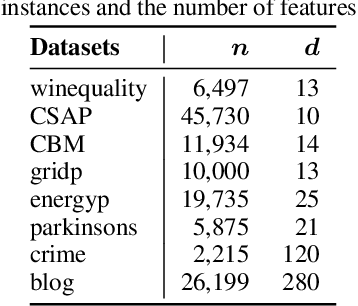

Abstract: We propose Partially Interpretable Estimators (PIE), which attribute a prediction to individual features via an interpretable model, while a (possibly) small part of the PIE prediction is attributed to the interaction of features via a black-box model, with the goal of boosting predictive performance while maintaining interpretability. As such, the interpretable model captures the main contributions of features, and the black-box model attempts to complement the interpretable piece by capturing the "nuances" of feature interactions as a refinement. We design an iterative training algorithm to jointly train the two types of models. Experimental results show that PIE is highly competitive with black-box models while outperforming interpretable baselines. In addition, the understandability of PIE is comparable to that of simple linear models, as validated via a human evaluation.

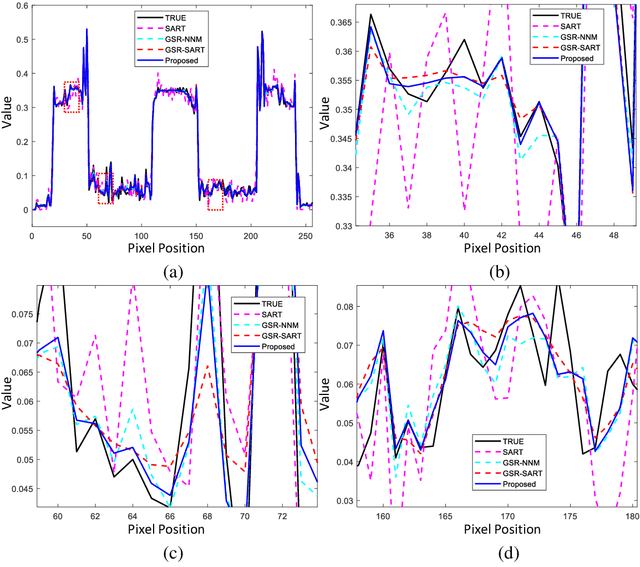

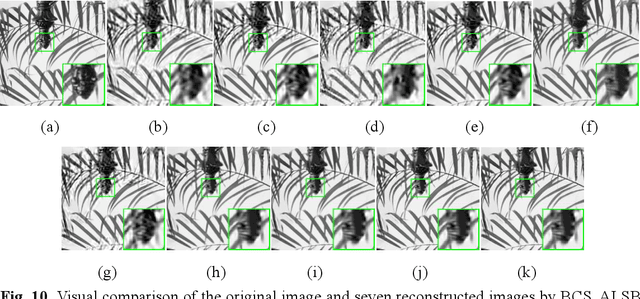

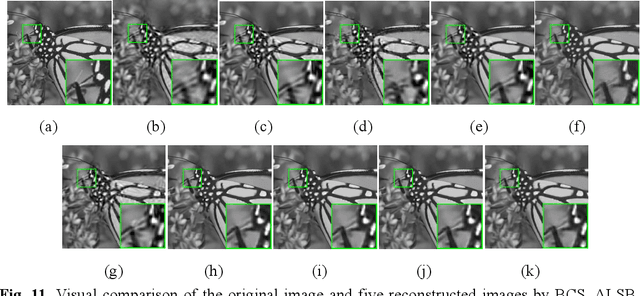

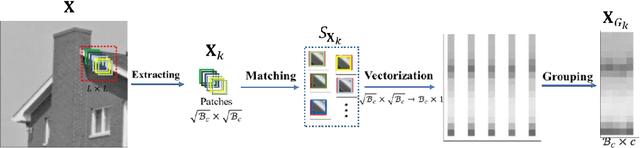

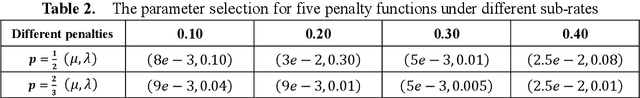

Nonconvex Nonsmooth Low-Rank Minimization for Generalized Image Compressed Sensing via Group Sparse Representation

Nov 18, 2019

Abstract: Group sparse representation (GSR) based methods have led to great successes in various image recovery tasks, which can be converted into low-rank matrix minimization problems. As a widely used convex surrogate for the rank function, the nuclear norm usually leads to an over-shrinking problem, since the standard soft-thresholding operator shrinks all singular values equally. To improve the performance of traditional sparse-representation-based image compressive sensing (CS), we propose a generalized CS framework based on the GSR model, leading to a nonconvex nonsmooth low-rank minimization problem. The popular $L_2$-norm and an M-estimator are employed to fit the data for the standard image CS and robust CS (RCS) problems, respectively. To better approximate the rank of the group matrix, a family of nuclear norms is employed to address the over-shrinking problem. Moreover, we propose a flexible and effective iterative weighting strategy to control the weighting and contribution of each singular value. We then develop an iteratively reweighted nuclear norm algorithm for our generalized framework via an alternating direction method of multipliers framework, namely GSR-ADMM-IRNN. Experimental results demonstrate that our proposed CS framework achieves favorable reconstruction performance compared with current state-of-the-art methods and that the RCS framework suppresses outliers effectively.
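
To make the over-shrinking remark concrete, here is a small sketch that compares uniform soft-thresholding of singular values with a reweighted variant. It operates directly on a list of singular values (the SVD step is omitted), and the weight rule `1 / (sigma + eps)` is an assumed, commonly used reweighting choice rather than the weighting strategy proposed in the paper.

```python
def soft_threshold(singular_values, tau):
    """Nuclear-norm proximal step: every singular value is shrunk by the same tau."""
    return [max(s - tau, 0.0) for s in singular_values]


def weighted_soft_threshold(singular_values, tau, eps=1e-6):
    """Reweighted variant (assumed rule): weight 1 / (s + eps), so large
    singular values are shrunk less and small ones are shrunk more."""
    return [max(s - tau / (s + eps), 0.0) for s in singular_values]


sigmas = [10.0, 4.0, 0.5]

# Uniform shrinkage removes a full unit from the dominant components.
print(soft_threshold(sigmas, tau=1.0))           # [9.0, 3.0, 0.0]

# Weighted shrinkage keeps the dominant components nearly intact
# (about 9.9 and 3.75) while still zeroing the small one.
print(weighted_soft_threshold(sigmas, tau=1.0))
```

The large singular values usually carry the main image structure, which is why shrinking them as much as the small, noise-dominated ones is described as over-shrinking.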

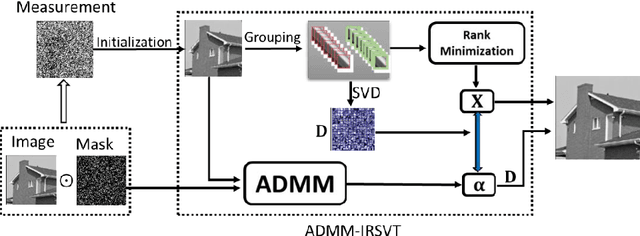

Generalized Rank Minimization based Group Sparse Coding for Low-level Image Restoration via Dictionary Learning

Jul 13, 2019

Abstract: Recently, low-rank matrix recovery theory has emerged as a significant advance for various image processing problems. Meanwhile, group sparse coding (GSC) theory has led to great successes in image restoration, where each group exhibits a low-rank property. In this paper, we introduce a novel GSC framework that uses generalized rank minimization for image restoration tasks via an effective adaptive dictionary learning scheme. For a more accurate approximation of the rank of the group matrix, we propose a generalized rank minimization model with a generalized and flexible weighting scheme and a generalized nonconvex nonsmooth relaxation function. Then an efficient generalized iteratively reweighted singular-value function thresholding (GIR-SFT) algorithm is proposed to handle the resulting minimization problem of GSC. Our proposed model is connected to image restoration (IR) problems via an alternating direction method of multipliers (ADMM) strategy. Extensive experiments on typical IR problems of image compressive sensing (CS) reconstruction, inpainting, deblurring and impulsive noise removal demonstrate that our proposed GSC framework can enhance the image restoration quality compared with many state-of-the-art methods.

Next Hit Predictor - Self-exciting Risk Modeling for Predicting Next Locations of Serial Crimes

Dec 13, 2018

Abstract: Our goal is to predict the location of the next crime in a crime series, based on the identified previous offenses in the series. We build a predictive model called Next Hit Predictor (NHP) that finds the most likely location of the next serial crime via a carefully designed risk model. The risk model follows the paradigm of a self-exciting point process which consists of a background crime risk and triggered risks stimulated by previous offenses in the series. Thus, NHP creates a risk map for a crime series at hand. To train the risk model, we formulate a convex learning objective that considers pairwise rankings of locations and use stochastic gradient descent to learn the optimal parameters. Next Hit Predictor incorporates both spatial-temporal features and geographical characteristics of prior crime locations in the series. Next Hit Predictor has demonstrated promising results on decades' worth of serial crime data collected by the Crime Analysis Unit of the Cambridge Police Department in Massachusetts, USA.
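
The structure of a self-exciting risk model (background risk plus risk triggered by earlier events) can be sketched as follows. The Gaussian spatial kernel, the exponential time decay, and the parameters `alpha`, `sigma`, and `beta` are assumptions chosen for illustration; the abstract states that NHP learns its parameters through a pairwise ranking objective, which this sketch does not implement.

```python
import math


def risk(location, time, past_events, background, alpha=1.0, sigma=1.0, beta=0.1):
    """Risk at `location` and `time`: background risk plus contributions
    triggered by earlier events (x, y, t), decaying with distance and elapsed time."""
    total = background(location)
    for ex, ey, et in past_events:
        if et >= time:
            continue  # only events that have already happened contribute
        dist_sq = (location[0] - ex) ** 2 + (location[1] - ey) ** 2
        spatial = math.exp(-dist_sq / (2.0 * sigma ** 2))
        temporal = math.exp(-beta * (time - et))
        total += alpha * spatial * temporal
    return total


def most_likely_location(candidates, time, past_events, background):
    """Pick the candidate location with the highest modeled risk."""
    return max(candidates, key=lambda loc: risk(loc, time, past_events, background))


# Hypothetical series: two earlier offenses close together, uniform background risk.
events = [(0.0, 0.0, 0.0), (1.0, 0.0, 5.0)]
uniform_background = lambda loc: 0.1
candidates = [(0.5, 0.0), (5.0, 5.0)]

print(most_likely_location(candidates, 6.0, events, uniform_background))  # (0.5, 0.0)
```

Evaluating `risk` over a grid of candidate locations gives the kind of risk map the abstract refers to.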
