Yunming Zhang

ErasableMask: A Robust and Erasable Privacy Protection Scheme against Black-box Face Recognition Models

Dec 24, 2024

Abstract: While face recognition (FR) models have brought remarkable convenience in face verification and identification, they also pose substantial privacy risks to the public. Existing facial privacy protection schemes usually adopt adversarial examples to disrupt face verification of FR models. However, these schemes often suffer from weak transferability against black-box FR models and permanently damage the identifiable information, so they cannot fulfill the requirements of authorized operations such as forensics and authentication. To address these limitations, we propose ErasableMask, a robust and erasable privacy protection scheme against black-box FR models. Specifically, by rethinking the inherent relationship between surrogate FR models, ErasableMask introduces a novel meta-auxiliary attack, which boosts black-box transferability by learning more general features with a stable and balanced optimization strategy. It also offers a perturbation erasion mechanism that supports the erasion of semantic perturbations in protected faces without degrading image quality. To further improve performance, ErasableMask employs a curriculum learning strategy to mitigate optimization conflicts between adversarial attack and perturbation erasion. Extensive experiments on the CelebA-HQ and FFHQ datasets demonstrate that ErasableMask achieves state-of-the-art performance in transferability, achieving over 72% confidence on average in commercial FR systems. Moreover, ErasableMask also exhibits outstanding perturbation erasion performance, achieving an erasion success rate of over 90%.

StyleMark: A Robust Watermarking Method for Art Style Images Against Black-Box Arbitrary Style Transfer

Dec 10, 2024

Abstract: Arbitrary Style Transfer (AST) achieves the rendering of real natural images into the painting styles of arbitrary art style images, promoting art communication. However, misuse of unauthorized art style images for AST may infringe on artists' copyrights. One countermeasure is robust watermarking, which tracks image propagation by embedding copyright watermarks into carriers. Unfortunately, AST-generated images lose the structural and semantic information of the original style image, hindering end-to-end robust tracking by watermarks. To fill this gap, we propose StyleMark, the first robust watermarking method for black-box AST, which can be seamlessly applied to art style images, achieving precise attribution of artistic styles after AST. Specifically, we propose a new style watermark network that adjusts the mean activations of style features through multi-scale watermark embedding, thereby planting watermark traces into the shared style feature space of style images. Furthermore, we design a distribution squeeze loss, which constrains content statistical feature distortion, forcing the reconstruction network to focus on integrating style features with watermarks, thus optimizing the intrinsic watermark distribution. Finally, based on solid end-to-end training, StyleMark mitigates the optimization conflict between robustness and watermark invisibility through decoder fine-tuning under random noise. Experimental results demonstrate that StyleMark exhibits significant robustness against black-box AST and common pixel-level distortions, while also securely defending against malicious adaptive attacks.

Take Fake as Real: Realistic-like Robust Black-box Adversarial Attack to Evade AIGC Detection

Dec 09, 2024

Abstract: The security of AI-generated content (AIGC) detection based on GANs and diffusion models is closely related to the credibility of multimedia content. Malicious adversarial attacks can evade these developing AIGC detectors. However, most existing adversarial attacks focus only on the detection of GAN-generated facial images, struggle to be effective on multi-class natural images and diffusion-based detectors, and exhibit poor invisibility. To fill this gap, we first conduct an in-depth analysis of the vulnerability of AIGC detectors and discover that detectors vary in vulnerability to different post-processing. Then, considering the uncertainty of detectors in real-world scenarios, and based on this discovery, we propose a Realistic-like Robust Black-box Adversarial attack (R$^2$BA) with post-processing fusion optimization. Unlike typical perturbations, R$^2$BA uses real-world post-processing, i.e., Gaussian blur, JPEG compression, Gaussian noise, and light spots, to generate adversarial examples. Specifically, we use a stochastic particle swarm algorithm with inertia decay to optimize post-processing fusion intensity and explore the detector's decision boundary. Guided by the detector's fake probability, R$^2$BA enhances/weakens the detector-vulnerable/detector-robust post-processing intensity to strike a balance between adversariality and invisibility. Extensive experiments on popular/commercial AIGC detectors and datasets demonstrate that R$^2$BA exhibits impressive anti-detection performance, excellent invisibility, and strong robustness in GAN-based and diffusion-based cases. Compared to state-of-the-art white-box and black-box attacks, R$^2$BA shows significant improvements of 15% and 21% in anti-detection performance under the original and robust scenarios, respectively, offering valuable insights for the security of AIGC detection in real-world applications.
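
As a rough illustration of the optimizer named in this abstract, the following is a minimal sketch of a generic particle swarm search with a linearly decaying inertia weight. It is not the authors' implementation: the function names, the hyperparameters, and the toy objective (a hypothetical stand-in for a detector's fake probability over four post-processing intensities) are all assumptions made for the example.

```python
import random


def pso_inertia_decay(objective, dim, bounds, n_particles=20, n_iters=60,
                      w_start=0.9, w_end=0.4, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over a box with a basic particle swarm.

    The inertia weight decays linearly from w_start to w_end, so the swarm
    explores widely at first and settles toward the best point found later.
    """
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]               # per-particle best positions
    best_val = [objective(p) for p in pos]       # per-particle best scores
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]   # swarm-wide best

    for t in range(n_iters):
        # Linear inertia decay across iterations.
        w = w_start - (w_start - w_end) * t / max(1, n_iters - 1)
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Clamp each coordinate to the allowed intensity range.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def toy_fake_probability(intensities):
    # Hypothetical objective: a real attack would query a detector here.
    # The four entries loosely stand for blur, JPEG, noise, and light-spot
    # intensities; the target values are arbitrary.
    target = [0.3, 0.1, 0.5, 0.2]
    return sum((x - t) ** 2 for x, t in zip(intensities, target))


best, score = pso_inertia_decay(toy_fake_probability, dim=4, bounds=(0.0, 1.0))
```

In a setting like the one the abstract describes, the objective would presumably combine the detector's fake probability with some visibility penalty; the quadratic above only makes the sketch runnable without a detector.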

Trinity Detector: text-assisted and attention mechanisms based spectral fusion for diffusion generation image detection

Apr 26, 2024

Abstract: Artificial Intelligence Generated Content (AIGC) techniques, represented by text-to-image generation, have led to malicious use of deep forgeries, raising concerns about the trustworthiness of multimedia content. Adapting traditional forgery detection methods to diffusion models proves challenging. Thus, this paper proposes a forgery detection method explicitly designed for diffusion models called Trinity Detector. Trinity Detector incorporates coarse-grained text features through a CLIP encoder, coherently integrating them with fine-grained artifacts in the pixel domain for comprehensive multimodal detection. To heighten sensitivity to diffusion-generated image features, a Multi-spectral Channel Attention Fusion Unit (MCAF) is designed, extracting spectral inconsistencies through adaptive fusion of diverse frequency bands and further integrating spatial co-occurrence of the two modalities. Extensive experimentation validates that our Trinity Detector method outperforms several state-of-the-art methods: its performance is competitive across all datasets, with up to a 17.6% improvement in transferability on the diffusion datasets.

Double Privacy Guard: Robust Traceable Adversarial Watermarking against Face Recognition

Apr 23, 2024

Abstract: The wide deployment of Face Recognition (FR) systems poses risks of privacy leakage. One countermeasure to address this issue is adversarial attacks, which deceive malicious FR searches but simultaneously interfere with the normal identity verification of trusted authorizers. In this paper, we propose the first Double Privacy Guard (DPG) scheme based on traceable adversarial watermarking. DPG employs a one-time watermark embedding to deceive unauthorized FR models and allows authorizers to perform identity verification by extracting the watermark. Specifically, we propose an information-guided adversarial attack against FR models. The encoder embeds an identity-specific watermark into the deep feature space of the carrier, guiding recognizable features of the image to deviate from the source identity. We further adopt a collaborative meta-optimization strategy compatible with sub-tasks, which regularizes the joint optimization direction of the encoder and decoder. This strategy enhances the representation of universal carrier features, mitigating multi-objective optimization conflicts in watermarking. Experiments confirm that DPG achieves significant attack success rates and traceability accuracy on state-of-the-art FR models, exhibiting remarkable robustness that outperforms the existing privacy protection methods using adversarial attacks and deep watermarking, or simple combinations of the two. Our work potentially opens up new insights into proactive protection for FR privacy.

AVT2-DWF: Improving Deepfake Detection with Audio-Visual Fusion and Dynamic Weighting Strategies

Mar 22, 2024

Abstract: With the continuous improvement of deepfake methods, forgery messages have transitioned from single-modality to multi-modal fusion, posing new challenges for existing forgery detection algorithms. In this paper, we propose AVT2-DWF, the Audio-Visual dual Transformers grounded in Dynamic Weight Fusion, which aims to amplify both intra- and cross-modal forgery cues, thereby enhancing detection capabilities. AVT2-DWF adopts a dual-stage approach to capture both spatial characteristics and temporal dynamics of facial expressions. This is achieved through a face transformer with an n-frame-wise tokenization strategy encoder and an audio transformer encoder. Subsequently, it uses multi-modal conversion with dynamic weight fusion to address the challenge of heterogeneous information fusion between audio and visual modalities. Experiments on DeepfakeTIMIT, FakeAVCeleb, and DFDC datasets indicate that AVT2-DWF achieves state-of-the-art performance in intra- and cross-dataset Deepfake detection. Code is available at https://github.com/raining-dev/AVT2-DWF.

Dual Defense: Adversarial, Traceable, and Invisible Robust Watermarking against Face Swapping

Oct 25, 2023

Abstract: The malicious applications of deep forgery, represented by face swapping, have introduced security threats such as misinformation dissemination and identity fraud. While some research has proposed the use of robust watermarking methods to trace the copyright of facial images for post-event traceability, these methods cannot effectively prevent the generation of forgeries at the source and curb their dissemination. To address this problem, we propose a novel comprehensive active defense mechanism that combines traceability and adversariality, called Dual Defense. Dual Defense invisibly embeds a single robust watermark within the target face to actively respond to sudden cases of malicious face swapping. It disrupts the output of the face swapping model while maintaining the integrity of watermark information throughout the entire dissemination process. This allows for watermark extraction at any stage of image tracking for traceability. Specifically, we introduce a watermark embedding network based on an original-domain feature impersonation attack. This network learns robust adversarial features of target facial images and embeds watermarks, seeking a well-balanced trade-off between watermark invisibility, adversariality, and traceability through perceptual adversarial encoding strategies. Extensive experiments demonstrate that Dual Defense achieves optimal overall defense success rates and exhibits promising universality in anti-face swapping tasks and dataset generalization ability. It maintains impressive adversariality and traceability in both original and robust settings, surpassing current forgery defense methods that possess only one of these capabilities, including CMUA-Watermark, Anti-Forgery, FakeTagger, or PGD methods.

Feature Extraction Matters More: Universal Deepfake Disruption through Attacking Ensemble Feature Extractors

Mar 01, 2023

Abstract: Adversarial examples are an emerging way of protecting facial privacy from deepfake modification. To prevent massive numbers of facial images from being illegally modified by various deepfake models, it is essential to design a universal deepfake disruptor. However, existing works treat deepfake disruption as an End-to-End process, ignoring the functional difference between feature extraction and image reconstruction, which makes it difficult to generate a cross-model universal disruptor. In this work, we propose a novel Feature-Output ensemble UNiversal Disruptor (FOUND) against deepfake networks, which explores a new perspective that considers attacking feature extractors as the more critical and general task in deepfake disruption. We conduct an effective two-stage disruption process. We first disrupt multi-model feature extractors through multi-feature aggregation and individual-feature maintenance, and then develop a gradient-ensemble algorithm to enhance the disruption effect by simplifying the complex optimization problem of disrupting multiple End-to-End models. Extensive experiments demonstrate that FOUND can significantly boost the disruption effect against ensemble deepfake benchmark models. Besides, our method can quickly obtain a cross-attribute, cross-image, and cross-model universal deepfake disruptor with only a few training images, surpassing state-of-the-art universal disruptors in both success rate and efficiency.

Tiramisu: A Code Optimization Framework for High Performance Systems

Sep 26, 2018

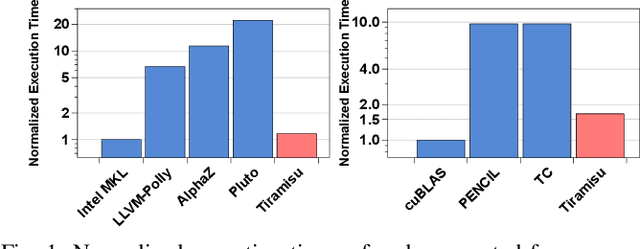

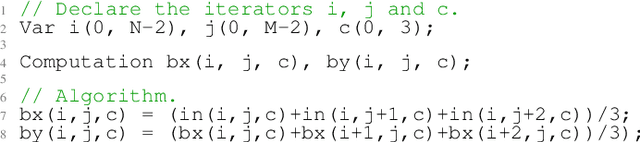

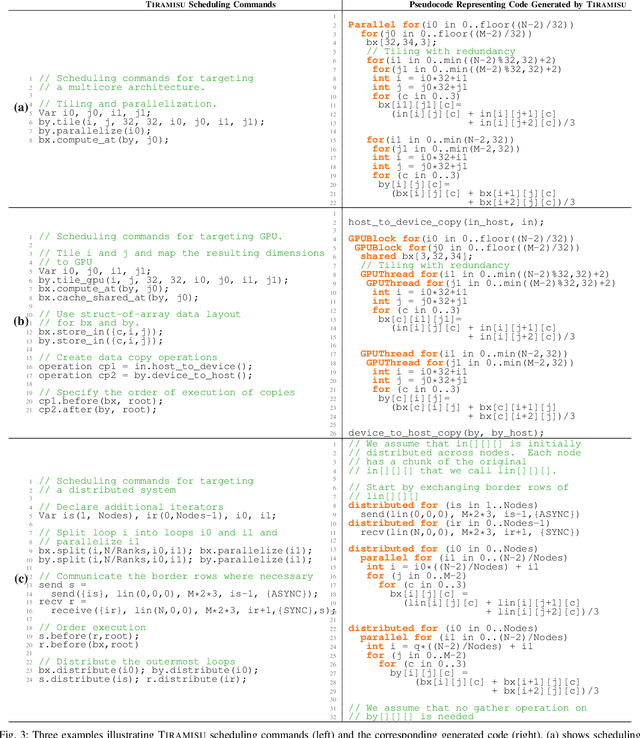

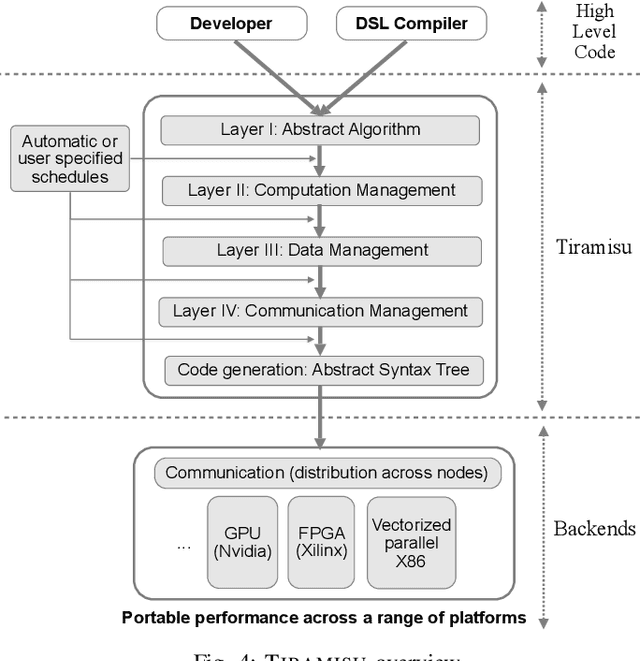

Abstract: This paper introduces Tiramisu, a polyhedral framework designed to generate high performance code for multiple platforms including multicores, GPUs, and distributed machines. Tiramisu introduces a scheduling language with novel extensions to explicitly manage the complexities that arise when targeting these systems. The extensions include explicit communication, synchronization, and mapping buffers to different memory hierarchies. Tiramisu relies on a flexible representation based on the polyhedral model and explicitly uses a well-defined four-level IR that allows full separation between the algorithms, loop transformations, data-layouts, and communication. This separation simplifies targeting multiple hardware architectures with the same algorithm. We evaluate Tiramisu by writing a set of image processing and stencil benchmarks and compare it with state-of-the-art compilers. We show that Tiramisu matches or outperforms existing compilers on different hardware architectures, including multicore CPUs, GPUs, and distributed machines.
