Ying Zhong

SimLLM: Fine-Tuning Code LLMs for SimPy-Based Queueing System Simulation

Jan 10, 2026

Abstract: The Python package SimPy is widely used for modeling queueing systems due to its flexibility, simplicity, and smooth integration with modern data analysis and optimization frameworks. Recent large language models (LLMs) have shown a strong ability to generate clear and executable code, making them well suited to writing SimPy queueing simulation code. However, directly employing closed-source models such as GPT-4o to generate such code may lead to high computational costs and raise data privacy concerns. To address this, we fine-tune two open-source LLMs, Qwen-Coder-7B and DeepSeek-Coder-6.7B, on curated SimPy queueing data, which enhances their code-generation performance in executability, output-format compliance, and instruction-code consistency. In particular, we propose a multi-stage fine-tuning framework comprising two stages of supervised fine-tuning (SFT) and one stage of direct preference optimization (DPO), progressively enhancing the model's ability to generate SimPy-based queueing simulation code. Extensive evaluations demonstrate that both fine-tuned models achieve substantial improvements in executability, output-format compliance, and instruction-code consistency. These results confirm that domain-specific fine-tuning can effectively transform compact open-source code models into reliable SimPy simulation generators, providing a practical alternative to closed-source LLMs for education, research, and operational decision support.
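To make the DPO stage named in the abstract more concrete, the following is a minimal sketch of the standard DPO loss for a single preference pair. It is not the paper's training code. The function names, the `beta` value, and the example log-probabilities are illustrative assumptions, and the abstract does not describe how the preference pairs are constructed (one might, for instance, prefer a script that executes over one that fails).

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed token log-probability of a full response
    under either the model being trained (policy) or the frozen reference
    model. beta=0.1 is an assumed value, not taken from the paper.
    """
    # Implicit rewards: how much more the policy favors a response
    # than the reference model does, scaled by beta.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)) is the same as softplus(-margin).
    return softplus(-margin)


# Hypothetical log-probabilities, for illustration only.
loss_at_start = dpo_loss(-10.0, -12.0, -10.0, -12.0)   # policy == reference
loss_after_shift = dpo_loss(-8.0, -14.0, -10.0, -12.0)  # policy favors chosen
```

When the policy equals the reference model, the margin is zero and the loss is log 2. The loss falls as the policy assigns relatively more probability to the preferred response.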

Deep Temporal Contrastive Clustering

Dec 29, 2022

Abstract: Recently, deep learning has shown its advantages in representation learning and clustering for time series data. Despite considerable progress, existing deep time series clustering approaches mostly train the deep neural network with an instance-reconstruction-based or cluster-distribution-based objective, and therefore lack the ability to exploit sample-wise (or augmentation-wise) contrastive information, or higher-level (e.g., cluster-level) contrastiveness, for learning discriminative and clustering-friendly representations. In light of this, this paper presents a deep temporal contrastive clustering (DTCC) approach, which, to our knowledge, is the first to incorporate the contrastive learning paradigm into deep time series clustering research. Specifically, with two parallel views generated from the original time series and their augmentations, we use two identical auto-encoders to learn the corresponding representations and, at the same time, perform cluster distribution learning by incorporating a k-means objective. Further, two levels of contrastive learning are enforced simultaneously to capture instance-level and cluster-level contrastive information, respectively. With the reconstruction loss of the auto-encoder, the cluster distribution loss, and the two levels of contrastive losses jointly optimized, the network is trained in a self-supervised manner, from which the clustering result is obtained. Experiments on a variety of time series datasets demonstrate the superiority of our DTCC approach over the state of the art.
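The abstract does not give the form of its contrastive losses. As a rough illustration of what an instance-level contrastive objective between two views can look like, the sketch below uses a common one-directional InfoNCE-style loss with cosine similarity. The function names, the temperature, and the toy embeddings are assumptions for illustration and are not DTCC's exact formulation.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def instance_contrastive_loss(view_a, view_b, temperature=0.5):
    """Mean InfoNCE-style loss over a batch of paired embeddings.

    Row i of view_a and row i of view_b are treated as the positive pair;
    every other row of view_b serves as a negative for sample i.
    """
    n = len(view_a)
    total = 0.0
    for i in range(n):
        # Scaled similarities of sample i (view A) to all samples in view B.
        logits = [cosine(view_a[i], view_b[j]) / temperature for j in range(n)]
        # Cross-entropy with index i as the target, via a stable log-sum-exp.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - logits[i]
    return total / n


# Toy embeddings (hypothetical): each augmented row is a small
# perturbation of the original row with the same index.
original = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
augmented = [[0.9, 0.1], [0.1, 0.9], [-0.9, -0.1]]

aligned = instance_contrastive_loss(original, augmented)
# Rotating the second view breaks the pairing, so the loss should rise.
shuffled = instance_contrastive_loss(original, augmented[1:] + augmented[:1])
```

In a setup like the one the abstract describes, a term of this kind would be summed with the reconstruction, cluster distribution, and cluster-level contrastive losses. How those terms are weighted is not stated in the abstract.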

DeepAID: Interpreting and Improving Deep Learning-based Anomaly Detection in Security Applications

Sep 23, 2021

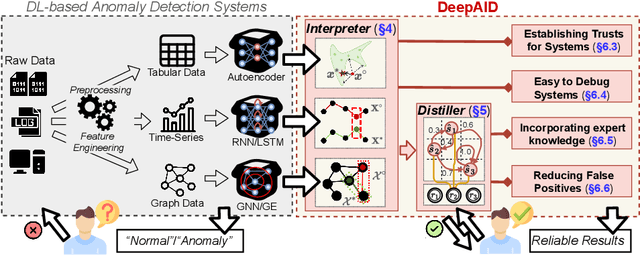

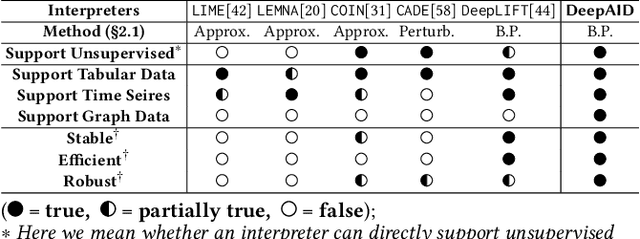

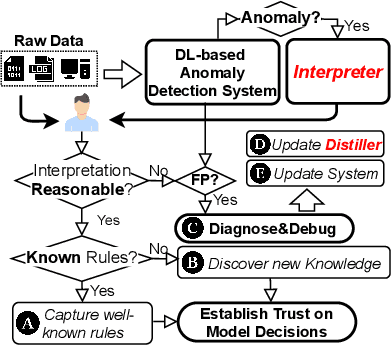

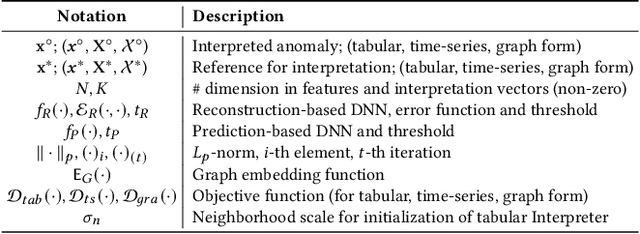

Abstract: Unsupervised Deep Learning (DL) techniques have been widely used in security-related anomaly detection applications, owing to their promise of detecting unforeseen threats and to the superior performance of Deep Neural Networks (DNNs). However, the lack of interpretability creates key barriers to the adoption of DL models in practice. Unfortunately, existing interpretation approaches are designed for supervised learning models and/or non-security domains; they cannot be adapted to unsupervised DL models and fail to satisfy the special requirements of security domains. In this paper, we propose DeepAID, a general framework aiming to (1) interpret DL-based anomaly detection systems in security domains, and (2) improve the practicality of these systems based on the interpretations. We first propose a novel interpretation method for unsupervised DNNs by formulating and solving well-designed optimization problems with special constraints for security domains. Then, we provide several applications based on our Interpreter, as well as a model-based extension, Distiller, to improve security systems by solving domain-specific problems. We apply DeepAID to three types of security-related anomaly detection systems and extensively evaluate our Interpreter against representative prior work. Experimental results show that DeepAID can provide high-quality interpretations for unsupervised DL models while meeting the special requirements of security domains. We also provide several use cases showing that DeepAID can help security operators understand model decisions, diagnose system mistakes, give feedback to models, and reduce false positives.

A System for Worldwide COVID-19 Information Aggregation

Jul 28, 2020

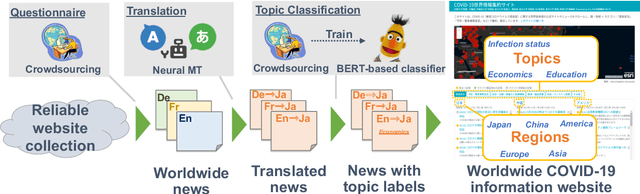

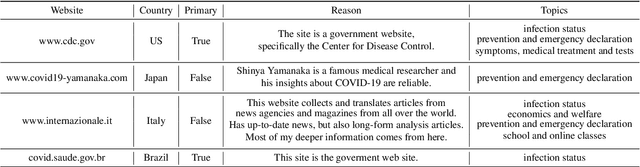

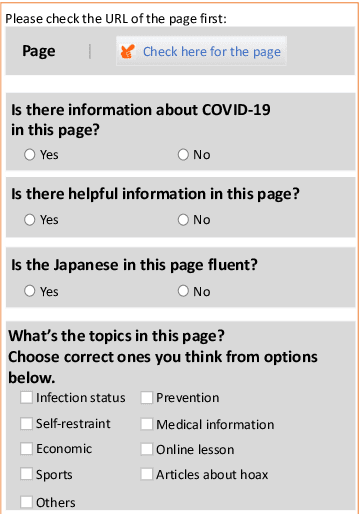

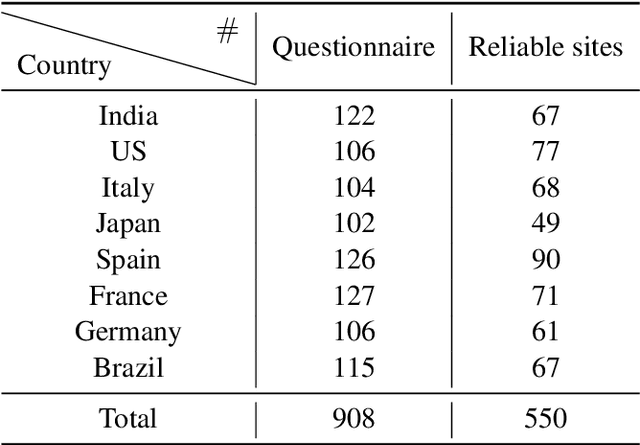

Abstract: The global COVID-19 pandemic has made the public pay close attention to related news covering various domains, such as sanitation, treatment, and effects on education. Meanwhile, the COVID-19 situation differs greatly among countries (e.g., in policies and in the development of the epidemic), so citizens are also interested in news from foreign countries. We build a system for worldwide COVID-19 information aggregation (http://lotus.kuee.kyoto-u.ac.jp/NLPforCOVID-19) containing reliable articles from 10 regions in 7 languages, sorted by topic, for Japanese citizens. Our dataset of reliable COVID-19-related websites, collected through crowdsourcing, ensures the quality of the articles. A neural machine translation module translates articles in other languages into Japanese. A BERT-based topic classifier trained on an article-topic pair dataset sorts articles into categories, helping users efficiently find the information they are interested in.

Practical Traffic-space Adversarial Attacks on Learning-based NIDSs

May 15, 2020

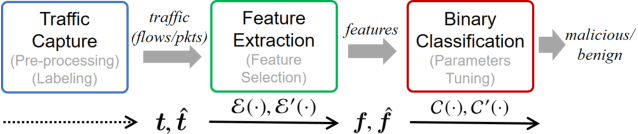

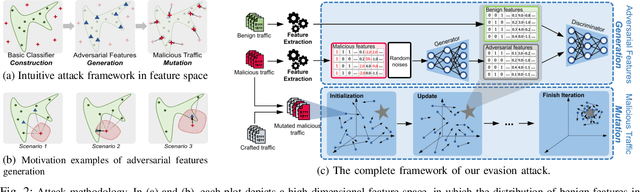

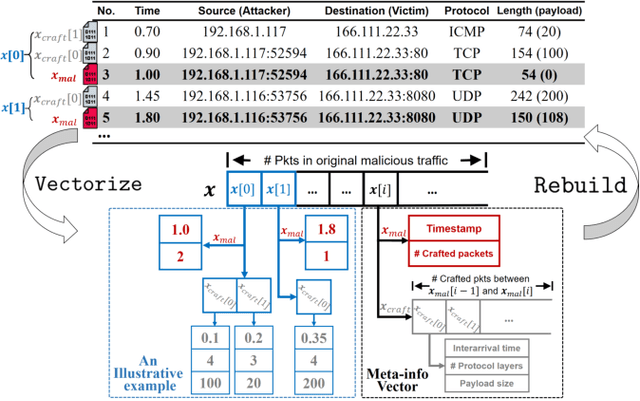

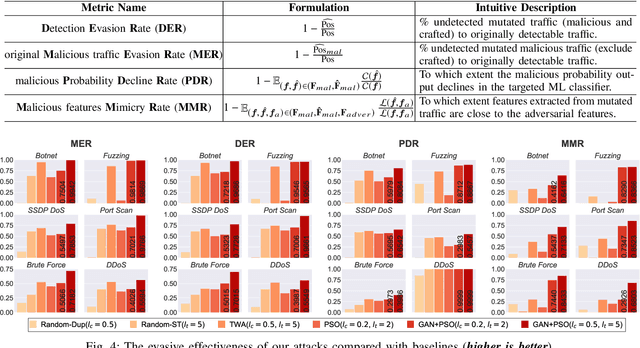

Abstract: Machine learning (ML) techniques have been increasingly used in anomaly-based network intrusion detection systems (NIDSs) to detect unknown attacks. However, ML has been shown to be extremely vulnerable to adversarial attacks, aggravating the potential risk of evasion attacks against learning-based NIDSs. In this situation, prior studies on evading traditional anomaly-based or signature-based NIDSs are no longer valid. Existing attacks on learning-based NIDSs have mostly focused on feature-space and/or white-box attacks, leaving practical gray/black-box attacks largely unexplored. To bridge this gap, we conduct the first systematic study of practical traffic-space evasion attacks on learning-based NIDSs. We outperform previous work in the following aspects: (1) practical: instead of directly modifying features, we provide a novel framework to automatically mutate malicious traffic with extremely limited knowledge while preserving its functionality; (2) generic: the proposed attack is effective for any ML classifier (i.e., model-agnostic) and most non-payload-based features; (3) explainable: we propose a feature-based interpretation method to measure the robustness of targeted systems against such attacks. We extensively evaluate our attack and defense scheme on Kitsune, a state-of-the-art learning-based NIDS, and measure the robustness of various NIDSs using diverse features and ML classifiers. The experiments show promising results and intriguing findings.
