Xia Yin

Minimum-Cost Network Flow with Dual Predictions

Jan 28, 2026

Abstract:Recent work has shown that machine-learned predictions can provably improve the performance of classic algorithms. In this work, we propose the first minimum-cost network flow algorithm augmented with a dual prediction. Our method is based on a classic minimum-cost flow algorithm, namely $\varepsilon$-relaxation. We provide time complexity bounds in terms of the infinity norm prediction error, which is both consistent and robust. We also prove sample complexity bounds for PAC-learning the prediction. We empirically validate our theoretical results on two applications of minimum-cost flow, i.e., traffic networks and chip escape routing, in which we learn a fixed prediction, and a feature-based neural network model to infer the prediction, respectively. Experimental results illustrate $12.74\times$ and $1.64\times$ average speedup on two applications.

threaTrace: Detecting and Tracing Host-based Threats in Node Level Through Provenance Graph Learning

Nov 08, 2021

Abstract:Host-based threats such as Program Attack, Malware Implantation, and Advanced Persistent Threats (APT) are commonly adopted by modern attackers. Recent studies propose leveraging the rich contextual information in data provenance to detect threats in a host. Data provenance is a directed acyclic graph constructed from system audit data. Nodes in a provenance graph represent system entities (e.g., $processes$ and $files$) and edges represent system calls in the direction of information flow. However, previous studies, which extract features of the whole provenance graph, are not sensitive to the small number of threat-related entities and thus perform poorly when hunting stealthy threats. We present threaTrace, an anomaly-based detector that detects host-based threats at the system entity level without prior knowledge of attack patterns. We tailor GraphSAGE, an inductive graph neural network, to learn every benign entity's role in a provenance graph. threaTrace is a real-time system that scales to monitoring a long-running host and is capable of detecting host-based intrusions in their early phase. We evaluate threaTrace on three public datasets. The results show that threaTrace outperforms three state-of-the-art host intrusion detection systems.

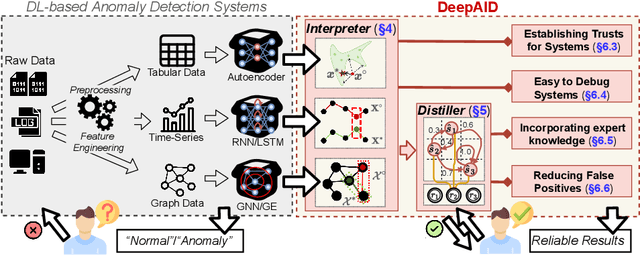

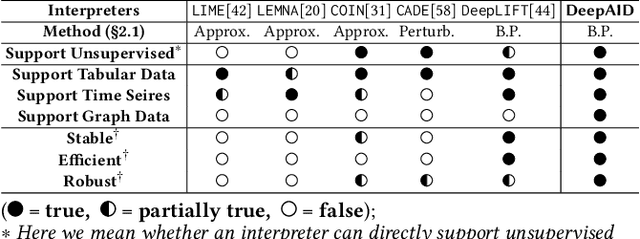

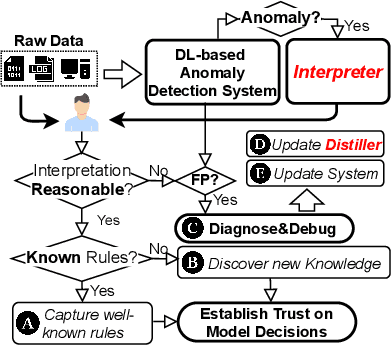

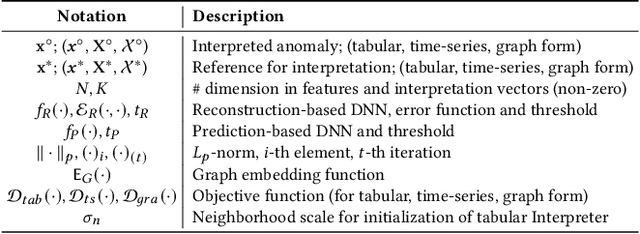

DeepAID: Interpreting and Improving Deep Learning-based Anomaly Detection in Security Applications

Sep 23, 2021

Abstract:Unsupervised Deep Learning (DL) techniques have been widely used in various security-related anomaly detection applications, owing to the great promise of being able to detect unforeseen threats and the superior performance provided by Deep Neural Networks (DNN). However, the lack of interpretability creates key barriers to the adoption of DL models in practice. Unfortunately, existing interpretation approaches are proposed for supervised learning models and/or non-security domains, so they cannot be adapted to unsupervised DL models and fail to satisfy the special requirements of security domains. In this paper, we propose DeepAID, a general framework aiming to (1) interpret DL-based anomaly detection systems in security domains, and (2) improve the practicality of these systems based on the interpretations. We first propose a novel interpretation method for unsupervised DNNs by formulating and solving well-designed optimization problems with special constraints for security domains. Then, we provide several applications based on our Interpreter as well as a model-based extension Distiller to improve security systems by solving domain-specific problems. We apply DeepAID over three types of security-related anomaly detection systems and extensively evaluate our Interpreter against representative prior work. Experimental results show that DeepAID can provide high-quality interpretations for unsupervised DL models while meeting the special requirements of security domains. We also provide several use cases to show that DeepAID can help security operators to understand model decisions, diagnose system mistakes, give feedback to models, and reduce false positives.

Practical Traffic-space Adversarial Attacks on Learning-based NIDSs

May 15, 2020

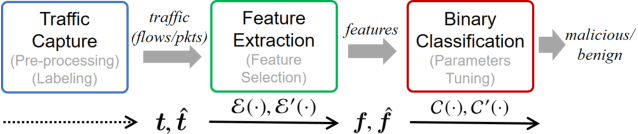

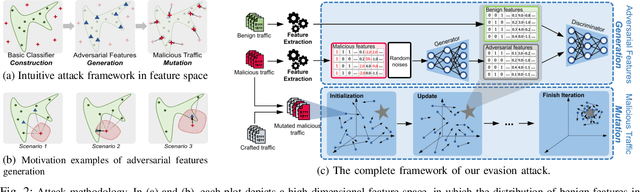

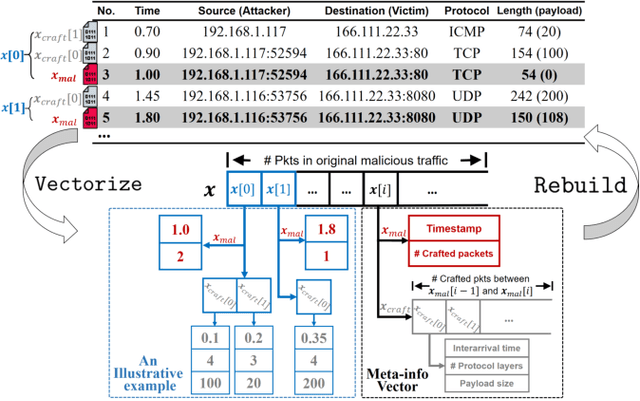

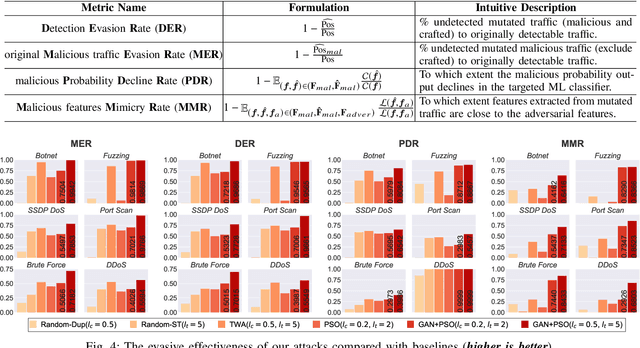

Abstract:Machine learning (ML) techniques have been increasingly used in anomaly-based network intrusion detection systems (NIDS) to detect unknown attacks. However, ML has been shown to be extremely vulnerable to adversarial attacks, aggravating the potential risk of evasion attacks against learning-based NIDSs. In this situation, prior studies on evading traditional anomaly-based or signature-based NIDSs are no longer valid. Existing attacks on learning-based NIDSs have mostly focused on feature-space and/or white-box attacks, leaving the study of practical gray/black-box attacks largely unexplored. To bridge this gap, we conduct the first systematic study of the practical traffic-space evasion attack on learning-based NIDSs. We outperform the previous work in the following aspects: (1) practical: instead of directly modifying features, we provide a novel framework to automatically mutate malicious traffic with extremely limited knowledge while preserving its functionality; (2) generic: the proposed attack is effective for any ML classifier (i.e., model-agnostic) and most non-payload-based features; (3) explainable: we propose a feature-based interpretation method to measure the robustness of targeted systems against such attacks. We extensively evaluate our attack and defense scheme on Kitsune, a state-of-the-art learning-based NIDS, and measure the robustness of various NIDSs using diverse features and ML classifiers. The experimental results are promising and yield intriguing findings.