Yin Gu

Collaborative Cognitive Diagnosis with Disentangled Representation Learning for Learner Modeling

Nov 04, 2024

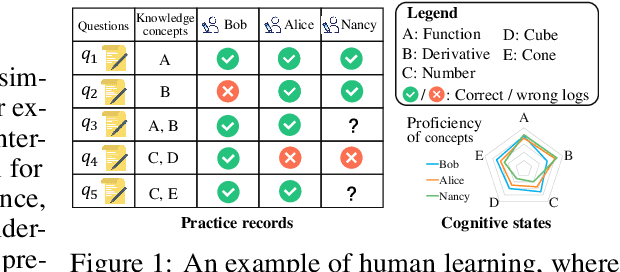

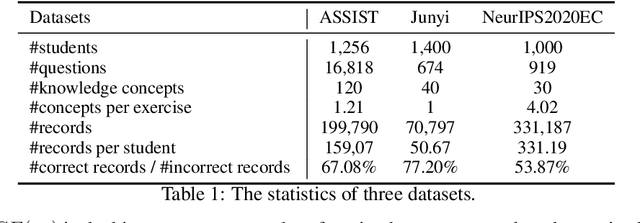

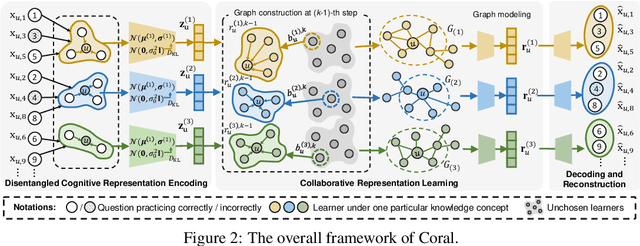

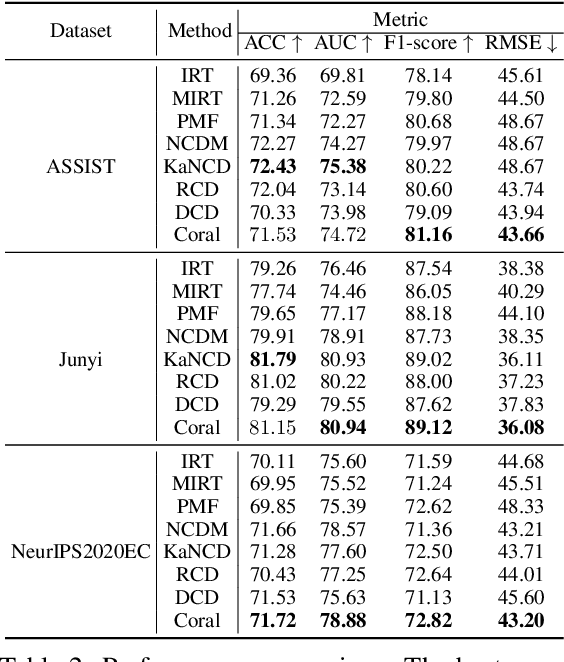

Abstract: Learners sharing similar implicit cognitive states often display comparable observable problem-solving performances. Leveraging collaborative connections among such similar learners proves valuable in comprehending human learning. Motivated by the success of collaborative modeling in various domains, such as recommender systems, we aim to investigate how collaborative signals among learners contribute to the diagnosis of human cognitive states (i.e., knowledge proficiency) in the context of intelligent education. The primary challenges lie in identifying implicit collaborative connections and disentangling the entangled cognitive factors of learners for improved explainability and controllability in learner Cognitive Diagnosis (CD). However, there has been no work on CD capable of simultaneously modeling collaborative and disentangled cognitive states. To address this gap, we present Coral, a Collaborative cognitive diagnosis model with disentangled representation learning. Specifically, Coral first introduces a disentangled state encoder to achieve the initial disentanglement of learners' states. Subsequently, a meticulously designed collaborative representation learning procedure captures collaborative signals. It dynamically constructs a collaborative graph of learners by iteratively searching for optimal neighbors in a context-aware manner. Using the constructed graph, collaborative information is extracted through node representation learning. Finally, a decoding process aligns the initial cognitive states and collaborative states, achieving co-disentanglement with practice performance reconstructions. Extensive experiments demonstrate the superior performance of Coral, showcasing significant improvements over state-of-the-art methods across several real-world datasets. Our code is available at https://github.com/bigdata-ustc/Coral.
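To make the idea of a collaborative learner graph concrete, here is a minimal sketch. It is not Coral's procedure: the abstract describes an iterative, context-aware neighbor search, whereas this sketch swaps in a static cosine-similarity k-nearest-neighbor graph followed by mean aggregation. The function names (`knn_graph`, `aggregate`), the blending weight `alpha`, and the toy state vectors are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn_graph(states, k):
    """Map each learner index to the indices of its k most similar other learners."""
    graph = {}
    for i, s in enumerate(states):
        scored = [(cosine(s, t), j) for j, t in enumerate(states) if j != i]
        # Sort by descending similarity; break ties by the lower learner index.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        graph[i] = [j for _, j in scored[:k]]
    return graph


def aggregate(states, graph, alpha=0.5):
    """Blend each learner's own state with the mean state of its graph neighbors."""
    blended = []
    for i, s in enumerate(states):
        neighbors = [states[j] for j in graph[i]]
        if not neighbors:
            blended.append(list(s))
            continue
        mean = [sum(col) / len(neighbors) for col in zip(*neighbors)]
        blended.append([alpha * a + (1 - alpha) * m for a, m in zip(s, mean)])
    return blended


# Toy proficiency vectors over two knowledge concepts (hypothetical values).
states = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
graph = knn_graph(states, k=1)
collab = aggregate(states, graph)
```

In this toy setting, learners 0 and 1 become each other's neighbors, as do learners 2 and 3, so each blended state moves toward its closest peer.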

KnowPC: Knowledge-Driven Programmatic Reinforcement Learning for Zero-shot Coordination

Aug 08, 2024

Abstract: Zero-shot coordination (ZSC) remains a major challenge in the cooperative AI field, which aims to train an agent that can cooperate with an unseen partner in training environments or even novel environments. In recent years, a popular ZSC solution paradigm has been deep reinforcement learning (DRL) combined with advanced self-play or population-based methods to enhance the neural policy's ability to handle unseen partners. Despite some success, these approaches usually rely on black-box neural networks as the policy function. However, neural networks typically lack interpretability and logic, making the learned policies difficult for partners (e.g., humans) to understand and limiting their generalization ability. These shortcomings hinder the application of reinforcement learning methods in diverse cooperative scenarios. We propose representing the agent's policy with an interpretable program. Unlike neural networks, programs contain stable logic, but they are non-differentiable and difficult to optimize. To automatically learn such programs, we introduce Knowledge-driven Programmatic reinforcement learning for zero-shot Coordination (KnowPC). We first define a foundational Domain-Specific Language (DSL), including program structures, conditional primitives, and action primitives. A significant challenge is the vast program search space, making it difficult to find high-performing programs efficiently. To address this, KnowPC integrates an extractor and a reasoner. The extractor discovers environmental transition knowledge from multi-agent interaction trajectories, while the reasoner deduces the preconditions of each action primitive based on the transition knowledge.
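The extractor and reasoner roles described above can be illustrated with a small sketch. This is an assumption-laden toy, not KnowPC's DSL or algorithm: states are modeled as sets of symbolic facts, "transition knowledge" is reduced to the transitions where an action changed the state, and a precondition is taken to be the intersection of the facts that held before every such transition. All fact names, action names, and function names are hypothetical.

```python
def extract_transitions(trajectories):
    """Extractor (toy): keep (facts_before, action, facts_after) steps that changed the state."""
    return [
        (before, action, after)
        for trajectory in trajectories
        for before, action, after in trajectory
        if before != after
    ]


def infer_preconditions(transitions):
    """Reasoner (toy): a precondition is every fact present before all effective uses of an action."""
    preconditions = {}
    for before, action, _ in transitions:
        if action in preconditions:
            preconditions[action] = preconditions[action] & before
        else:
            preconditions[action] = before
    return preconditions


def make_policy(preconditions, priority):
    """Build an interpretable rule list: take the first action whose preconditions hold."""
    def policy(facts):
        for action in priority:
            if action in preconditions and preconditions[action] <= facts:
                return action
        return "noop"
    return policy


F = frozenset  # shorthand for building immutable fact sets

trajectories = [
    [
        (F({"near_onion", "hands_empty"}), "pickup_onion", F({"near_onion", "holding_onion"})),
        (F({"near_pot", "holding_onion"}), "put_in_pot", F({"near_pot", "hands_empty"})),
    ],
    [
        (F({"near_onion", "near_pot", "hands_empty"}), "pickup_onion",
         F({"near_onion", "near_pot", "holding_onion"})),
        # An ineffective attempt: the state does not change, so it is filtered out.
        (F({"near_pot", "hands_empty"}), "put_in_pot", F({"near_pot", "hands_empty"})),
    ],
]

preconditions = infer_preconditions(extract_transitions(trajectories))
policy = make_policy(preconditions, priority=["put_in_pot", "pickup_onion"])
```

Because the resulting policy is an ordered list of condition-action rules, a partner can read off why each action was chosen, which is the interpretability property the abstract emphasizes.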

Weakly supervised alignment and registration of MR-CT for cervical cancer radiotherapy

May 21, 2024

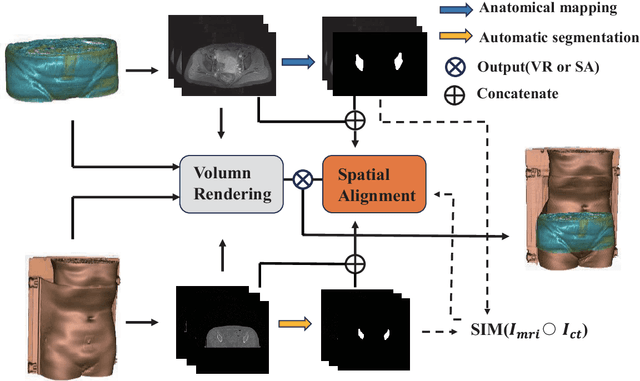

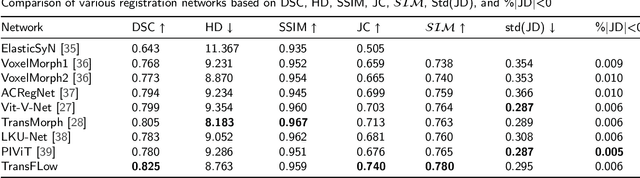

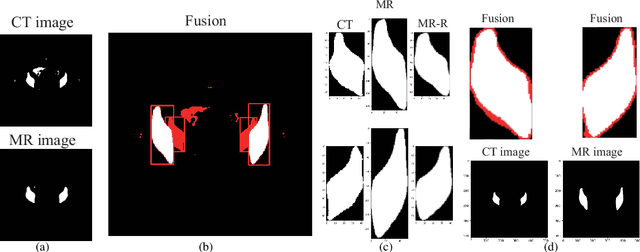

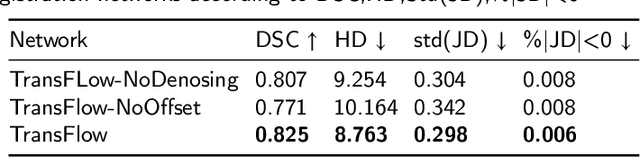

Abstract: Cervical cancer is one of the leading causes of death in women, and brachytherapy is currently the primary treatment method. However, it is important to precisely define the extent of paracervical tissue invasion to improve cancer diagnosis and treatment options. The fusion of the information characteristics of both computed tomography (CT) and magnetic resonance imaging (MRI) modalities may be useful in achieving a precise outline of the extent of paracervical tissue invasion. Registration is the initial step in information fusion. However, when aligning multimodal images with varying depths, manual alignment is prone to large errors and is time-consuming. Furthermore, the variations in the size of the Region of Interest (ROI) and the shape of multimodal images pose a significant challenge for achieving accurate registration. In this paper, we propose a preliminary spatial alignment algorithm and a weakly supervised multimodal registration network. The spatial position alignment algorithm efficiently utilizes the limited annotation information in the two modal images provided by the doctor to automatically align multimodal images with varying depths. By utilizing aligned multimodal images for weakly supervised registration and incorporating pyramidal features and cost volume to estimate the optical flow, the results indicate that the proposed method outperforms traditional volume rendering alignment methods and registration networks in various evaluation metrics. This demonstrates the effectiveness of our model in multimodal image registration.
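For readers unfamiliar with the term, a cost volume stores, for each position in one feature map, a matching score against nearby positions in the other; the best-scoring offset gives a coarse flow estimate. The sketch below is a one-dimensional toy under stated assumptions, not the paper's network: features are short hand-written vectors, matching uses a plain dot product, and the names `cost_volume_1d` and `best_displacement` are hypothetical.

```python
def cost_volume_1d(feat_a, feat_b, max_disp):
    """Return volume[i][d + max_disp] = dot(feat_a[i], feat_b[i + d]); 0.0 when i + d is out of range."""
    n = len(feat_a)
    volume = []
    for i in range(n):
        row = []
        for d in range(-max_disp, max_disp + 1):
            j = i + d
            if 0 <= j < n:
                row.append(sum(x * y for x, y in zip(feat_a[i], feat_b[j])))
            else:
                row.append(0.0)
        volume.append(row)
    return volume


def best_displacement(volume, max_disp):
    """Pick, per position, the displacement with the highest matching score."""
    return [max(range(len(row)), key=row.__getitem__) - max_disp for row in volume]


# Toy features: feat_b is feat_a shifted one position to the right.
e0, e1, e2, e3 = [1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]
zero = [0, 0, 0, 0]
feat_a = [e0, e1, e2, e3]
feat_b = [zero, e0, e1, e2]

volume = cost_volume_1d(feat_a, feat_b, max_disp=1)
flow = best_displacement(volume, max_disp=1)
```

The first three positions recover the one-step shift; the last position has no counterpart in `feat_b`, so its score row is all zeros and its estimate is not meaningful.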

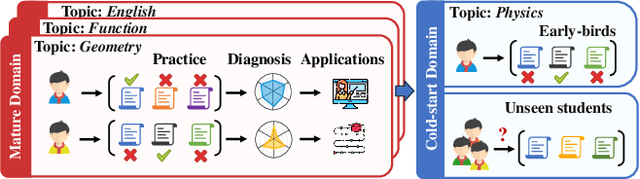

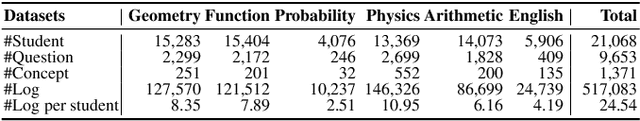

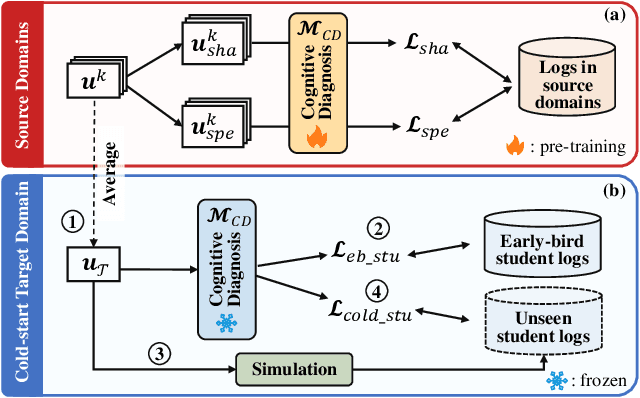

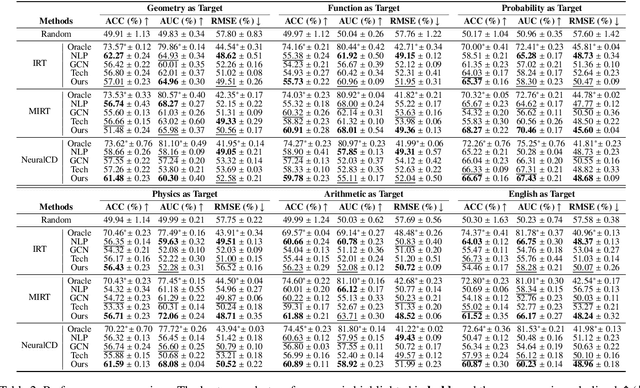

Zero-1-to-3: Domain-level Zero-shot Cognitive Diagnosis via One Batch of Early-bird Students towards Three Diagnostic Objectives

Dec 22, 2023

Abstract: Cognitive diagnosis seeks to estimate the cognitive states of students by exploring their logged practice quiz data. It plays a pivotal role in personalized learning guidance within intelligent education systems. In this paper, we focus on an important, practical, yet often underexplored task: domain-level zero-shot cognitive diagnosis (DZCD), which arises due to the absence of student practice logs in newly launched domains. Recent cross-domain diagnostic models have been demonstrated to be a promising strategy for DZCD. These methods primarily focus on how to transfer student states across domains. However, they might inadvertently incorporate non-transferable information into student representations, thereby limiting the efficacy of knowledge transfer. To tackle this, we propose Zero-1-to-3, a domain-level zero-shot cognitive diagnosis framework via one batch of early-bird students towards three diagnostic objectives. Our approach begins by pre-training a diagnosis model with dual regularizers, which decouples student states into domain-shared and domain-specific parts. The shared cognitive signals can be transferred to the target domain, enriching the cognitive priors for the new domain, which ensures the cognitive state propagation objective. Subsequently, we devise a strategy to generate simulated practice logs for cold-start students by analyzing the behavioral patterns of early-bird students, fulfilling the domain-adaptation goal. Consequently, we refine the cognitive states of cold-start students as diagnostic outcomes via virtual data, aligning with the diagnosis-oriented goal. Finally, extensive experiments on six real-world datasets highlight the efficacy of our model for DZCD and its practical application in question recommendation.
