Deyu Sun

Weakly supervised alignment and registration of MR-CT for cervical cancer radiotherapy

May 21, 2024

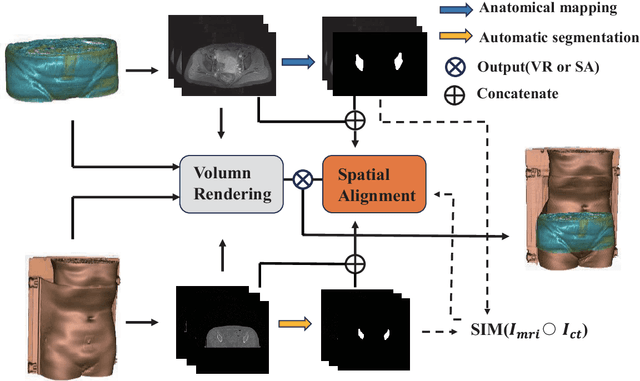

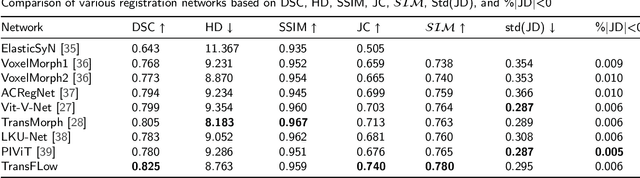

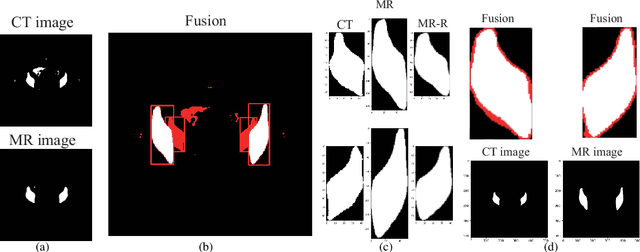

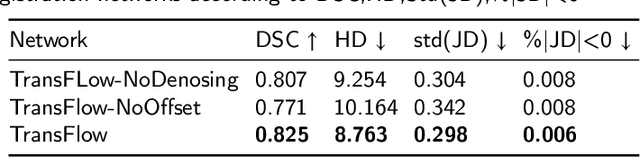

Abstract: Cervical cancer is one of the leading causes of death in women, and brachytherapy is currently the primary treatment method. Improving diagnosis and treatment planning requires precisely defining the extent of paracervical tissue invasion. Fusing the complementary information in computed tomography (CT) and magnetic resonance imaging (MRI) may help delineate that extent precisely, and registration is the first step in such fusion. However, when the multimodal images have varying depths, manual alignment is time-consuming and prone to large errors. Furthermore, differences between the modalities in the size of the region of interest (ROI) and in image shape make accurate registration difficult. In this paper, we propose a preliminary spatial alignment algorithm and a weakly supervised multimodal registration network. The spatial alignment algorithm uses the limited annotations that the doctor provides in the two modalities to automatically align multimodal images with varying depths. The aligned images are then used for weakly supervised registration, in which pyramidal features and a cost volume are used to estimate the optical flow. The results indicate that the proposed method outperforms traditional volume rendering alignment methods and registration networks on various evaluation metrics, demonstrating the effectiveness of our model for multimodal image registration.
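To make the cost-volume idea concrete, the following is a minimal NumPy sketch of a correlation cost volume between two feature maps, of the kind commonly used in pyramid-based optical flow estimation. It is an illustration under stated assumptions, not the network described in this paper: the function name `cost_volume`, the parameter `max_disp`, and the restriction to 2D feature maps are all hypothetical choices made for brevity.

```python
import numpy as np

def cost_volume(feat_fixed, feat_moving, max_disp=2):
    """Correlation cost volume between two (C, H, W) feature maps.

    Hypothetical helper for illustration only. For every displacement
    (dy, dx) in [-max_disp, max_disp]^2, it stores the channel-averaged
    product of feat_fixed[:, y, x] and feat_moving[:, y + dy, x + dx].
    Output shape: ((2 * max_disp + 1) ** 2, H, W).
    """
    _, h, w = feat_fixed.shape
    d = max_disp
    # Zero-pad the moving features so every shifted window stays in bounds.
    padded = np.pad(feat_moving, ((0, 0), (d, d), (d, d)))
    costs = []
    for dy in range(-d, d + 1):
        for dx in range(-d, d + 1):
            shifted = padded[:, d + dy : d + dy + h, d + dx : d + dx + w]
            costs.append((feat_fixed * shifted).mean(axis=0))
    return np.stack(costs)

# Usage: the moving features are the fixed features displaced by (1, 2).
rng = np.random.default_rng(0)
fixed = rng.standard_normal((32, 16, 16))
moving = np.roll(fixed, shift=(1, 2), axis=(1, 2))
vol = cost_volume(fixed, moving, max_disp=2)
```

In a pyramid setting, a volume like this would typically be computed at each feature level, with a small search range per level, and passed to a decoder that predicts the flow; those stages are outside the scope of this sketch.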
