Yifan Xue

Proactive Local-Minima-Free Robot Navigation: Blending Motion Prediction with Safe Control

Jan 15, 2026

Abstract: This work addresses the challenge of safe and efficient mobile robot navigation in complex dynamic environments with concave moving obstacles. Reactive safe controllers like Control Barrier Functions (CBFs) design obstacle avoidance strategies based only on the current states of the obstacles, risking future collisions. To alleviate this problem, we use Gaussian processes to learn barrier functions online from multimodal motion predictions of obstacles generated by neural networks trained with energy-based learning. The learned barrier functions are then fed into quadratic programs using modulated CBFs (MCBFs), a local-minimum-free version of CBFs, to achieve safe and efficient navigation. The proposed framework makes two key contributions. First, it develops a prediction-to-barrier function online learning pipeline. Second, it introduces an autonomous parameter tuning algorithm that adapts MCBFs to deforming, prediction-based barrier functions. The framework is evaluated in both simulations and real-world experiments, consistently outperforming baselines and demonstrating superior safety and efficiency in crowded dynamic environments.

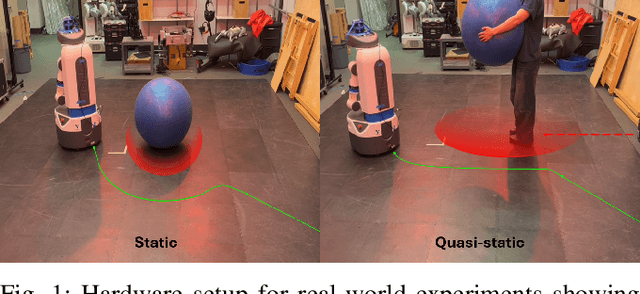

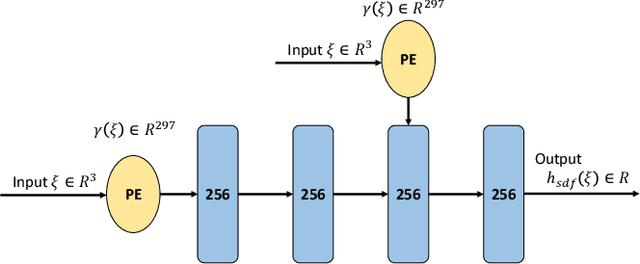

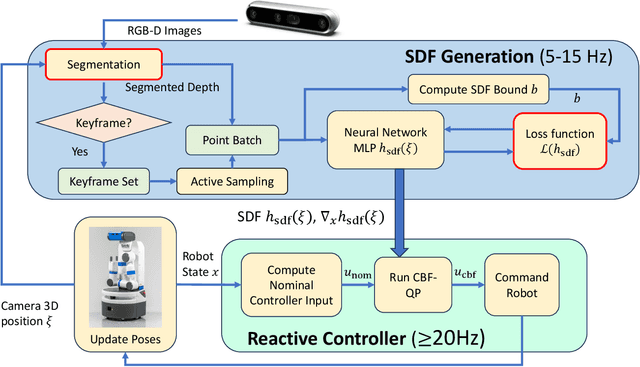

RNBF: Real-Time RGB-D Based Neural Barrier Functions for Safe Robotic Navigation

May 04, 2025

Abstract: Autonomous safe navigation in unstructured and novel environments poses significant challenges, especially when environment information can only be provided through low-cost vision sensors. Although safe reactive approaches have been proposed to ensure robot safety in complex environments, many base their theory on the assumption that the robot has prior knowledge of obstacle locations and geometries. In this paper, we present a real-time, vision-based framework that constructs continuous, first-order differentiable Signed Distance Fields (SDFs) of unknown environments without any pre-training. Our proposed method ensures full compatibility with established SDF-based reactive controllers. To achieve robust performance under practical sensing conditions, our approach explicitly accounts for noise in affordable RGB-D cameras, refining the neural SDF representation online for smoother geometry and stable gradient estimates. We validate the proposed method in simulation and real-world experiments using a Fetch robot.

Gradient Field-Based Dynamic Window Approach for Collision Avoidance in Complex Environments

Apr 04, 2025

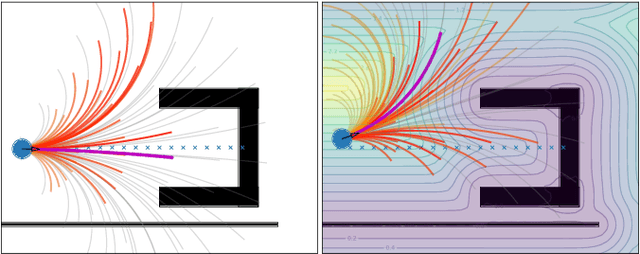

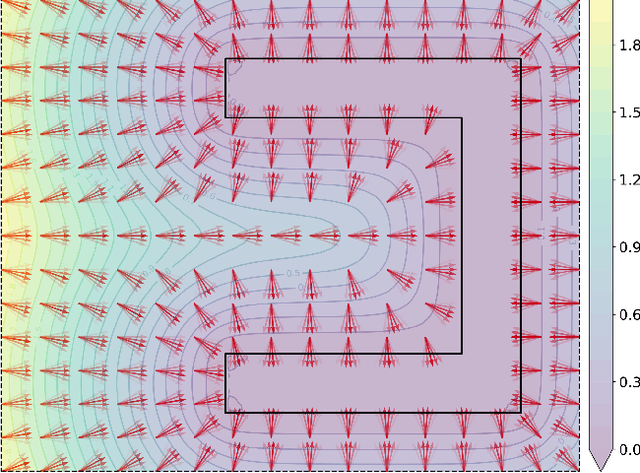

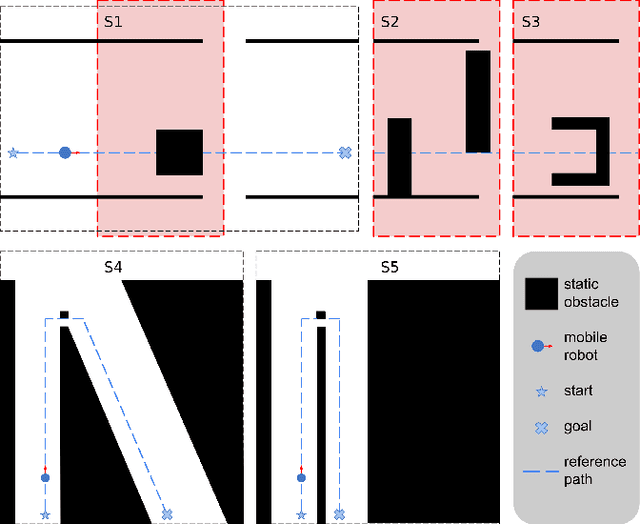

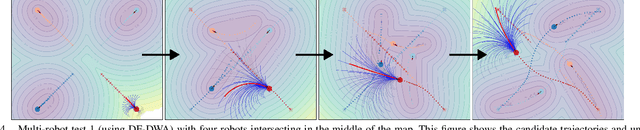

Abstract: For safe and flexible navigation in multi-robot systems, this paper presents an enhanced and predictive sampling-based trajectory planning approach for complex environments, the Gradient Field-based Dynamic Window Approach (GF-DWA). Building upon the dynamic window approach, the proposed method utilizes gradient information of obstacle distances as a new cost term to anticipate potential collisions. This enhancement enables the robot to improve awareness of obstacles, including those with non-convex shapes. The gradient field is derived from the Gaussian process distance field, which generates both the distance field and gradient field by leveraging Gaussian process regression to model the spatial structure of the environment. Through several obstacle avoidance and fleet collision avoidance scenarios, the proposed GF-DWA is shown to outperform other popular trajectory planning and control methods in terms of safety and flexibility, especially in complex environments with non-convex obstacles.

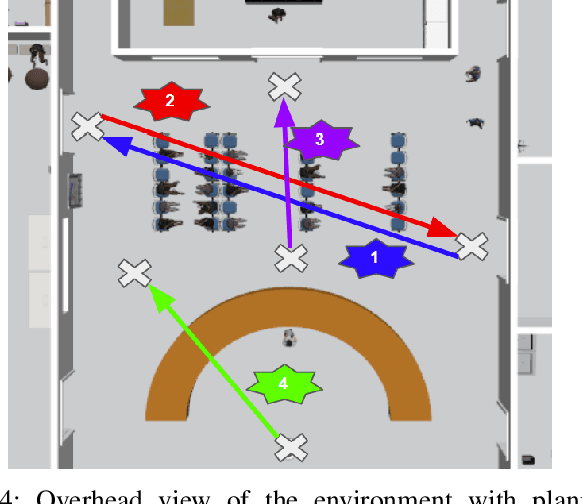

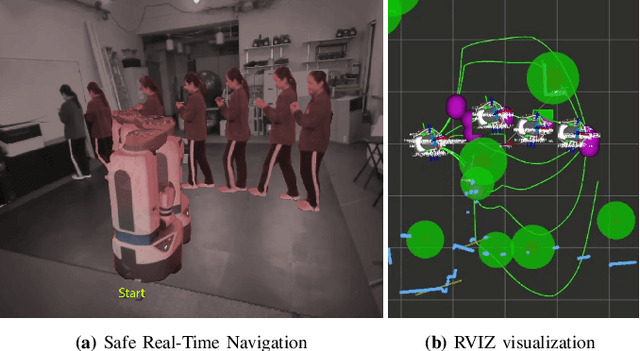

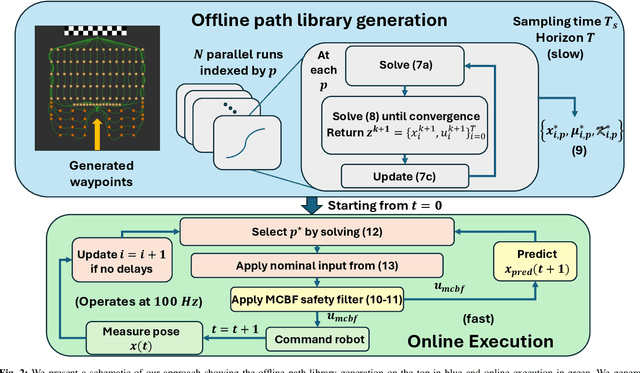

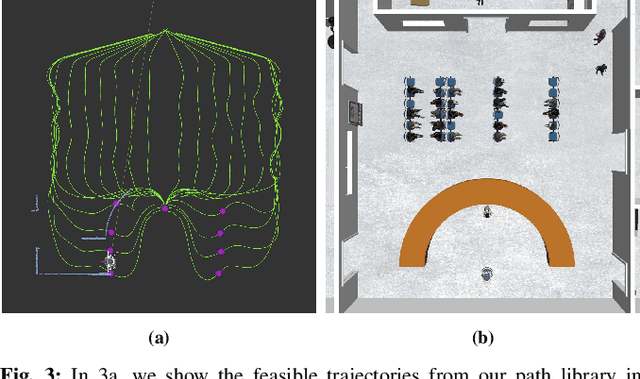

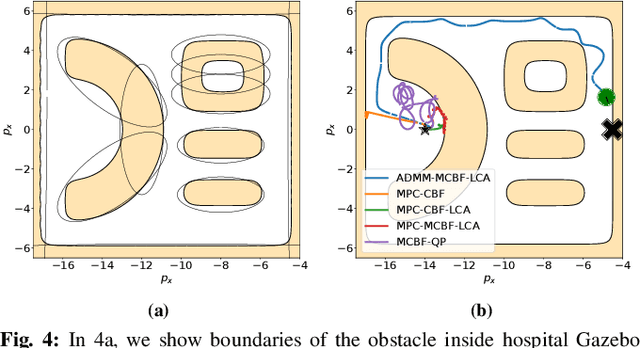

ADMM-MCBF-LCA: A Layered Control Architecture for Safe Real-Time Navigation

Mar 04, 2025

Abstract: We consider the problem of safe real-time navigation of a robot in a dynamic environment with moving obstacles of arbitrary smooth geometries and input saturation constraints. We assume that the robot detects and models nearby obstacle boundaries with a short-range sensor and that this detection is error-free. This problem presents three main challenges: i) input constraints, ii) safety, and iii) real-time computation. To tackle all three challenges, we present a layered control architecture (LCA) consisting of an offline path library generation layer, and an online path selection and safety layer. To overcome the limitations of reactive methods, our offline path library consists of feasible controllers, feedback gains, and reference trajectories. To handle computational burden and safety, we solve online path selection and generate safe inputs that run at 100 Hz. Through simulations in Gazebo and on Fetch hardware in an indoor environment, we evaluate our approach against baselines that are layered, end-to-end, or reactive. Our experiments demonstrate that among all algorithms, only our proposed LCA is able to safely complete tasks such as reaching a goal. When comparing metrics such as safety, input error, and success rate, we show that our approach generates safe and feasible inputs throughout the robot execution.

No Minima, No Collisions: Combining Modulation and Control Barrier Function Strategies for Feasible Dynamical Collision Avoidance

Feb 20, 2025

Abstract: As prominent real-time safety-critical reactive control techniques, Control Barrier Function Quadratic Programs (CBF-QPs) work for control affine systems in general but result in local minima in the generated trajectories and consequently cannot ensure convergence to the goals. In contrast, Modulation of Dynamical Systems (Mod-DSs), including normal, reference, and on-manifold Mod-DS, achieve obstacle avoidance with few and even no local minima but have trouble optimally minimizing the difference between the constrained and the unconstrained controller outputs, and their applications are limited to fully-actuated systems. We dive into the theoretical foundations of CBF-QP and Mod-DS, proving that despite their distinct origins, normal Mod-DS is a special case of CBF-QP, and reference Mod-DS's solutions are mathematically connected to those of the CBF-QP through one equation. Building on these theoretical connections between CBF-QP and Mod-DS, reference Mod-based CBF-QP and on-manifold Mod-based CBF-QP controllers are proposed to combine the strengths of the CBF-QP and Mod-DS approaches and realize local-minimum-free reactive obstacle avoidance for control affine systems in general. We validate our methods in both simulated hospital environments and real-world experiments using Ridgeback for fully-actuated systems and Fetch robots for underactuated systems. In all experiments, Mod-based CBF-QPs outperform both CBF-QPs and the optimally constraint-enforcing Mod-DS approaches we propose.
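The local-minimum behavior of a plain CBF-QP mentioned in this abstract can be illustrated with a minimal sketch. It is not the paper's controller: it assumes a single-integrator robot (velocity is the control input), one circular obstacle with barrier h(x) = ||x - c||^2 - r^2, and a linear class-K term alpha * h. With a single affine constraint, the QP that minimally modifies a nominal input has a well-known closed-form projection, so no solver is needed. All function and parameter names are hypothetical.

```python
from typing import List, Sequence, Tuple


def cbf_qp_filter(u_nom: Sequence[float], a: Sequence[float], b: float) -> List[float]:
    """Closed-form solution of: min ||u - u_nom||^2  s.t.  a . u + b >= 0."""
    margin = sum(ai * ui for ai, ui in zip(a, u_nom)) + b
    if margin >= 0.0:
        # The nominal input already satisfies the constraint.
        return list(u_nom)
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("constraint is infeasible: a == 0 and b < 0")
    # Project u_nom onto the constraint boundary a . u + b = 0.
    scale = margin / norm_sq
    return [ui - scale * ai for ui, ai in zip(u_nom, a)]


def circle_barrier(
    x: Sequence[float], center: Sequence[float], radius: float
) -> Tuple[float, List[float]]:
    """Assumed barrier h(x) = ||x - c||^2 - r^2 and its gradient 2 (x - c)."""
    d = [xi - ci for xi, ci in zip(x, center)]
    h = sum(di * di for di in d) - radius * radius
    return h, [2.0 * di for di in d]


def safe_velocity(
    x: Sequence[float],
    u_nom: Sequence[float],
    center: Sequence[float],
    radius: float,
    alpha: float = 1.0,
) -> List[float]:
    """CBF filter for the assumed single integrator x_dot = u.

    Here L_f h = 0 and L_g h = grad h, so the CBF condition is
    grad_h . u + alpha * h >= 0.
    """
    h, grad = circle_barrier(x, center, radius)
    return cbf_qp_filter(u_nom, grad, alpha * h)


if __name__ == "__main__":
    # Robot heading straight at the obstacle: the filter only slows it down.
    print(safe_velocity([-2.0, 0.0], [1.0, 0.0], [0.0, 0.0], 1.0))
    # On the boundary, the filtered velocity vanishes: a local minimum.
    print(safe_velocity([-1.0, 0.0], [1.0, 0.0], [0.0, 0.0], 1.0))
```

In this toy setting the filtered input never gains a tangential component when the nominal velocity points at the obstacle center, so the robot stalls on the boundary instead of going around. That is the kind of failure the abstract attributes to CBF-QPs.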

Diffusion Models as Network Optimizers: Explorations and Analysis

Nov 04, 2024

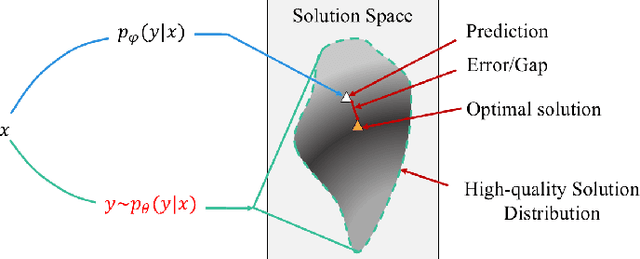

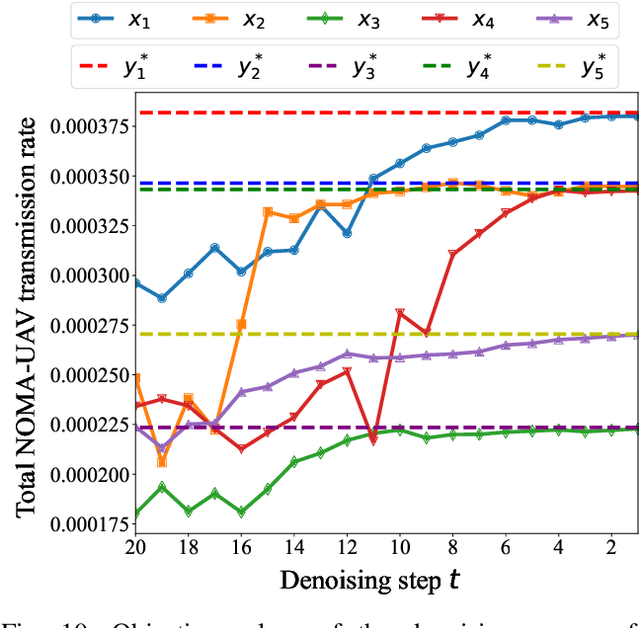

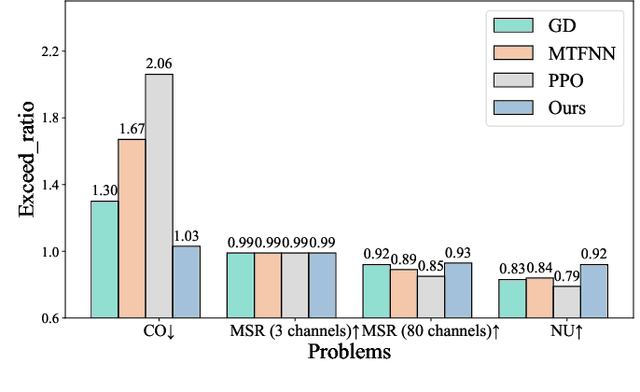

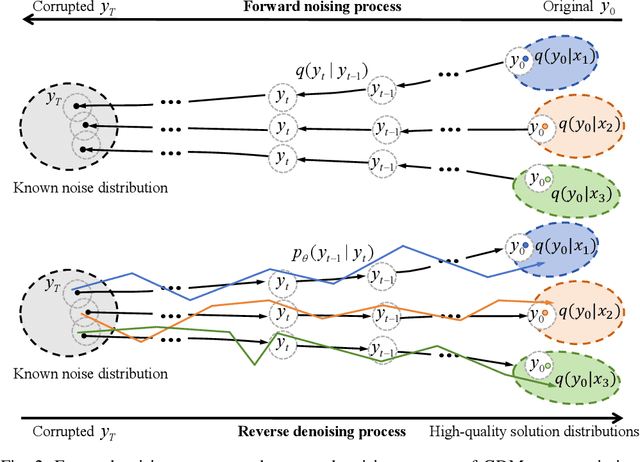

Abstract: Network optimization is a fundamental challenge in Internet of Things (IoT) networks, and its problems are often characterized by complex features that make them difficult to solve. Recently, generative diffusion models (GDMs) have emerged as a promising new approach to network optimization, with the potential to directly address these optimization problems. However, the application of GDMs in this field is still in its early stages, and there is a noticeable lack of theoretical research and empirical findings. In this study, we first explore the intrinsic characteristics of generative models. Next, we provide a concise theoretical proof and intuitive demonstration of the advantages of generative models over discriminative models in network optimization. Based on this exploration, we implement GDMs as optimizers aimed at learning high-quality solution distributions for given inputs, sampling from these distributions during inference to approximate or achieve optimal solutions. Specifically, we utilize denoising diffusion probabilistic models (DDPMs) and employ a classifier-free guidance mechanism to manage conditional guidance based on input parameters. We conduct extensive experiments across three challenging network optimization problems. By investigating various model configurations and the principles of GDMs as optimizers, we demonstrate the ability to overcome prediction errors and validate the convergence of generated solutions to optimal solutions. We provide code and data at https://github.com/qiyu3816/DiffSG.
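For readers unfamiliar with classifier-free guidance, the core step is a linear blend of the conditional and unconditional noise predictions at each denoising step. The sketch below shows one common form of that blend. It is an illustration only, not code from the DiffSG repository; the function name and the `guidance_scale` convention (1.0 reproduces the conditional prediction, 0.0 the unconditional one) are assumptions.

```python
from typing import List, Sequence


def guided_noise(
    eps_cond: Sequence[float],
    eps_uncond: Sequence[float],
    guidance_scale: float,
) -> List[float]:
    """Blend noise predictions: eps_uncond + s * (eps_cond - eps_uncond)."""
    if len(eps_cond) != len(eps_uncond):
        raise ValueError("noise predictions must have the same length")
    return [
        eu + guidance_scale * (ec - eu)
        for ec, eu in zip(eps_cond, eps_uncond)
    ]


if __name__ == "__main__":
    # A scale above 1 pushes the estimate further toward the condition.
    print(guided_noise([1.0, 2.0], [0.0, 1.0], 2.0))
```

Some references write the same blend as (1 + w) * eps_cond - w * eps_uncond, which matches the form above with s = 1 + w.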

Supervised Vector Quantized Variational Autoencoder for Learning Interpretable Global Representations

Sep 29, 2019

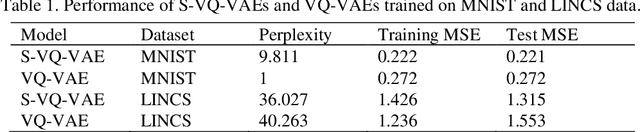

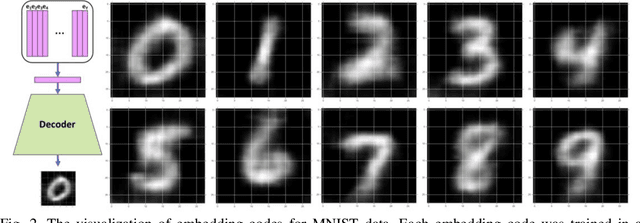

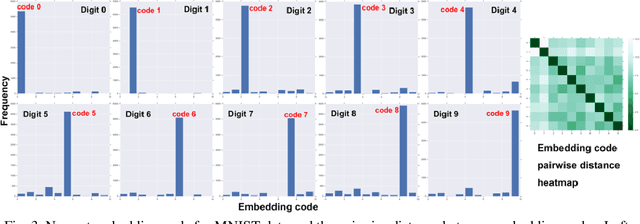

Abstract: Learning interpretable representations of data remains a central challenge in deep learning. When training a deep generative model, the observed data are often associated with certain categorical labels, and, in parallel with learning to regenerate data and simulate new data, learning an interpretable representation of each class of data is also a process of acquiring knowledge. Here, we present a novel generative model, referred to as the Supervised Vector Quantized Variational AutoEncoder (S-VQ-VAE), which combines the power of supervised and unsupervised learning to obtain a unique, interpretable global representation for each class of data. Compared with conventional generative models, our model has three key advantages: first, it is an integrative model that can simultaneously learn a feature representation for individual data points and a global representation for each class of data; second, the learning of global representations with embedding codes is guided by supervised information, which clearly defines the interpretation of each code; and third, the global representations capture crucial characteristics of different classes, which reveal similarities and differences in the statistical structures underlying different groups of data. We evaluated the utility of S-VQ-VAE on a machine learning benchmark dataset, the MNIST dataset, and on gene expression data from the Library of Integrated Network-Based Cellular Signatures (LINCS). We proved that S-VQ-VAE was able to learn the global genetic characteristics of samples perturbed by the same class of perturbagen (PCL), and further revealed the mechanism correlations between PCLs. Such knowledge is crucial for promoting new drug development for complex diseases like cancer.
