Yanhui Gu

Breaking Semantic Hegemony: Decoupling Principal and Residual Subspaces for Generalized OOD Detection

Feb 05, 2026

Abstract:While feature-based post-hoc methods have made significant strides in Out-of-Distribution (OOD) detection, we uncover a counter-intuitive Simplicity Paradox in existing state-of-the-art (SOTA) models: these models exhibit keen sensitivity in distinguishing semantically subtle OOD samples but suffer from severe Geometric Blindness when confronting structurally distinct yet semantically simple samples or high-frequency sensor noise. We attribute this phenomenon to Semantic Hegemony within the deep feature space and reveal its mathematical essence through the lens of Neural Collapse. Theoretical analysis demonstrates that the spectral concentration bias, induced by the high variance of the principal subspace, numerically masks the structural distribution shift signals that should be significant in the residual subspace. To address this issue, we propose D-KNN, a training-free, plug-and-play geometric decoupling framework. This method utilizes orthogonal decomposition to explicitly separate semantic components from structural residuals and introduces a dual-space calibration mechanism to reactivate the model's sensitivity to weak residual signals. Extensive experiments demonstrate that D-KNN effectively breaks Semantic Hegemony, establishing new SOTA performance on both CIFAR and ImageNet benchmarks. Notably, in resolving the Simplicity Paradox, it reduces the FPR95 from 31.3% to 2.3%; when addressing sensor failures such as Gaussian noise, it boosts the detection performance (AUROC) from a baseline of 79.7% to 94.9%.
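The masking effect described in this abstract can be illustrated with a small synthetic sketch. This is not the authors' D-KNN implementation: the SVD-based split, the function names (`fit_subspaces`, `knn_distance`, `demo`), the feature dimensions, and the variance scales are all assumptions chosen for illustration, and the dual-space calibration step is omitted because the abstract does not specify it. The sketch only shows that a k-nearest-neighbour distance computed in the full feature space can be dominated by high-variance principal directions, while the same distance computed in the orthogonal residual subspace exposes a small structural shift.

```python
import numpy as np

def fit_subspaces(train_feats, k_principal):
    """Split the feature space into a principal basis and its orthogonal complement."""
    mean = train_feats.mean(axis=0)
    # Rows of vt are orthonormal directions sorted by decreasing variance.
    _, _, vt = np.linalg.svd(train_feats - mean, full_matrices=True)
    return mean, vt[:k_principal], vt[k_principal:]

def knn_distance(query, bank, k):
    """Euclidean distance from each query row to its k-th nearest row in bank."""
    d = np.linalg.norm(query[:, None, :] - bank[None, :, :], axis=-1)
    return np.sort(d, axis=1)[:, k - 1]

def auroc(id_scores, ood_scores):
    """Fraction of (OOD, ID) pairs in which the OOD sample gets the higher score."""
    return float((ood_scores[:, None] > id_scores[None, :]).mean())

def demo(seed=0, dim=16, k_principal=2, k=5):
    rng = np.random.default_rng(seed)
    # Hypothetical feature model: two high-variance "semantic" directions
    # and fourteen low-variance "residual" directions.
    id_scale = np.r_[np.full(k_principal, 50.0), np.full(dim - k_principal, 0.1)]
    # OOD samples share the principal statistics but are noisier in the residual.
    ood_scale = np.r_[np.full(k_principal, 50.0), np.full(dim - k_principal, 0.5)]
    train = rng.normal(size=(500, dim)) * id_scale
    id_test = rng.normal(size=(200, dim)) * id_scale
    ood_test = rng.normal(size=(200, dim)) * ood_scale

    mean, _, residual = fit_subspaces(train, k_principal)
    project = lambda x: (x - mean) @ residual.T  # coordinates in the residual subspace

    full = auroc(knn_distance(id_test, train, k), knn_distance(ood_test, train, k))
    res = auroc(knn_distance(project(id_test), project(train), k),
                knn_distance(project(ood_test), project(train), k))
    return full, res

if __name__ == "__main__":
    full_auroc, residual_auroc = demo()
    print(f"full-space kNN AUROC:     {full_auroc:.3f}")
    print(f"residual-space kNN AUROC: {residual_auroc:.3f}")
```

In this toy setting the residual-space score separates the two groups far better than the full-space score, which is the qualitative behaviour the abstract attributes to decoupling the subspaces.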

Learning with Adaptive Prototype Manifolds for Out-of-Distribution Detection

Feb 05, 2026

Abstract:Out-of-distribution (OOD) detection is a critical task for the safe deployment of machine learning models in the real world. Existing prototype-based representation learning methods have demonstrated exceptional performance. However, we identify two fundamental flaws that universally constrain these methods: the Static Homogeneity Assumption (fixed representational resources for all classes) and the Learning-Inference Disconnect (discarding rich prototype quality knowledge at inference). These flaws fundamentally limit the model's capacity and performance. To address these issues, we propose APEX (Adaptive Prototype for eXtensive OOD Detection), a novel OOD detection framework designed via a Two-Stage Repair process to optimize the learned feature manifold. APEX introduces two key innovations to address these respective flaws: (1) an Adaptive Prototype Manifold (APM), which leverages the Minimum Description Length (MDL) principle to automatically determine the optimal prototype complexity $K_c^*$ for each class, thereby fundamentally resolving prototype collision; and (2) a Posterior-Aware OOD Scoring (PAOS) mechanism, which quantifies prototype quality (cohesion and separation) to bridge the learning-inference disconnect. Comprehensive experiments on benchmarks such as CIFAR-100 validate the superiority of our method, where APEX achieves new state-of-the-art performance.

VMF-GOS: Geometry-guided virtual Outlier Synthesis for Long-Tailed OOD Detection

Feb 05, 2026

Abstract:Out-of-Distribution (OOD) detection under long-tailed distributions is a highly challenging task because the scarcity of samples in tail classes leads to blurred decision boundaries in the feature space. Current state-of-the-art (SOTA) methods typically employ Outlier Exposure (OE) strategies, relying on large-scale real external datasets (such as 80 Million Tiny Images) to regularize the feature space. However, this dependence on external data often becomes infeasible in practical deployment due to high data acquisition costs and privacy sensitivity. To this end, we propose a novel data-free framework aimed at completely eliminating reliance on external datasets while maintaining superior detection performance. We introduce a Geometry-guided virtual Outlier Synthesis (GOS) strategy that models statistical properties using the von Mises-Fisher (vMF) distribution on a hypersphere. Specifically, we locate a low-likelihood annulus in the feature space and perform directional sampling of virtual outliers in this region. Simultaneously, we introduce a new Dual-Granularity Semantic Loss (DGS) that utilizes contrastive learning to maximize the distinction between in-distribution (ID) features and these synthesized boundary outliers. Extensive experiments on benchmarks such as CIFAR-LT demonstrate that our method outperforms SOTA approaches that utilize external real images.

DFEN: Dual Feature Equalization Network for Medical Image Segmentation

May 09, 2025

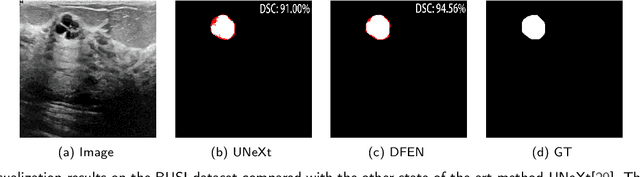

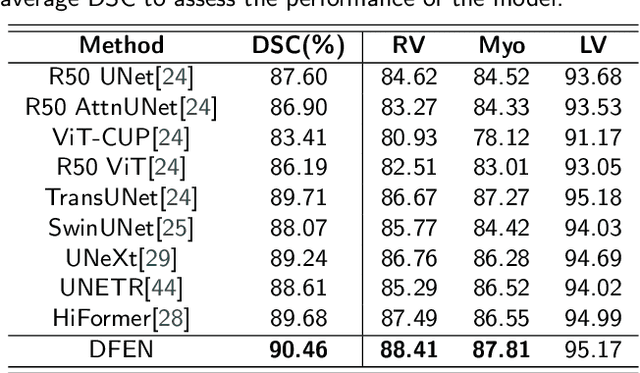

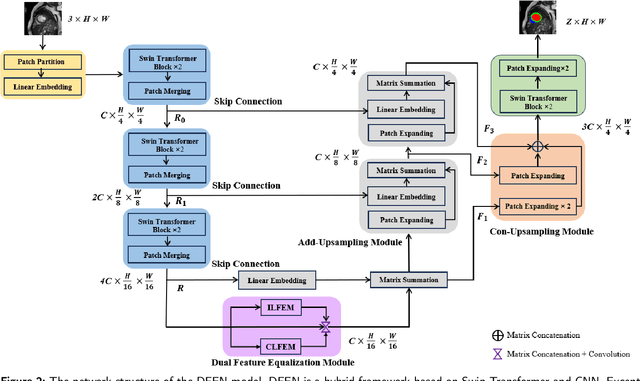

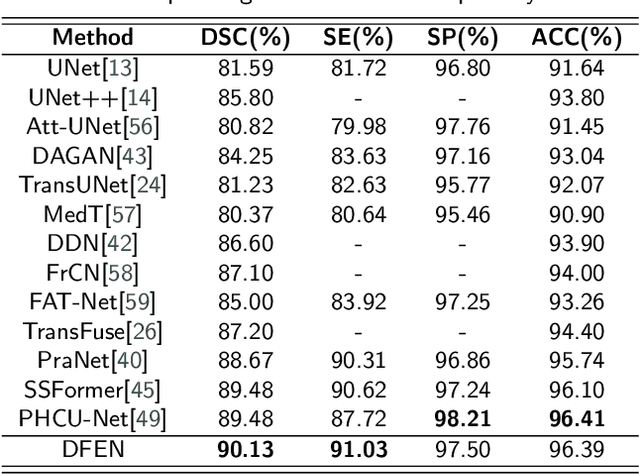

Abstract:Current methods for medical image segmentation primarily focus on extracting contextual feature information from the perspective of the whole image. While these methods have shown effective performance, none of them take into account the fact that pixels at the boundary and in regions with a low number of class pixels capture more contextual feature information from other classes, leading to pixel misclassification caused by unequal contextual feature information. In this paper, we propose a dual feature equalization network based on a hybrid architecture of Swin Transformer and Convolutional Neural Network, aiming to augment the pixel feature representations with image-level equalization feature information and class-level equalization feature information. Firstly, the image-level feature equalization module is designed to equalize the contextual information of pixels within the image. Secondly, we aggregate regions of the same class to equalize the pixel feature representations of the corresponding class with a class-level feature equalization module. Finally, the pixel feature representations are enhanced by learning weights for image-level equalization feature information and class-level equalization feature information. In addition, Swin Transformer is utilized as both the encoder and decoder, thereby bolstering the ability of the model to capture long-range dependencies and spatial correlations. We conducted extensive experiments on the Breast Ultrasound Images (BUSI), International Skin Imaging Collaboration (ISIC2017), Automated Cardiac Diagnosis Challenge (ACDC) and PH$^2$ datasets. The experimental results demonstrate that our method has achieved state-of-the-art performance. Our code is publicly available at https://github.com/JianJianYin/DFEN.

Uncertainty-Participation Context Consistency Learning for Semi-supervised Semantic Segmentation

Dec 24, 2024

Abstract:Semi-supervised semantic segmentation has attracted considerable attention for its ability to mitigate the reliance on extensive labeled data. However, existing consistency regularization methods only utilize high-certainty pixels, whose prediction confidence surpasses a fixed threshold, for training, failing to fully leverage the potential supervisory information within the network. Therefore, this paper proposes the Uncertainty-participation Context Consistency Learning (UCCL) method to explore richer supervisory signals. Specifically, we first design the semantic backpropagation update (SBU) strategy to fully exploit the knowledge from uncertain pixel regions, enabling the model to learn consistent pixel-level semantic information from those areas. Furthermore, we propose the class-aware knowledge regulation (CKR) module to facilitate the regulation of class-level semantic features across different augmented views, promoting consistent learning of class-level semantic information within the encoder. Experimental results on two public benchmarks demonstrate that our proposed method achieves state-of-the-art performance. Our code is available at https://github.com/YUKEKEJAN/UCCL.
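For context, the fixed-threshold filtering that the abstract criticizes in existing consistency regularization methods can be sketched as follows. This is a generic illustration of that baseline, not UCCL itself and not code from the linked repository; the function name `threshold_pseudo_labels`, the threshold of 0.95, and the ignore index of 255 are assumptions chosen for the example.

```python
import numpy as np

def softmax(logits, axis=0):
    """Numerically stable softmax along the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def threshold_pseudo_labels(logits, threshold=0.95, ignore_index=255):
    """Build pseudo-labels from per-pixel logits of shape (C, H, W).

    Pixels whose top class probability is below `threshold` are set to
    `ignore_index`, so they contribute no supervisory signal.
    Returns the (H, W) label map and the boolean keep mask.
    """
    probs = softmax(logits, axis=0)
    confidence = probs.max(axis=0)
    labels = probs.argmax(axis=0)
    keep = confidence >= threshold
    return np.where(keep, labels, ignore_index), keep

if __name__ == "__main__":
    # Two classes, one row of two pixels: the first is confident, the second is not.
    logits = np.array([[[5.0, 0.2]],
                       [[0.0, 0.0]]])
    labels, keep = threshold_pseudo_labels(logits)
    print(labels)       # the uncertain pixel is marked with ignore_index
    print(keep.mean())  # fraction of pixels that still supervise training
```

Every pixel masked out here is discarded from the loss, which is the unused supervisory information the abstract refers to.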

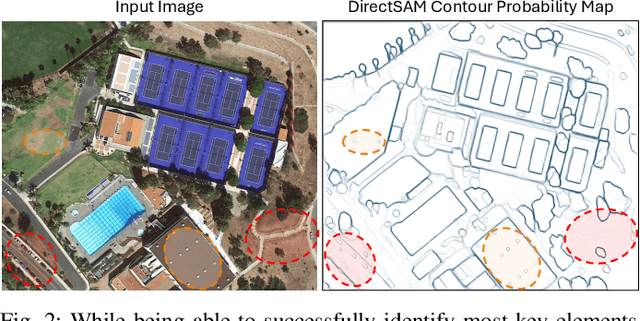

Prompting DirectSAM for Semantic Contour Extraction in Remote Sensing Images

Oct 08, 2024

Abstract:The Direct Segment Anything Model (DirectSAM) excels in class-agnostic contour extraction. In this paper, we explore its use by applying it to optical remote sensing imagery, where semantic contour extraction (such as identifying buildings, road networks, and coastlines) holds significant practical value. Those applications are currently handled by training specialized small models separately on small datasets in each domain. We introduce a foundation model derived from DirectSAM, termed DirectSAM-RS, which not only inherits the strong segmentation capability acquired from natural images, but also benefits from a large-scale dataset we created for remote sensing semantic contour extraction. This dataset comprises over 34k image-text-contour triplets, making it at least 30 times larger than any individual dataset. DirectSAM-RS integrates a prompter module: a text encoder and cross-attention layers attached to the DirectSAM architecture, which allows flexible conditioning on target class labels or referring expressions. We evaluate DirectSAM-RS in both zero-shot and fine-tuning settings, and demonstrate that it achieves state-of-the-art performance across several downstream benchmarks.

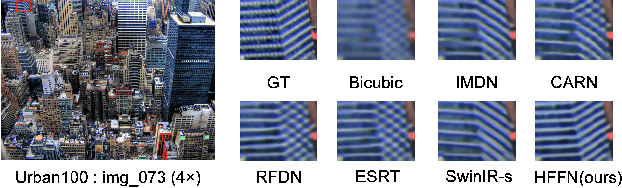

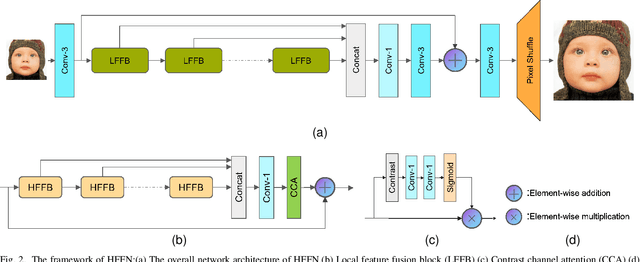

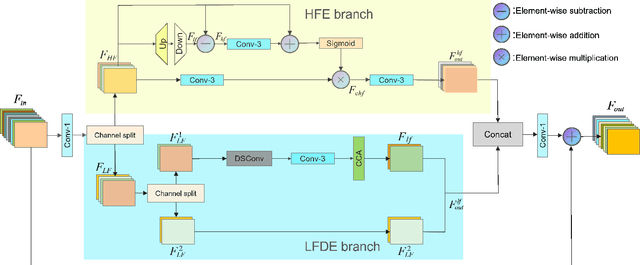

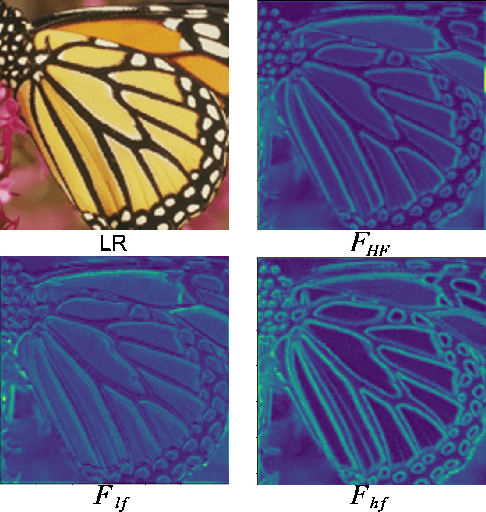

A High-Frequency Focused Network for Lightweight Single Image Super-Resolution

Mar 21, 2023

Abstract:Lightweight neural networks for single-image super-resolution (SISR) tasks have made substantial breakthroughs in recent years. Compared to low-frequency information, high-frequency detail is much more difficult to reconstruct. Most SISR models allocate equal computational resources for low-frequency and high-frequency information, which leads to redundant processing of simple low-frequency information and inadequate recovery of more challenging high-frequency information. We propose a novel High-Frequency Focused Network (HFFN) built from High-Frequency Focused Blocks (HFFBs) that selectively enhance high-frequency information while minimizing redundant feature computation of low-frequency information. The HFFB effectively allocates more computational resources to the more challenging reconstruction of high-frequency information. Moreover, we propose a Local Feature Fusion Block (LFFB) that effectively fuses features from multiple HFFBs in a local region, utilizing complementary information across layers to enhance feature representativeness and reduce artifacts in reconstructed images. We assess the efficacy of our proposed HFFN on five benchmark datasets and show that it significantly enhances the super-resolution performance of the network. Our experimental results demonstrate state-of-the-art performance in reconstructing high-frequency information while using a low number of parameters.

Multi-Semantic Interactive Learning for Object Detection

Mar 18, 2023

Abstract:Single-branch object detection methods use shared features for localization and classification, yet the shared features are not fit for the two different tasks simultaneously. Multi-branch object detection methods usually use different features for localization and classification separately, ignoring the relevance between different tasks. Therefore, we propose multi-semantic interactive learning (MSIL) to mine the semantic relevance between different branches and extract multi-semantic enhanced features of objects. MSIL first performs semantic alignment of the regression and classification branches, then merges the features of different branches by semantic fusion, and finally extracts relevant information by semantic separation and passes it back to the regression and classification branches respectively. More importantly, MSIL can be integrated into existing object detection nets as a plug-and-play component. Experiments on the MS COCO and Pascal VOC datasets show that the integration of MSIL with existing algorithms can utilize the relevant information between semantics of different tasks and achieve better performance.

Class-level Multiple Distributions Representation are Necessary for Semantic Segmentation

Mar 14, 2023

Abstract:Existing approaches focus on using class-level features to improve semantic segmentation performance. How to characterize the relationships of intra-class pixels and inter-class pixels is the key to extracting discriminative, representative class-level features. In this paper, we describe, for the first time, intra-class variations by multiple distributions. Then, multiple distributions representation learning (MDRL) is proposed to augment the pixel representations for semantic segmentation. Meanwhile, we design a class multiple distributions consistency strategy to construct discriminative multiple distribution representations of embedded pixels. Moreover, we put forward a multiple distribution semantic aggregation module to aggregate multiple distributions of the corresponding class to enhance pixel semantic information. Our approach can be seamlessly integrated into the popular segmentation frameworks FCN/PSPNet/CCNet and achieves 5.61%/1.75%/0.75% mIoU improvements on ADE20K. Extensive experiments on the Cityscapes and ADE20K datasets show that our method can bring significant performance improvement.

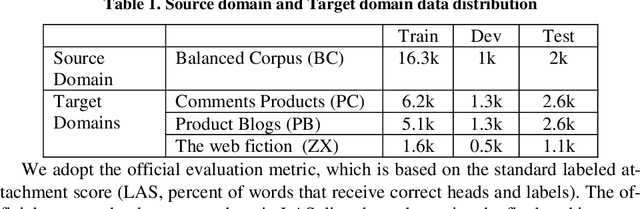

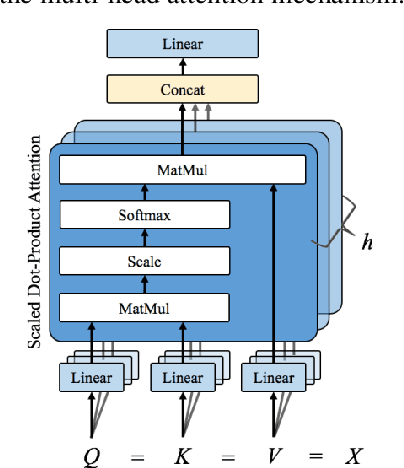

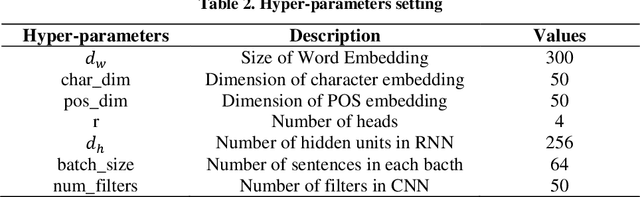

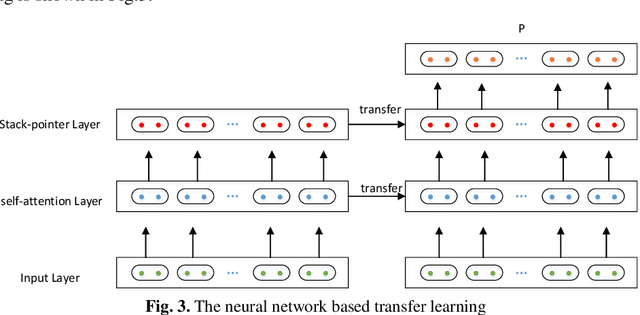

Neural Network based Deep Transfer Learning for Cross-domain Dependency Parsing

Aug 08, 2019

Abstract:In this paper, we describe the details of the neural dependency parser submitted by our team to the NLPCC 2019 Shared Task of Semi-supervised domain adaptation subtask on Cross-domain Dependency Parsing. Our system is based on the stack-pointer networks (STACKPTR). Considering the importance of context, we utilize a self-attention mechanism for the representation vectors to capture the meaning of words. In addition, to adapt to three different domains, we utilize neural network based deep transfer learning, which transfers the pre-trained partial network in the source domain to be a part of the deep neural network in the three target domains (product comments, product blogs and web fiction) respectively. Results on the three target domains demonstrate that our model performs competitively.
