Zhichao Zheng

Halt the Hallucination: Decoupling Signal and Semantic OOD Detection Based on Cascaded Early Rejection

Feb 06, 2026

Abstract:Efficient and robust Out-of-Distribution (OOD) detection is paramount for safety-critical applications. However, existing methods still execute full-scale inference on low-level statistical noise. This computational mismatch not only incurs resource waste but also induces semantic hallucination, where deep networks forcefully interpret physical anomalies as high-confidence semantic features. To address this, we propose the Cascaded Early Rejection (CER) framework, which realizes hierarchical filtering for anomaly detection via a coarse-to-fine logic. CER comprises two core modules: 1) the Structural Energy Sieve (SES), which establishes a non-parametric barrier at the network entry using the Laplacian operator to efficiently intercept physical signal anomalies; and 2) the Semantically-aware Hyperspherical Energy (SHE) detector, which decouples feature magnitude from direction in intermediate layers to identify fine-grained semantic deviations. Experimental results demonstrate that CER not only reduces computational overhead by 32% but also achieves a significant performance leap on the CIFAR-100 benchmark: the average FPR95 drastically decreases from 33.58% to 22.84%, and AUROC improves to 93.97%. Crucially, in real-world scenarios simulating sensor failures, CER exhibits performance far exceeding state-of-the-art methods. As a universal plugin, CER can be seamlessly integrated into various SOTA models to provide performance gains.
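The two ideas named in the abstract, a Laplacian-based check at the network entry and a split of feature magnitude from direction, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the mean-squared-response energy, and the `low`/`high` thresholds are all assumptions made for illustration.

```python
import math

def laplacian_energy(image):
    """Mean squared response of the 4-neighbour Laplacian over interior pixels.

    `image` is a 2D list of floats. This energy definition is an assumption;
    the abstract only states that a Laplacian operator is used.
    """
    height, width = len(image), len(image[0])
    total, count = 0.0, 0
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (image[y - 1][x] + image[y + 1][x]
                   + image[y][x - 1] + image[y][x + 1]
                   - 4.0 * image[y][x])
            total += lap * lap
            count += 1
    return total / count if count else 0.0

def early_reject(image, low, high):
    """Reject before any deep inference if the energy leaves [low, high].

    `low` and `high` are hypothetical thresholds, e.g. estimated from
    in-distribution training images.
    """
    energy = laplacian_energy(image)
    return energy < low or energy > high

def magnitude_and_direction(feature):
    """Split a feature vector into its L2 norm and its unit direction."""
    norm = math.sqrt(sum(v * v for v in feature))
    if norm == 0.0:
        return 0.0, list(feature)
    return norm, [v / norm for v in feature]

flat = [[0.5] * 4 for _ in range(4)]                              # blank sensor frame
checker = [[float((x + y) % 2) for x in range(4)] for y in range(4)]  # pixel-level noise
flat_energy = laplacian_energy(flat)
checker_energy = laplacian_energy(checker)
norm, direction = magnitude_and_direction([3.0, 4.0])
```

In this toy setup a blank frame has zero energy and a checkerboard has very high energy, so both fall outside a moderate acceptance band and would be rejected without running the backbone.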

DFEN: Dual Feature Equalization Network for Medical Image Segmentation

May 09, 2025

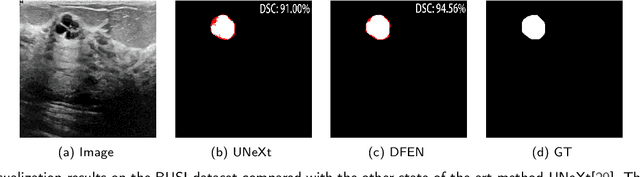

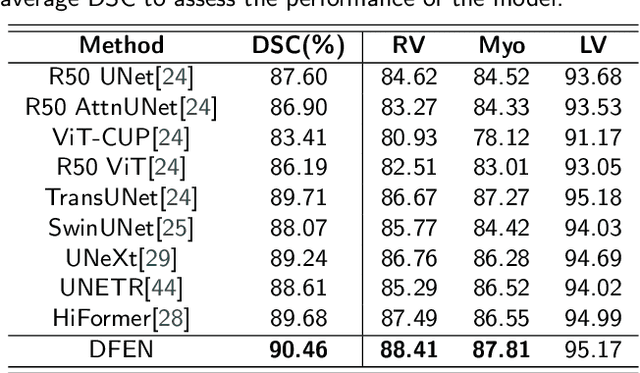

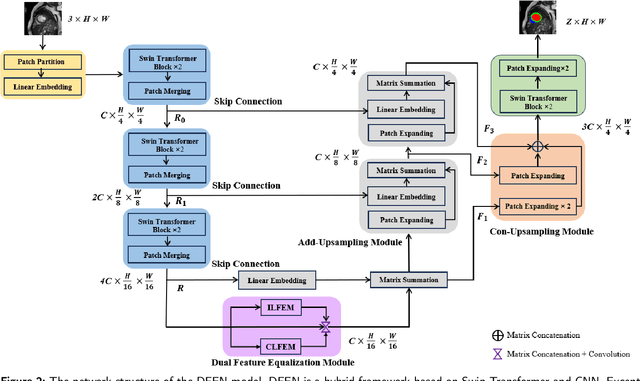

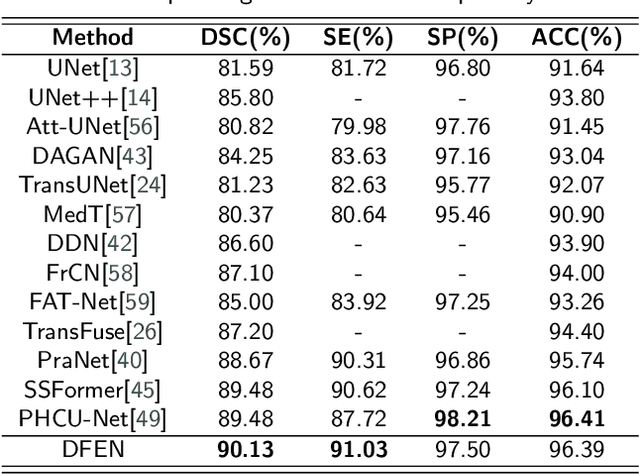

Abstract:Current methods for medical image segmentation primarily focus on extracting contextual feature information from the perspective of the whole image. While these methods have shown effective performance, none of them take into account the fact that pixels at the boundary and in regions with few class pixels capture more contextual feature information from other classes, so that unequal contextual feature information leads to pixel misclassification. In this paper, we propose a dual feature equalization network based on a hybrid architecture of Swin Transformer and Convolutional Neural Network, aiming to augment the pixel feature representations with image-level and class-level equalization feature information. Firstly, the image-level feature equalization module is designed to equalize the contextual information of pixels within the image. Secondly, we aggregate regions of the same class to equalize the pixel feature representations of the corresponding class with a class-level feature equalization module. Finally, the pixel feature representations are enhanced by learning weights for the image-level and class-level equalization feature information. In addition, Swin Transformer is utilized as both the encoder and decoder, thereby bolstering the ability of the model to capture long-range dependencies and spatial correlations. We conducted extensive experiments on the Breast Ultrasound Images (BUSI), International Skin Imaging Collaboration (ISIC2017), Automated Cardiac Diagnosis Challenge (ACDC) and PH$^2$ datasets. The experimental results demonstrate that our method has achieved state-of-the-art performance. Our code is publicly available at https://github.com/JianJianYin/DFEN.

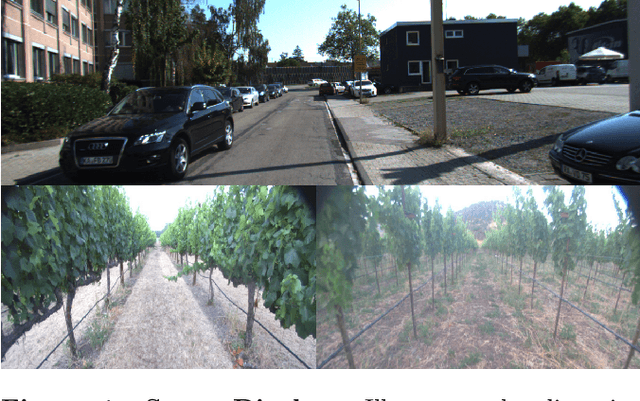

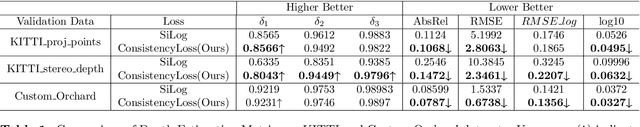

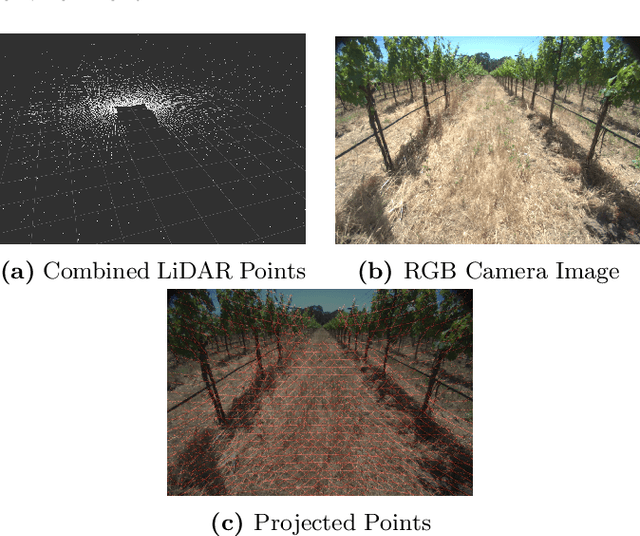

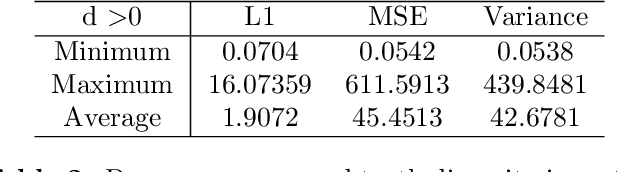

OrchardDepth: Precise Metric Depth Estimation of Orchard Scene from Monocular Camera Images

Feb 20, 2025

Abstract:Monocular depth estimation is a fundamental task in robotic perception. Recently, with the development of more accurate and robust neural network models and different types of datasets, monocular depth estimation has significantly improved in performance and efficiency. However, most of the research in this area focuses on a narrow set of domains. In particular, most outdoor benchmarks cover urban environments and target autonomous driving; they differ greatly from the orchard/vineyard environment and are therefore of little help for research in the primary industry. Therefore, we propose OrchardDepth, which fills the gap in metric depth estimation from a monocular camera in the orchard/vineyard environment. In addition, we present a new retraining method that improves the training result by monitoring the consistency regularization between dense depth maps and sparse points. Our method improves the RMSE of depth estimation in the orchard environment from 1.5337 to 0.6738, demonstrating its validity.
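To put the reported RMSE figures in context, the sketch below computes RMSE over only those pixels that have a ground-truth measurement, which is a common way to score dense predictions against sparse points, and then the relative reduction implied by the two reported values. The convention that a target of 0 or less means "no measurement" is an assumption for illustration and is not taken from the paper.

```python
import math

def rmse_on_valid(predictions, targets):
    """Root-mean-square error over entries with a valid ground-truth depth.

    Assumption: a target <= 0 marks a pixel without a sparse measurement.
    """
    squared_errors = [(p - t) ** 2
                      for p, t in zip(predictions, targets) if t > 0]
    if not squared_errors:
        raise ValueError("no valid ground-truth points")
    return math.sqrt(sum(squared_errors) / len(squared_errors))

def relative_reduction(before, after):
    """Fraction by which an error metric dropped."""
    return (before - after) / before

# Toy example: the last pixel has no ground truth and is ignored.
toy_rmse = rmse_on_valid([1.0, 2.0, 3.0, 9.0], [1.0, 2.0, 5.0, 0.0])

# Values reported in the abstract.
reduction = relative_reduction(1.5337, 0.6738)
```

With the reported values, the reduction comes out to roughly 56% of the original error.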

Uncertainty-Participation Context Consistency Learning for Semi-supervised Semantic Segmentation

Dec 24, 2024

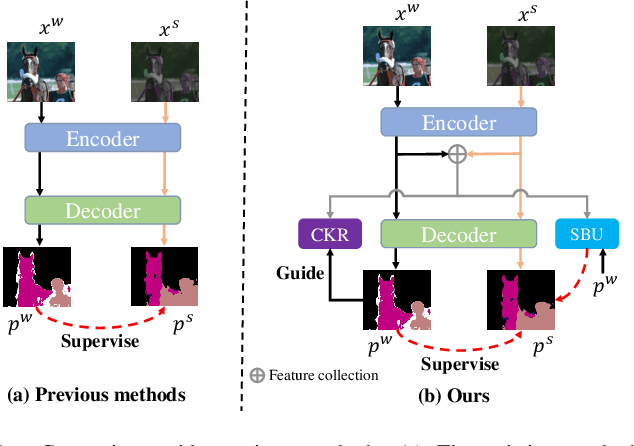

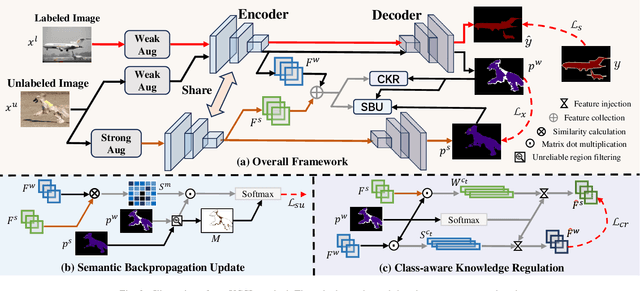

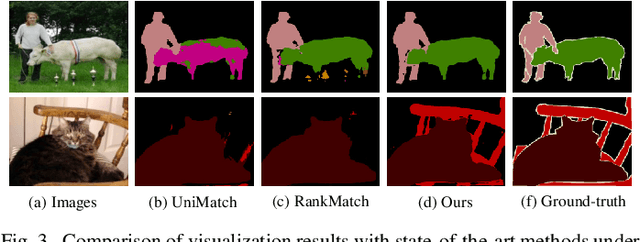

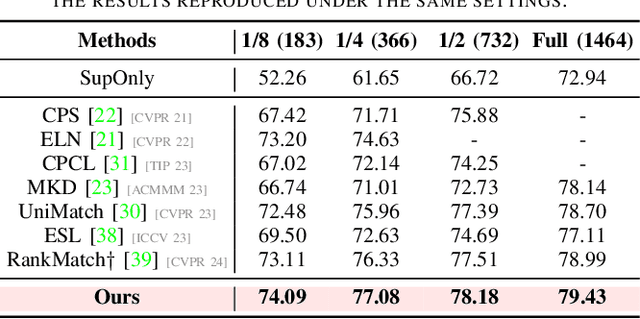

Abstract:Semi-supervised semantic segmentation has attracted considerable attention for its ability to mitigate the reliance on extensive labeled data. However, existing consistency regularization methods train only on high-certainty pixels whose prediction confidence surpasses a fixed threshold, failing to fully leverage the potential supervisory information within the network. Therefore, this paper proposes the Uncertainty-participation Context Consistency Learning (UCCL) method to explore richer supervisory signals. Specifically, we first design the semantic backpropagation update (SBU) strategy to fully exploit the knowledge from uncertain pixel regions, enabling the model to learn consistent pixel-level semantic information from those areas. Furthermore, we propose the class-aware knowledge regulation (CKR) module to facilitate the regulation of class-level semantic features across different augmented views, promoting consistent learning of class-level semantic information within the encoder. Experimental results on two public benchmarks demonstrate that our proposed method achieves state-of-the-art performance. Our code is available at https://github.com/YUKEKEJAN/UCCL.
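The fixed-threshold filtering that the abstract criticizes in existing methods can be sketched as follows. This shows the baseline behaviour only, not UCCL's SBU or CKR components; the function names and the 0.95 threshold are assumptions chosen for illustration.

```python
import math

def softmax(logits):
    """Numerically stable softmax over one pixel's class logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confident_pseudo_labels(pixel_logits, threshold=0.95):
    """Return per-pixel pseudo-labels and a mask of pixels kept for training.

    Pixels whose top class probability does not exceed `threshold` are
    masked out, so they contribute no supervisory signal.
    """
    labels, mask = [], []
    for logits in pixel_logits:
        probs = softmax(logits)
        confidence = max(probs)
        labels.append(probs.index(confidence))
        mask.append(confidence > threshold)
    return labels, mask

pixel_logits = [
    [10.0, 0.0, 0.0],  # confident: class 0
    [0.2, 0.1, 0.0],   # uncertain: nearly uniform
    [0.0, 5.0, 0.0],   # confident: class 1
]
labels, mask = confident_pseudo_labels(pixel_logits)
kept_fraction = sum(mask) / len(mask)
```

Here the nearly uniform pixel is discarded entirely, which is the kind of unused information the abstract argues should still participate in training.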

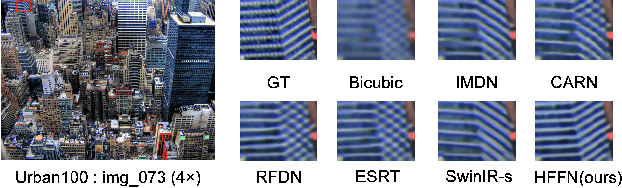

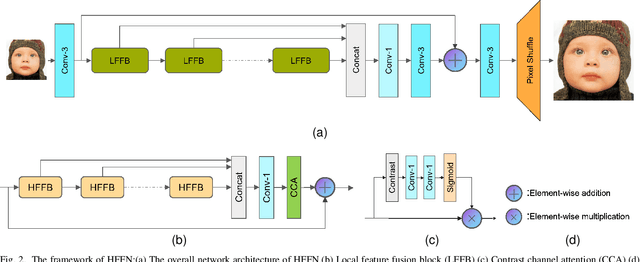

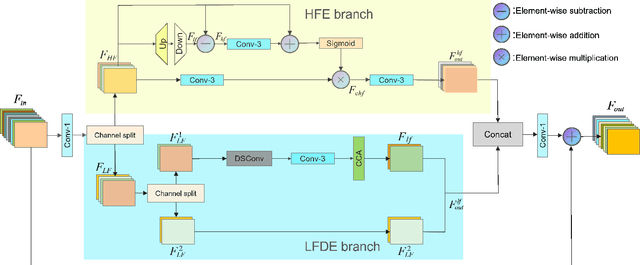

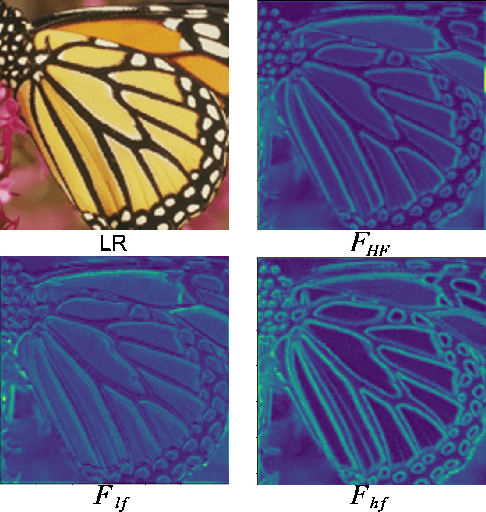

A High-Frequency Focused Network for Lightweight Single Image Super-Resolution

Mar 21, 2023

Abstract:Lightweight neural networks for single-image super-resolution (SISR) tasks have made substantial breakthroughs in recent years. Compared to low-frequency information, high-frequency detail is much more difficult to reconstruct. Most SISR models allocate equal computational resources to low-frequency and high-frequency information, which leads to redundant processing of simple low-frequency information and inadequate recovery of more challenging high-frequency information. We propose a novel High-Frequency Focused Network (HFFN) built from High-Frequency Focused Blocks (HFFBs) that selectively enhance high-frequency information while minimizing redundant feature computation of low-frequency information. The HFFB effectively allocates more computational resources to the more challenging reconstruction of high-frequency information. Moreover, we propose a Local Feature Fusion Block (LFFB) that effectively fuses features from multiple HFFBs in a local region, utilizing complementary information across layers to enhance feature representativeness and reduce artifacts in reconstructed images. We assess the efficacy of our proposed HFFN on five benchmark datasets and show that it significantly enhances the super-resolution performance of the network. Our experimental results demonstrate state-of-the-art performance in reconstructing high-frequency information while using a low number of parameters.
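The distinction between low-frequency and high-frequency content can be made concrete with a simple 1D decomposition: a box blur gives the low-frequency part and the residual gives the high-frequency part. This is a generic signal-processing sketch, not the HFFB design; the box blur, the `radius` parameter, and the `threshold` are assumptions for illustration.

```python
def box_blur(signal, radius=1):
    """Average each sample with its neighbours; windows shrink at the edges."""
    n = len(signal)
    blurred = []
    for i in range(n):
        window = signal[max(0, i - radius):min(n, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred

def split_frequencies(signal, radius=1):
    """Return (low, high) such that low[i] + high[i] reconstructs signal[i]."""
    low = box_blur(signal, radius)
    high = [s - l for s, l in zip(signal, low)]
    return low, high

def high_frequency_mask(signal, threshold, radius=1):
    """Mark positions whose high-frequency residual exceeds `threshold`.

    A mask like this is one hypothetical way to decide where extra
    computation is worth spending.
    """
    _, high = split_frequencies(signal, radius)
    return [abs(h) > threshold for h in high]

step_edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
low, high = split_frequencies(step_edge)
mask = high_frequency_mask(step_edge, threshold=0.1)
```

For the step edge above, only the two samples adjacent to the edge are flagged, while the flat regions on either side carry no high-frequency residual.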

Multi-Semantic Interactive Learning for Object Detection

Mar 18, 2023

Abstract:Single-branch object detection methods use shared features for localization and classification, yet the shared features do not fit the two different tasks simultaneously. Multi-branch object detection methods usually use different features for localization and classification separately, ignoring the relevance between different tasks. Therefore, we propose multi-semantic interactive learning (MSIL) to mine the semantic relevance between different branches and extract multi-semantic enhanced features of objects. MSIL first performs semantic alignment of the regression and classification branches, then merges the features of different branches by semantic fusion, and finally extracts relevant information by semantic separation and passes it back to the regression and classification branches respectively. More importantly, MSIL can be integrated into existing object detection nets as a plug-and-play component. Experiments on the MS COCO and Pascal VOC datasets show that the integration of MSIL with existing algorithms can utilize the relevant information between semantics of different tasks and achieve better performance.

Class-level Multiple Distributions Representation are Necessary for Semantic Segmentation

Mar 14, 2023

Abstract:Existing approaches focus on using class-level features to improve semantic segmentation performance. How to characterize the relationships of intra-class pixels and inter-class pixels is the key to extracting discriminative, representative class-level features. In this paper, we describe intra-class variations by multiple distributions for the first time. Then, multiple distributions representation learning (MDRL) is proposed to augment the pixel representations for semantic segmentation. Meanwhile, we design a class multiple distributions consistency strategy to construct discriminative multiple distribution representations of embedded pixels. Moreover, we put forward a multiple distribution semantic aggregation module to aggregate multiple distributions of the corresponding class to enhance pixel semantic information. Our approach can be seamlessly integrated into the popular segmentation frameworks FCN/PSPNet/CCNet and achieves 5.61%/1.75%/0.75% mIoU improvements on ADE20K. Extensive experiments on the Cityscapes and ADE20K datasets show that our method brings significant performance improvements.
