Xihan Li

Logic Synthesis with Generative Deep Neural Networks

Jun 07, 2024

Abstract: While deep learning has achieved significant success in various domains, its application to logic circuit design has been limited by complex constraints and strict feasibility requirements. However, a recent generative deep neural model, "Circuit Transformer", has shown promise in this area by enabling equivalence-preserving circuit transformation on a small scale. In this paper, we introduce a logic synthesis rewriting operator based on the Circuit Transformer model, named "ctrw" (Circuit Transformer Rewriting), which incorporates the following techniques: (1) a two-stage training scheme for the Circuit Transformer tailored for logic synthesis, with iterative improvement of optimality through self-improvement training; (2) integration of the Circuit Transformer with state-of-the-art rewriting techniques to address scalability issues, allowing for guided DAG-aware rewriting. Experimental results on the IWLS 2023 contest benchmark demonstrate the effectiveness of our proposed rewriting methods.

Circuit Transformer: End-to-end Circuit Design by Predicting the Next Gate

Mar 14, 2024

Abstract: Language, a prominent human ability to express through sequential symbols, has been computationally mastered by recent advances in large language models (LLMs). By predicting the next word recurrently with huge neural models, LLMs have shown unprecedented capabilities in understanding and reasoning. A circuit, as the "language" of electronic design, specifies the functionality of an electronic device by cascade connections of logic gates. Then, can circuits also be mastered by a sufficiently large "circuit model", which can conquer electronic design tasks by simply predicting the next logic gate? In this work, we take the first step to explore such possibilities. Two primary barriers impede the straightforward application of LLMs to circuits: their complex, non-sequential structure, and the intolerance of hallucination due to strict constraints (e.g., equivalence). For the first barrier, we encode a circuit as a memory-less, depth-first traversal trajectory, which allows Transformer-based neural models to better leverage its structural information, and predict the next gate on the trajectory as a circuit model. For the second barrier, we introduce an equivalence-preserving decoding process, which ensures that every token in the generated trajectory adheres to the specified equivalence constraints. Moreover, the circuit model can also be regarded as a stochastic policy to tackle optimization-oriented circuit design tasks. Experimentally, we trained a Transformer-based model of 88M parameters, named "Circuit Transformer", which demonstrates impressive performance in end-to-end logic synthesis. With Monte-Carlo tree search, Circuit Transformer significantly improves over resyn2 while retaining strict equivalence, showcasing the potential of generative AI in conquering electronic design challenges.
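To make the idea of a depth-first traversal encoding concrete, the following is a minimal sketch and not the paper's actual tokenization. It assumes a hypothetical toy representation in which a circuit is a nested tuple of `AND` and `NOT` gates over named inputs. Shared sub-circuits are simply re-expanded, which is one possible reading of "memory-less". The function names `encode`, `decode`, and `truth_table` are illustrative only.

```python
from itertools import product

# Hypothetical toy circuit format (an assumption, not the paper's format):
# a node is an input name (str), ("NOT", child), or ("AND", left, right).
ARITY = {"AND": 2, "NOT": 1}

def encode(node):
    """Pre-order depth-first traversal of the circuit into a flat token list."""
    if isinstance(node, str):
        return [node]
    op, *children = node
    tokens = [op]
    for child in children:
        tokens.extend(encode(child))
    return tokens

def decode(tokens):
    """Rebuild the nested-tuple circuit from a depth-first token sequence."""
    it = iter(tokens)
    def build():
        tok = next(it)
        if tok in ARITY:
            return (tok, *[build() for _ in range(ARITY[tok])])
        return tok
    return build()

def evaluate(node, env):
    """Evaluate the circuit for one assignment of input values."""
    if isinstance(node, str):
        return env[node]
    op, *children = node
    vals = [evaluate(c, env) for c in children]
    return all(vals) if op == "AND" else not vals[0]

def truth_table(node, inputs):
    """Outputs for every input assignment, used here as a brute-force equivalence check."""
    return [evaluate(node, dict(zip(inputs, bits)))
            for bits in product([False, True], repeat=len(inputs))]

# XOR built from AND/NOT gates only.
xor = ("NOT", ("AND",
               ("NOT", ("AND", "a", ("NOT", "b"))),
               ("NOT", ("AND", ("NOT", "a"), "b"))))
tokens = encode(xor)
rebuilt = decode(tokens)
```

In this sketch, a next-gate predictor would emit `tokens` one element at a time, and comparing `truth_table(rebuilt, ["a", "b"])` against the specification is a brute-force stand-in for the equivalence constraint that the abstract says is enforced during decoding.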

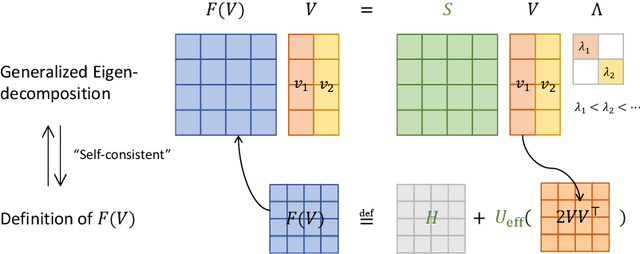

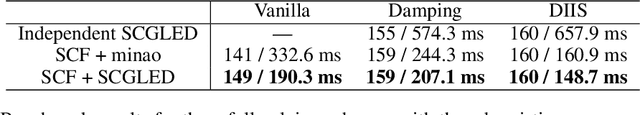

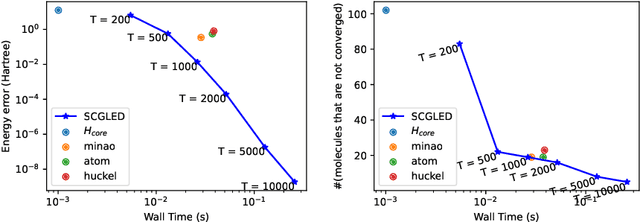

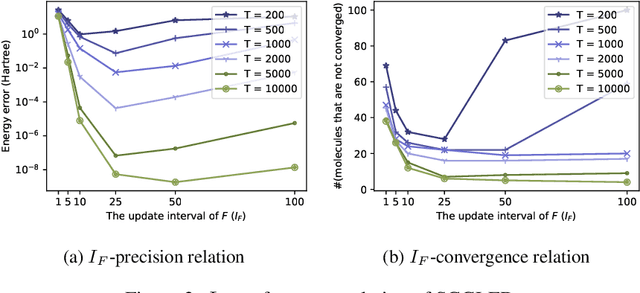

Self-consistent Gradient-like Eigen Decomposition in Solving Schrödinger Equations

Feb 03, 2022

Abstract: The Schrödinger equation is at the heart of modern quantum mechanics. Since exact solutions of the ground state are typically intractable, standard approaches approximate the Schrödinger equation as a nonlinear generalized eigenvalue problem $F(V)V = SV\Lambda$ in which $F(V)$, the matrix to be decomposed, is a function of the eigenvectors $V$ associated with its own $k$ smallest eigenvalues, leading to a "self-consistency problem". Traditional iterative methods rely heavily on high-quality initial guesses of $V$ generated via domain-specific heuristic methods based on quantum mechanics. In this work, we eliminate the need for such domain-specific heuristics by presenting a novel framework, Self-consistent Gradient-like Eigen Decomposition (SCGLED), that regards $F(V)$ as a special "online data generator", thus allowing gradient-like eigendecomposition methods from streaming $k$-PCA to approach the self-consistency of the equation from scratch in an iterative way similar to online learning. With several critical numerical improvements, SCGLED is robust to initial guesses, free of quantum-mechanics-based heuristic designs, and neat in implementation. Our experiments show that it can not only replace traditional heuristics-based initial guess methods with a large performance advantage (on average 25x more precise than the best baseline in similar wall time), but is also capable of finding highly precise solutions independently, without any traditional iterative methods.
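The self-consistency problem $F(V)V = SV\Lambda$ can be illustrated with a small numerical sketch. The code below is not SCGLED. It is a plain fixed-point iteration of the kind the abstract calls a traditional iterative method, applied to a hypothetical toy $F(V)$ whose diagonal depends on $V$. The matrices `H` and `S`, the coupling `alpha`, and all function names are assumptions made for illustration.

```python
import numpy as np

def lowest_eigpairs(F, S, k):
    """Solve F v = lambda S v for the k smallest eigenvalues (S symmetric positive definite)."""
    L = np.linalg.cholesky(S)          # S = L L^T
    Linv = np.linalg.inv(L)
    A = Linv @ F @ Linv.T              # equivalent standard symmetric eigenproblem
    w, Y = np.linalg.eigh(A)           # eigenvalues in ascending order
    return w[:k], Linv.T @ Y[:, :k]    # map eigenvectors back: V = L^{-T} Y

def toy_F(V, H, alpha):
    """Hypothetical toy F(V): H plus a diagonal term built from diag(V V^T)."""
    return H + alpha * np.diag(np.sum(V * V, axis=1))

def self_consistent_solve(H, S, k, alpha=0.1, iters=200, tol=1e-10):
    """Plain fixed-point iteration: rebuild F from V, re-solve, repeat."""
    V = np.eye(H.shape[0])[:, :k]      # deliberately naive initial guess
    for _ in range(iters):
        lam, V = lowest_eigpairs(toy_F(V, H, alpha), S, k)
        # Self-consistency residual ||F(V) V - S V Lambda|| for the updated V
        residual = np.linalg.norm(toy_F(V, H, alpha) @ V - (S @ V) * lam)
        if residual < tol:
            break
    return lam, V, residual

rng = np.random.default_rng(0)
n, k = 6, 2
P = 0.05 * rng.normal(size=(n, n))
H = np.diag(np.arange(n, dtype=float)) + (P + P.T) / 2   # symmetric, well-separated spectrum
B = rng.normal(size=(n, n))
S = np.eye(n) + 0.01 * B @ B.T                           # symmetric positive definite
lam, V, residual = self_consistent_solve(H, S, k)
```

The weak coupling (`alpha=0.1`) and the well-separated spectrum of `H` are chosen so that the naive iteration settles down. Harder problems, where such iterations depend strongly on the initial guess, are the setting the abstract targets.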

A Cooperative Multi-Agent Reinforcement Learning Framework for Resource Balancing in Complex Logistics Network

Mar 02, 2019

Abstract: Resource balancing within complex transportation networks is one of the most important problems in the real logistics domain. Traditional solutions to these problems leverage combinatorial optimization with demand and supply forecasting. However, the high complexity of transportation routes, severe uncertainty of future demand and supply, together with non-convex business constraints, make the problem extremely challenging for the traditional resource management field. In this paper, we propose a novel, sophisticated multi-agent reinforcement learning approach to address these challenges. In particular, inspired by externalities, especially the interactions among resource agents, we introduce an innovative cooperative mechanism for state and reward design, resulting in more effective and efficient transportation. Extensive experiments on a simulated ocean transportation service demonstrate that our new approach can stimulate cooperation among agents and lead to much better performance. Compared with traditional solutions based on combinatorial optimization, our approach gives rise to a significant improvement in terms of both performance and stability.
