Xiaoying Yang

ERL-MPP: Evolutionary Reinforcement Learning with Multi-head Puzzle Perception for Solving Large-scale Jigsaw Puzzles of Eroded Gaps

Apr 13, 2025

Abstract: Solving jigsaw puzzles has been extensively studied. While most existing models focus on solving either small-scale puzzles or puzzles with no gap between fragments, solving large-scale puzzles with gaps presents distinctive challenges in both image understanding and combinatorial optimization. To tackle these challenges, we propose a framework of Evolutionary Reinforcement Learning with Multi-head Puzzle Perception (ERL-MPP) to derive a better set of swapping actions for solving the puzzles. Specifically, to tackle the challenges of perceiving the puzzle with gaps, a Multi-head Puzzle Perception Network (MPPN) with a shared encoder is designed, where multiple puzzlet heads comprehensively perceive the local assembly status, and a discriminator head provides a global assessment of the puzzle. To explore the large swapping action space efficiently, an Evolutionary Reinforcement Learning (EvoRL) agent is designed, where an actor recommends a set of suitable swapping actions from a large action space based on the perceived puzzle status, a critic updates the actor using the estimated rewards and the puzzle status, and an evaluator coupled with evolutionary strategies evolves the actions aligning with the historical assembly experience. The proposed ERL-MPP is comprehensively evaluated on the JPLEG-5 dataset with large gaps and the MIT dataset with large-scale puzzles. It significantly outperforms all state-of-the-art models on both datasets.
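The notion of a swapping action can be illustrated with a minimal sketch. It assumes, purely for illustration, that a puzzle state is a permutation of fragment indices in which position `p` is solved when it holds fragment `p`; this representation and the helper names `apply_swap`, `placement_accuracy`, and `num_swap_actions` are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple


def apply_swap(state: List[int], action: Tuple[int, int]) -> List[int]:
    """Return a new state with the fragments at the two positions exchanged."""
    i, j = action
    new_state = list(state)  # copy so the input state is left untouched
    new_state[i], new_state[j] = new_state[j], new_state[i]
    return new_state


def placement_accuracy(state: List[int]) -> float:
    """Fraction of positions holding their own fragment (assumed ground truth)."""
    correct = sum(1 for pos, frag in enumerate(state) if pos == frag)
    return correct / len(state)


def num_swap_actions(n_fragments: int) -> int:
    """Number of distinct unordered pairs of positions that could be swapped."""
    return n_fragments * (n_fragments - 1) // 2


state = [2, 1, 0, 3]                 # fragments 0 and 2 are misplaced
solved = apply_swap(state, (0, 2))   # exchange positions 0 and 2
```

Under this assumed representation the number of candidate swaps grows quadratically with the number of fragments, which gives a rough sense of why the abstract describes the swapping action space as large.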

End-to-end Generative Spatial-Temporal Ultrasonic Odometry and Mapping Framework

Dec 23, 2024

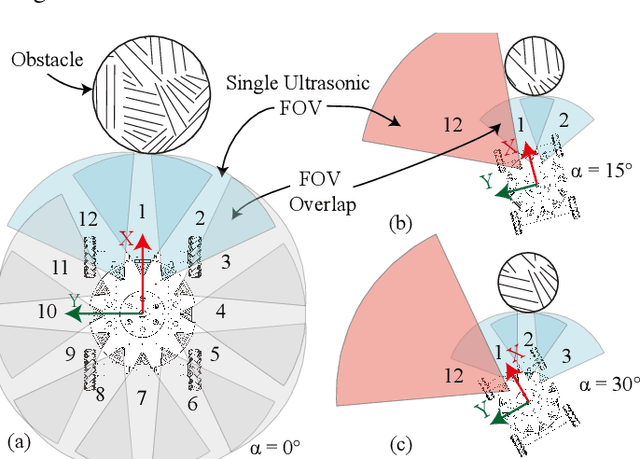

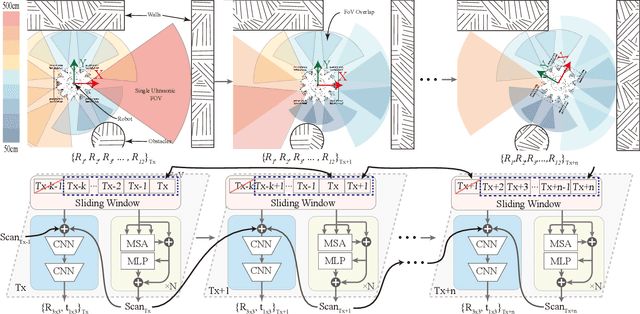

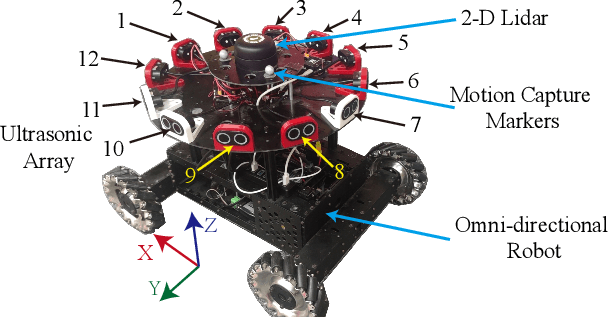

Abstract: Performing simultaneous localization and mapping (SLAM) in low-visibility conditions, such as environments filled with smoke, dust and transparent objects, has long been a challenging task. Sensors like cameras and Light Detection and Ranging (LiDAR) are significantly limited under these conditions, whereas ultrasonic sensors offer a more robust alternative. However, the low angular resolution, slow update frequency, and limited detection accuracy of ultrasonic sensors present barriers for SLAM. In this work, we propose a novel end-to-end generative ultrasonic SLAM framework. This framework employs a sensor array with overlapping fields of view, leveraging the inherently low angular resolution of ultrasonic sensors to implicitly encode spatial features in conjunction with the robot's motion. Consecutive time frame data is processed through a sliding window mechanism to capture temporal features. The spatiotemporally encoded sensor data is passed through multiple modules to generate dense scan point clouds and robot pose transformations for map construction and odometry. The main contributions of this work include a novel ultrasonic sensor array that spatiotemporally encodes the surrounding environment, and an end-to-end generative SLAM framework that overcomes the inherent defects of ultrasonic sensors. Several real-world experiments demonstrate the feasibility and robustness of the proposed framework.
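The sliding window over consecutive time frames can be sketched generically as follows. This is only an illustration of the general technique, assuming each frame is a flat list of range readings from the sensor array; the function name `sliding_windows`, the window size, and the sample values are hypothetical and do not come from the paper.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List, Sequence


def sliding_windows(
    frames: Iterable[Sequence[float]], window_size: int
) -> Iterator[List[List[float]]]:
    """Yield overlapping windows of the most recent `window_size` frames."""
    if window_size < 1:
        raise ValueError("window_size must be at least 1")
    buffer: Deque[List[float]] = deque(maxlen=window_size)
    for frame in frames:
        buffer.append(list(frame))  # the oldest frame drops out automatically
        if len(buffer) == window_size:
            # Copy the frames so later appends do not alter a yielded window.
            yield [list(f) for f in buffer]


# Hypothetical readings from a two-sensor array over four time steps.
frames = [[1.0, 2.0], [1.1, 2.1], [1.2, 2.2], [1.3, 2.3]]
windows = list(sliding_windows(frames, window_size=3))
```

Each yielded window stacks several consecutive frames, so a downstream model would see how the readings change over time rather than a single snapshot.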

Scale Optimization Using Evolutionary Reinforcement Learning for Object Detection on Drone Imagery

Dec 23, 2023

Abstract: Object detection in aerial imagery presents a significant challenge due to large scale variations among objects. This paper proposes an evolutionary reinforcement learning agent, integrated within a coarse-to-fine object detection framework, to optimize the scale for more effective detection of objects in such images. Specifically, a set of patches potentially containing objects are first generated. A set of rewards measuring the localization accuracy, the accuracy of predicted labels, and the scale consistency among nearby patches are designed in the agent to guide the scale optimization. The proposed scale-consistency reward ensures similar scales for neighboring objects of the same category. Furthermore, a spatial-semantic attention mechanism is designed to exploit the spatial semantic relations between patches. The agent employs the proximal policy optimization strategy in conjunction with the evolutionary strategy, effectively utilizing both the current patch status and historical experience embedded in the agent. The proposed model is compared with state-of-the-art methods on two benchmark datasets for object detection on drone imagery. It significantly outperforms all the compared methods.
