Wil Thomason

Department of Computer Science, Rice University

AORRTC: Almost-Surely Asymptotically Optimal Planning with RRT-Connect

May 15, 2025

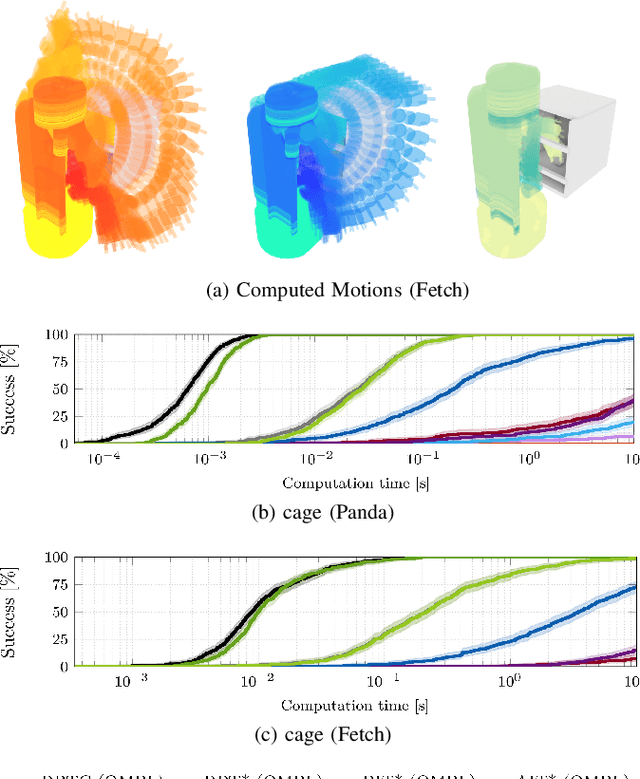

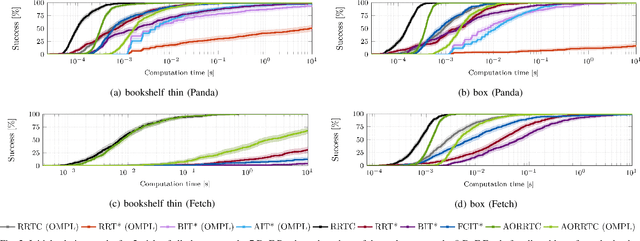

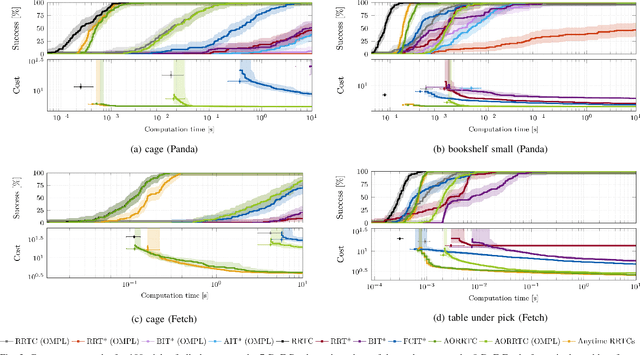

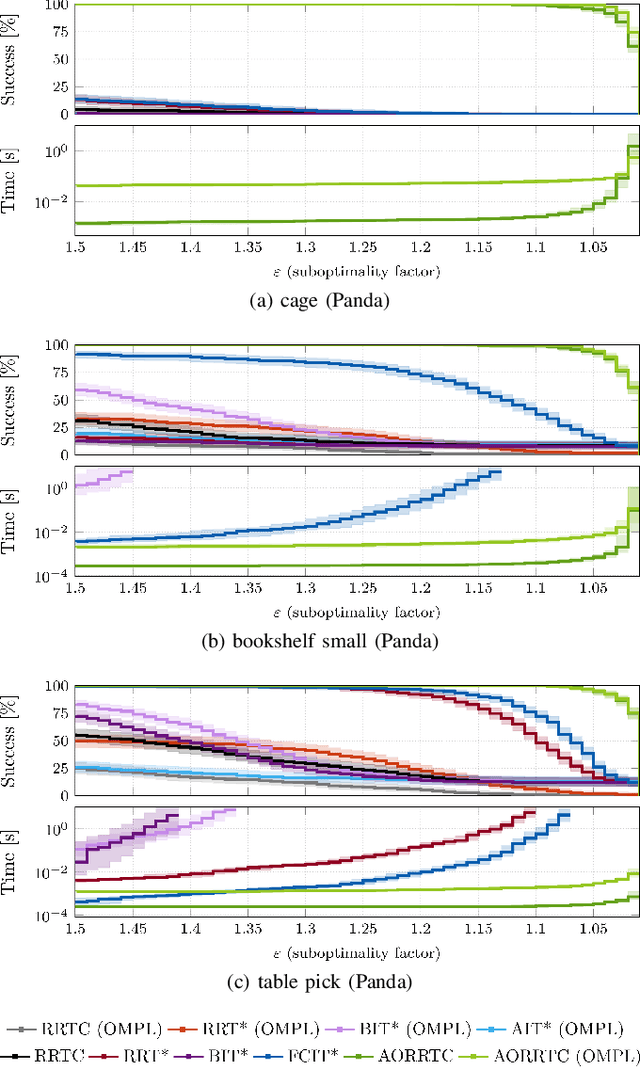

Abstract:Finding high-quality solutions quickly is an important objective in motion planning. This is especially true for high-degree-of-freedom robots. Satisficing planners have traditionally found feasible solutions quickly but provide no guarantees on their optimality, while almost-surely asymptotically optimal (a.s.a.o.) planners have probabilistic guarantees on their convergence towards an optimal solution but are more computationally expensive. This paper uses the AO-x meta-algorithm to extend the satisficing RRT-Connect planner to optimal planning. The resulting Asymptotically Optimal RRT-Connect (AORRTC) finds initial solutions in times similar to RRT-Connect and uses any additional planning time to converge towards the optimal solution in an anytime manner. It is proven to be probabilistically complete and a.s.a.o. AORRTC was tested with the Panda (7 DoF) and Fetch (8 DoF) robotic arms on the MotionBenchMaker dataset. These experiments show that AORRTC finds initial solutions as fast as RRT-Connect and faster than the tested state-of-the-art a.s.a.o. algorithms while converging to better solutions faster. AORRTC finds solutions to difficult high-DoF planning problems in milliseconds where the other a.s.a.o. planners could not consistently find solutions in seconds. This performance was demonstrated both with and without single instruction/multiple data (SIMD) acceleration.
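The anytime behaviour described in this abstract can be illustrated with a small sketch. The abstract does not spell out how AO-x or AORRTC work internally, so the code below only shows the general pattern of re-running a satisficing planner under a shrinking cost bound. The names `toy_satisficing_planner` and `anytime_plan` are hypothetical, the "planner" is a random via-point sampler standing in for RRT-Connect, and the workspace is an obstacle-free unit square.

```python
import math
import random


def path_cost(path):
    """Sum of Euclidean segment lengths along a list of 2D points."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def toy_satisficing_planner(start, goal, cost_bound, rng, max_tries=1000):
    """Hypothetical stand-in for a satisficing planner such as RRT-Connect.

    Returns the first start -> via -> goal path whose cost is below
    cost_bound, or None if no such path is found within max_tries samples.
    """
    for _ in range(max_tries):
        via = (rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0))
        path = [start, via, goal]
        if path_cost(path) < cost_bound:
            return path
    return None


def anytime_plan(start, goal, iterations, seed=0):
    """Re-run the satisficing planner, tightening the cost bound each time.

    This is an assumed, simplified anytime loop, not the paper's algorithm.
    """
    rng = random.Random(seed)
    best_path, best_cost = None, math.inf
    history = []  # cost of each accepted solution, in order
    for _ in range(iterations):
        path = toy_satisficing_planner(start, goal, best_cost, rng)
        if path is None:
            break  # the planner could not beat the current bound
        best_path, best_cost = path, path_cost(path)
        history.append(best_cost)
    return best_path, history


if __name__ == "__main__":
    best, costs = anytime_plan((0.1, 0.1), (0.9, 0.9), iterations=20)
    print(f"solutions found: {len(costs)}, final cost: {costs[-1]:.4f}")
```

The first call runs with an infinite bound, so it returns as quickly as the underlying planner can; every later call must beat the best cost so far, which is why the recorded costs can only decrease.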

Nearest-Neighbourless Asymptotically Optimal Motion Planning with Fully Connected Informed Trees (FCIT*)

Nov 26, 2024

Abstract:Improving the performance of motion planning algorithms for high-degree-of-freedom robots usually requires reducing the cost or frequency of computationally expensive operations. Traditionally, and especially for asymptotically optimal sampling-based motion planners, the most expensive operations are local motion validation and querying the nearest neighbours of a configuration. Recent advances have significantly reduced the cost of motion validation by using single instruction/multiple data (SIMD) parallelism to improve solution times for satisficing motion planning problems. These advances have not yet been applied to asymptotically optimal motion planning. This paper presents Fully Connected Informed Trees (FCIT*), the first fully connected, informed, anytime almost-surely asymptotically optimal (ASAO) algorithm. FCIT* exploits the radically reduced cost of edge evaluation via SIMD parallelism to build and search fully connected graphs. This removes the need for nearest-neighbours structures, which are a dominant cost for many sampling-based motion planners, and allows it to find initial solutions faster than state-of-the-art ASAO (VAMP, OMPL) and satisficing (OMPL) algorithms on the MotionBenchMaker dataset while converging towards optimal plans in an anytime manner.
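To make the idea of searching a fully connected graph without a nearest-neighbours structure concrete, here is a minimal sketch. It is not FCIT*: it is neither informed nor SIMD-accelerated, and it uses plain Dijkstra on a 2D toy problem with one circular obstacle. The function names `segment_hits_circle` and `shortest_path_complete_graph` are hypothetical. Every expanded vertex simply considers an edge to every other vertex, and the edge is collision-checked only when it would improve the target's cost.

```python
import heapq
import math


def segment_hits_circle(a, b, center, radius):
    """True if segment a-b comes within `radius` of `center`."""
    ax, ay = a
    bx, by = b
    cx, cy = center
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        t = 0.0
    else:
        # Parameter of the closest point on the segment, clamped to [0, 1].
        t = max(0.0, min(1.0, ((cx - ax) * dx + (cy - ay) * dy) / length_sq))
    px, py = ax + t * dx, ay + t * dy
    return math.hypot(px - cx, py - cy) <= radius


def shortest_path_complete_graph(points, center, radius):
    """Dijkstra from points[0] to points[-1] over the implicit complete graph.

    No nearest-neighbour query is made: each vertex is a candidate
    neighbour of every other vertex.
    """
    n = len(points)
    dist = [math.inf] * n
    parent = [None] * n
    done = [False] * n
    dist[0] = 0.0
    queue = [(0.0, 0)]
    while queue:
        d, u = heapq.heappop(queue)
        if done[u]:
            continue
        done[u] = True
        if u == n - 1:
            break
        for v in range(n):
            if done[v]:
                continue
            nd = d + math.dist(points[u], points[v])
            # Check the cheap cost test first, the collision test second.
            if nd < dist[v] and not segment_hits_circle(
                points[u], points[v], center, radius
            ):
                dist[v] = nd
                parent[v] = u
                heapq.heappush(queue, (nd, v))
    if math.isinf(dist[n - 1]):
        return None, math.inf
    path, node = [], n - 1
    while node is not None:
        path.append(points[node])
        node = parent[node]
    return path[::-1], dist[n - 1]


if __name__ == "__main__":
    pts = [(0.1, 0.5), (0.5, 0.8), (0.5, 0.2), (0.9, 0.5)]
    path, cost = shortest_path_complete_graph(pts, (0.5, 0.5), 0.2)
    print(path, round(cost, 6))
```

The sketch does quadratic work in the number of samples, which is only attractive when individual edge checks are very cheap; that is the trade-off the abstract attributes to SIMD-accelerated edge evaluation.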

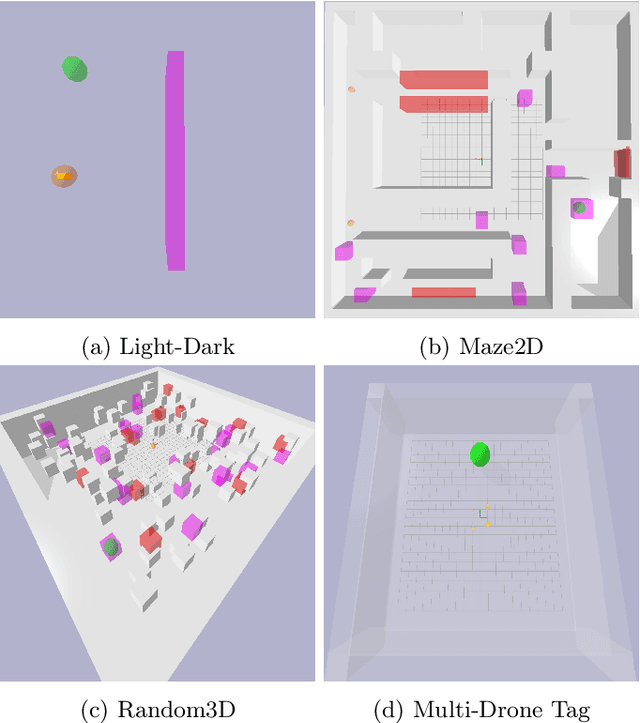

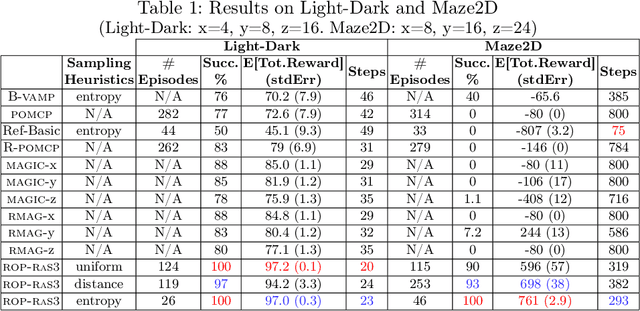

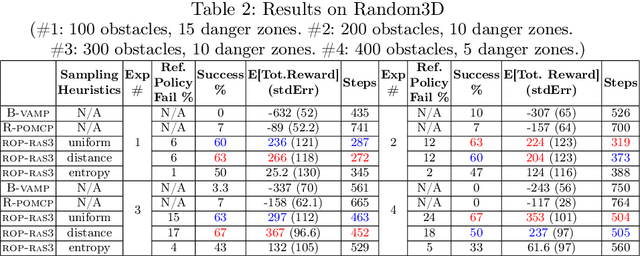

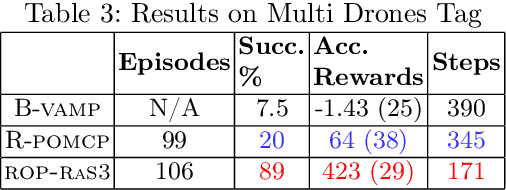

Scaling Long-Horizon Online POMDP Planning via Rapid State Space Sampling

Nov 11, 2024

Abstract:Partially Observable Markov Decision Processes (POMDPs) are a general and principled framework for motion planning under uncertainty. Despite tremendous improvement in the scalability of POMDP solvers, long-horizon POMDPs (e.g., $\geq15$ steps) remain difficult to solve. This paper proposes a new approximate online POMDP solver, called Reference-Based Online POMDP Planning via Rapid State Space Sampling (ROP-RaS3). ROP-RaS3 uses novel, extremely fast sampling-based motion planning techniques to sample the state space and generate a diverse set of macro actions online, which are then used to bias belief-space sampling and infer high-quality policies without requiring exhaustive enumeration of the action space -- a fundamental constraint for modern online POMDP solvers. ROP-RaS3 is evaluated on various long-horizon POMDPs, including a problem with a planning horizon of more than 100 steps and a problem with a 15-dimensional state space that requires more than 20 lookahead steps. In all of these problems, ROP-RaS3 substantially outperforms other state-of-the-art methods, by up to several fold.

Collision-Affording Point Trees: SIMD-Amenable Nearest Neighbors for Fast Collision Checking

Jun 04, 2024

Abstract:Motion planning against sensor data is often a critical bottleneck in real-time robot control. For sampling-based motion planners, which are effective for high-dimensional systems such as manipulators, the most time-intensive component is collision checking. We present a novel spatial data structure, the collision-affording point tree (CAPT): an exact representation of point clouds that accelerates collision-checking queries between robots and point clouds by an order of magnitude, with an average query time of less than 10 nanoseconds on 3D scenes comprising thousands of points. With the CAPT, sampling-based planners can generate valid, high-quality paths in under a millisecond, with total end-to-end computation time faster than 60 FPS, on a single thread of a consumer-grade CPU. We also present a point cloud filtering algorithm, based on space-filling curves, which reduces the number of points in a point cloud while preserving structure. Our approach enables robots to plan at real-time speeds in sensed environments, opening up potential uses of planning for high-dimensional systems in dynamic, changing, and unmodeled environments.

Motions in Microseconds via Vectorized Sampling-Based Planning

Sep 28, 2023

Abstract:Modern sampling-based motion planning algorithms typically take between hundreds of milliseconds and dozens of seconds to find collision-free motions for high degree-of-freedom problems. This paper presents performance improvements of more than 500x over the state-of-the-art, bringing planning times into the range of microseconds and solution rates into the range of kilohertz, without specialized hardware. Our key insight is how to exploit fine-grained parallelism within sampling-based planners, providing generality-preserving algorithmic improvements to any such planner and significantly accelerating critical subroutines, such as forward kinematics and collision checking. We demonstrate our approach over a diverse set of challenging, realistic problems for complex robots ranging from 7 to 14 degrees-of-freedom. Moreover, we show that our approach does not require high-power hardware by also evaluating on a low-power single-board computer. The planning speeds demonstrated are fast enough to reside in the range of control frequencies and open up new avenues of motion planning research.

Stochastic Implicit Neural Signed Distance Functions for Safe Motion Planning under Sensing Uncertainty

Sep 28, 2023

Abstract:Motion planning under sensing uncertainty is critical for robots in unstructured environments to guarantee safety for both the robot and any nearby humans. Most work on planning under uncertainty does not scale to high-dimensional robots such as manipulators, assumes simplified geometry of the robot or environment, or requires per-object knowledge of noise. Instead, we propose a method that directly models sensor-specific aleatoric uncertainty to find safe motions for high-dimensional systems in complex environments, without exact knowledge of environment geometry. We combine a novel implicit neural model of stochastic signed distance functions with a hierarchical optimization-based motion planner to plan low-risk motions without sacrificing path quality. Our method also explicitly bounds the risk of the path, offering trustworthiness. We empirically validate that our method produces safe motions and accurate risk bounds and is safer than baseline approaches.

Object Reconfiguration with Simulation-Derived Feasible Actions

Feb 27, 2023

Abstract:3D object reconfiguration encompasses common robot manipulation tasks in which a set of objects must be moved through a series of physically feasible state changes into a desired final configuration. Object reconfiguration is challenging to solve in general, as it requires efficient reasoning about environment physics that determine action validity. This information is typically manually encoded in an explicit transition system. Constructing these explicit encodings is tedious and error-prone, and is often a bottleneck for planner use. In this work, we explore embedding a physics simulator within a motion planner to implicitly discover and specify the valid actions from any state, removing the need for manual specification of action semantics. Our experiments demonstrate that the resulting simulation-based planner can effectively produce physically valid rearrangement trajectories for a range of 3D object reconfiguration problems without requiring more than an environment description and start and goal arrangements.

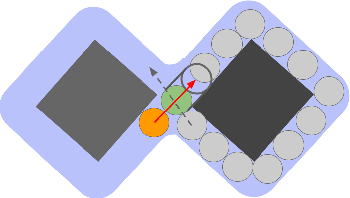

Task and Motion Informed Trees: Almost-Surely Asymptotically Optimal Integrated Task and Motion Planning

Oct 17, 2022

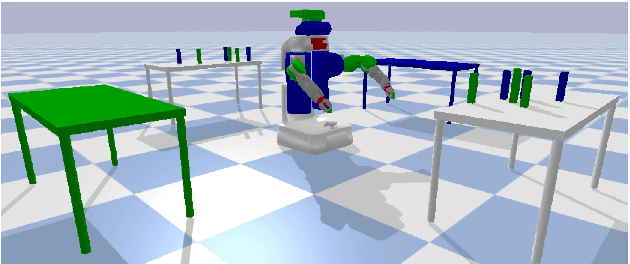

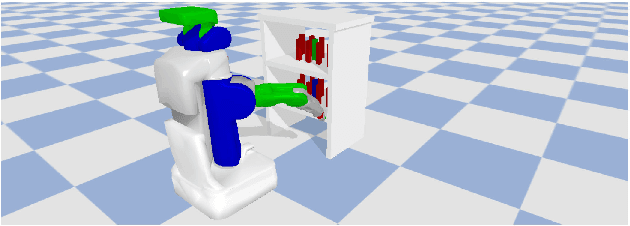

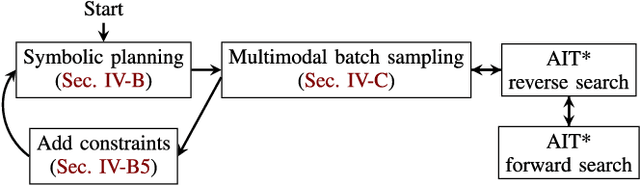

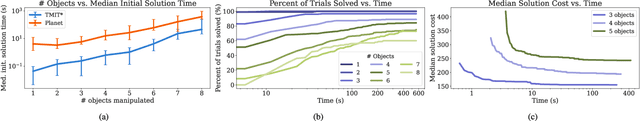

Abstract:High-level autonomy requires discrete and continuous reasoning to decide both what actions to take and how to execute them. Integrated Task and Motion Planning (TMP) algorithms solve these hybrid problems jointly to consider constraints between the discrete symbolic actions (i.e., the task plan) and their continuous geometric realization (i.e., motion plans). This joint approach solves more difficult problems than approaches that address the task and motion subproblems independently. TMP algorithms combine and extend results from both task and motion planning. TMP has mainly focused on computational performance and completeness and less on solution optimality. Optimal TMP is difficult because the independent optima of the subproblems may not be the optimal integrated solution, which can only be found by jointly optimizing both plans. This paper presents Task and Motion Informed Trees (TMIT*), an optimal TMP algorithm that combines results from makespan-optimal task planning and almost-surely asymptotically optimal motion planning. TMIT* interleaves asymmetric forward and reverse searches to delay computationally expensive operations until necessary and perform an efficient informed search directly in the problem's hybrid state space. This allows it to solve problems quickly and then converge towards the optimal solution with additional computational time, as demonstrated on the evaluated robotic-manipulation benchmark problems.

* 9 pages, 5 figures. Accepted to IEEE RA-L and IROS 2022

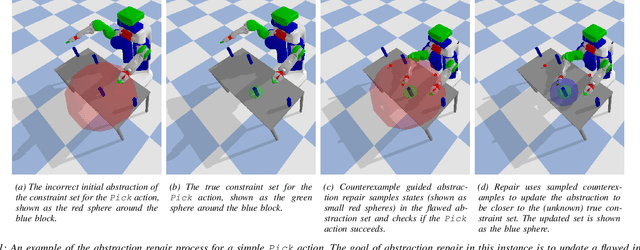

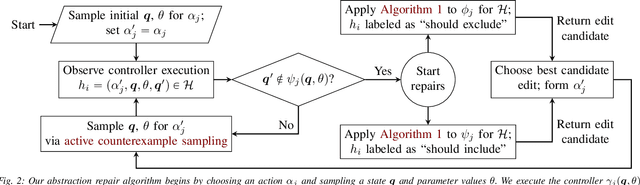

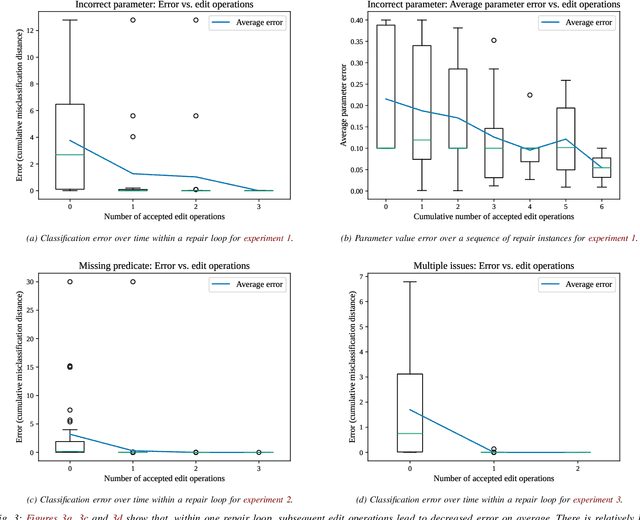

Counterexample-Guided Repair for Symbolic-Geometric Action Abstractions

May 13, 2021

Abstract:Integrated Task and Motion Planning (TMP) provides a promising class of approaches for solving robot planning problems with intricate symbolic and geometric constraints. However, the practical usefulness of TMP planners is limited by their need for symbolic abstractions of robot actions, which are difficult to construct even for experts. We propose an approach to automatically construct and continuously improve a symbolic abstraction of a robot action via observations of the robot performing the action. This approach, called automatic abstraction repair, allows symbolic abstractions to be initially incorrect or incomplete and converge toward a correct model over time. Abstraction repair uses constrained polynomial zonotopes (CPZs), an efficient non-convex set representation, to model predicates over joint symbolic and geometric state, and performs an optimizing search over symbolic edit operations to predicate formulae to improve the correspondence of a symbolic abstraction to the behavior of a physical robot controller. In this work, we describe the aforementioned predicate model, introduce the symbolic-geometric abstraction repair problem, and present an anytime algorithm for automatic abstraction repair. We then demonstrate that abstraction repair can improve realistic action abstractions for common mobile manipulation actions from a handful of observations.

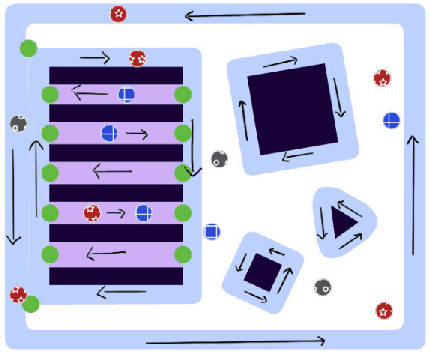

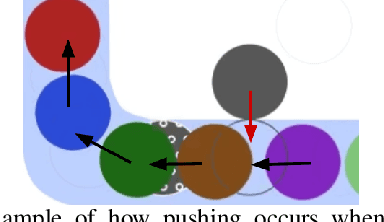

Ensuring Progress for Multiple Mobile Robots via Space Partitioning, Motion Rules, and Adaptively Centralized Conflict Resolution

Feb 25, 2021

Abstract:In environments where multiple robots must coordinate in a shared space, decentralized approaches allow for decoupled planning at the cost of global guarantees, while centralized approaches make the opposite trade-off. These solutions make a range of assumptions, commonly that all the robots share the same planning strategies. In this work, we present a framework that ensures progress for all robots without assumptions on any robot's planning strategy by (1) generating a partition of the environment into "flow", "open", and "passage" regions and (2) imposing a set of rules for robot motion in these regions. These rules for robot motion prevent deadlock through an adaptively centralized protocol for resolving spatial conflicts between robots. Our proposed framework ensures progress for all robots without a grid-like discretization of the environment or strong requirements on robot communication, coordination, or cooperation. Each robot can freely choose how to plan and coordinate for itself, without being vulnerable to other robots or groups of robots blocking it from its goals, as long as it follows the rules when necessary. We describe our space partition and motion rules, prove that the motion rules suffice to guarantee progress in partitioned environments, and demonstrate several cases in simulated polygonal environments. This work strikes a balance between each robot's planning independence and a guarantee that each robot can always reach any goal in finite time.
