Jonathan D. Gammell

Estimation, Search, and Planning

$\rm{A}^{\rm{SAR}}$: $\varepsilon$-Optimal Graph Search for Minimum Expected-Detection-Time Paths with Path Budget Constraints for Search and Rescue

Nov 13, 2025

Abstract:Searches are conducted to find missing persons and/or objects given uncertain information, imperfect observers and large search areas in Search and Rescue (SAR). In many scenarios, such as Maritime SAR, expected survival times are short and optimal search could increase the likelihood of success. This optimization problem is complex for nontrivial problems given its probabilistic nature. Stochastic optimization methods search large problems by nondeterministically sampling the space to reduce the effective size of the problem. This has been used in SAR planning to search otherwise intractably large problems but the stochastic nature provides no formal guarantees on the quality of solutions found in finite time. This paper instead presents $\rm{A}^{\rm{SAR}}$, an $\varepsilon$-optimal search algorithm for SAR planning. It calculates a heuristic to bound the search space and uses graph-search methods to find solutions that are formally guaranteed to be within a user-specified factor, $\varepsilon$, of the optimal solution. It finds better solutions faster than existing optimization approaches in operational simulations. It is also demonstrated with a real-world field trial on Lake Ontario, Canada, where it was used to locate a drifting manikin in only 150 s.
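The idea of a graph search whose solution is guaranteed to be within a user-specified factor of optimal can be illustrated with generic weighted A*. This is a minimal sketch and not the $\rm{A}^{\rm{SAR}}$ algorithm itself: it minimizes additive path cost rather than expected detection time, it has no path budget, and the names `weighted_a_star`, `graph`, `h` and `epsilon` are hypothetical. It assumes `epsilon >= 1` and an admissible heuristic `h`, in which case the returned cost is at most `epsilon` times the optimal cost.

```python
import heapq

def weighted_a_star(graph, h, start, goal, epsilon=1.0):
    """Generic weighted A* (illustrative only, not the paper's method).

    graph: dict mapping node -> list of (neighbour, edge_cost)
    h:     dict mapping node -> admissible cost-to-go estimate
    """
    g = {start: 0.0}                      # best known cost-to-come
    parent = {start: None}
    open_heap = [(epsilon * h[start], start)]
    while open_heap:
        f, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:       # walk parents back to the start
                path.append(node)
                node = parent[node]
            return g[goal], path[::-1]
        if f > g[node] + epsilon * h[node]:
            continue                      # stale entry, a cheaper one exists
        for nbr, cost in graph.get(node, []):
            new_g = g[node] + cost
            if new_g < g.get(nbr, float("inf")):
                g[nbr] = new_g
                parent[nbr] = node
                heapq.heappush(open_heap, (new_g + epsilon * h[nbr], nbr))
    return float("inf"), []

# Hypothetical toy problem: the optimal path A-B-C-D costs 3.
graph = {"A": [("B", 1), ("C", 4)], "B": [("C", 1), ("D", 5)],
         "C": [("D", 1)], "D": []}
h = {"A": 2, "B": 1, "C": 1, "D": 0}
optimal_cost, optimal_path = weighted_a_star(graph, h, "A", "D", epsilon=1.0)
bounded_cost, bounded_path = weighted_a_star(graph, h, "A", "D", epsilon=2.0)
```

With `epsilon=1.0` this reduces to ordinary A*. Larger values inflate the heuristic, which typically expands fewer nodes at the price of a looser bound on solution quality.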

Nearest-Neighbourless Asymptotically Optimal Motion Planning with Fully Connected Informed Trees (FCIT*)

Nov 26, 2024

Abstract:Improving the performance of motion planning algorithms for high-degree-of-freedom robots usually requires reducing the cost or frequency of computationally expensive operations. Traditionally, and especially for asymptotically optimal sampling-based motion planners, the most expensive operations are local motion validation and querying the nearest neighbours of a configuration. Recent advances have significantly reduced the cost of motion validation by using single instruction/multiple data (SIMD) parallelism to improve solution times for satisficing motion planning problems. These advances have not yet been applied to asymptotically optimal motion planning. This paper presents Fully Connected Informed Trees (FCIT*), the first fully connected, informed, anytime almost-surely asymptotically optimal (ASAO) algorithm. FCIT* exploits the radically reduced cost of edge evaluation via SIMD parallelism to build and search fully connected graphs. This removes the need for nearest-neighbours structures, which are a dominant cost for many sampling-based motion planners, and allows it to find initial solutions faster than state-of-the-art ASAO (VAMP, OMPL) and satisficing (OMPL) algorithms on the MotionBenchMaker dataset while converging towards optimal plans in an anytime manner.

Osprey: Multi-Session Autonomous Aerial Mapping with LiDAR-based SLAM and Next Best View Planning

Nov 06, 2023

Abstract:Aerial mapping systems are important for many surveying applications (e.g., industrial inspection or agricultural monitoring). Semi-autonomous mapping with GPS-guided aerial platforms that fly preplanned missions is already widely available but fully autonomous systems can significantly improve efficiency. Autonomously mapping complex 3D structures requires a system that performs online mapping and mission planning. This paper presents Osprey, an autonomous aerial mapping system with state-of-the-art multi-session mapping capabilities. It enables a non-expert operator to specify a bounded target area that the aerial platform can then map autonomously, over multiple flights if necessary. Field experiments with Osprey demonstrate that this system can achieve greater map coverage of large industrial sites than manual surveys with a pilot-flown aerial platform or a terrestrial laser scanner (TLS). Three sites, with a total ground coverage of $7085$ m$^2$ and a maximum height of $27$ m, were mapped in separate missions using $112$ minutes of autonomous flight time. True colour maps were created from images captured by Osprey using pointcloud and NeRF reconstruction methods. These maps provide useful data for structural inspection tasks.

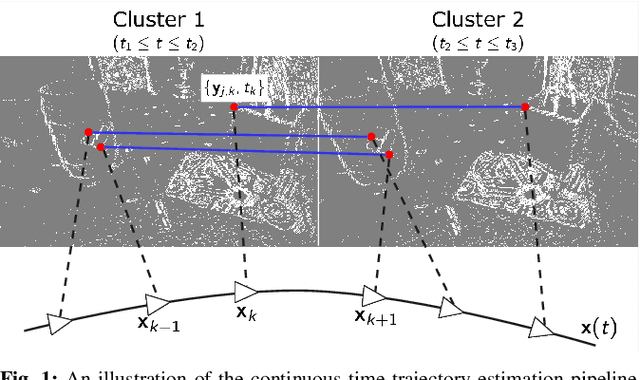

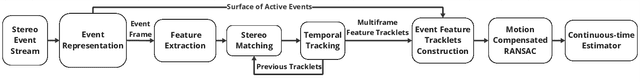

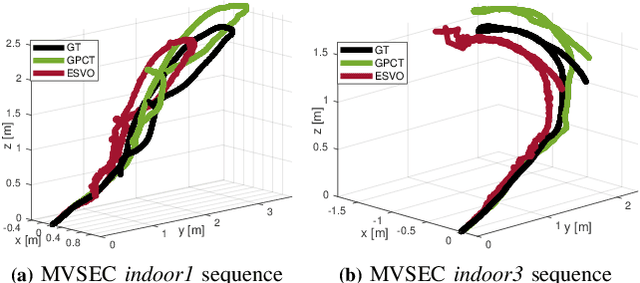

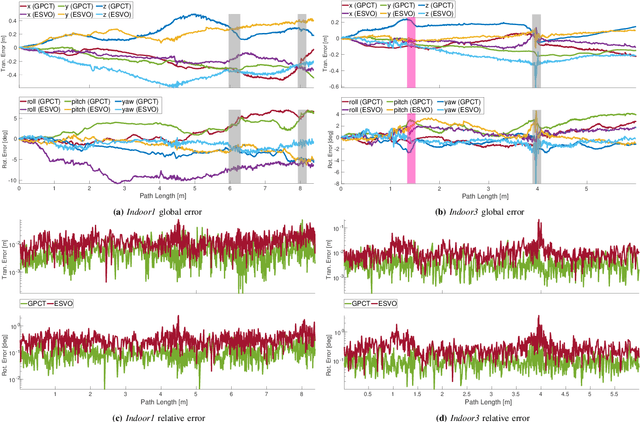

Event-based Visual Odometry with Full Temporal Resolution via Continuous-time Gaussian Process Regression

Jun 01, 2023

Abstract:Event-based cameras asynchronously capture individual visual changes in a scene. This makes them more robust than traditional frame-based cameras to highly dynamic motions and poor illumination. It also means that every measurement in a scene can occur at a unique time. Handling these different measurement times is a major challenge of using event-based cameras. It is often addressed in visual odometry (VO) pipelines by approximating temporally close measurements as occurring at one common time. This grouping simplifies the estimation problem but sacrifices the inherent temporal resolution of event-based cameras. This paper instead presents a complete stereo VO pipeline that estimates directly with individual event-measurement times without requiring any grouping or approximation. It uses continuous-time trajectory estimation to maintain the temporal fidelity and asynchronous nature of event-based cameras through Gaussian process regression with a physically motivated prior. Its performance is evaluated on the MVSEC dataset, where it achieves 7.9e-3 and 5.9e-3 RMS relative error on two independent sequences, outperforming the existing publicly available event-based stereo VO pipeline by two and four times, respectively.

Task and Motion Informed Trees (TMIT*): Almost-Surely Asymptotically Optimal Integrated Task and Motion Planning

Oct 17, 2022

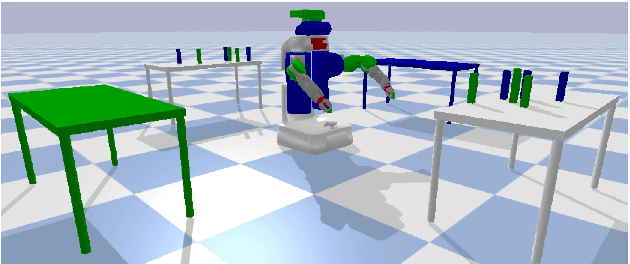

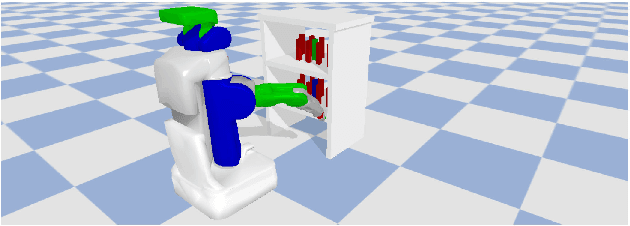

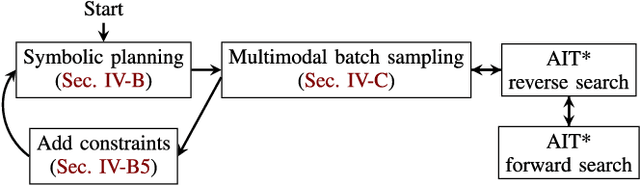

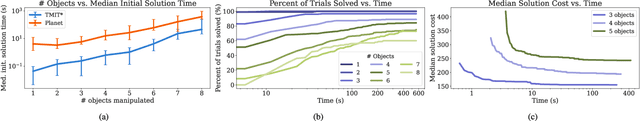

Abstract:High-level autonomy requires discrete and continuous reasoning to decide both what actions to take and how to execute them. Integrated Task and Motion Planning (TMP) algorithms solve these hybrid problems jointly to consider constraints between the discrete symbolic actions (i.e., the task plan) and their continuous geometric realization (i.e., motion plans). This joint approach solves more difficult problems than approaches that address the task and motion subproblems independently. TMP algorithms combine and extend results from both task and motion planning. TMP has mainly focused on computational performance and completeness and less on solution optimality. Optimal TMP is difficult because the independent optima of the subproblems may not be the optimal integrated solution, which can only be found by jointly optimizing both plans. This paper presents Task and Motion Informed Trees (TMIT*), an optimal TMP algorithm that combines results from makespan-optimal task planning and almost-surely asymptotically optimal motion planning. TMIT* interleaves asymmetric forward and reverse searches to delay computationally expensive operations until necessary and perform an efficient informed search directly in the problem's hybrid state space. This allows it to solve problems quickly and then converge towards the optimal solution with additional computational time, as demonstrated on the evaluated robotic-manipulation benchmark problems.

* 9 pages, 5 figures. Accepted to IEEE RA-L and IROS 2022

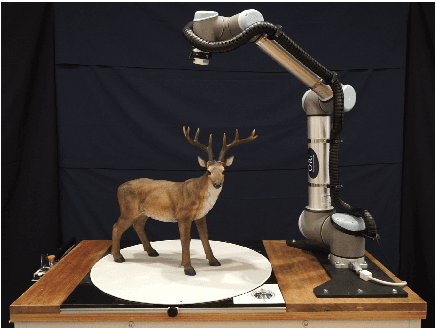

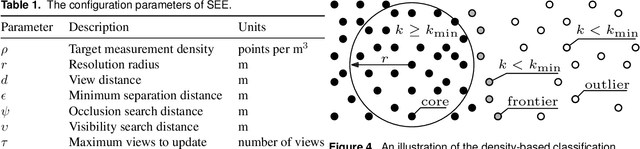

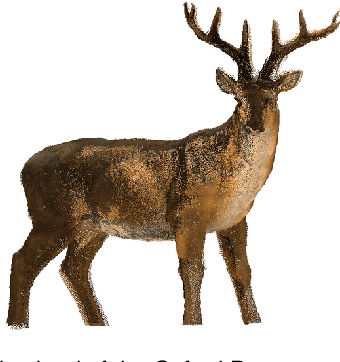

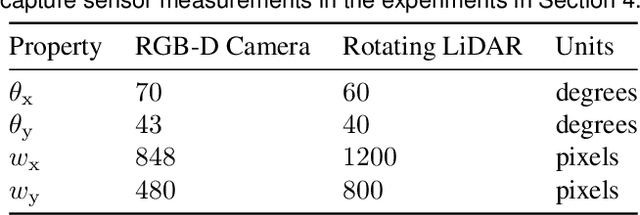

The Surface Edge Explorer (SEE): A measurement-direct approach to next best view planning

Jul 27, 2022

Abstract:High-quality observations of the real world are crucial for a variety of applications, including producing 3D printed replicas of small-scale scenes and conducting inspections of large-scale infrastructure. These 3D observations are commonly obtained by combining multiple sensor measurements from different views. Guiding the selection of suitable views is known as the Next Best View (NBV) planning problem. Most NBV approaches reason about measurements using rigid data structures (e.g., surface meshes or voxel grids). This simplifies next best view selection but can be computationally expensive, reduces real-world fidelity, and couples the selection of a next best view with the final data processing. This paper presents the Surface Edge Explorer (SEE), a NBV approach that selects new observations directly from previous sensor measurements without requiring rigid data structures. SEE uses measurement density to propose next best views that increase coverage of insufficiently observed surfaces while avoiding potential occlusions. Statistical results from simulated experiments show that SEE can attain better surface coverage in less computational time and sensor travel distance than evaluated volumetric approaches on both small- and large-scale scenes. Real-world experiments demonstrate SEE autonomously observing a deer statue using a 3D sensor affixed to a robotic arm.

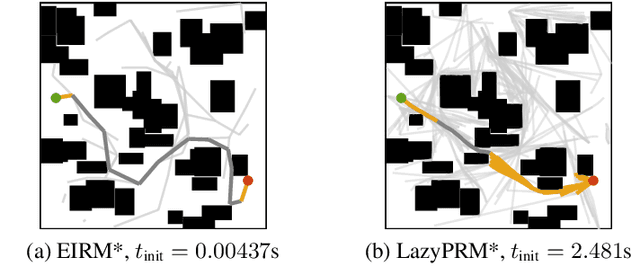

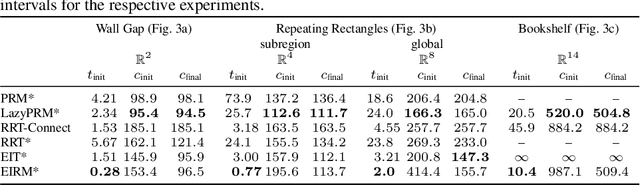

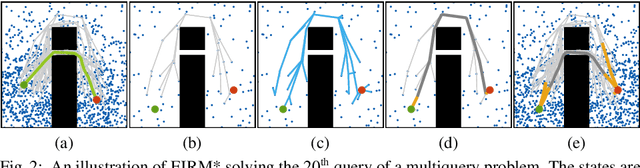

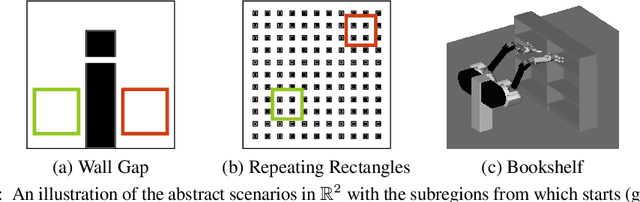

Effort Informed Roadmaps (EIRM*): Efficient Asymptotically Optimal Multiquery Planning by Actively Reusing Validation Effort

May 17, 2022

Abstract:Multiquery planning algorithms find paths between various different starts and goals in a single search space. They are designed to do so efficiently by reusing information across planning queries. This information may be computed before or during the search and often includes knowledge of valid paths. Using known valid paths to solve an individual planning query takes less computational effort than finding a completely new solution. This allows multiquery algorithms, such as PRM*, to outperform single-query algorithms, such as RRT*, on many problems but their relative performance depends on how much information is reused. Despite this, few multiquery planners explicitly seek to maximize path reuse and, as a result, many do not consistently outperform single-query alternatives. This paper presents Effort Informed Roadmaps (EIRM*), an almost-surely asymptotically optimal multiquery planning algorithm that explicitly prioritizes reusing computational effort. EIRM* uses an asymmetric bidirectional search to identify existing paths that may help solve an individual planning query and then uses this information to order its search and reduce computational effort. This allows it to find initial solutions up to an order-of-magnitude faster than state-of-the-art planning algorithms on the tested abstract and robotic multiquery planning problems.

AIT* and EIT*: Asymmetric bidirectional sampling-based path planning

Nov 02, 2021

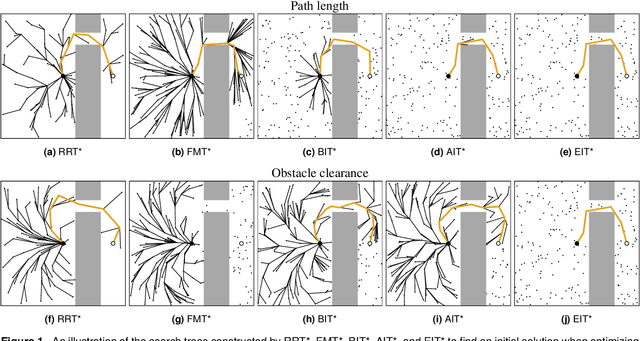

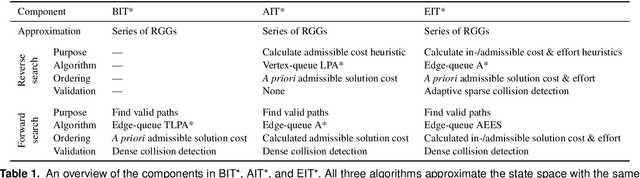

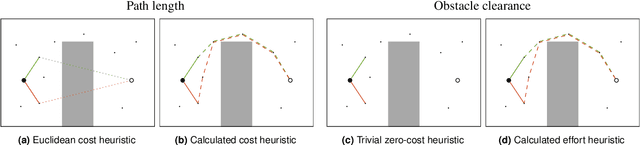

Abstract:Optimal path planning is the problem of finding a valid sequence of states between a start and goal that optimizes an objective. Informed path planning algorithms order their search with problem-specific knowledge expressed as heuristics and can be orders of magnitude more efficient than uninformed algorithms. Heuristics are most effective when they are both accurate and computationally inexpensive to evaluate, but these are often conflicting characteristics. This makes the selection of appropriate heuristics difficult for many problems. This paper presents two almost-surely asymptotically optimal sampling-based path planning algorithms to address this challenge, Adaptively Informed Trees (AIT*) and Effort Informed Trees (EIT*). These algorithms use an asymmetric bidirectional search in which both searches continuously inform each other. This allows AIT* and EIT* to improve planning performance by simultaneously calculating and exploiting increasingly accurate, problem-specific heuristics. The benefits of AIT* and EIT* relative to other sampling-based algorithms are demonstrated on twelve problems in abstract, robotic, and biomedical domains optimizing path length and obstacle clearance. The experiments show that AIT* and EIT* outperform other algorithms on problems optimizing obstacle clearance, where a priori cost heuristics are often ineffective, and still perform well on problems minimizing path length, where such heuristics are often effective.
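The idea of one search computing a heuristic that another search then exploits can be sketched on a small fixed graph. This is a simplified illustration under stated assumptions, not AIT* or EIT*: there is no sampling, the reverse search runs only once instead of being updated when the forward search finds invalid edges, and the names `dijkstra`, `informed_forward_search`, `edges` and `is_valid` are hypothetical. A cheap reverse Dijkstra search that ignores edge validity gives an admissible cost-to-go estimate, and a forward edge-queue search uses it to order its work and to run the (assumed expensive) validity check only on edges it actually needs.

```python
import heapq

INF = float("inf")

def dijkstra(adj, source):
    """Cost from source to every reachable node, ignoring edge validity."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue                          # stale entry
        for v, w in adj.get(u, []):
            if d + w < dist.get(v, INF):
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist

def informed_forward_search(edges, is_valid, start, goal):
    """Forward edge-queue search ordered by a reverse-search heuristic."""
    adj = {}
    for u, v, w in edges:                     # undirected graph
        adj.setdefault(u, []).append((v, w))
        adj.setdefault(v, []).append((u, w))
    h = dijkstra(adj, goal)                   # cheap reverse search
    if start not in h:
        return INF, [], 0
    g, parent, heap, validated = {start: 0.0}, {start: None}, [], 0

    def push_out_edges(u):
        for v, w in adj[u]:
            heapq.heappush(heap, (g[u] + w + h[v], u, v, w))

    push_out_edges(start)
    # Stop once no queued edge could improve the current solution.
    while heap and heap[0][0] < g.get(goal, INF):
        _, u, v, w = heapq.heappop(heap)
        new_g = g[u] + w
        if new_g >= g.get(v, INF):
            continue                          # cannot improve v, skip check
        validated += 1                        # expensive check happens here
        if not is_valid(u, v):
            continue
        g[v], parent[v] = new_g, u
        push_out_edges(v)
    if goal not in g:
        return INF, [], validated
    path, node = [], goal
    while node is not None:
        path.append(node)
        node = parent[node]
    return g[goal], path[::-1], validated

# Hypothetical toy problem: the edge A-G looks best but is invalid.
edges = [("S", "A", 1), ("A", "G", 1), ("S", "B", 2),
         ("B", "G", 2), ("S", "C", 5), ("C", "G", 5)]
blocked = {frozenset(("A", "G"))}
cost, path, validated = informed_forward_search(
    edges, lambda u, v: frozenset((u, v)) not in blocked, "S", "G")
```

In this toy problem the search returns the path S, B, G after validating four of the six edges. The two edges through C are never checked because the heuristic shows they cannot beat the solution already found.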

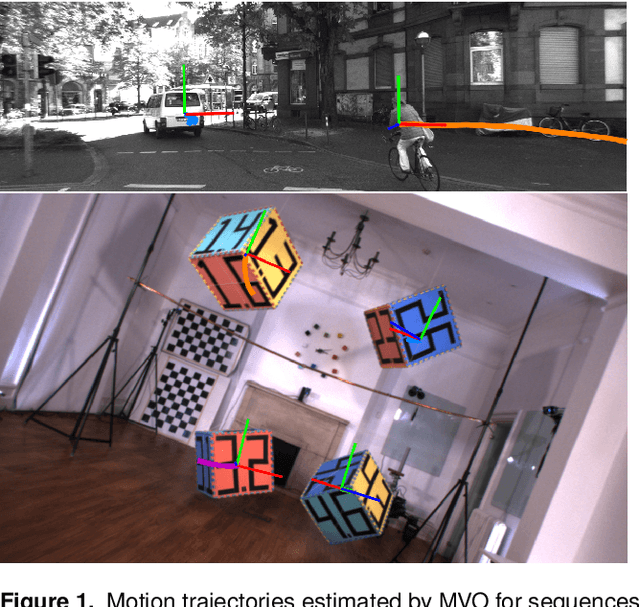

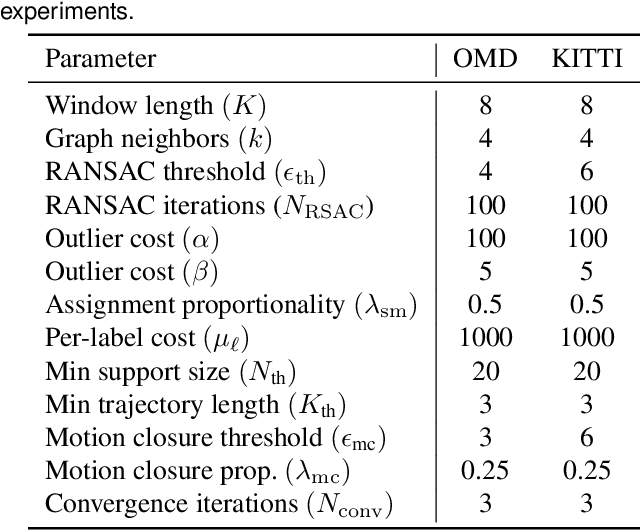

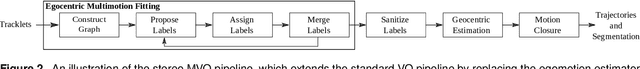

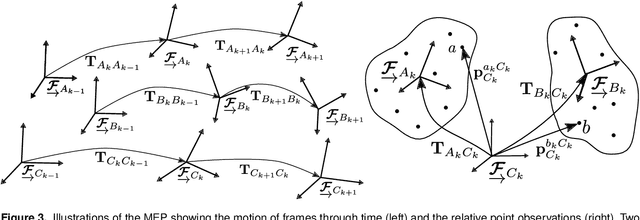

Multimotion Visual Odometry (MVO)

Oct 28, 2021

Abstract:Visual motion estimation is a well-studied challenge in autonomous navigation. Recent work has focused on addressing multimotion estimation in highly dynamic environments. These environments not only comprise multiple, complex motions but also tend to exhibit significant occlusion. Estimating third-party motions simultaneously with the sensor egomotion is difficult because an object's observed motion consists of both its true motion and the sensor motion. Most previous works in multimotion estimation simplify this problem by relying on appearance-based object detection or application-specific motion constraints. These approaches are effective in specific applications and environments but do not generalize well to the full multimotion estimation problem (MEP). This paper presents Multimotion Visual Odometry (MVO), a multimotion estimation pipeline that estimates the full SE(3) trajectory of every motion in the scene, including the sensor egomotion, without relying on appearance-based information. MVO extends the traditional visual odometry (VO) pipeline with multimotion segmentation and tracking techniques. It uses physically founded motion priors to extrapolate motions through temporary occlusions and identify the reappearance of motions through motion closure. Evaluations on real-world data from the Oxford Multimotion Dataset (OMD) and the KITTI Vision Benchmark Suite demonstrate that MVO achieves good estimation accuracy compared to similar approaches and is applicable to a variety of multimotion estimation challenges.

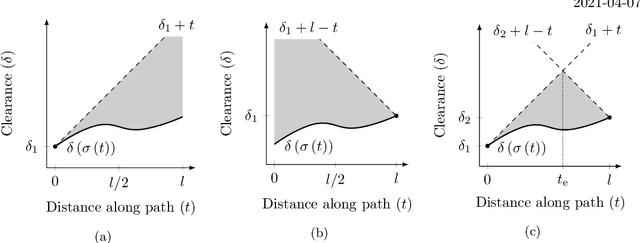

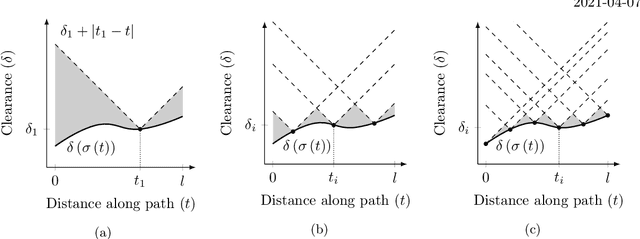

Admissible heuristics for obstacle clearance optimization objectives

May 01, 2021

Abstract:Obstacle clearance in state space is an important optimization objective in path planning because it can result in safe paths. This technical report presents admissible solution- and path-cost heuristics for this objective, which can be used to improve the performance of informed path planning algorithms.
