Rowan Border

Osprey: Multi-Session Autonomous Aerial Mapping with LiDAR-based SLAM and Next Best View Planning

Nov 06, 2023

Abstract: Aerial mapping systems are important for many surveying applications (e.g., industrial inspection or agricultural monitoring). Semi-autonomous mapping with GPS-guided aerial platforms that fly preplanned missions is already widely available but fully autonomous systems can significantly improve efficiency. Autonomously mapping complex 3D structures requires a system that performs online mapping and mission planning. This paper presents Osprey, an autonomous aerial mapping system with state-of-the-art multi-session mapping capabilities. It enables a non-expert operator to specify a bounded target area that the aerial platform can then map autonomously, over multiple flights if necessary. Field experiments with Osprey demonstrate that this system can achieve greater map coverage of large industrial sites than manual surveys with a pilot-flown aerial platform or a terrestrial laser scanner (TLS). Three sites, with a total ground coverage of $7085$ m$^2$ and a maximum height of $27$ m, were mapped in separate missions using $112$ minutes of autonomous flight time. True colour maps were created from images captured by Osprey using pointcloud and NeRF reconstruction methods. These maps provide useful data for structural inspection tasks.

The Surface Edge Explorer (SEE): A measurement-direct approach to next best view planning

Jul 27, 2022

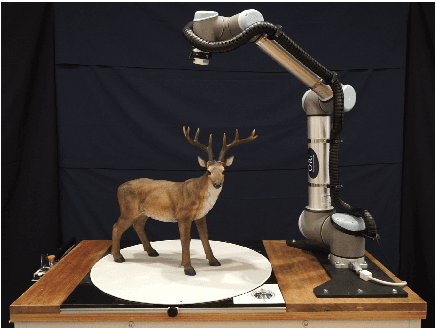

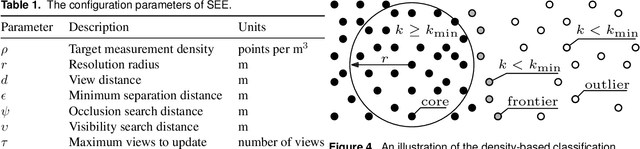

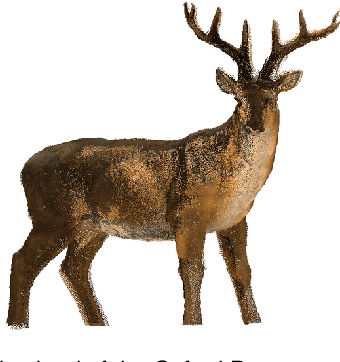

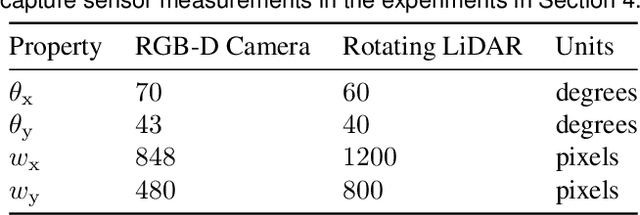

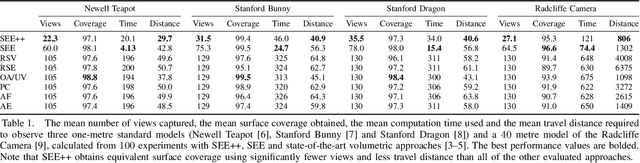

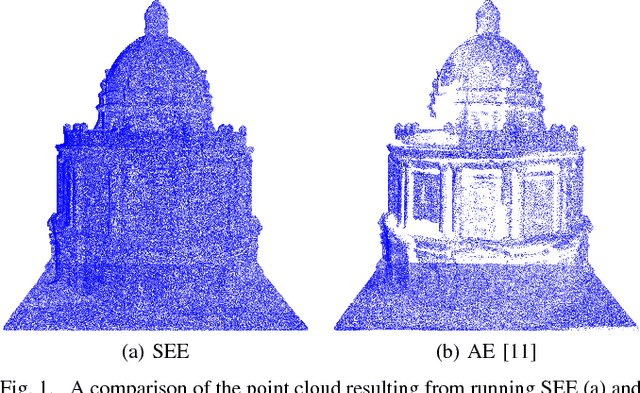

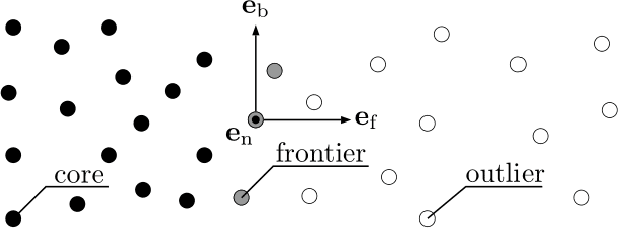

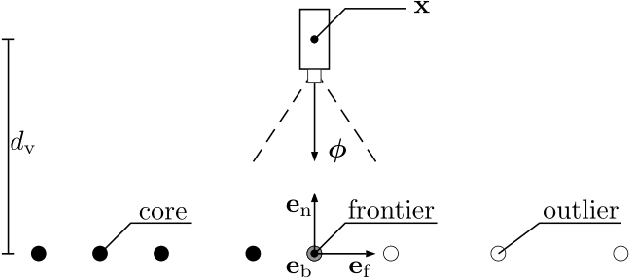

Abstract: High-quality observations of the real world are crucial for a variety of applications, including producing 3D printed replicas of small-scale scenes and conducting inspections of large-scale infrastructure. These 3D observations are commonly obtained by combining multiple sensor measurements from different views. Guiding the selection of suitable views is known as the Next Best View (NBV) planning problem. Most NBV approaches reason about measurements using rigid data structures (e.g., surface meshes or voxel grids). This simplifies next best view selection but can be computationally expensive, reduces real-world fidelity, and couples the selection of a next best view with the final data processing. This paper presents the Surface Edge Explorer (SEE), an NBV approach that selects new observations directly from previous sensor measurements without requiring rigid data structures. SEE uses measurement density to propose next best views that increase coverage of insufficiently observed surfaces while avoiding potential occlusions. Statistical results from simulated experiments show that SEE can attain better surface coverage in less computational time and sensor travel distance than evaluated volumetric approaches on both small- and large-scale scenes. Real-world experiments demonstrate SEE autonomously observing a deer statue using a 3D sensor affixed to a robotic arm.

Proactive Estimation of Occlusions and Scene Coverage for Planning Next Best Views in an Unstructured Representation

Sep 09, 2020

Abstract: The process of planning views to observe a scene is known as the Next Best View (NBV) problem. Approaches often aim to obtain high-quality scene observations while reducing the number of views, travel distance and computational cost. Considering occlusions and scene coverage can significantly reduce the number of views and travel distance required to obtain an observation. Structured representations (e.g., a voxel grid or surface mesh) typically use raycasting to evaluate the visibility of represented structures but this is often computationally expensive. Unstructured representations (e.g., point density) avoid the computational overhead of maintaining and raycasting a structure imposed on the scene but as a result do not proactively predict the success of future measurements. This paper presents proactive solutions for handling occlusions and considering scene coverage with an unstructured representation. Their performance is evaluated by extending the density-based Surface Edge Explorer (SEE). Experiments show that these techniques allow an unstructured representation to observe scenes with fewer views and shorter distances while retaining high observation quality and low computational cost.

* For a video of SEE++ go to https://www.youtube.com/watch?v=4r2Z85zccms . For an open-source version of SEE++ go to https://github.com/robotic-esp/see-public . Documentation can be found at https://robotic-esp.github.io/see-public

Surface Edge Explorer (SEE): Planning Next Best Views Directly from 3D Observations

Feb 23, 2018

Abstract: Surveying 3D scenes is a common task in robotics. Systems can do so autonomously by iteratively obtaining measurements. This process of planning observations to improve the model of a scene is called Next Best View (NBV) planning. NBV planning approaches often use either volumetric (e.g., voxel grids) or surface (e.g., triangulated meshes) representations. Volumetric approaches generalise well between scenes as they do not depend on surface geometry but do not scale to high-resolution models of large scenes. Surface representations can obtain high-resolution models at any scale but often require tuning of unintuitive parameters or multiple survey stages. This paper presents a scene-model-free NBV planning approach with a density representation. The Surface Edge Explorer (SEE) uses the density of current measurements to detect and explore observed surface boundaries. This approach is shown experimentally to provide better surface coverage in lower computation time than the evaluated state-of-the-art volumetric approaches while moving equivalent distances.
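
The idea of using measurement density to find surface boundaries can be illustrated with a small sketch. This is not the authors' implementation: the function names, the `radius` and `min_neighbours` parameters, and the two-label scheme ("core" versus "sparse") are assumptions made for illustration only. Points with enough neighbours inside a fixed radius are treated as sufficiently observed, and sparse points that sit next to a well-observed point are treated as candidates on the edge of the observed surface.

```python
from math import dist

def classify_by_density(points, radius, min_neighbours):
    """Label a point 'core' if at least `min_neighbours` other points
    lie within `radius` of it, otherwise 'sparse' (hypothetical labels)."""
    labels = []
    for i, p in enumerate(points):
        count = sum(
            1 for j, q in enumerate(points)
            if j != i and dist(p, q) <= radius
        )
        labels.append("core" if count >= min_neighbours else "sparse")
    return labels

def boundary_indices(points, labels, radius):
    """Return indices of sparse points that have a core point within `radius`.
    These approximate the edge of the sufficiently observed surface."""
    result = []
    for i, p in enumerate(points):
        if labels[i] != "sparse":
            continue
        if any(
            labels[j] == "core" and dist(p, q) <= radius
            for j, q in enumerate(points)
        ):
            result.append(i)
    return result

# Toy data: a flat 5 x 5 patch of measurements with unit spacing.
points = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
labels = classify_by_density(points, radius=1.1, min_neighbours=4)
frontier = boundary_indices(points, labels, radius=1.1)
```

On this toy patch the interior points are labelled as core, while the points along the rim of the patch are reported as boundary candidates. The brute-force neighbour search is quadratic in the number of points; a spatial index would be needed for real sensor data.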
