Wenkui Ding

Improving Vision-and-Language Reasoning via Spatial Relations Modeling

Nov 09, 2023

Abstract:Visual commonsense reasoning (VCR) is a challenging multi-modal task that requires high-level cognition and commonsense reasoning about the real world. In recent years, large-scale pre-training approaches have been developed and have advanced the state-of-the-art performance on VCR. However, almost all existing approaches employ BERT-like objectives to learn multi-modal representations. These objectives, motivated by the text domain, are insufficient for exploiting the complex scenarios of the visual modality. Most importantly, the spatial distribution of the visual objects is largely neglected. To address this issue, we propose constructing a spatial relation graph from the given visual scene. Further, we design two pre-training tasks, object position regression (OPR) and spatial relation classification (SRC), to learn to reconstruct the spatial relation graph. Quantitative analysis suggests that the proposed method can guide the representations to retain more spatial context and focus attention on the visual regions essential for reasoning. We achieve state-of-the-art results on VCR and on two other vision-and-language reasoning tasks, VQA and NLVR.
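As a rough illustration of what a spatial relation graph over detected objects might look like, the sketch below labels each ordered pair of bounding boxes with a coarse relation and normalizes box coordinates as a possible regression target. The relation vocabulary, the function names (`spatial_relation`, `build_relation_graph`, `normalized_box`), and the box format `(x1, y1, x2, y2)` are all assumptions made for illustration; the abstract does not specify how the paper defines its relations or targets.

```python
def normalized_box(box, width, height):
    """Scale a pixel box (x1, y1, x2, y2) to [0, 1]; a hypothetical OPR-style target."""
    x1, y1, x2, y2 = box
    return (x1 / width, y1 / height, x2 / width, y2 / height)


def spatial_relation(a, b):
    """Coarse relation of box b relative to box a (assumed label set, not the paper's)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # b lies entirely within a
    if bx1 >= ax1 and by1 >= ay1 and bx2 <= ax2 and by2 <= ay2:
        return "inside"
    # a lies entirely within b
    if ax1 >= bx1 and ay1 >= by1 and ax2 <= bx2 and ay2 <= by2:
        return "covers"
    # partial overlap: the intersection has positive width and height
    inter_w = min(ax2, bx2) - max(ax1, bx1)
    inter_h = min(ay2, by2) - max(ay1, by1)
    if inter_w > 0 and inter_h > 0:
        return "overlaps"
    # otherwise fall back to the dominant direction between box centers
    dx = (bx1 + bx2) / 2 - (ax1 + ax2) / 2
    dy = (by1 + by2) / 2 - (ay1 + ay2) / 2
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "below" if dy > 0 else "above"  # image y-axis grows downward


def build_relation_graph(boxes):
    """Map each ordered pair (i, j), i != j, to the relation of box j relative to box i."""
    n = len(boxes)
    return {
        (i, j): spatial_relation(boxes[i], boxes[j])
        for i in range(n)
        for j in range(n)
        if i != j
    }


boxes = [(0, 0, 10, 10), (2, 2, 4, 4), (20, 0, 30, 10)]
graph = build_relation_graph(boxes)
```

In a pre-training setup along these lines, the edge labels could serve as classification targets and the normalized coordinates as regression targets, though the actual task formulation in the paper may differ.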

HiT: Hierarchical Transformer with Momentum Contrast for Video-Text Retrieval

Mar 28, 2021

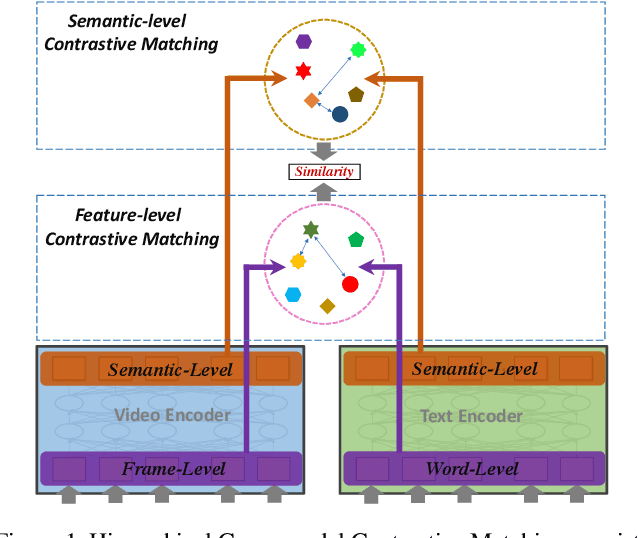

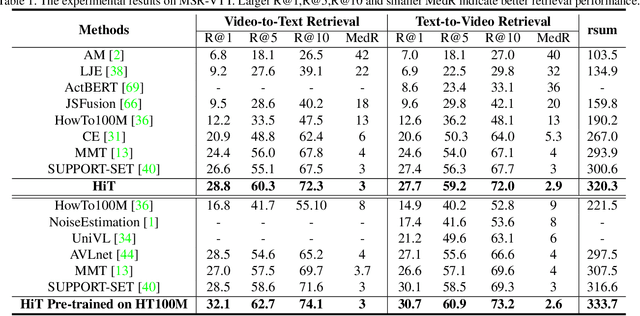

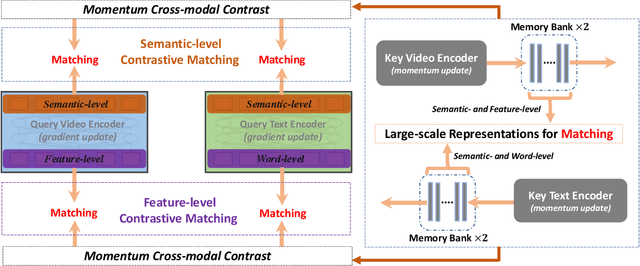

Abstract:Video-Text Retrieval has been a hot research topic with the explosion of multimedia data on the Internet. Transformers for video-text learning have attracted increasing attention due to their promising performance. However, existing cross-modal transformer approaches typically suffer from two major limitations: 1) Limited exploitation of the transformer architecture, where different layers have different feature characteristics. 2) The end-to-end training mechanism limits negative interactions to samples within a mini-batch. In this paper, we propose a novel approach named Hierarchical Transformer (HiT) for video-text retrieval. HiT performs hierarchical cross-modal contrastive matching at the feature level and the semantic level to achieve multi-view and comprehensive retrieval results. Moreover, inspired by MoCo, we propose Momentum Cross-modal Contrast for cross-modal learning to enable large-scale negative interactions on-the-fly, which contributes to the generation of more precise and discriminative representations. Experimental results on three major Video-Text Retrieval benchmark datasets demonstrate the advantages of our methods.
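The MoCo idea that the abstract builds on can be sketched in a few lines: a key encoder is updated as an exponential moving average of the query encoder, and a fixed-size queue of past key embeddings supplies negatives beyond the current mini-batch. The code below is a minimal, framework-free sketch of those two mechanisms only; the names `momentum_update` and `NegativeQueue`, the flat parameter lists, and the momentum value are illustrative assumptions, and none of it reproduces HiT's cross-modal design.

```python
from collections import deque


def momentum_update(key_params, query_params, m=0.999):
    """EMA update of key-encoder parameters: key <- m * key + (1 - m) * query."""
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


class NegativeQueue:
    """Fixed-size FIFO of past key embeddings used as extra negatives."""

    def __init__(self, max_size):
        # deque with maxlen silently drops the oldest entries when full
        self._items = deque(maxlen=max_size)

    def enqueue(self, embeddings):
        self._items.extend(embeddings)

    def negatives(self):
        return list(self._items)

    def __len__(self):
        return len(self._items)


# Toy parameters stand in for real encoder weights.
key = [0.0, 1.0]
query = [1.0, 0.0]
key = momentum_update(key, query, m=0.9)

queue = NegativeQueue(max_size=3)
queue.enqueue([[0.1], [0.2]])
queue.enqueue([[0.3], [0.4]])  # the oldest embedding, [0.1], is evicted
```

Because the key encoder changes slowly, embeddings stored in the queue from earlier steps stay roughly consistent with current ones, which is what makes a large queue of negatives usable.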

A Neural Autoregressive Approach to Collaborative Filtering

May 31, 2016

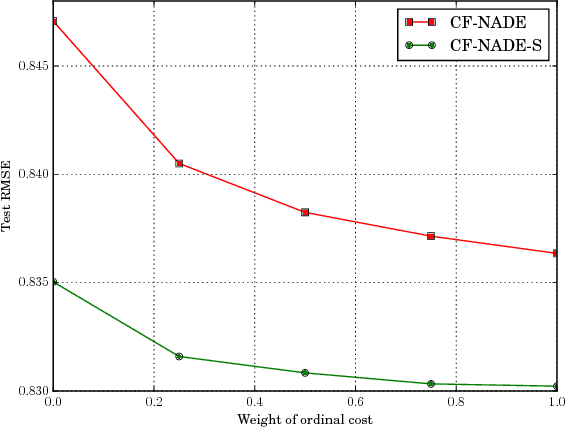

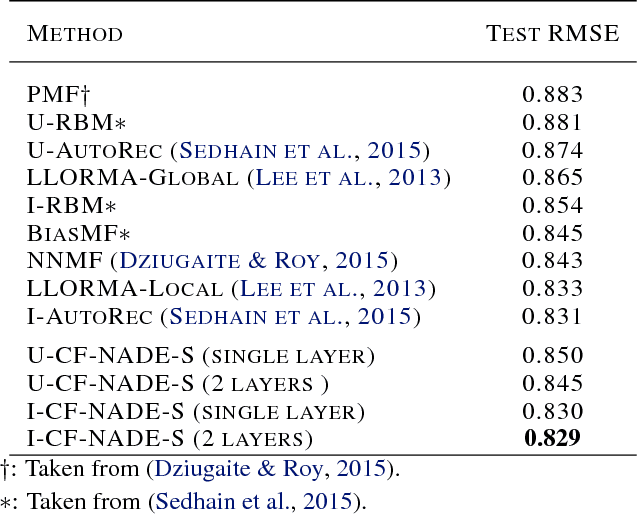

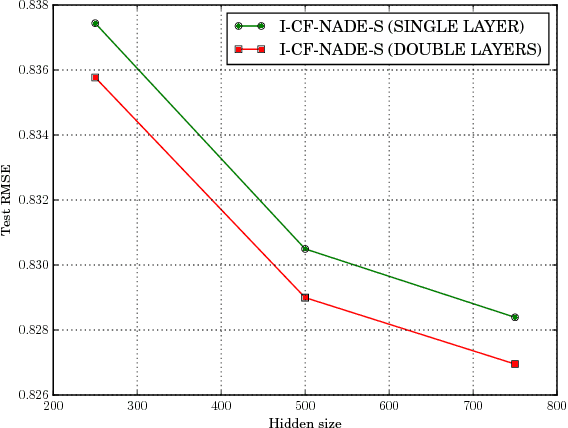

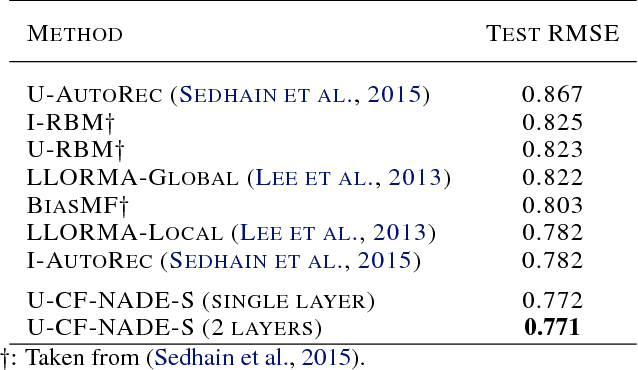

Abstract:This paper proposes CF-NADE, a neural autoregressive architecture for collaborative filtering (CF) tasks, which is inspired by the Restricted Boltzmann Machine (RBM) based CF model and the Neural Autoregressive Distribution Estimator (NADE). We first describe the basic CF-NADE model for CF tasks. Then we propose to improve the model by sharing parameters between different ratings. A factored version of CF-NADE is also proposed for better scalability. Furthermore, we take the ordinal nature of the preferences into consideration and propose an ordinal cost to optimize CF-NADE, which shows superior performance. Finally, CF-NADE can be extended to a deep model, with only moderately increased computational complexity. Experimental results show that CF-NADE with a single hidden layer beats all previous state-of-the-art methods on MovieLens 1M, MovieLens 10M, and Netflix datasets, and adding more hidden layers can further improve the performance.
