Wenfeng Feng

DynaSearcher: Dynamic Knowledge Graph Augmented Search Agent via Multi-Reward Reinforcement Learning

Jul 23, 2025

Abstract: Multi-step agentic retrieval systems based on large language models (LLMs) have demonstrated remarkable performance in complex information search tasks. However, these systems still face significant challenges in practical applications, particularly in generating factually inconsistent intermediate queries and inefficient search trajectories, which can lead to reasoning deviations or redundant computations. To address these issues, we propose DynaSearcher, an innovative search agent enhanced by dynamic knowledge graphs and multi-reward reinforcement learning (RL). Specifically, our system leverages knowledge graphs as external structured knowledge to guide the search process by explicitly modeling entity relationships, thereby ensuring factual consistency in intermediate queries and mitigating biases from irrelevant information. Furthermore, we employ a multi-reward RL framework for fine-grained control over training objectives such as retrieval accuracy, efficiency, and response quality. This framework promotes the generation of high-quality intermediate queries and comprehensive final answers, while discouraging unnecessary exploration and minimizing information omissions or redundancy. Experimental results demonstrate that our approach achieves state-of-the-art answer accuracy on six multi-hop question answering datasets, matching frontier LLMs while using only small-scale models and limited computational resources. Furthermore, our approach demonstrates strong generalization and robustness across diverse retrieval environments and larger-scale models, highlighting its broad applicability.

EDIT: Enhancing Vision Transformers by Mitigating Attention Sink through an Encoder-Decoder Architecture

Apr 09, 2025

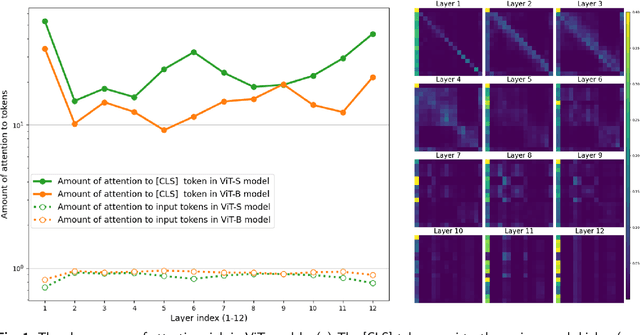

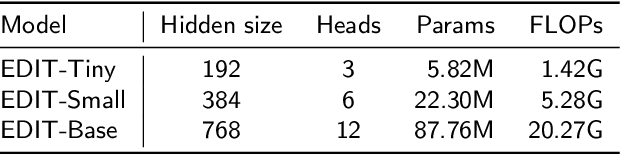

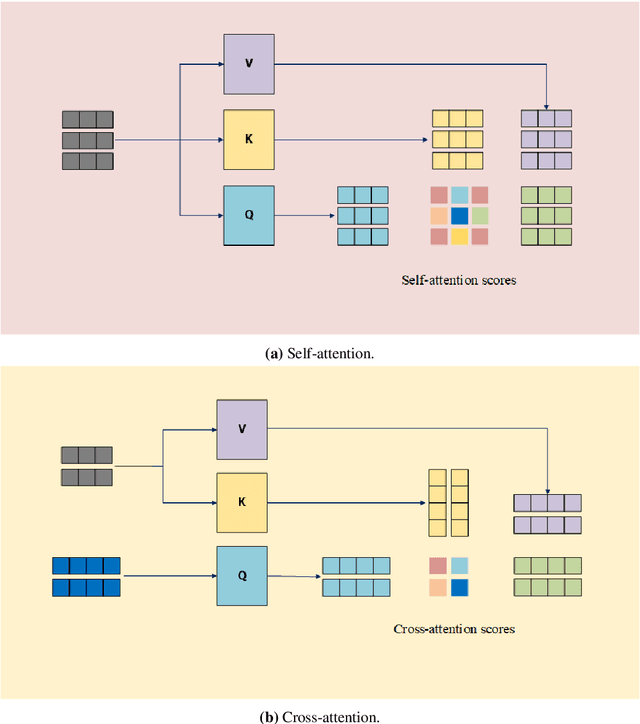

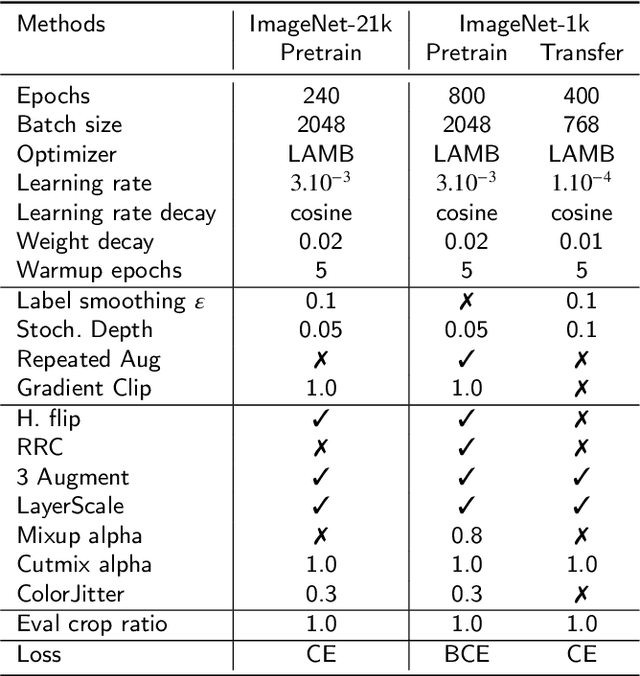

Abstract: In this paper, we propose EDIT (Encoder-Decoder Image Transformer), a novel architecture designed to mitigate the attention sink phenomenon observed in Vision Transformer models. Attention sink occurs when an excessive amount of attention is allocated to the [CLS] token, distorting the model's ability to effectively process image patches. To address this, we introduce a layer-aligned encoder-decoder architecture, where the encoder utilizes self-attention to process image patches, while the decoder uses cross-attention to focus on the [CLS] token. Unlike traditional encoder-decoder frameworks, where the decoder depends solely on high-level encoder representations, EDIT allows the decoder to extract information starting from low-level features, progressively refining the representation layer by layer. EDIT is naturally interpretable, as demonstrated through sequential attention maps that illustrate the refined, layer-by-layer focus on key image features. Experiments on ImageNet-1k and ImageNet-21k, along with transfer learning tasks, show that EDIT achieves consistent performance improvements over DeiT3 models. These results highlight the effectiveness of EDIT's design in addressing attention sink and improving visual feature extraction.

RASD: Retrieval-Augmented Speculative Decoding

Mar 05, 2025

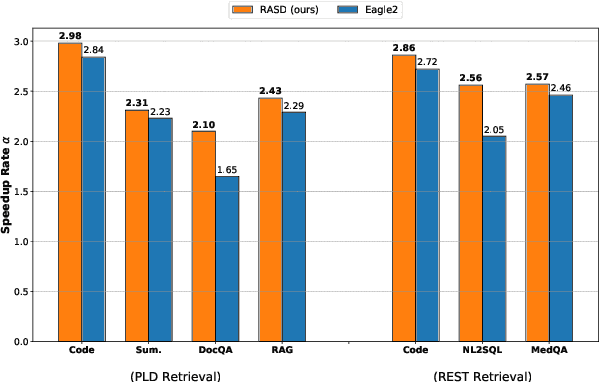

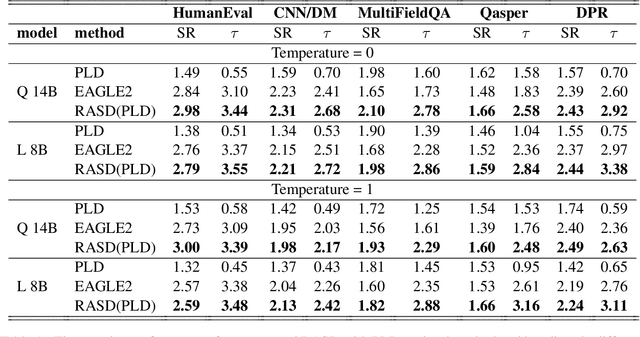

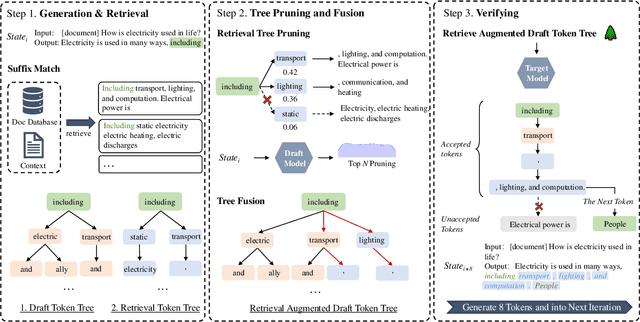

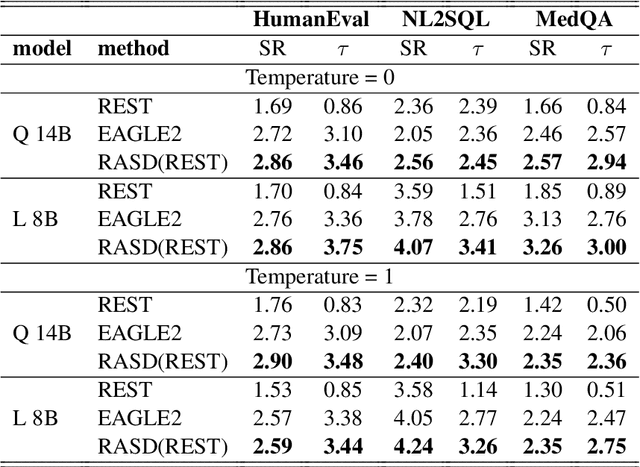

Abstract: Speculative decoding accelerates inference in large language models (LLMs) by generating draft tokens for target model verification. Current approaches for obtaining draft tokens rely on lightweight draft models or additional model structures to generate draft tokens and retrieve context from databases. Due to the draft model's small size and limited training data, model-based speculative decoding frequently becomes less effective in out-of-domain scenarios. Additionally, the time cost of the drafting phase results in a low upper limit on acceptance length during the verification step, limiting overall efficiency. This paper proposes RASD (Retrieval-Augmented Speculative Decoding), which adopts retrieval methods to enhance model-based speculative decoding. We introduce tree pruning and tree fusion to achieve this. First, we develop a pruning method based on the draft model's probability distribution to construct the optimal retrieval tree. Second, we employ the longest prefix matching algorithm to merge the tree generated by the draft model with the retrieval tree, resulting in a unified tree for verification. Experimental results demonstrate that RASD achieves state-of-the-art inference acceleration across tasks such as DocQA, Summary, Code, and In-Domain QA. Moreover, RASD exhibits strong scalability, seamlessly integrating with various speculative decoding approaches, including both generation-based and retrieval-based methods.
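The tree fusion step described in the abstract can be illustrated with a small sketch. It assumes both candidate trees are stored as nested dictionaries keyed by token (a trie), so that sequences sharing a prefix share nodes; the helper names `build_trie`, `merge_tries`, and `count_nodes` are hypothetical and this is not the paper's implementation.

```python
def build_trie(sequences):
    """Build a token trie (nested dicts) from a list of token sequences."""
    root = {}
    for seq in sequences:
        node = root
        for tok in seq:
            node = node.setdefault(tok, {})
    return root


def merge_tries(a, b):
    """Merge two tries so that shared prefixes are represented once."""
    merged = {}
    # Keep the key order of `a`, then append keys that only appear in `b`.
    for tok in list(a) + [t for t in b if t not in a]:
        merged[tok] = merge_tries(a.get(tok, {}), b.get(tok, {}))
    return merged


def count_nodes(trie):
    """Count the token nodes in a trie (the root itself is not counted)."""
    return sum(1 + count_nodes(child) for child in trie.values())


# Hypothetical candidates: one tree from a draft model, one from retrieval.
draft_tree = build_trie([["the", "cat", "sat"], ["the", "dog"]])
retrieval_tree = build_trie([["the", "cat", "ran"], ["a", "bird"]])
unified_tree = merge_tries(draft_tree, retrieval_tree)
```

In this toy case the two trees hold 4 and 5 nodes, while the unified tree holds 7, because the shared prefix "the cat" is stored only once and would only need to be verified once.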

AirRAG: Activating Intrinsic Reasoning for Retrieval Augmented Generation via Tree-based Search

Jan 17, 2025

Abstract: Leveraging the autonomous decision-making capabilities of large language models (LLMs) yields superior performance in reasoning tasks. Despite their successes, iterative or recursive retrieval-augmented generation (RAG) methods are often trapped in a single solution space when confronted with complex tasks. In this paper, we propose a novel thinking pattern in RAG, dubbed AirRAG, which integrates system analysis with efficient reasoning actions, significantly activating intrinsic reasoning capabilities and expanding the solution space of specific tasks via Monte Carlo Tree Search (MCTS). Specifically, our approach designs five fundamental reasoning actions that are expanded into a broad tree-based reasoning space using MCTS. The extension also uses self-consistency verification to explore potential reasoning paths and implement inference scaling. In addition, computationally optimal strategies are used to apply more inference computation to key actions to achieve further performance improvements. Experimental results demonstrate the effectiveness of AirRAG through considerable performance gains over complex QA datasets. Furthermore, AirRAG is flexible and lightweight, making it easy to integrate with other advanced technologies.
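The abstract does not say how the tree search chooses which reasoning action to expand. As a generic illustration of MCTS node selection, the sketch below uses the standard UCT score; the function names, the action labels, and the exploration constant `c` are assumptions, not details from the paper.

```python
import math


def uct_score(value_sum, visits, parent_visits, c=1.4):
    """Standard UCT score: mean value plus an exploration bonus."""
    if visits == 0:
        # Unvisited children are always tried first.
        return math.inf
    exploit = value_sum / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_child(children, parent_visits, c=1.4):
    """Pick the child with the highest UCT score.

    `children` maps a (hypothetical) action name to (value_sum, visits).
    """
    return max(
        children,
        key=lambda name: uct_score(*children[name], parent_visits, c),
    )


# Hypothetical statistics for three candidate reasoning actions.
stats = {"retrieve": (3.0, 4), "decompose": (1.0, 1), "answer": (0.0, 0)}
chosen = select_child(stats, parent_visits=5)
```

Here `chosen` is the unvisited action, since its score is infinite; once every child has been visited, the exploration term gradually shifts the choice toward actions with a higher mean value.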

Mixture-of-LoRAs: An Efficient Multitask Tuning for Large Language Models

Mar 06, 2024

Abstract: Instruction Tuning has the potential to stimulate or enhance specific capabilities of large language models (LLMs). However, achieving the right balance of data is crucial to prevent catastrophic forgetting and interference between tasks. To address these limitations and enhance training flexibility, we propose the Mixture-of-LoRAs (MoA) architecture, a novel and parameter-efficient tuning method designed for multi-task learning with LLMs. In this paper, we start by individually training multiple domain-specific LoRA modules using corresponding supervised corpus data. These LoRA modules can be aligned with the expert design principles observed in Mixture-of-Experts (MoE). Subsequently, we combine the multiple LoRAs using an explicit routing strategy and introduce domain labels to facilitate multi-task learning, which helps prevent interference between tasks and ultimately enhances the performance of each individual task. Furthermore, each LoRA model can be iteratively adapted to a new domain, allowing for quick domain-specific adaptation. Experiments on diverse tasks demonstrate superior and robust performance, which can further promote the wide application of domain-specific LLMs.
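To make the idea of combining several LoRA modules through explicit routing concrete, here is a minimal sketch. It assumes a frozen base weight matrix, one low-rank pair (A, B) per domain, and a router that is simply a lookup on a known domain label; the paper's router and shapes may differ, and the names `matvec` and `lora_forward` are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(x, base_w, adapters, domain):
    """Compute base_w @ x + B_d @ (A_d @ x) for the adapter of `domain`.

    `adapters` maps a domain label to a pair (A, B), where A has shape
    (rank, d_in) and B has shape (d_out, rank). Only the selected adapter
    contributes, so adapters for other domains cannot interfere.
    """
    a, b = adapters[domain]
    base = matvec(base_w, x)
    delta = matvec(b, matvec(a, x))
    return [p + q for p, q in zip(base, delta)]


# Toy example: identity base weights and two rank-1 adapters.
base_w = [[1.0, 0.0], [0.0, 1.0]]
adapters = {
    "math": ([[1.0, 0.0]], [[1.0], [0.0]]),
    "code": ([[0.0, 1.0]], [[0.0], [2.0]]),
}
math_out = lora_forward([3.0, 4.0], base_w, adapters, "math")
code_out = lora_forward([3.0, 4.0], base_w, adapters, "code")
```

With these toy weights the same input gives `[6.0, 4.0]` under the "math" label and `[3.0, 12.0]` under the "code" label, showing that each domain sees only its own low-rank update on top of the shared base weights.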

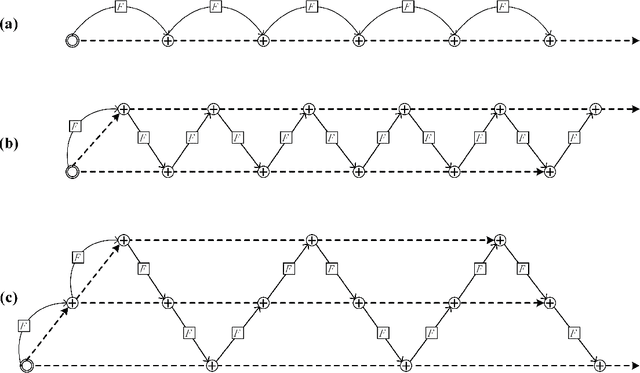

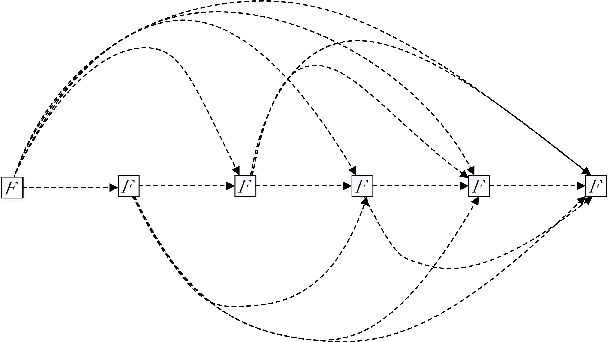

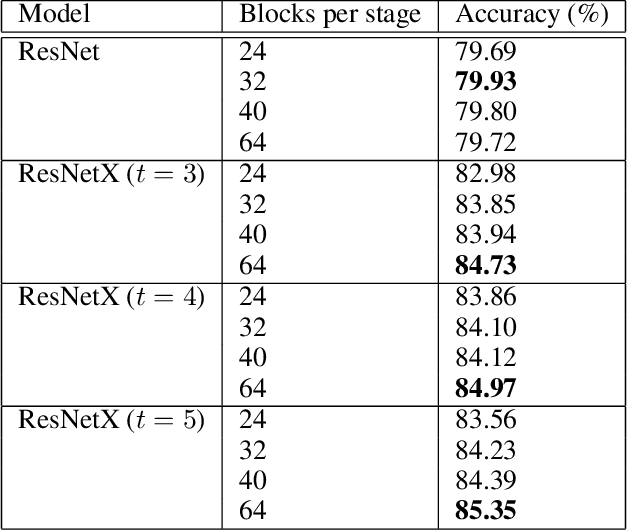

ResNetX: a more disordered and deeper network architecture

Dec 18, 2019

Abstract: Designing efficient network structures has always been central to neural network research. ResNet and its variants have proved to be efficient in architecture. However, how to theoretically characterize the influence of network structure on performance is still vague. With the help of techniques in complex networks, we here provide a natural yet efficient extension to ResNet by folding its backbone chain. Our architecture has two structural features when being mapped to directed acyclic graphs: the first is a higher degree of disorder compared with ResNet, which lets ResNetX explore a larger number of feature maps with different sizes of receptive fields. The second is a larger proportion of shorter paths compared to ResNet, which improves the direct flow of information through the entire network. Our architecture exposes a new dimension, namely "fold depth", in addition to existing dimensions of depth, width, and cardinality. Our architecture is a natural extension to ResNet, and can be integrated with existing state-of-the-art methods with little effort. Image classification results on CIFAR-10 and CIFAR-100 benchmarks suggest that our new network architecture performs better than ResNet.

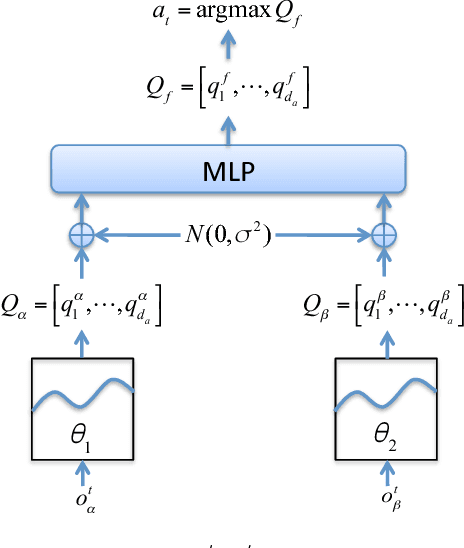

Federated Reinforcement Learning

Jan 25, 2019

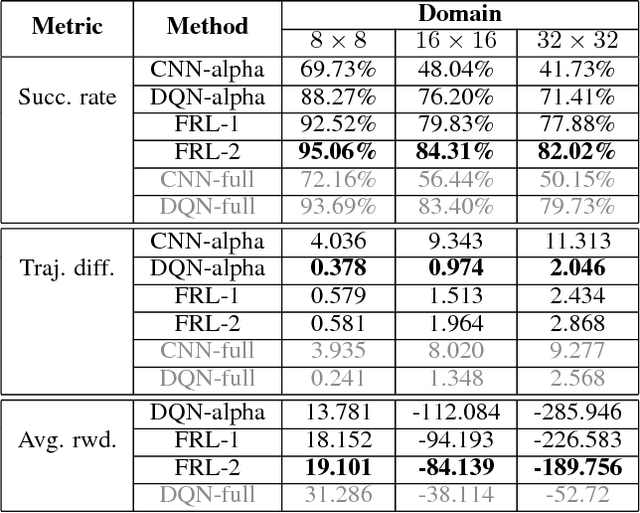

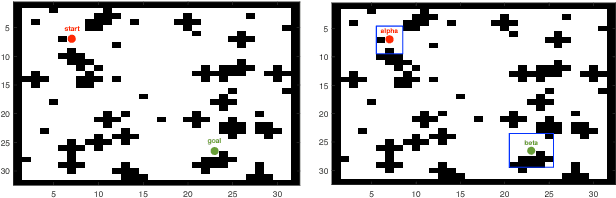

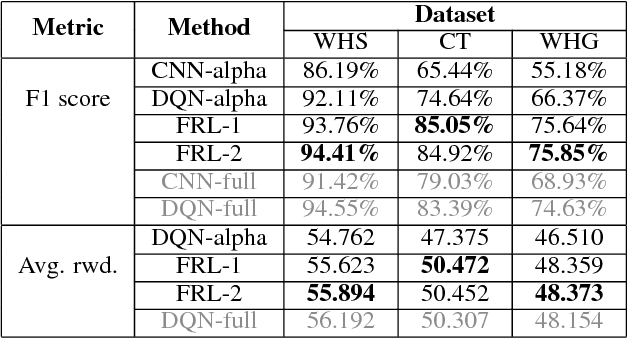

Abstract: In reinforcement learning, building high-quality policies is challenging when the feature space of states is small and the training data is limited. Directly transferring data or knowledge from one agent to another will not work due to the privacy requirement of data and models. In this paper, we propose a novel reinforcement learning approach, namely federated reinforcement learning (FRL), that respects the privacy requirement and builds a Q-network for each agent with the help of other agents. To protect the privacy of data and models, we exploit Gaussian differentials on the information shared with each other when updating their local models. In the experiment, we evaluate our FRL framework in two diverse domains, Grid-world and Text2Action domains, by comparing it to various baselines.
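One way to read the privacy step is that each agent perturbs the values it shares with zero-mean Gaussian noise before sending them. The sketch below illustrates only that reading; the function name `share_with_noise`, the noise scale `sigma`, and the choice of perturbing raw Q-values are assumptions, not the paper's protocol.

```python
import random


def share_with_noise(q_values, sigma, rng):
    """Return a copy of `q_values` with zero-mean Gaussian noise added.

    `sigma` is the standard deviation of the noise. A larger `sigma` hides
    more about the local model but makes the shared values less useful.
    """
    return [q + rng.gauss(0.0, sigma) for q in q_values]


# Hypothetical local Q-values for three actions in one state.
local_q = [0.2, 1.5, -0.3]
rng = random.Random(0)  # seeded only to make the example repeatable
shared_q = share_with_noise(local_q, sigma=0.1, rng=rng)
```

The receiving agent only ever sees `shared_q`, never `local_q`; setting `sigma` to zero disables the protection and returns the original values unchanged.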

Extracting Action Sequences from Texts Based on Deep Reinforcement Learning

May 11, 2018

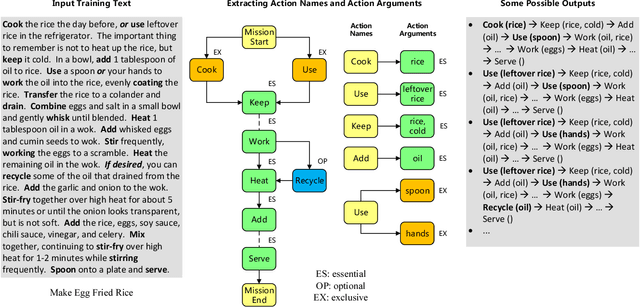

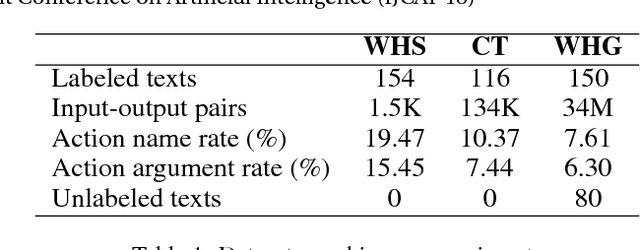

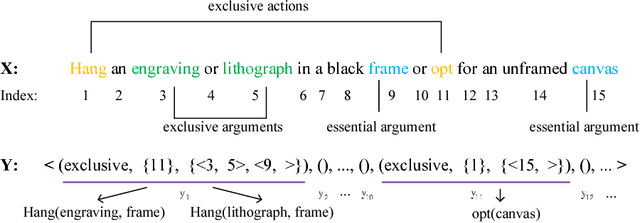

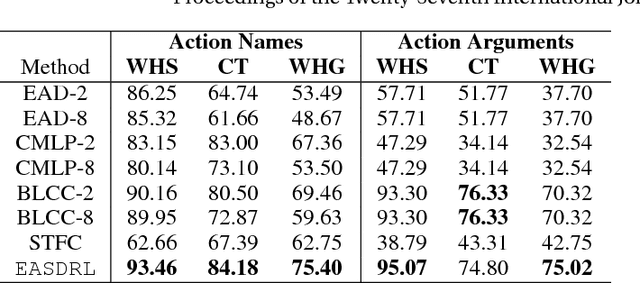

Abstract: Extracting action sequences from natural language texts is challenging, as it requires commonsense inferences based on world knowledge. Although there has been work on extracting action scripts, instructions, navigation actions, etc., these approaches require that either the set of candidate actions be provided in advance, or that action descriptions be restricted to a specific form, e.g., description templates. In this paper, we aim to extract action sequences from texts in free natural language, i.e., without any restricted templates, when the candidate set of actions is unknown. We propose to extract action sequences from texts based on the deep reinforcement learning framework. Specifically, we view "selecting" or "eliminating" words from texts as "actions", and the texts associated with actions as "states". We then build Q-networks to learn the policy of extracting actions and extract plans from the labeled texts. We demonstrate the effectiveness of our approach on several datasets with comparison to state-of-the-art approaches, including online experiments interacting with humans.
