Weiwei Song

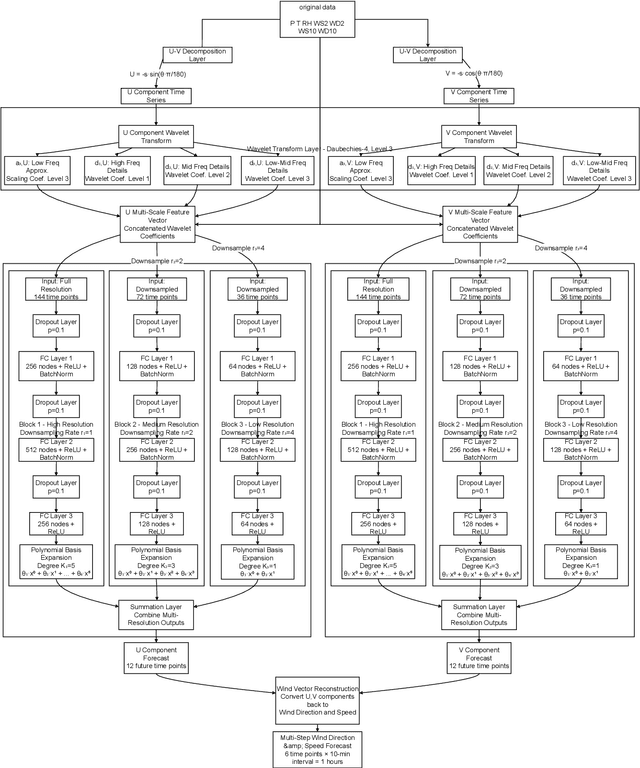

WaveHiTS: Wavelet-Enhanced Hierarchical Time Series Modeling for Wind Direction Nowcasting in Eastern Inner Mongolia

Apr 09, 2025

Abstract: Wind direction forecasting plays a crucial role in optimizing wind energy production, but faces significant challenges due to the circular nature of directional data, error accumulation in multi-step forecasting, and complex meteorological interactions. This paper presents a novel model, WaveHiTS, which integrates wavelet transform with Neural Hierarchical Interpolation for Time Series to address these challenges. Our approach decomposes wind direction into U-V components, applies wavelet transform to capture multi-scale frequency patterns, and utilizes a hierarchical structure to model temporal dependencies at multiple scales, effectively mitigating error propagation. Experiments conducted on real-world meteorological data from Inner Mongolia, China demonstrate that WaveHiTS significantly outperforms deep learning models (RNN, LSTM, GRU), transformer-based approaches (TFT, Informer, iTransformer), and hybrid models (EMD-LSTM). The proposed model achieves RMSE values of approximately 19.2°-19.4°, compared to 56°-64° for recurrent deep learning models, and maintains consistent accuracy across all forecasting steps up to 60 minutes ahead. Moreover, WaveHiTS demonstrates superior robustness, with vector correlation coefficients (VCC) of 0.985-0.987 and hit rates of 88.5%-90.1%, substantially outperforming baseline models. Ablation studies confirm that each component (wavelet transform, hierarchical structure, and U-V decomposition) contributes meaningfully to overall performance. These improvements in wind direction nowcasting have significant implications for enhancing wind turbine yaw control efficiency and grid integration of wind energy.
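To illustrate why the circular nature of directional data matters, here is a minimal sketch of a U-V decomposition and a wrap-aware error measure. It is not the authors' implementation: the unit-vector convention (u = sin, v = cos, with no wind speed and no meteorological sign flip) and all function names are assumptions made for illustration.

```python
import math

def direction_to_uv(deg):
    """Map a direction in degrees to a unit vector (u, v) = (sin, cos).
    Assumed convention for illustration; the paper may use a different one."""
    rad = math.radians(deg)
    return math.sin(rad), math.cos(rad)

def uv_to_direction(u, v):
    """Recover the direction in degrees, wrapped modulo 360."""
    return math.degrees(math.atan2(u, v)) % 360.0

def circular_error(pred_deg, true_deg):
    """Smallest absolute angular difference, between 0 and 180 degrees."""
    diff = abs(pred_deg - true_deg) % 360.0
    return min(diff, 360.0 - diff)

def circular_rmse(preds, trues):
    """Root-mean-square of the wrap-aware angular errors."""
    sq = [circular_error(p, t) ** 2 for p, t in zip(preds, trues)]
    return math.sqrt(sum(sq) / len(sq))
```

With this error measure, a forecast of 359° against an observation of 1° counts as a 2° miss rather than a 358° one, which is the discontinuity that regressing on the raw angle would suffer from.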

FusDreamer: Label-efficient Remote Sensing World Model for Multimodal Data Classification

Mar 18, 2025

Abstract: World models significantly enhance hierarchical understanding, improving data integration and learning efficiency. To explore the potential of the world model in the remote sensing (RS) field, this paper proposes a label-efficient remote sensing world model for multimodal data fusion (FusDreamer). The FusDreamer uses the world model as a unified representation container to abstract common and high-level knowledge, promoting interactions across different types of data, i.e., hyperspectral (HSI), light detection and ranging (LiDAR), and text data. Initially, a new latent diffusion fusion and multimodal generation paradigm (LaMG) is utilized for its exceptional information integration and detail retention capabilities. Subsequently, an open-world knowledge-guided consistency projection (OK-CP) module incorporates prompt representations for visually described objects and aligns language-visual features through contrastive learning. In this way, the domain gap can be bridged by fine-tuning the pre-trained world models with limited samples. Finally, an end-to-end multitask combinatorial optimization (MuCO) strategy can capture slight feature bias and constrain the diffusion process in a collaboratively learnable direction. Experiments conducted on four typical datasets indicate the effectiveness and advantages of the proposed FusDreamer. The corresponding code will be released at https://github.com/Cimy-wang/FusDreamer.

LIR-LIVO: A Lightweight, Robust LiDAR/Vision/Inertial Odometry with Illumination-Resilient Deep Features

Feb 12, 2025

Abstract: In this paper, we propose LIR-LIVO, a lightweight and robust LiDAR-inertial-visual odometry system designed for challenging illumination and degraded environments. The proposed method leverages deep learning-based illumination-resilient features and LiDAR-Inertial-Visual Odometry (LIVO). By incorporating advanced techniques such as uniform depth distribution of features enabled by depth association with LiDAR point clouds and adaptive feature matching utilizing SuperPoint and LightGlue, LIR-LIVO achieves state-of-the-art (SOTA) accuracy and robustness with low computational cost. Experiments are conducted on benchmark datasets, including NTU-VIRAL, Hilti'22, and R3LIVE-Dataset. The corresponding results demonstrate that our proposed method outperforms other SOTA methods on both standard and challenging datasets. Particularly, the proposed method demonstrates robust pose estimation under poor ambient lighting conditions in the Hilti'22 dataset. The code of this work is publicly accessible on GitHub to facilitate advancements in the robotics community.

Forecasting the Future with Future Technologies: Advancements in Large Meteorological Models

Apr 10, 2024

Abstract: The field of meteorological forecasting has undergone a significant transformation with the integration of large models, especially those employing deep learning techniques. This paper reviews the advancements and applications of these models in weather prediction, emphasizing their role in transforming traditional forecasting methods. Models like FourCastNet, Pangu-Weather, GraphCast, ClimaX, and FengWu have made notable contributions by providing accurate, high-resolution forecasts, surpassing the capabilities of traditional Numerical Weather Prediction (NWP) models. These models utilize advanced neural network architectures, such as Convolutional Neural Networks (CNNs), Graph Neural Networks (GNNs), and Transformers, to process diverse meteorological data, enhancing predictive accuracy across various time scales and spatial resolutions. The paper addresses challenges in this domain, including data acquisition and computational demands, and explores future opportunities for model optimization and hardware advancements. It underscores the integration of artificial intelligence with conventional meteorological techniques, promising improved weather prediction accuracy and a significant contribution to addressing climate-related challenges. This synergy positions large models as pivotal in the evolving landscape of meteorological forecasting.

Four years of multi-modal odometry and mapping on the rail vehicles

Aug 22, 2023

Abstract: Precise, seamless, and efficient train localization, together with long-term railway environment monitoring, is essential to reliability, availability, maintainability, and safety (RAMS) engineering for railroad systems. Simultaneous localization and mapping (SLAM) is at the core of solving the two problems concurrently. To this end, we propose a high-performance and versatile multi-modal framework in this paper, targeted at the odometry and mapping task for various rail vehicles. Our system is built atop an inertial-centric state estimator that tightly couples light detection and ranging (LiDAR), visual, and optionally satellite navigation and map-based localization information, with the convenience and extendibility of loosely coupled methods. The inertial sensors (IMU and wheel encoder) are treated as the primary sensors, and observations from the subsystems are used to constrain the accelerometer and gyroscope biases. Compared to point-only LiDAR-inertial methods, our approach leverages more geometric information by introducing both the track plane and electric power pillars into state estimation. The visual-inertial subsystem also utilizes environmental structure information by employing both lines and points. In addition, the method can handle sensor failures through automatic reconfiguration that bypasses the failed modules. Our proposed method has been extensively tested in long-duration railway operation over four years, covering general-speed, high-speed, and metro lines with both passenger and freight traffic. Further, we aim to share, in an open way, the experience, problems, and successes of our group with the robotics community so that those who work in such environments can avoid the same errors. With this in mind, we open-source some of the datasets to benefit the research community.

A LiDAR-Inertial SLAM Tightly-Coupled with Dropout-Tolerant GNSS Fusion for Autonomous Mine Service Vehicles

Aug 22, 2023

Abstract: Multi-modal sensor integration has become a crucial prerequisite for real-world navigation systems. Recent studies have reported successful deployment of such systems in many fields. However, navigation in mine scenes remains challenging due to satellite signal dropouts, degraded perception, and observation degeneracy. To solve this problem, we propose a LiDAR-inertial odometry method in this paper, utilizing both a Kalman filter and graph optimization. The front-end consists of multiple LiDAR-inertial odometries running in parallel, where the laser points, IMU, and wheel odometer information are tightly fused in an error-state Kalman filter. Instead of the commonly used feature points, we employ surface elements (surfels) for registration. The back-end constructs a pose graph and jointly optimizes the pose estimation results from inertial, LiDAR odometry, and global navigation satellite system (GNSS) measurements. Since the vehicle operates inside the tunnel for long periods, the large accumulated drift may not be fully corrected by the GNSS measurements. We therefore leverage a loop-closure-based re-initialization process to achieve full alignment. In addition, the system robustness is improved through handling of data loss, stream consistency, and estimation error. The experimental results show that our system tolerates long-period degeneracy well through the cooperation of different LiDARs and surfel registration, achieving meter-level accuracy even when running for tens of minutes during GNSS dropouts.

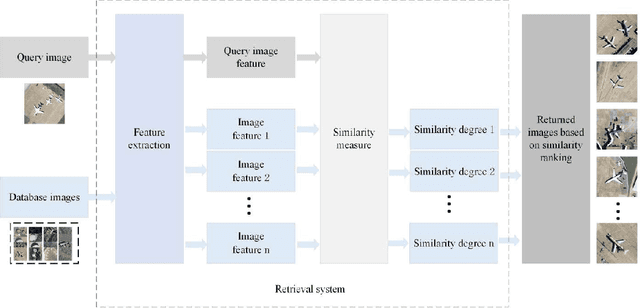

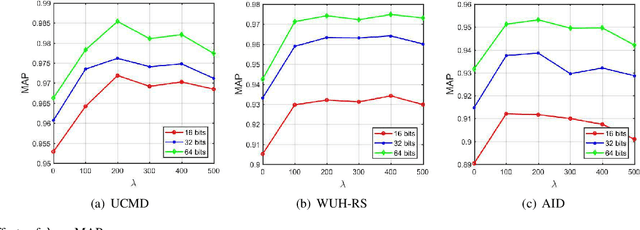

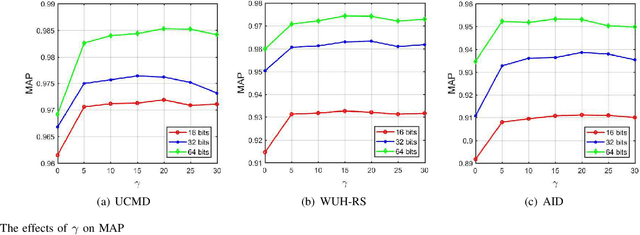

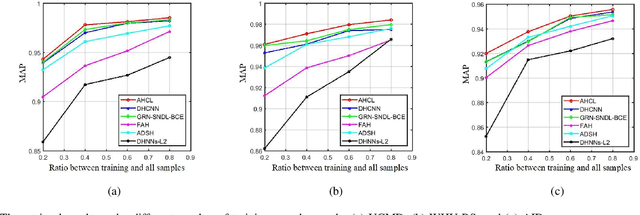

Asymmetric Hash Code Learning for Remote Sensing Image Retrieval

Jan 15, 2022

Abstract: Remote sensing image retrieval (RSIR), which aims to find a set of items similar to a given query image, is a very important task in remote sensing applications. Deep hashing, the current mainstream method, has achieved satisfactory retrieval performance. On one hand, various deep neural networks are used to extract semantic features of remote sensing images. On the other hand, hashing techniques are subsequently adopted to map the high-dimensional deep features to low-dimensional binary codes. Methods of this kind attempt to learn one hash function for both the query and database samples in a symmetric way. However, as the number of database samples increases, generating the hash codes of large-scale database images typically becomes time-consuming. In this paper, we propose a novel deep hashing method, named asymmetric hash code learning (AHCL), for RSIR. The proposed AHCL generates the hash codes of query and database images in an asymmetric way. In more detail, the hash codes of query images are obtained by binarizing the output of the network, while the hash codes of database images are directly learned by solving the designed objective function. In addition, we combine the semantic information of each image and the similarity information of pairs of images as supervised information to train a deep hashing network, which improves the representation ability of deep features and hash codes. The experimental results on three public datasets demonstrate that the proposed method outperforms symmetric methods in terms of retrieval accuracy and efficiency. The source code is available at https://github.com/weiweisong415/Demo AHCL for TGRS2022.
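The query side of the asymmetric scheme described above can be sketched as follows. This is a hedged illustration only: sign binarization and Hamming-distance ranking are common choices in deep hashing and are assumed here, the function names are hypothetical, and the database codes are taken as given because the abstract does not spell out the objective function that produces them.

```python
def binarize(outputs):
    """Sign-binarize real-valued network outputs into a {-1, +1} code
    (zero is mapped to +1 by assumption)."""
    return [1 if x >= 0 else -1 for x in outputs]

def hamming(a, b):
    """Number of positions where two equal-length codes differ."""
    return sum(1 for x, y in zip(a, b) if x != y)

def rank_database(query_outputs, database_codes):
    """Return database indices ordered from nearest to farthest.

    Only the query passes through binarize(); database codes are
    assumed to have been learned directly and stored beforehand.
    """
    q = binarize(query_outputs)
    return sorted(range(len(database_codes)),
                  key=lambda i: hamming(q, database_codes[i]))
```

For example, `rank_database([0.3, -1.2, 0.0, -0.1], [[1, 1, 1, 1], [1, -1, 1, -1], [-1, -1, 1, -1]])` orders the database by Hamming distances 2, 0, and 1, so the second item ranks first.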

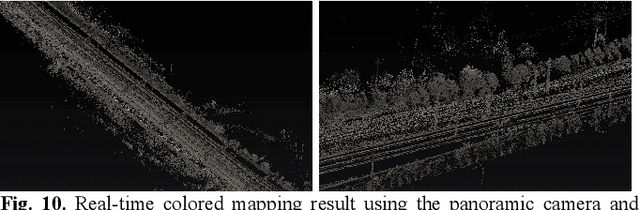

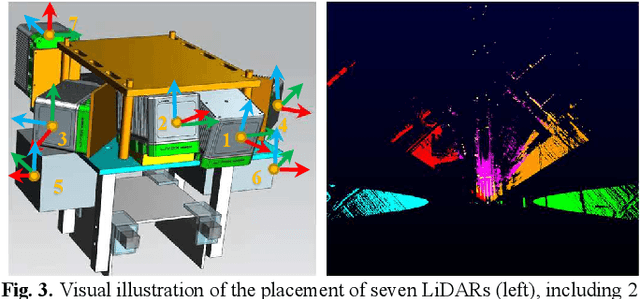

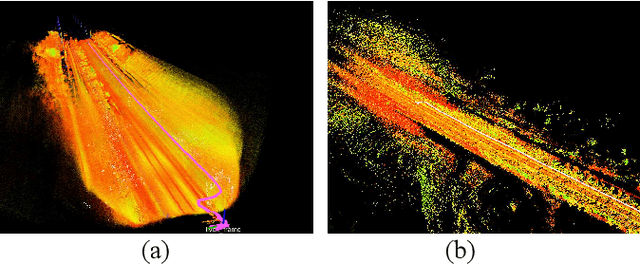

Simultaneous Location of Rail Vehicles and Mapping of Environment with Multiple LiDARs

Dec 25, 2021

Abstract: Precise and real-time rail vehicle localization, together with railway environment monitoring, is crucial for railroad safety. In this letter, we propose a multi-LiDAR based simultaneous localization and mapping (SLAM) system for railway applications. Our approach starts with measurement preprocessing to denoise and synchronize multiple LiDAR inputs. Different frame-to-frame registration methods are used according to the LiDAR placement. In addition, we leverage the plane constraints from extracted rail tracks to improve the system accuracy. The local map is further aligned with the global map utilizing absolute position measurements. Considering the unavoidable metal abrasion and screw loosening, online extrinsic refinement is triggered during long-duration operation. The proposed method is extensively verified on datasets gathered over 3000 km. The results demonstrate that the proposed system achieves accurate and robust localization together with effective mapping for large-scale environments. Our system has already been applied to a freight traffic railroad for monitoring tasks.

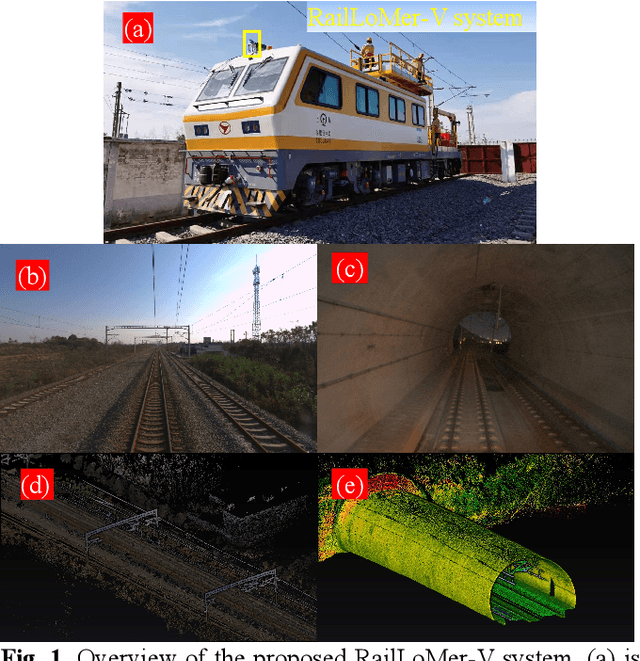

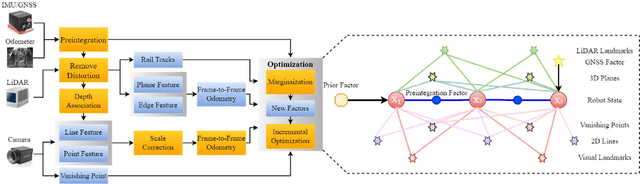

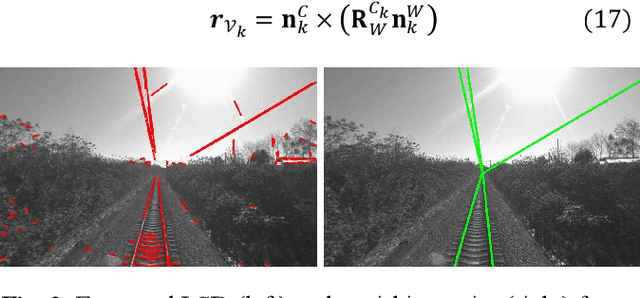

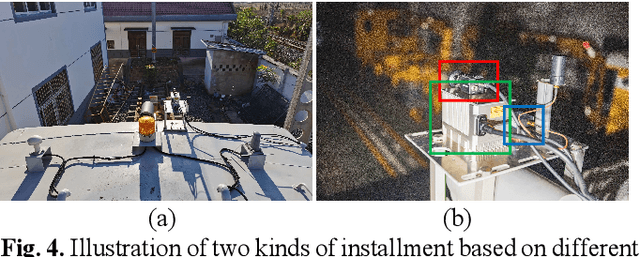

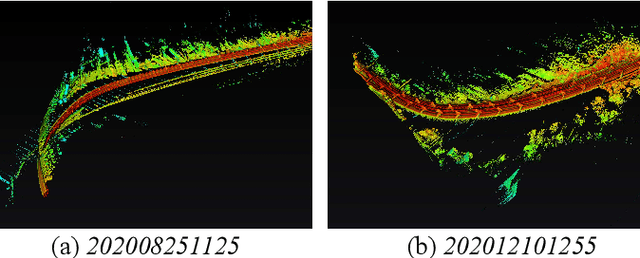

Rail Vehicle Localization and Mapping with LiDAR-Vision-Inertial-GNSS Fusion

Dec 16, 2021

Abstract: In this paper, we present a global navigation satellite system (GNSS) aided LiDAR-visual-inertial scheme, RailLoMer-V, for accurate and robust rail vehicle localization and mapping. RailLoMer-V is formulated atop a factor graph and consists of two subsystems: an odometer assisted LiDAR-inertial system (OLIS) and an odometer integrated visual-inertial system (OVIS). Both subsystems exploit the typical geometric structure of railroads. The plane constraints from extracted rail tracks are used to compensate for the rotation and vertical errors in OLIS. In addition, line features and vanishing points are leveraged to constrain rotation drift in OVIS. The proposed framework is extensively evaluated on datasets covering more than 800 km, gathered over more than a year on both general-speed and high-speed railways, day and night. Taking advantage of the tightly-coupled integration of all measurements from individual sensors, our framework remains accurate over long-duration tasks and robust in severely degenerate scenarios such as railway tunnels. In addition, real-time performance can be achieved with an onboard computer.

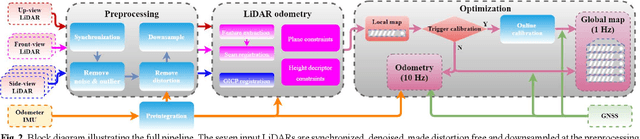

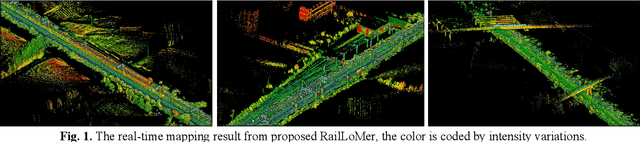

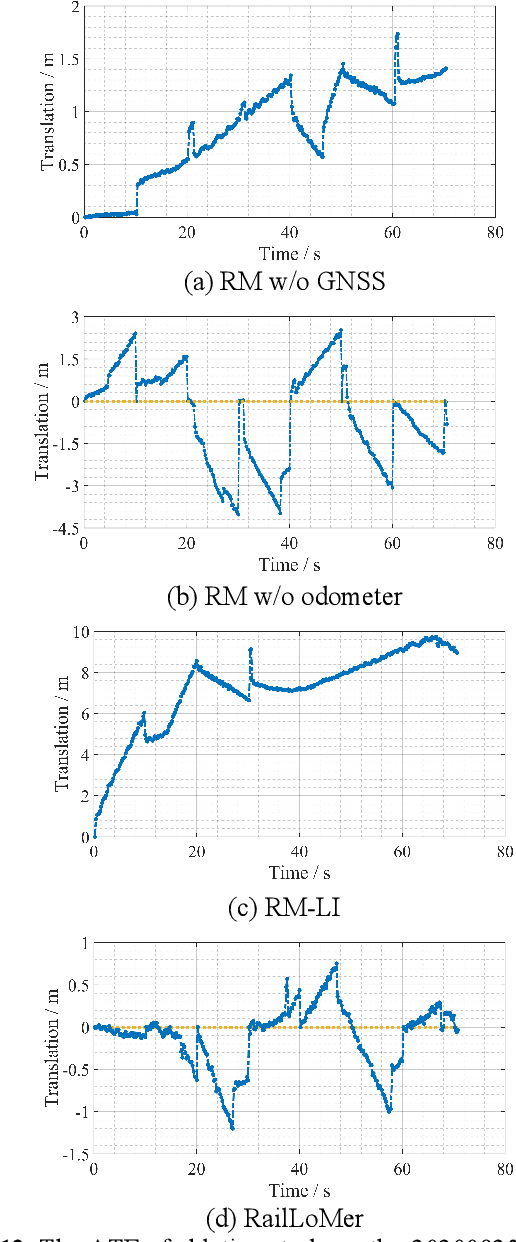

RailLoMer: Rail Vehicle Localization and Mapping with LiDAR-IMU-Odometer-GNSS Data Fusion

Nov 30, 2021

Abstract: We present RailLoMer in this article, to achieve real-time, accurate, and robust odometry and mapping for rail vehicles. RailLoMer receives measurements from two LiDARs, an IMU, a train odometer, and a global navigation satellite system (GNSS) receiver. In the frontend, the estimated motion from IMU/odometer preintegration de-skews the denoised point clouds and produces an initial guess for frame-to-frame LiDAR odometry. In the backend, a sliding-window-based factor graph is formulated to jointly optimize multi-modal information. In addition, we leverage the plane constraints from extracted rail tracks and the structure appearance descriptor to further improve the system robustness against repetitive structures. To ensure a globally consistent and less blurry mapping result, we develop a two-stage mapping method that first performs scan-to-map registration at local scale, then utilizes the GNSS information to register the submaps. The proposed method is extensively evaluated on datasets gathered over a long time span across numerous scales and scenarios, and the results show that RailLoMer delivers decimeter-grade localization accuracy even in large or degenerated environments. We also integrate RailLoMer into an interactive train state and railway monitoring system prototype design, which has already been deployed to an experimental freight traffic railroad.
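The de-skewing step in the frontend can be pictured with a small 2D sketch. It is a simplification under stated assumptions, not RailLoMer's implementation: the motion over one scan `(dx, dy, dyaw)` is taken as already estimated (in the paper it comes from IMU/odometer preintegration), the pose is linearly interpolated across the scan, and the function name and point layout are hypothetical.

```python
import math

def deskew_scan(points, dx, dy, dyaw):
    """Motion-compensate one 2D LiDAR scan.

    points: list of (x, y, s), where (x, y) is measured in the sensor
            frame at capture time and s in [0, 1] is the fraction of
            the scan period elapsed when the point was captured.
    dx, dy, dyaw: assumed sensor motion over the whole scan, expressed
            in the scan-start frame.
    Returns all points expressed in the scan-start frame.
    """
    out = []
    for x, y, s in points:
        # Linearly interpolated sensor pose at the point's capture time.
        yaw = s * dyaw
        c, sn = math.cos(yaw), math.sin(yaw)
        # Rotate into the start-frame orientation, then translate.
        out.append((c * x - sn * y + s * dx,
                    sn * x + c * y + s * dy))
    return out
```

A point captured at the very start of the scan (`s = 0`) is left unchanged, while a point captured at the end (`s = 1`) is moved by the full estimated motion; without this correction a moving sensor smears straight structures such as rails into curves.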
