Zhiyong Tu

Four years of multi-modal odometry and mapping on the rail vehicles

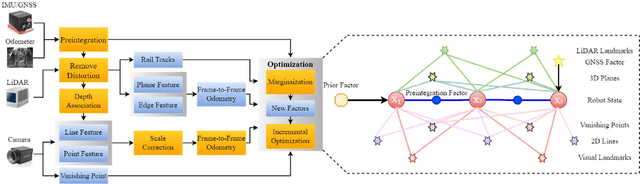

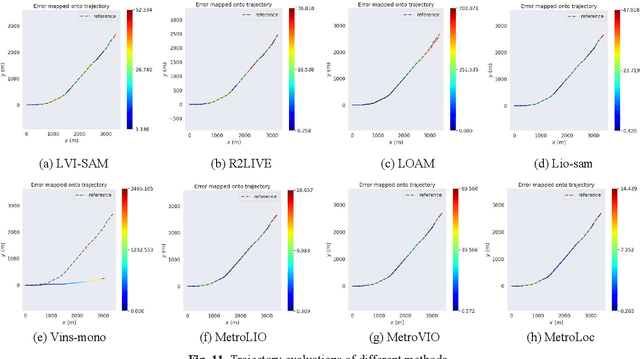

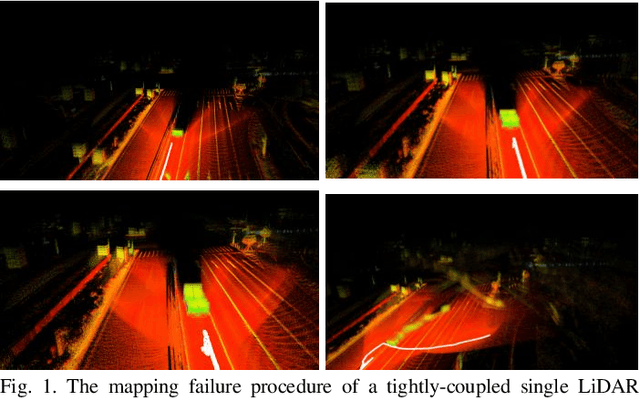

Aug 22, 2023

Abstract: Precise, seamless, and efficient train localization together with long-term railway environment monitoring is essential to reliability, availability, maintainability, and safety (RAMS) engineering for railroad systems. Simultaneous localization and mapping (SLAM) is at the core of solving the two problems concurrently. To this end, we propose a high-performance and versatile multi-modal framework in this paper, targeting the odometry and mapping task for various rail vehicles. Our system is built atop an inertial-centric state estimator that tightly couples light detection and ranging (LiDAR), visual, and optionally satellite navigation and map-based localization information with the convenience and extensibility of loosely coupled methods. The inertial sensors, IMU and wheel encoder, are treated as the primary sensors, which receive observations from the subsystems to constrain the accelerometer and gyroscope biases. Compared to point-only LiDAR-inertial methods, our approach leverages more geometry information by introducing both the track plane and electric power pillars into state estimation. The visual-inertial subsystem also utilizes the environmental structure information by employing both lines and points. In addition, the method handles sensor failures by automatically reconfiguring itself to bypass failed modules. Our proposed method has been extensively tested in long-duration railway operations over four years, covering general-speed, high-speed, and metro lines with both passenger and freight traffic. Further, we aim to share, in an open way, the experience, problems, and successes of our group with the robotics community so that those who work in such environments can avoid the same errors. To this end, we open-source some of the datasets to benefit the research community.
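
The failure handling described in the abstract can be pictured with a small sketch. It is not the authors' implementation: the function name `active_subsystems`, the subsystem labels, and the timeout rule are all assumptions made for illustration. A subsystem is treated as failed when it has not delivered an observation within a timeout, and it is simply left out of the set passed to the estimator.

```python
def active_subsystems(last_update, now, timeout=0.5):
    """Return the names of subsystems whose latest observation is fresh.

    last_update maps a (hypothetical) subsystem name to the timestamp, in
    seconds, of its most recent observation. Subsystems that have been
    silent for longer than `timeout` are bypassed.
    """
    return sorted(
        name for name, stamp in last_update.items() if now - stamp <= timeout
    )


# Example: the visual subsystem has been silent for 2 s and is bypassed.
stamps = {"lidar": 9.9, "visual": 8.0, "gnss": 9.7}
print(active_subsystems(stamps, now=10.0))
```

Because the inertial sensors are the primary source in such a design, the estimator can keep propagating the state with whichever subsystems remain in the returned list.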

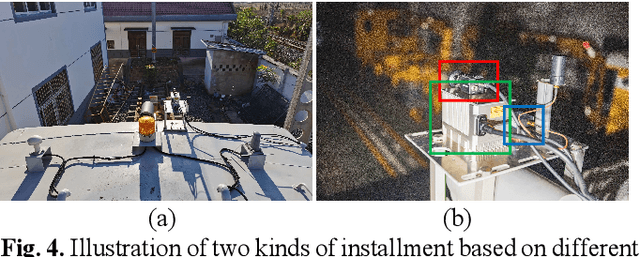

Simultaneous Location of Rail Vehicles and Mapping of Environment with Multiple LiDARs

Dec 25, 2021

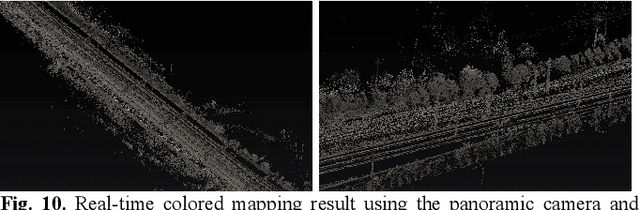

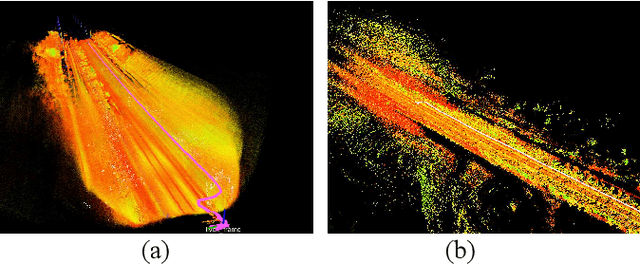

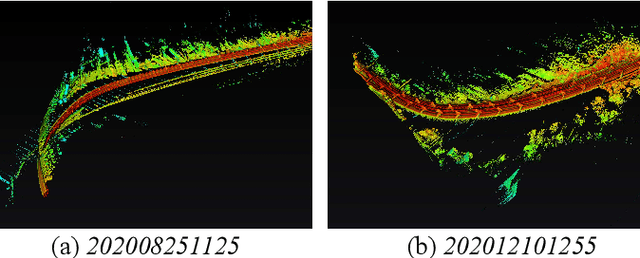

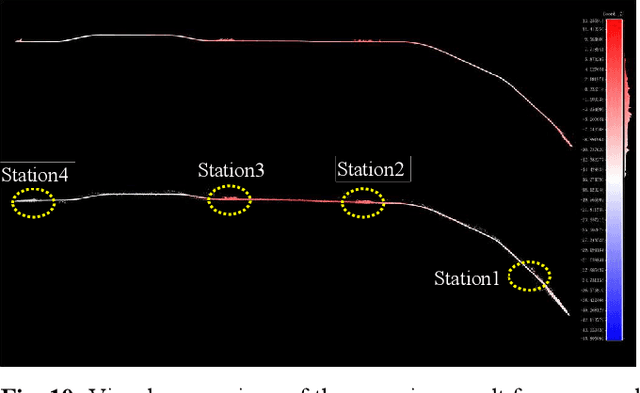

Abstract: Precise and real-time rail vehicle localization as well as railway environment monitoring is crucial for railroad safety. In this letter, we propose a multi-LiDAR based simultaneous localization and mapping (SLAM) system for railway applications. Our approach starts with measurement preprocessing to denoise and synchronize multiple LiDAR inputs. Different frame-to-frame registration methods are used according to the LiDAR placement. In addition, we leverage the plane constraints from extracted rail tracks to improve the system accuracy. The local map is further aligned with the global map using absolute position measurements. Considering the unavoidable metal abrasion and screw loosening, online extrinsic refinement is activated during long-term operation. The proposed method is extensively verified on datasets gathered over 3000 km. The results demonstrate that the proposed system achieves accurate and robust localization together with effective mapping for large-scale environments. Our system has already been applied to a freight traffic railroad for monitoring tasks.
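
To illustrate what a plane constraint from rail tracks can look like, here is a minimal sketch. It assumes the track surface is locally modelled as z = a*x + b*y + c and fitted by ordinary least squares; the abstract does not state the fitting method, and the function names `fit_plane` and `point_to_plane_distance` are hypothetical. The signed distance of a point to the fitted plane is the kind of residual an optimizer could penalize.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points."""
    sxx = sxy = sx = syy = sy = sxz = syz = sz = 0.0
    for x, y, z in points:
        sxx += x * x
        sxy += x * y
        sx += x
        syy += y * y
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    # Normal equations (A^T A) p = A^T z for p = (a, b, c).
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = det3(m)
    if abs(det) < 1e-12:
        raise ValueError("points are degenerate; the plane is not unique")
    solution = []
    for col in range(3):
        # Cramer's rule: replace one column with the right-hand side.
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        solution.append(det3(mc) / det)
    return tuple(solution)


def point_to_plane_distance(point, plane):
    """Signed distance from a point to the plane; positive above it."""
    a, b, c = plane
    x, y, z = point
    return (z - (a * x + b * y + c)) / math.sqrt(a * a + b * b + 1.0)
```

A real system would typically fit the plane robustly (for example with outlier rejection) and express the residual as a function of the vehicle pose; this sketch only shows the geometric quantity involved.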

Rail Vehicle Localization and Mapping with LiDAR-Vision-Inertial-GNSS Fusion

Dec 16, 2021

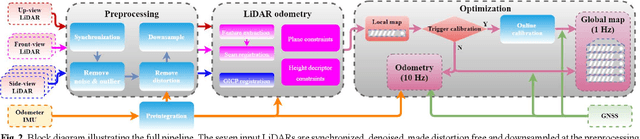

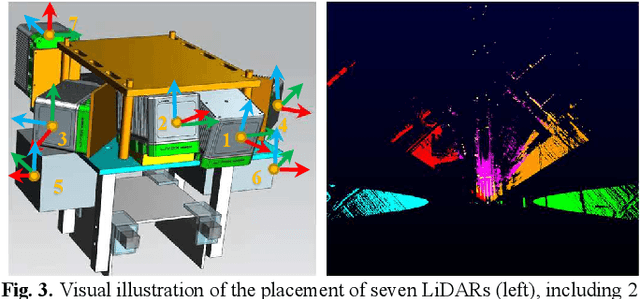

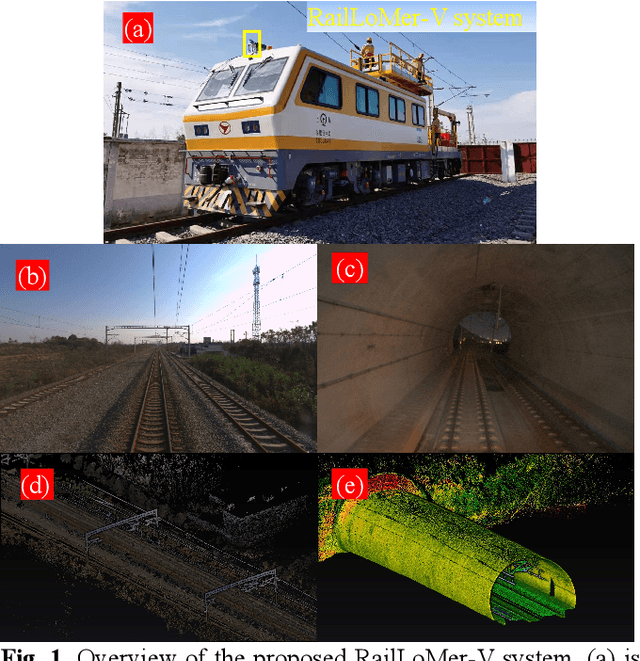

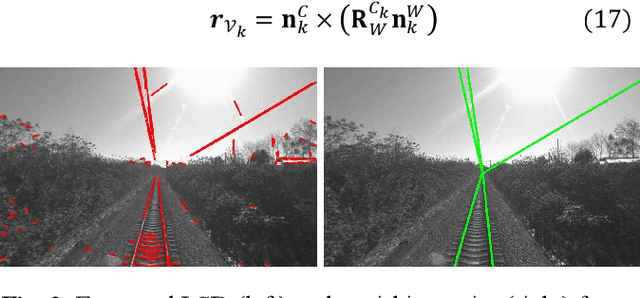

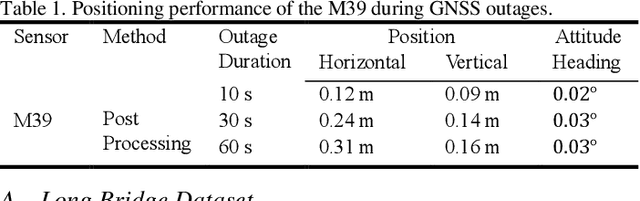

Abstract: In this paper, we present a global navigation satellite system (GNSS) aided LiDAR-visual-inertial scheme, RailLoMer-V, for accurate and robust rail vehicle localization and mapping. RailLoMer-V is formulated atop a factor graph and consists of two subsystems: an odometer-assisted LiDAR-inertial system (OLIS) and an odometer-integrated visual-inertial system (OVIS). Both subsystems exploit the typical geometric structure of railroads. The plane constraints from extracted rail tracks are used to compensate for the rotation and vertical errors in OLIS. In addition, line features and vanishing points are leveraged to constrain rotation drift in OVIS. The proposed framework is extensively evaluated on datasets covering over 800 km, gathered over more than a year on both general-speed and high-speed railways, day and night. Taking advantage of the tightly coupled integration of all measurements from individual sensors, our framework is accurate in long-duration tasks and robust in severely degenerate scenarios (railway tunnels). In addition, real-time performance can be achieved with an onboard computer.
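
As a rough illustration of the vanishing-point idea, the sketch below intersects two image lines (for instance the two rails) using homogeneous coordinates. It is only a sketch: the helper names are hypothetical, and the extraction of line segments from the image is not shown. Parallel 3D lines project to image lines that meet at a vanishing point, whose position depends on the direction of those lines in the camera frame and not on the camera's position, which is why it can constrain rotation.

```python
def cross(u, v):
    """Cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def line_through(p, q):
    """Homogeneous line through two pixel coordinates (x, y)."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def vanishing_point(segment_a, segment_b, eps=1e-9):
    """Intersect two image segments; None if they are parallel in the image."""
    x, y, w = cross(line_through(*segment_a), line_through(*segment_b))
    if abs(w) < eps:
        return None  # intersection at infinity
    return (x / w, y / w)


# Example: two rails converging towards the top of an 800-pixel-wide image.
left_rail = ((0.0, 400.0), (200.0, 200.0))
right_rail = ((800.0, 400.0), (600.0, 200.0))
print(vanishing_point(left_rail, right_rail))
```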

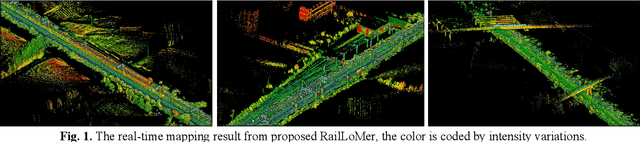

RailLoMer: Rail Vehicle Localization and Mapping with LiDAR-IMU-Odometer-GNSS Data Fusion

Nov 30, 2021

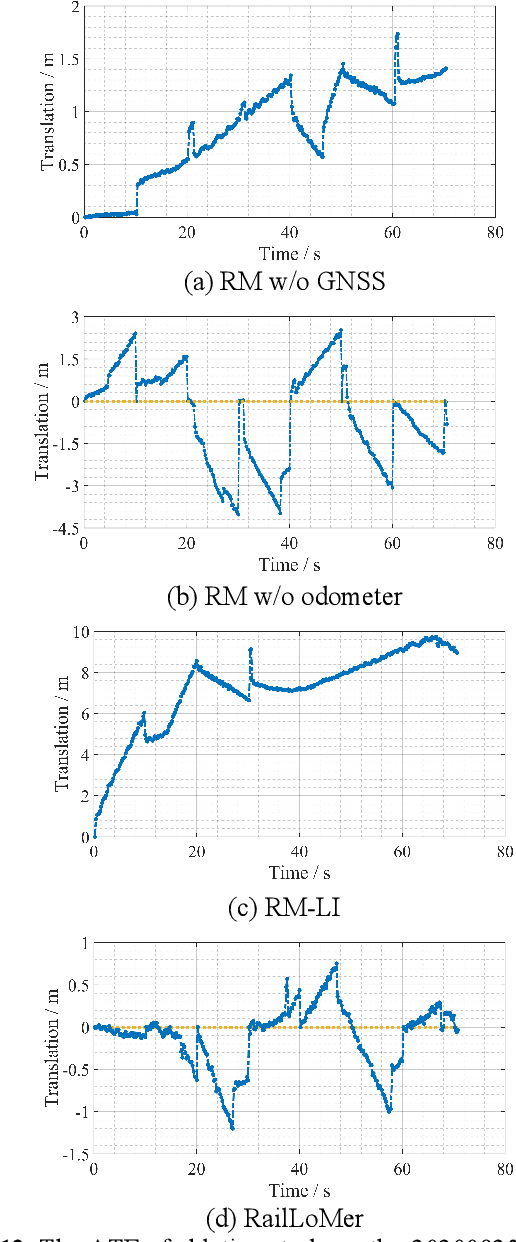

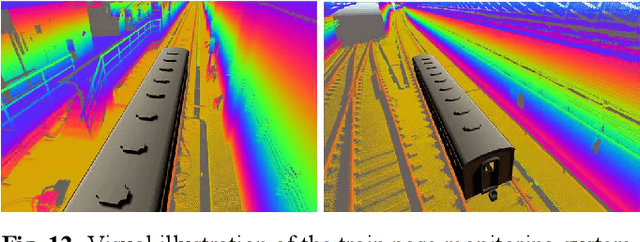

Abstract: We present RailLoMer in this article to achieve real-time, accurate, and robust odometry and mapping for rail vehicles. RailLoMer receives measurements from two LiDARs, an IMU, a train odometer, and a global navigation satellite system (GNSS) receiver. In the frontend, the estimated motion from IMU/odometer preintegration de-skews the denoised point clouds and produces an initial guess for frame-to-frame LiDAR odometry. In the backend, a sliding-window based factor graph is formulated to jointly optimize multi-modal information. In addition, we leverage the plane constraints from extracted rail tracks and the structure appearance descriptor to further improve the system robustness against repetitive structures. To ensure a globally consistent and less blurry mapping result, we develop a two-stage mapping method that first performs scan-to-map registration at local scale, then utilizes the GNSS information to register the submaps. The proposed method is extensively evaluated on datasets gathered over a long time span across numerous scales and scenarios, and the results show that RailLoMer delivers decimeter-grade localization accuracy even in large or degenerate environments. We also integrate RailLoMer into an interactive train state and railway monitoring system prototype, which has already been deployed on an experimental freight traffic railroad.
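
The de-skewing step can be sketched in a simplified planar form. The assumptions here are not from the paper: motion is restricted to 2D, the pose change over one sweep `(dx, dy, dyaw)` is taken as given (in the described system it would come from IMU/odometer preintegration), and the pose is linearly interpolated by each point's relative timestamp `s` in [0, 1]. The function name `deskew_scan` is hypothetical.

```python
import math


def deskew_scan(points, delta_pose):
    """Express every point of a sweep in the sensor frame at sweep start.

    points: iterable of (x, y, s), where s in [0, 1] is the fraction of the
        sweep elapsed when the point was measured.
    delta_pose: (dx, dy, dyaw) motion of the sensor over the whole sweep,
        expressed in the start frame.
    """
    dx, dy, dyaw = delta_pose
    corrected = []
    for x, y, s in points:
        yaw = s * dyaw  # interpolated heading at measurement time
        c, sn = math.cos(yaw), math.sin(yaw)
        # Rotate into the start frame, then add the interpolated translation.
        corrected.append((c * x - sn * y + s * dx, sn * x + c * y + s * dy))
    return corrected


# Example: the vehicle moves 2 m forward during the sweep. A point seen
# 1 m ahead halfway through the sweep lies 2 m ahead of the start pose.
print(deskew_scan([(1.0, 0.0, 0.5)], (2.0, 0.0, 0.0)))
```

Linear interpolation of the pose is a common approximation for short sweeps; a full 3D version would interpolate rotations on SO(3) instead of a single yaw angle.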

MetroLoc: Metro Vehicle Mapping and Localization with LiDAR-Camera-Inertial Integration

Nov 01, 2021

Abstract: We propose an accurate and robust multi-modal sensor fusion framework, MetroLoc, for one of the most extreme scenarios: large-scale metro vehicle localization and mapping. MetroLoc is built atop an IMU-centric state estimator that tightly couples light detection and ranging (LiDAR), visual, and inertial information with the convenience of loosely coupled methods. The proposed framework is composed of three submodules: IMU odometry, LiDAR-inertial odometry (LIO), and visual-inertial odometry (VIO). The IMU is treated as the primary sensor, which receives the observations from LIO and VIO to constrain the accelerometer and gyroscope biases. Compared to previous point-only LIO methods, our approach leverages more geometry information by introducing both line and plane features into motion estimation. The VIO also utilizes the environmental structure information by employing both lines and points. Our proposed method has been extensively tested in long-duration metro environments with a maintenance vehicle. Experimental results show that the system is more accurate and robust than state-of-the-art approaches while running in real time. In addition, we develop a series of Virtual Reality (VR) applications for efficient, economical, and interactive monitoring of rail vehicle state and trackside infrastructure, which have already been deployed on an outdoor testing railroad.

GM-Livox: An Integrated Framework for Large-Scale Map Construction with Multiple Non-repetitive Scanning LiDARs

Oct 11, 2021

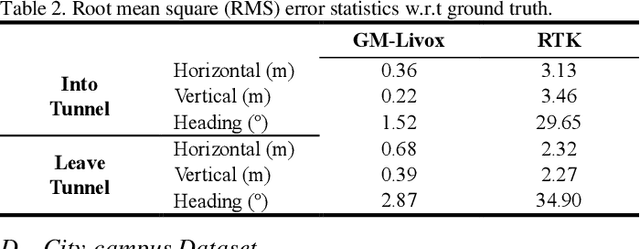

Abstract: Able to provide direct and sufficiently accurate range measurements, light detection and ranging (LiDAR) plays an essential role in localization and detection for autonomous vehicles. Since a single LiDAR intermittently suffers from hardware failure and performance degradation, we present a multi-LiDAR integration scheme in this article. Our framework tightly couples multiple non-repetitive scanning LiDARs with inertial, encoder, and global navigation satellite system (GNSS) measurements for pose estimation and simultaneous global map generation. First, we formulate a precise synchronization strategy to integrate isolated sensors, and the feature points extracted from separate LiDARs are merged into a single sweep. The fused scans are used to compute the scan-matching correspondences, which can be further refined by additional real-time kinematic (RTK) measurements. On this basis, we construct a factor graph from the inertial preintegration result, estimated ground constraints, and RTK data. To keep the number of poses under estimation bounded, we deploy a keyframe-based sliding-window optimization strategy in our system. Real-time performance is achieved with multi-threaded computation, and extensive experiments are conducted in challenging scenarios. Experimental results show that using multiple LiDARs improves both the robustness and the accuracy of the system.
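
The bookkeeping behind a keyframe-based sliding window can be sketched as follows. This is an illustration under stated assumptions, not the paper's method: the class name `KeyframeWindow`, the distance-based keyframe rule, and the default thresholds are hypothetical, and old keyframes are simply dropped here, whereas an actual optimizer would usually marginalize them into a prior.

```python
import math
from collections import deque


class KeyframeWindow:
    """Keep at most `max_size` keyframes, spaced at least `min_distance` apart."""

    def __init__(self, max_size=5, min_distance=1.0):
        # A bounded deque discards the oldest keyframe automatically.
        self.window = deque(maxlen=max_size)
        self.min_distance = min_distance

    def try_insert(self, position):
        """Add `position` as a keyframe if it moved far enough; return success."""
        if self.window and math.dist(position, self.window[-1]) < self.min_distance:
            return False
        self.window.append(tuple(position))
        return True


# Example: only poses at least 1 m apart become keyframes, and the window
# never holds more than three of them.
kf = KeyframeWindow(max_size=3, min_distance=1.0)
for x in (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0):
    kf.try_insert((x, 0.0))
print(list(kf.window))
```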
