Weijun Ren

What Makes Entities Similar? A Similarity Flooding Perspective for Multi-sourced Knowledge Graph Embeddings

Jun 05, 2023

Abstract:Joint representation learning over multi-sourced knowledge graphs (KGs) yields transferable and expressive embeddings that improve downstream tasks. Entity alignment (EA) is a critical step in this process. Despite recent considerable research progress in embedding-based EA, how it works remains to be explored. In this paper, we provide a similarity flooding perspective to explain existing translation-based and aggregation-based EA models. We prove that the embedding learning process of these models actually seeks a fixpoint of pairwise similarities between entities. We also provide experimental evidence to support our theoretical analysis. We propose two simple but effective methods inspired by the fixpoint computation in similarity flooding, and demonstrate their effectiveness on benchmark datasets. Our work bridges the gap between recent embedding-based models and the conventional similarity flooding algorithm. It would improve our understanding of and increase our faith in embedding-based EA.
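To make the "fixpoint of pairwise similarities" idea concrete, here is a minimal sketch of a simplified similarity flooding iteration on two tiny graphs. It is not the paper's method or its proposed variants: the function name `similarity_flooding`, the unweighted propagation rule, the max-normalization, and the toy graphs are all assumptions chosen for illustration.

```python
def similarity_flooding(edges_a, edges_b, n_a, n_b, seed, iters=50, tol=1e-9):
    """Simplified similarity flooding (illustrative, unweighted propagation).

    edges_a / edges_b: lists of directed edges (u, v) in graph A / graph B.
    seed: n_a x n_b matrix of initial similarities (e.g. known aligned pairs).
    Repeats sim <- normalize(seed + propagated sim) until the change is below tol
    or the iteration budget is spent.
    """
    sim = [row[:] for row in seed]
    for _ in range(iters):
        nxt = [row[:] for row in seed]
        # A pair (u1, u2) and a pair (v1, v2) reinforce each other whenever
        # u1 -> v1 is an edge in A and u2 -> v2 is an edge in B.
        for u1, v1 in edges_a:
            for u2, v2 in edges_b:
                nxt[v1][v2] += sim[u1][u2]
                nxt[u1][u2] += sim[v1][v2]
        peak = max(max(row) for row in nxt)
        if peak == 0:
            return sim  # nothing to propagate
        nxt = [[x / peak for x in row] for row in nxt]
        delta = max(abs(nxt[i][j] - sim[i][j])
                    for i in range(n_a) for j in range(n_b))
        sim = nxt
        if delta < tol:
            break
    return sim


# Toy example (hypothetical data): two identical 3-node paths, one seed pair (0, 0).
path = [(0, 1), (1, 2)]
seed = [[1.0, 0.0, 0.0],
        [0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0]]
sim = similarity_flooding(path, path, 3, 3, seed)
best_match = [max(range(3), key=lambda j: sim[i][j]) for i in range(3)]
print(best_match)
```

In this toy setup the single seed pair spreads similarity along matching edges, so each node ends up most similar to its structural counterpart in the other graph.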

Deep Active Alignment of Knowledge Graph Entities and Schemata

Apr 19, 2023

Abstract:Knowledge graphs (KGs) store rich facts about the real world. In this paper, we study KG alignment, which aims to find alignment between not only entities but also relations and classes in different KGs. Alignment at the entity level can cross-fertilize alignment at the schema level. We propose a new KG alignment approach, called DAAKG, based on deep learning and active learning. With deep learning, it learns the embeddings of entities, relations and classes, and jointly aligns them in a semi-supervised manner. With active learning, it estimates how likely an entity, relation or class pair can be inferred, and selects the best batch for human labeling. We design two approximation algorithms for efficient solution to batch selection. Our experiments on benchmark datasets show the superior accuracy and generalization of DAAKG and validate the effectiveness of all its modules.

Trustworthy Knowledge Graph Completion Based on Multi-sourced Noisy Data

Jan 21, 2022

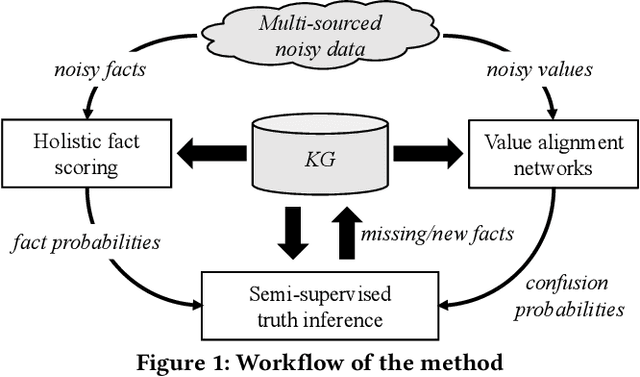

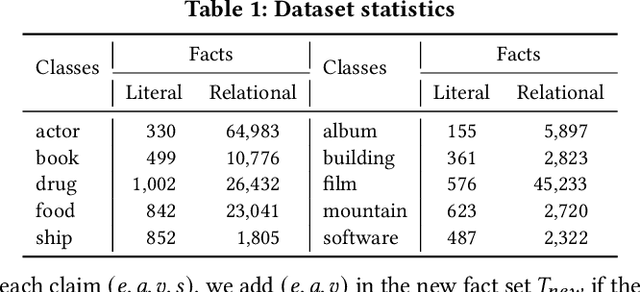

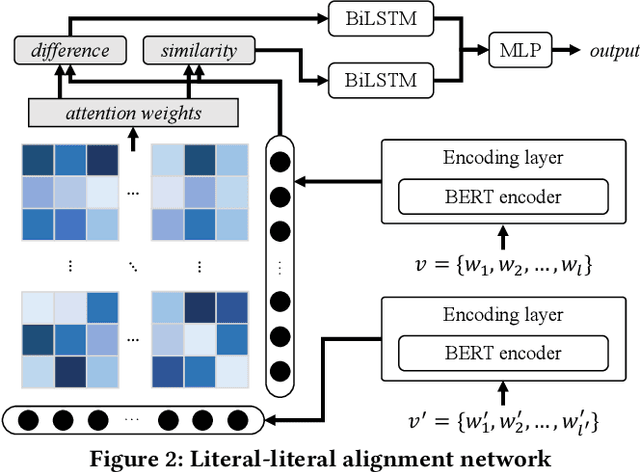

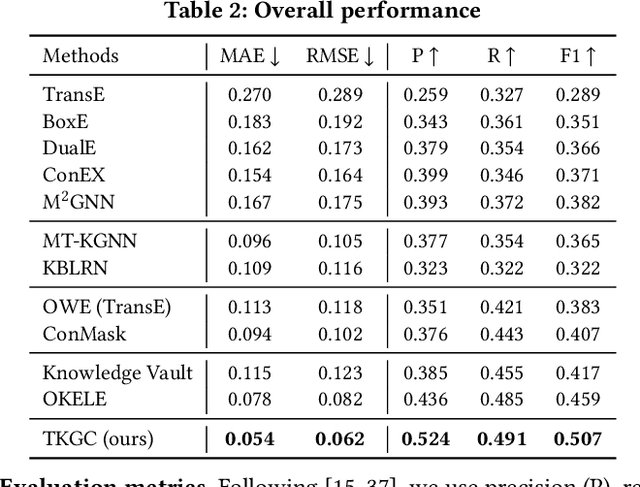

Abstract:Knowledge graphs (KGs) have become a valuable asset for many AI applications. Although some KGs contain plenty of facts, they are widely acknowledged as incomplete. To address this issue, many KG completion methods have been proposed. Among them, open KG completion methods leverage the Web to find missing facts. However, noisy data collected from diverse sources may damage the completion accuracy. In this paper, we propose a new trustworthy method that exploits facts for a KG based on multi-sourced noisy data and existing facts in the KG. Specifically, we introduce a graph neural network with a holistic scoring function to judge the plausibility of facts with various value types. We design value alignment networks to resolve the heterogeneity between values and map them to entities even outside the KG. Furthermore, we present a truth inference model that incorporates data source qualities into the fact scoring function, and design a semi-supervised learning method to infer the truths from heterogeneous values. We conduct extensive experiments to compare our method with state-of-the-art methods. The results show that our method achieves superior accuracy not only in completing missing facts but also in discovering new facts.

Metapaths guided Neighbors aggregated Network for Heterogeneous Graph Reasoning

Mar 11, 2021

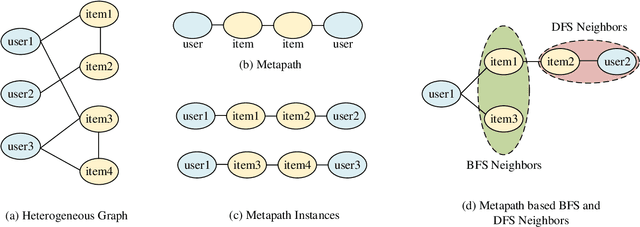

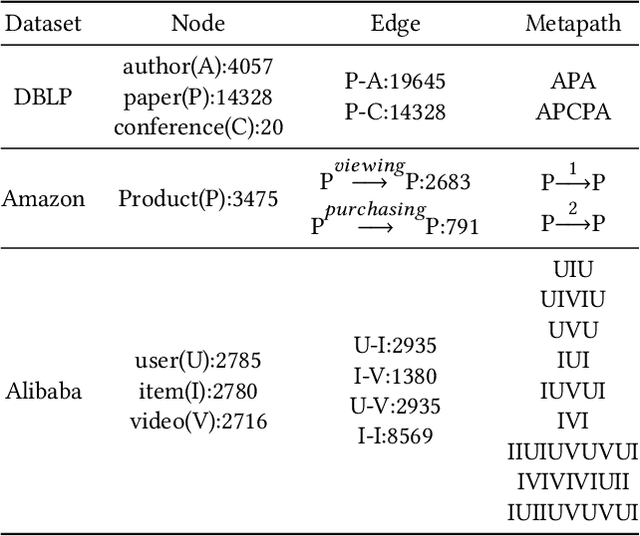

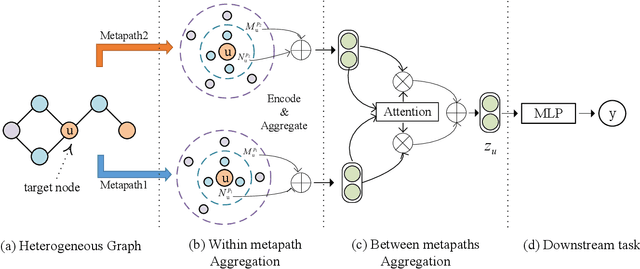

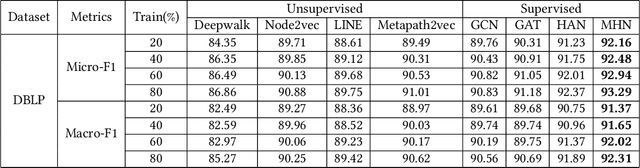

Abstract:Most real-world datasets are inherently heterogeneous graphs, which involve a diversity of node and relation types. Heterogeneous graph embedding learns the structural and semantic information of the graph and embeds it into low-dimensional node representations. Existing methods usually capture the composite relations of a heterogeneous graph by defining metapaths, each of which represents one semantic of the graph. However, these methods either ignore node attributes, or discard the local and global information of the graph, or only consider one metapath. To address these limitations, we propose a Metapaths-guided Neighbors-aggregated Heterogeneous Graph Neural Network (MHN) to improve performance. Specifically, MHN employs node base embedding to encapsulate node attributes, BFS and DFS neighbors aggregation within a metapath to capture local and global information, and metapaths aggregation to combine different semantics of the heterogeneous graph. We conduct extensive experiments for the proposed MHN on three real-world heterogeneous graph datasets, including node classification, link prediction and an online A/B test on the Alibaba mobile application. Results demonstrate that MHN performs better than other state-of-the-art baselines.

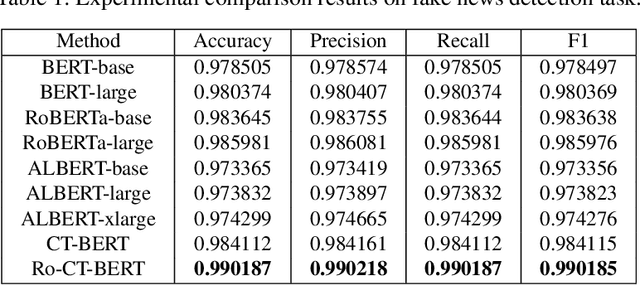

Transformer-based Language Model Fine-tuning Methods for COVID-19 Fake News Detection

Jan 18, 2021

Abstract:With the COVID-19 pandemic, related fake news is spreading widely across social media. Believing it indiscriminately can cause great trouble in people's lives. However, universal language models may perform poorly on this fake news detection task for lack of large-scale annotated data and sufficient semantic understanding of domain-specific knowledge, while models trained on the corresponding corpora are also mediocre because of insufficient learning. In this paper, we propose a novel transformer-based language model fine-tuning approach for this fake news detection task. First, the token vocabulary of each individual model is expanded to capture the actual semantics of professional phrases. Second, we adapt the heated-up softmax loss to distinguish hard samples, which are common in fake news because of the ambiguity of short text. Then, we apply adversarial training to improve the model's robustness. Last, the predicted features extracted by the universal language model RoBERTa and the domain-specific model CT-BERT are fused by a multi-layer perceptron to integrate fine-grained and high-level specific representations. Quantitative experimental results on an existing COVID-19 fake news dataset show superior performance compared to state-of-the-art methods across various evaluation metrics. Furthermore, the best weighted average F1 score reaches 99.02%.
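As a rough illustration of the temperature idea behind a heated-up softmax loss, the sketch below scales logits by a factor `alpha` before the softmax and shows how that changes the loss on a misclassified sample. It is only a generic temperature-scaled cross-entropy, not the paper's loss or training schedule; the function names, `alpha` values, and logits are hypothetical.

```python
import math


def softmax_with_temperature(logits, alpha):
    """Softmax over alpha * logits (numerically stabilized)."""
    scaled = [alpha * z for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target, alpha):
    """Negative log-probability of the target class under the scaled softmax."""
    return -math.log(softmax_with_temperature(logits, alpha)[target])


# Hypothetical two-class logits for a hard sample whose true class is 0
# but whose logits currently favor class 1.
hard_logits = [0.2, 0.8]
for alpha in (1.0, 4.0):
    print(alpha, cross_entropy(hard_logits, target=0, alpha=alpha))
```

A larger `alpha` sharpens the predicted distribution, so a sample that sits on the wrong side of the decision boundary contributes a larger loss than it does at `alpha = 1`.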

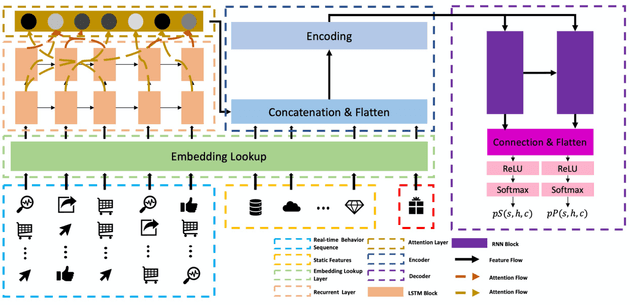

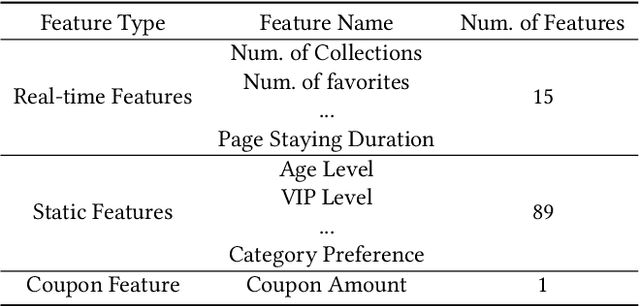

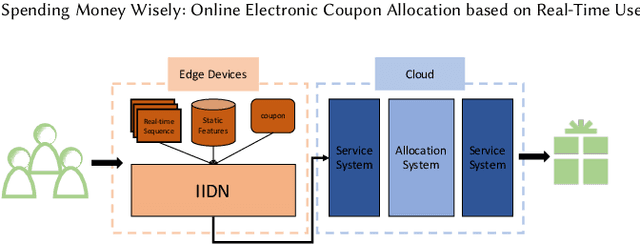

Spending Money Wisely: Online Electronic Coupon Allocation based on Real-Time User Intent Detection

Aug 23, 2020

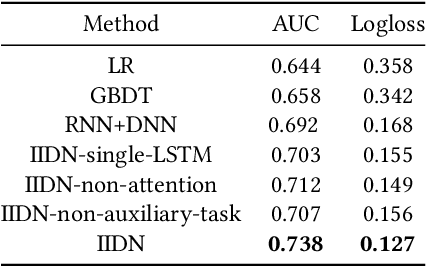

Abstract:Online electronic coupons (e-coupons) are becoming a primary tool for e-commerce platforms to attract users to place orders. E-coupons are the digital equivalent of traditional paper coupons, which provide customers with discounts or gifts. One of the fundamental related problems is how to deliver e-coupons with minimal cost while maximizing users' willingness to place an order. We call this problem the coupon allocation problem. This is a non-trivial problem since the number of regular users on a mature e-platform often reaches hundreds of millions and there are often multiple types of e-coupons to be allocated. The policy space is extremely large and the online allocation has to satisfy a budget constraint. Besides, one can never observe the responses of one user under different policies, which increases the uncertainty of the policy-making process. Previous work fails to deal with these challenges. In this paper, we decompose the coupon allocation task into two subtasks: the user intent detection task and the allocation task. Accordingly, we propose a two-stage solution: at the first stage (detection stage), we put forward a novel Instantaneous Intent Detection Network (IIDN), which takes the user-coupon features as input and predicts users' real-time intents; at the second stage (allocation stage), we model the allocation problem as a Multiple-Choice Knapsack Problem (MCKP) and provide a computationally efficient allocation method using the intents predicted at the detection stage. We conduct extensive online and offline experiments, and the results show the superiority of our proposed framework, which has brought great profits to the platform and continues to function online.
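To show what the Multiple-Choice Knapsack formulation of the allocation stage looks like, here is a small exact dynamic program: each user gets exactly one option (including a zero-cost "no coupon" option), and the total cost must stay within the budget. This is a textbook DP for tiny instances, not the paper's allocation method, which is designed for a far larger scale; `solve_mckp`, the integer costs, and the intent scores are hypothetical.

```python
def solve_mckp(options, budget):
    """Exact DP for a small Multiple-Choice Knapsack Problem.

    options[i] is a list of (cost, value) choices for user i; exactly one
    choice is picked per user. Costs are non-negative integers. Assumes every
    user has a zero-cost choice, so a feasible allocation always exists.
    Returns (best_total_value, picks) where picks[i] indexes options[i].
    """
    neg = float("-inf")
    best = [neg] * (budget + 1)   # best[b]: max value with total cost exactly b
    best[0] = 0.0
    history = []                  # per user: (choice index, previous spend) by spend
    for opts in options:
        nxt = [neg] * (budget + 1)
        pick = [None] * (budget + 1)
        for b in range(budget + 1):
            if best[b] == neg:
                continue
            for k, (cost, value) in enumerate(opts):
                nb = b + cost
                if nb <= budget and best[b] + value > nxt[nb]:
                    nxt[nb] = best[b] + value
                    pick[nb] = (k, b)
        best = nxt
        history.append(pick)
    spend = max(range(budget + 1), key=lambda b: best[b])
    total = best[spend]
    picks = []
    for pick in reversed(history):  # walk back through the recorded choices
        k, spend = pick[spend]
        picks.append(k)
    picks.reverse()
    return total, picks


# Hypothetical predicted intents: (coupon cost, predicted order probability).
users = [
    [(0, 0.10), (2, 0.30), (5, 0.45)],
    [(0, 0.05), (2, 0.10), (5, 0.50)],
    [(0, 0.20), (2, 0.25), (5, 0.30)],
]
total, picks = solve_mckp(users, budget=7)
print(total, picks)
```

With these toy numbers the budget goes to the users whose predicted intent rises most per unit of coupon cost, and the third user, whose intent barely responds to a coupon, receives none.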
