Weijie Fu

Worst-case Design for RIS-aided Over-the-air Computation with Imperfect CSI

Jun 14, 2022

Abstract: Over-the-air computation (AirComp) enables fast wireless data aggregation at the receiver through concurrent transmission by sensors in Internet-of-Things (IoT) applications. To further improve the performance of AirComp under unfavorable propagation channel conditions, we consider the problem of computation distortion minimization in a reconfigurable intelligent surface (RIS)-aided AirComp system. In particular, we take into account an additive bounded uncertainty of the channel state information (CSI) and the total power constraint, and jointly optimize the transceiver (Tx-Rx) and the RIS phase design from the perspective of worst-case robustness by minimizing the mean squared error (MSE) of the computation. To solve this intractable nonconvex problem, we develop an efficient alternating algorithm in which the solutions to both the robust sub-problem and the joint design of Tx-Rx and RIS are obtained in closed form. Simulation results demonstrate the effectiveness of the proposed method.

ExAD: An Ensemble Approach for Explanation-based Adversarial Detection

Mar 22, 2021

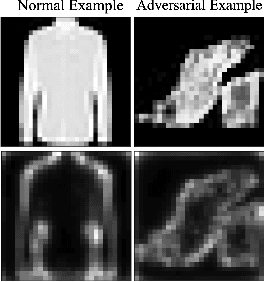

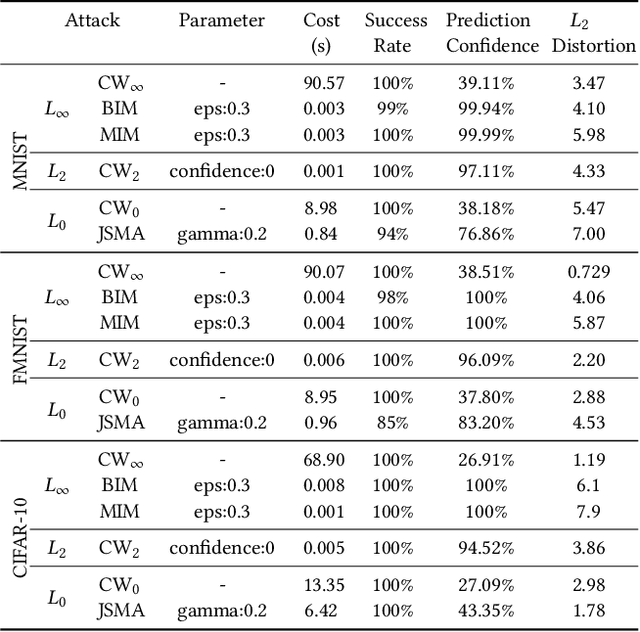

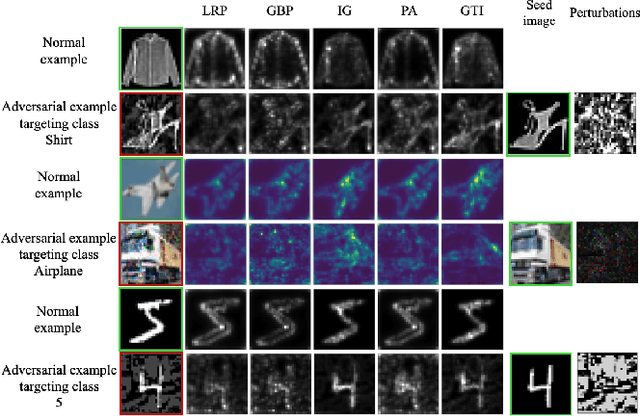

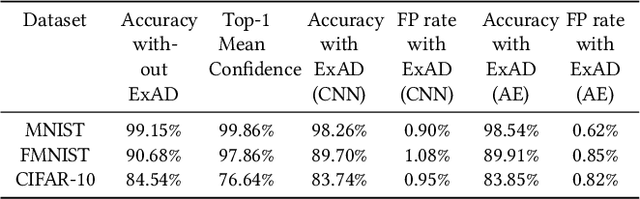

Abstract: Recent research has shown Deep Neural Networks (DNNs) to be vulnerable to adversarial examples that induce desired misclassifications in the models. Such risks impede the application of machine learning in security-sensitive domains. Several defense methods have been proposed against adversarial attacks to detect adversarial examples at test time or to make machine learning models more robust. However, while existing methods are quite effective under a blackbox threat model, where the attacker is not aware of the defense, they are relatively ineffective under a whitebox threat model, where the attacker has full knowledge of the defense. In this paper, we propose ExAD, a framework to detect adversarial examples using an ensemble of explanation techniques. Each explanation technique in ExAD produces an explanation map identifying the relevance of input variables for the model's classification. For every class in a dataset, the system includes a detector network, corresponding to each explanation technique, which is trained to distinguish between normal and abnormal explanation maps. At test time, if the explanation map of an input is detected as abnormal by any detector model of the classified class, then we consider the input to be an adversarial example. We evaluate our approach using six state-of-the-art adversarial attacks on three image datasets. Our extensive evaluation shows that our mechanism can effectively detect these attacks under a blackbox threat model with limited false positives. Furthermore, we find that our approach achieves promising results in limiting the success rate of whitebox attacks.

A Survey on Large-scale Machine Learning

Aug 10, 2020

Abstract: Machine learning can provide deep insights into data, allowing machines to make high-quality predictions, and has been widely used in real-world applications such as text mining, visual classification, and recommender systems. However, most sophisticated machine learning approaches suffer from huge time costs when operating on large-scale data. This issue calls for Large-scale Machine Learning (LML), which aims to learn patterns from big data efficiently while retaining comparable performance. In this paper, we offer a systematic survey of existing LML methods to provide a blueprint for the future development of this area. We first divide these LML methods according to the ways of improving the scalability: 1) model simplification on computational complexities, 2) optimization approximation on computational efficiency, and 3) computation parallelism on computational capabilities. Then we categorize the methods in each perspective according to their targeted scenarios and introduce representative methods in line with intrinsic strategies. Lastly, we analyze their limitations and discuss potential directions as well as open issues that are promising to address in the future.
