Walter Karlen

Artifact detection and localization in single-channel mobile EEG for sleep research using deep learning and attention mechanisms

Apr 11, 2025

Abstract:Artifacts in the electroencephalogram (EEG) degrade signal quality and impact the analysis of brain activity. Current methods for detecting artifacts in sleep EEG rely on simple threshold-based algorithms that require manual intervention, which is time-consuming and impractical due to the vast volume of data that novel mobile recording systems generate. We propose a convolutional neural network (CNN) model incorporating a convolutional block attention module (CNN-CBAM) to detect and identify the location of artifacts in the sleep EEG with attention maps. We benchmarked this model against six other machine learning and signal processing approaches. We trained and tuned all models on 72 manually annotated EEG recordings obtained during home-based monitoring from 18 healthy participants with a mean (SD) age of 68.05 y ($\pm$5.02). We tested them on 26 separate recordings from 6 healthy participants with a mean (SD) age of 68.33 y ($\pm$4.08), which contained artifacts in 4% of epochs. CNN-CBAM achieved the highest area under the receiver operating characteristic curve (0.88), sensitivity (0.81), and specificity (0.86) when compared to the other approaches. The attention maps from CNN-CBAM localized artifacts within the epoch with a sensitivity of 0.71 and specificity of 0.67. This work demonstrates the feasibility of automating the detection and localization of artifacts in wearable sleep EEG.
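The sensitivity and specificity figures quoted in this abstract are per-epoch classification metrics. As a minimal sketch of how such values are typically computed, assuming binary epoch labels where 1 marks an artifact (the function name and the toy labels are hypothetical and not taken from the study):

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary epoch labels (1 = artifact)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Guard against empty classes so the sketch never divides by zero.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy labels invented for illustration; they are not data from the study.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(sens, spec)
```

With artifacts in only a small share of epochs, as in the test set described above, both metrics are worth reporting together because accuracy alone would be dominated by the clean epochs.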

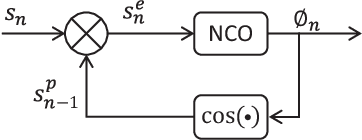

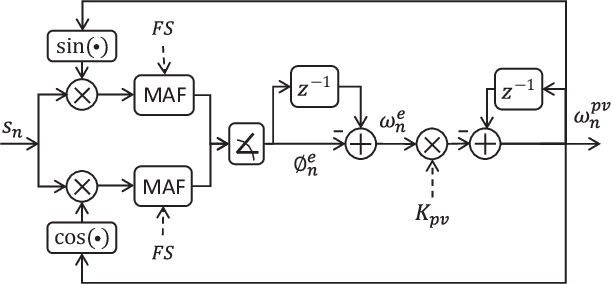

Benchmarking real-time algorithms for in-phase auditory stimulation of low amplitude slow waves with wearable EEG devices during sleep

Mar 04, 2022

Abstract:Auditory stimulation of EEG slow waves (SW) during non-rapid eye movement (NREM) sleep has been shown to improve cognitive function when it is delivered at the up-phase of SW. SW enhancement is particularly desirable in subjects with low-amplitude SW such as older adults or patients suffering from neurodegeneration such as Parkinson disease (PD). However, existing algorithms to estimate the up-phase suffer from poor phase accuracy at low EEG amplitudes and when SW frequencies are not constant. We introduce two novel algorithms for real-time EEG phase estimation on autonomous wearable devices. The algorithms were based on a phase-locked loop (PLL) and, for the first time, a phase vocoder (PV). We compared these phase tracking algorithms with a simple amplitude threshold approach. The optimized algorithms were benchmarked for phase accuracy, the capacity to estimate phase at SW amplitudes between 20 and 60 µV, and SW frequencies above 1 Hz on 324 recordings from healthy older adults and PD patients. Furthermore, the algorithms were implemented on a wearable device, and their computational efficiency and performance were evaluated on simulated sleep EEG, as well as prospectively during a recording with a PD patient. All three algorithms delivered more than 70% of the stimulation triggers during the SW up-phase. The PV showed the highest capacity for targeting low-amplitude SW and SW with frequencies above 1 Hz. The testing on real-time hardware revealed that both PV and PLL have marginal impact on microcontroller load, while the efficiency of the PV was 4% lower than the PLL. Active auditory stimulation did not influence the phase tracking. This work demonstrated that phase-accurate auditory stimulation can be delivered during home-based sleep interventions with a wearable device also in populations with low-amplitude SW.
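The figure of "more than 70% of the stimulation triggers during the SW up-phase" is a proportion over trigger phases. The sketch below shows one way such a proportion could be computed. The phase convention (0° at the positive peak) and the up-phase window of -90° to 90° are assumptions made here for illustration; the abstract does not define them, and the function names are hypothetical.

```python
def wrap_degrees(phase):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (phase + 180.0) % 360.0 - 180.0


def fraction_in_up_phase(trigger_phases_deg, lo=-90.0, hi=90.0):
    """Share of triggers whose wrapped phase lies in [lo, hi].

    lo and hi are an ASSUMED up-phase window, not a definition from the paper.
    """
    wrapped = [wrap_degrees(p) for p in trigger_phases_deg]
    hits = sum(1 for p in wrapped if lo <= p <= hi)
    return hits / len(wrapped)


# Toy trigger phases in degrees, invented for illustration.
phases = [10.0, 350.0, 80.0, 180.0, -45.0, 300.0, 95.0, 0.0, 45.0, 200.0]
up_fraction = fraction_in_up_phase(phases)
print(up_fraction)
```

Wrapping before the comparison matters because phase estimators often report angles in 0° to 360°, so a trigger at 350° is in fact 10° before the peak.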

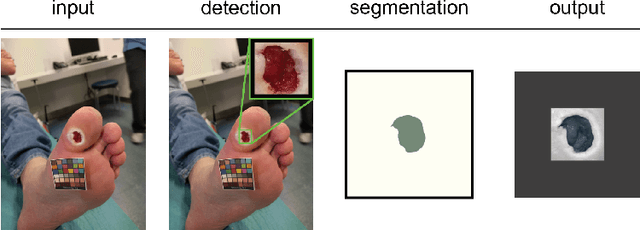

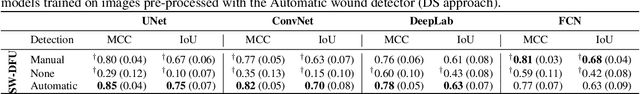

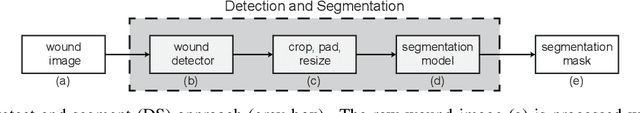

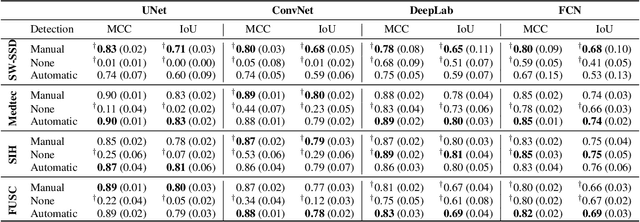

Detect-and-Segment: a Deep Learning Approach to Automate Wound Image Segmentation

Nov 02, 2021

Abstract:Chronic wounds significantly impact quality of life. If not properly managed, they can severely deteriorate. Image-based wound analysis could aid in objectively assessing the wound status by quantifying important features that are related to healing. However, the high heterogeneity of the wound types, image background composition, and capturing conditions challenge the robust segmentation of wound images. We present Detect-and-Segment (DS), a deep learning approach to produce wound segmentation maps with high generalization capabilities. In our approach, dedicated deep neural networks detected the wound position, isolated the wound from the uninformative background, and computed the wound segmentation map. We evaluated this approach using one data set with images of diabetic foot ulcers. For further testing, 4 supplemental independent data sets with a larger variety of wound types from different body locations were used. The Matthews correlation coefficient (MCC) improved from 0.29 when computing the segmentation on the full image to 0.85 when combining detection and segmentation in the same approach. When tested on the wound images drawn from the supplemental data sets, the DS approach increased the mean MCC from 0.17 to 0.85. Furthermore, the DS approach enabled the training of segmentation models with up to 90% less training data while maintaining the segmentation performance.
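The MCC used above summarizes all four cells of the pixel-wise confusion matrix, which makes it informative when the wound covers only a small part of the image. A minimal sketch of the standard formula, assuming flattened binary masks where 1 marks a wound pixel (the toy masks and the zero-denominator fallback are choices of this sketch, not details from the paper):

```python
import math


def matthews_corrcoef(y_true, y_pred):
    """Matthews correlation coefficient for two flattened binary masks."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if t and p:
            tp += 1
        elif not t and not p:
            tn += 1
        elif p:
            fp += 1
        else:
            fn += 1
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Returning 0.0 for a zero denominator is a common convention, assumed here.
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Toy flattened masks, invented for illustration.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]
mcc = matthews_corrcoef(truth, pred)
print(round(mcc, 4))
```

A value of 1 indicates perfect agreement, 0 indicates no better than chance, and -1 indicates complete disagreement.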

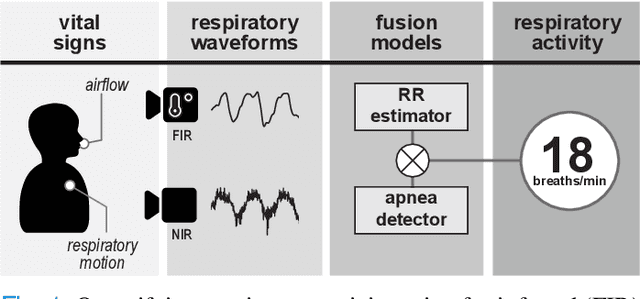

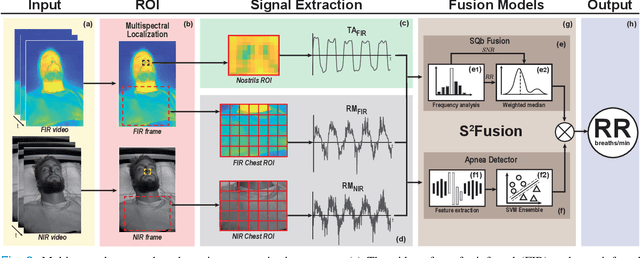

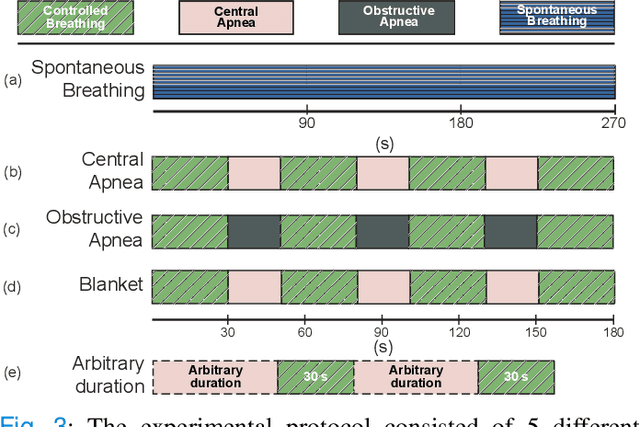

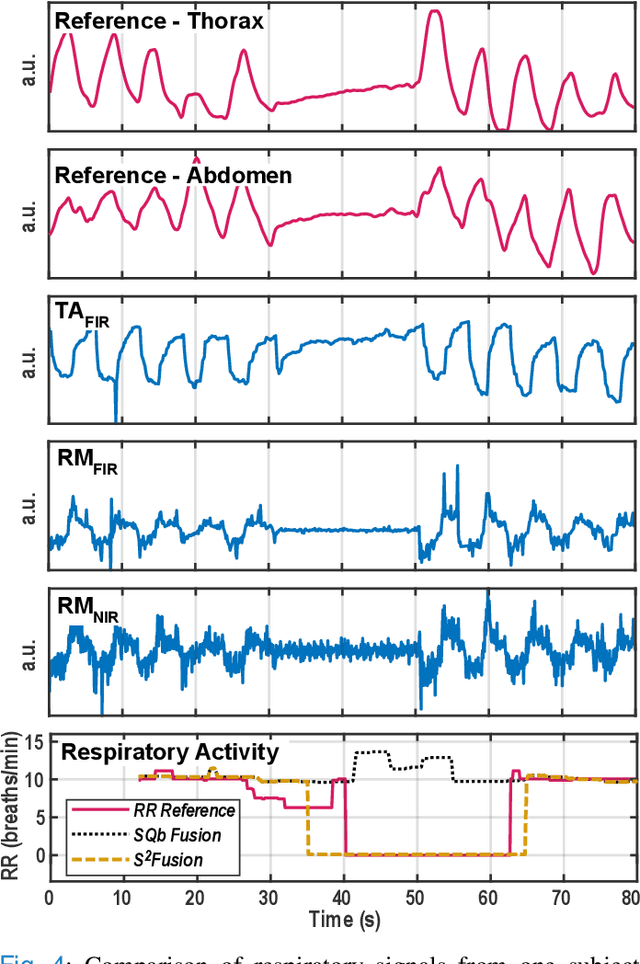

Multispectral Video Fusion for Non-contact Monitoring of Respiratory Rate and Apnea

Apr 21, 2020

Abstract:Continuous monitoring of respiratory activity is desirable in many clinical applications to detect respiratory events. Non-contact monitoring of respiration can be achieved with near- and far-infrared spectrum cameras. However, current technologies are not sufficiently robust to be used in clinical applications. For example, they fail to estimate an accurate respiratory rate (RR) during apnea. We present a novel algorithm based on multispectral data fusion that aims at estimating RR also during apnea. The algorithm independently addresses the RR estimation and apnea detection tasks. Respiratory information is extracted from multiple sources and fed into an RR estimator and an apnea detector whose results are fused into a final respiratory activity estimation. We evaluated the system retrospectively using data from 30 healthy adults who performed diverse controlled breathing tasks while lying supine in a dark room and reproduced central and obstructive apneic events. Combining multiple respiratory information from multispectral cameras reduced the root mean square error (RMSE) of the RR estimation from up to 4.64 (monospectral data) down to 1.60 breaths/min. The median F1 scores for classifying obstructive (0.75 to 0.86) and central apnea (0.75 to 0.93) also improved. Furthermore, the independent consideration of apnea detection led to a more robust system (RMSE of 4.44 vs. 7.96 breaths/min). Our findings may represent a step towards the use of cameras for vital sign monitoring in medical applications.
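The two evaluation measures in this abstract, RMSE for the RR estimate and F1 for apnea classification, can be sketched as follows. This is a generic illustration under the assumption of paired estimate/reference values and binary apnea labels; the variable names and toy numbers are hypothetical and not drawn from the study.

```python
import math


def rmse(estimates, references):
    """Root mean square error between paired estimates and references."""
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))


def f1_score(y_true, y_pred):
    """F1 score for binary labels (1 = apnea window)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Toy values in breaths/min and toy apnea labels, invented for illustration.
rr_est = [12.0, 15.0, 18.0, 10.0]
rr_ref = [12.0, 14.0, 20.0, 10.0]
rr_rmse = rmse(rr_est, rr_ref)

apnea_true = [1, 1, 0, 0, 1]
apnea_pred = [1, 0, 0, 1, 1]
apnea_f1 = f1_score(apnea_true, apnea_pred)
print(round(rr_rmse, 3), round(apnea_f1, 3))
```

RMSE keeps the unit of the signal (breaths/min), which is why the abstract can report it directly alongside the rate itself.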

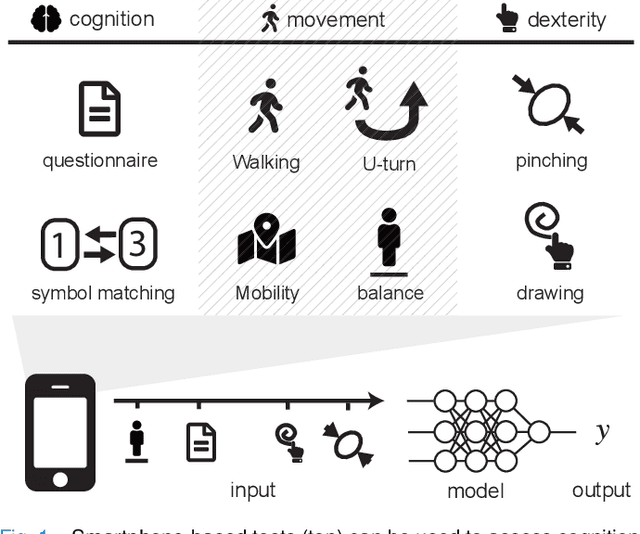

A Deep Learning Approach to Diagnosing Multiple Sclerosis from Smartphone Data

Jan 02, 2020

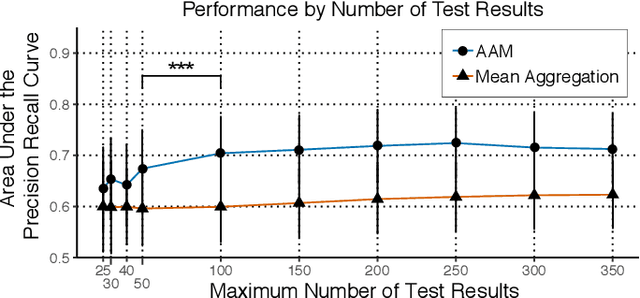

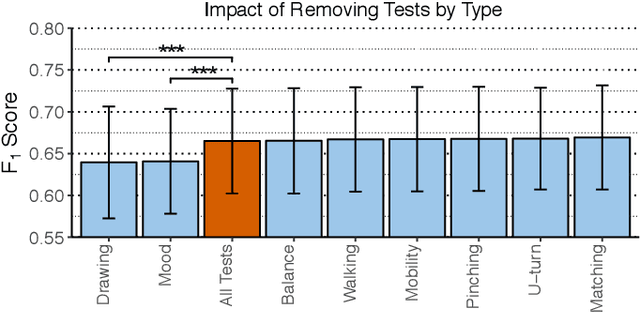

Abstract:Multiple sclerosis (MS) affects the central nervous system with a wide range of symptoms. MS can, for example, cause pain, changes in mood and fatigue, and may impair a person's movement, speech and visual functions. Diagnosis of MS typically involves a combination of complex clinical assessments and tests to rule out other diseases with similar symptoms. New technologies, such as smartphone monitoring in free-living conditions, could potentially aid in objectively assessing the symptoms of MS by quantifying symptom presence and intensity over long periods of time. Here, we present a deep-learning approach to diagnosing MS from smartphone-derived digital biomarkers that uses a novel combination of a multilayer perceptron with neural soft attention to improve learning of patterns in long-term smartphone monitoring data. Using data from a cohort of 774 participants, we demonstrate that our deep-learning models are able to distinguish between people with and without MS with an area under the receiver operating characteristic curve of 0.88 (95% CI: 0.70, 0.88). Our experimental results indicate that digital biomarkers derived from smartphone data could in the future be used as additional diagnostic criteria for MS.

CXPlain: Causal Explanations for Model Interpretation under Uncertainty

Oct 27, 2019

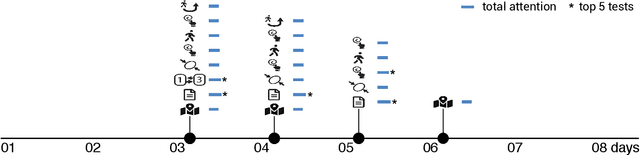

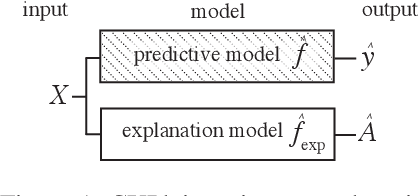

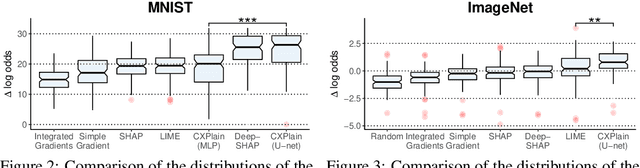

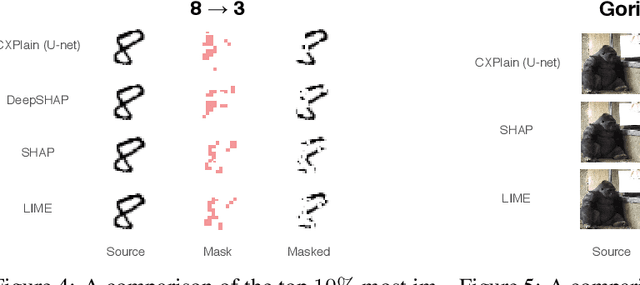

Abstract:Feature importance estimates that inform users about the degree to which given inputs influence the output of a predictive model are crucial for understanding, validating, and interpreting machine-learning models. However, providing fast and accurate estimates of feature importance for high-dimensional data, and quantifying the uncertainty of such estimates remain open challenges. Here, we frame the task of providing explanations for the decisions of machine-learning models as a causal learning task, and train causal explanation (CXPlain) models that learn to estimate to what degree certain inputs cause outputs in another machine-learning model. CXPlain can, once trained, be used to explain the target model in little time, and enables the quantification of the uncertainty associated with its feature importance estimates via bootstrap ensembling. We present experiments that demonstrate that CXPlain is significantly more accurate and faster than existing model-agnostic methods for estimating feature importance. In addition, we confirm that the uncertainty estimates provided by CXPlain ensembles are strongly correlated with their ability to accurately estimate feature importance on held-out data.

Forecasting intracranial hypertension using multi-scale waveform metrics

Feb 25, 2019

Abstract:Objective: Intracranial hypertension is an important risk factor of secondary brain damage after traumatic brain injury. Hypertensive episodes are often diagnosed reactively and time is lost before counteractive measures are taken. A pro-active approach that predicts critical events ahead of time could be beneficial for the patient. Methods: We developed a prediction framework that forecasts onsets of intracranial hypertension in the next 8 hours. Its main innovation is the joint use of cerebral auto-regulation indices, spectral energies and morphological pulse metrics to describe the neurological state. One-minute base windows were compressed by computing signal metrics, and then stored in a multi-scale history, from which physiological features were derived. Results: Our model predicted intracranial hypertension up to 8 hours in advance with alarm recall rates of 90% at a precision of 36% in the MIMIC-II waveform database, improving upon two baselines from the literature. We found that features derived from high-frequency waveforms substantially improved the prediction performance over simple statistical summaries, in which each of the three feature categories contributed to the performance gain. The inclusion of long-term history up to 8 hours was especially important. Conclusion: Our approach showed promising performance and enabled us to gain insights about the critical components of prediction models for intracranial hypertension. Significance: Our results highlight the importance of information contained in high-frequency waveforms in the neurological intensive care unit. They could motivate future studies on pre-hypertensive patterns and the design of new alarm algorithms for critical events in the injured brain.

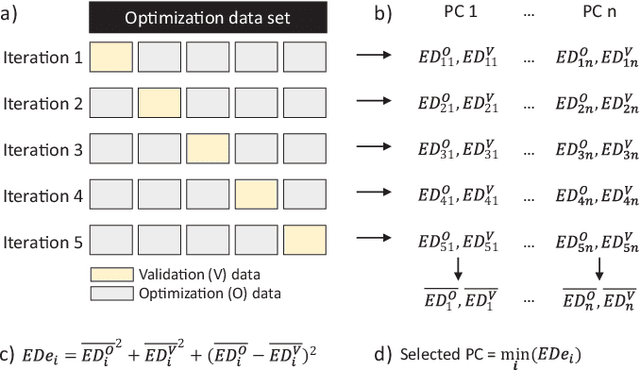

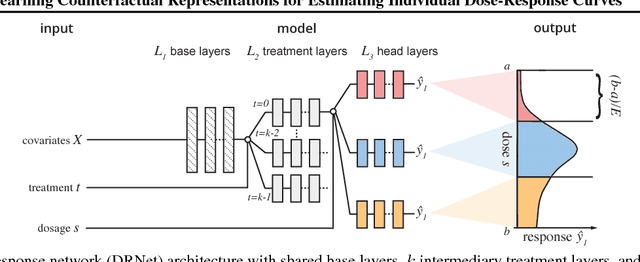

Learning Counterfactual Representations for Estimating Individual Dose-Response Curves

Feb 03, 2019

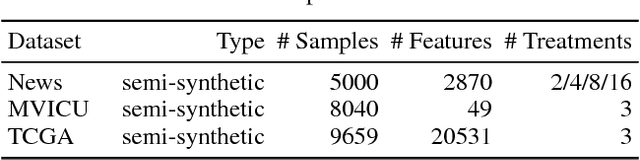

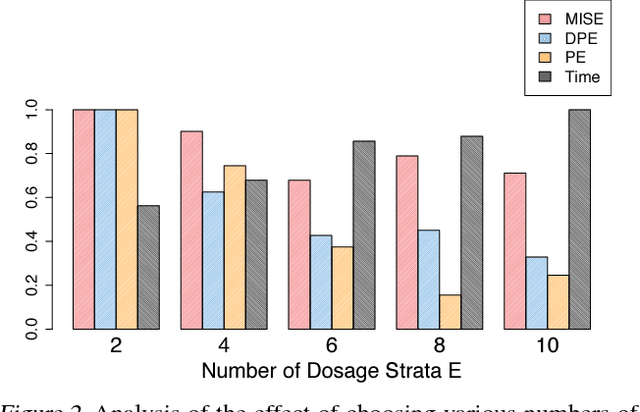

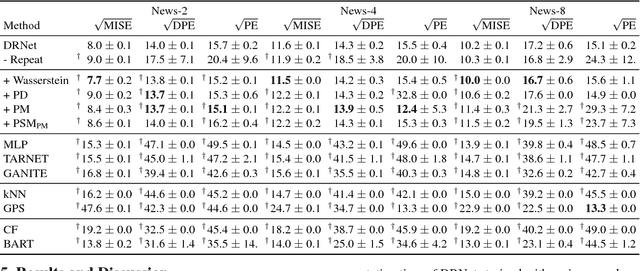

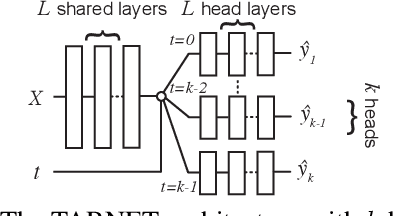

Abstract:Estimating what would be an individual's potential response to varying levels of exposure to a treatment is of high practical relevance for several important fields, such as healthcare, economics and public policy. However, existing methods for learning to estimate such counterfactual outcomes from observational data are either focused on estimating average dose-response curves, limited to settings in which treatments do not have an associated dosage parameter, or both. Here, we present a novel machine-learning framework towards learning counterfactual representations for estimating individual dose-response curves for any number of treatment options with continuous dosage parameters. Building on the established potential outcomes framework, we introduce new performance metrics, model selection criteria, model architectures, and open benchmarks for estimating individual dose-response curves. Our experiments show that the methods developed in this work set a new state-of-the-art in estimating individual dose-response curves.

Perfect Match: A Simple Method for Learning Representations For Counterfactual Inference With Neural Networks

Nov 01, 2018

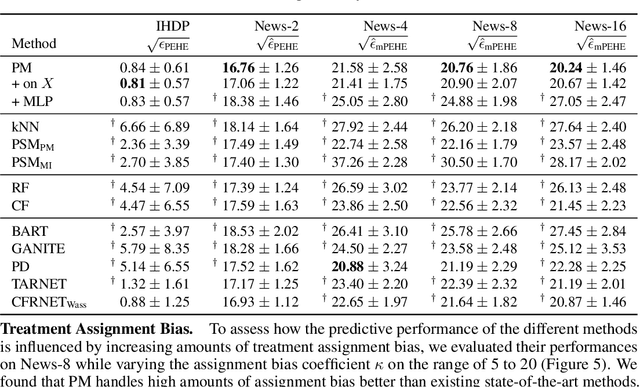

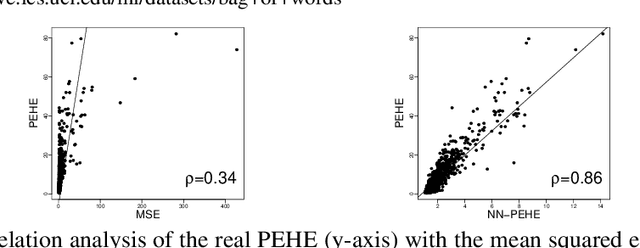

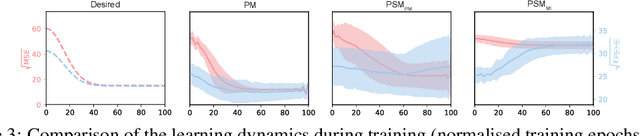

Abstract:Learning representations for counterfactual inference from observational data is of high practical relevance for many domains, such as healthcare, public policy and economics. Counterfactual inference enables one to answer "What if...?" questions, such as "What would be the outcome if we gave this patient treatment $t_1$?". However, current methods for training neural networks for counterfactual inference on observational data are either overly complex, limited to settings with only two available treatment options, or both. Here, we present Perfect Match (PM), a method for training neural networks for counterfactual inference that is easy to implement, compatible with any architecture, does not add computational complexity or hyperparameters, and extends to any number of treatments. PM is based on the idea of augmenting samples within a minibatch with their propensity-matched nearest neighbours. Our experiments demonstrate that PM outperforms a number of more complex state-of-the-art methods in inferring counterfactual outcomes across several real-world and semi-synthetic datasets.
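The core idea stated above, augmenting each minibatch sample with its propensity-matched nearest neighbours, can be sketched in a few lines. This is an illustrative reading of that sentence and not the authors' implementation: it assumes one scalar balancing score per sample (a simplification), a brute-force neighbour search, and hypothetical names throughout.

```python
def augment_minibatch(batch_idx, treatments, scores, num_treatments):
    """Return batch indices plus, for each sample, its nearest neighbour
    (by scalar score) from every other treatment group.

    The scalar score and the brute-force search are ASSUMPTIONS of this sketch.
    """
    augmented = []
    for i in batch_idx:
        augmented.append(i)
        for t in range(num_treatments):
            if t == treatments[i]:
                continue  # the sample already represents its own treatment
            candidates = [j for j in range(len(treatments)) if treatments[j] == t]
            if not candidates:
                continue  # no sample with this treatment to match against
            match = min(candidates, key=lambda j: abs(scores[j] - scores[i]))
            augmented.append(match)
    return augmented


# Toy data set: treatment assignment and a score per sample (invented values).
treatments = [0, 0, 1, 1, 2, 2]
scores = [0.1, 0.8, 0.15, 0.9, 0.5, 0.75]
augmented = augment_minibatch([0, 1], treatments, scores, num_treatments=3)
print(augmented)
```

Because the matching happens when the minibatch is assembled, the downstream network and loss stay unchanged, which is consistent with the abstract's claim that the method is compatible with any architecture.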

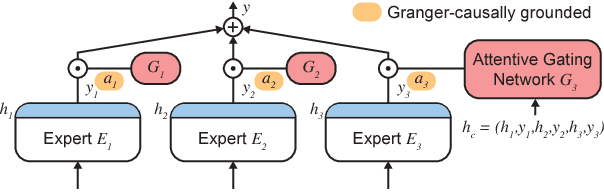

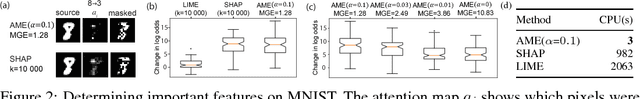

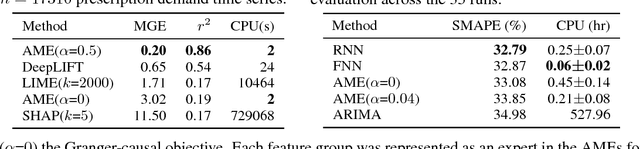

Granger-causal Attentive Mixtures of Experts: Learning Important Features with Neural Networks

Oct 01, 2018

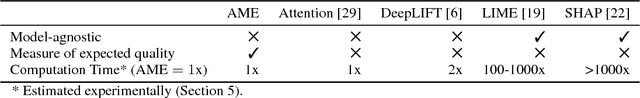

Abstract:Knowledge of the importance of input features towards decisions made by machine-learning models is essential to increase our understanding of both the models and the underlying data. Here, we present a new approach to estimating feature importance with neural networks based on the idea of distributing the features of interest among experts in an attentive mixture of experts (AME). AMEs use attentive gating networks trained with a Granger-causal objective to learn to jointly produce accurate predictions as well as estimates of feature importance in a single model. Our experiments on an established benchmark and two real-world datasets show (i) that the feature importance estimates provided by AMEs compare favourably to those provided by state-of-the-art methods, (ii) that AMEs are significantly faster than existing methods, and (iii) that the associations discovered by AMEs are consistent with those reported by domain experts.
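A Granger-causal notion of importance treats a feature as important to the extent that withholding it increases the prediction error. The sketch below normalizes those error increases into importance weights. It is one plausible reading of the idea and not necessarily the objective used to train AMEs; the clipping of negative values and the uniform fallback are assumptions of this sketch, and all names are hypothetical.

```python
def granger_causal_importance(error_all, errors_without):
    """Normalize per-feature error increases into importance weights.

    error_all: prediction error with all features available.
    errors_without[i]: prediction error when feature i is withheld.
    """
    # Clip at zero so that a feature whose removal helps gets no credit (assumed).
    deltas = [max(e_wo - error_all, 0.0) for e_wo in errors_without]
    total = sum(deltas)
    if total == 0.0:
        # No feature reduces the error: fall back to uniform weights (assumed).
        return [1.0 / len(deltas)] * len(deltas)
    return [d / total for d in deltas]


# Toy errors, invented for illustration.
weights = granger_causal_importance(1.0, [4.0, 2.0, 1.0, 0.5])
print(weights)
```

In this toy case the first feature receives three quarters of the total importance because withholding it raises the error three times as much as withholding the second.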
