Gaetano Scebba

Detect-and-Segment: a Deep Learning Approach to Automate Wound Image Segmentation

Nov 02, 2021

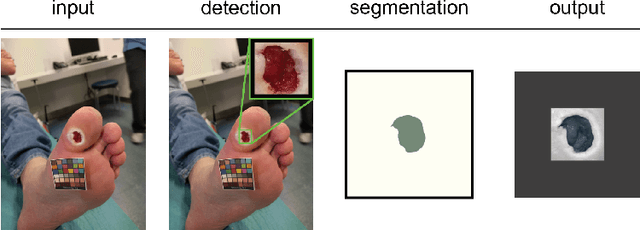

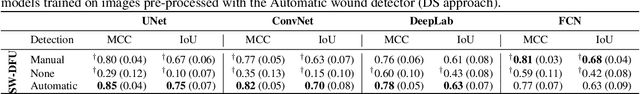

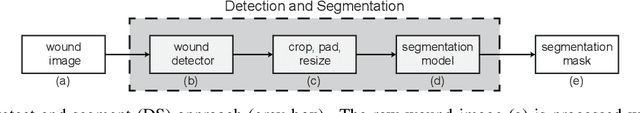

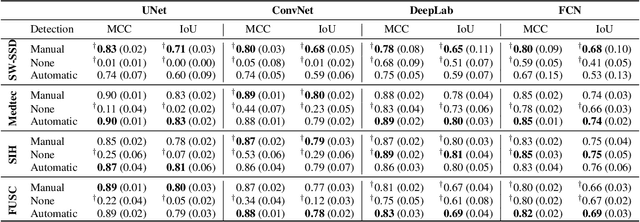

Abstract: Chronic wounds significantly impact quality of life. If not properly managed, they can severely deteriorate. Image-based wound analysis could aid in objectively assessing the wound status by quantifying important features that are related to healing. However, the high heterogeneity of the wound types, image background composition, and capturing conditions challenges the robust segmentation of wound images. We present Detect-and-Segment (DS), a deep learning approach to produce wound segmentation maps with high generalization capabilities. In our approach, dedicated deep neural networks detected the wound position, isolated the wound from the uninformative background, and computed the wound segmentation map. We evaluated this approach using one data set with images of diabetic foot ulcers. For further testing, 4 supplemental independent data sets with a larger variety of wound types from different body locations were used. The Matthews correlation coefficient (MCC) improved from 0.29 when computing the segmentation on the full image to 0.85 when combining detection and segmentation in the same approach. When tested on the wound images drawn from the supplemental data sets, the DS approach increased the mean MCC from 0.17 to 0.85. Furthermore, the DS approach enabled the training of segmentation models with up to 90% less training data while maintaining the segmentation performance.
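For readers unfamiliar with the metric reported above, the following is a minimal sketch of how the Matthews correlation coefficient can be computed for a predicted and a reference binary segmentation mask. It is not the authors' evaluation code: the function name `mcc` and the flat 0/1 list representation of the masks are assumptions made for illustration.

```python
import math


def mcc(pred, truth):
    """Matthews correlation coefficient for two flat binary masks (values 0 or 1).

    Hypothetical helper for illustration; masks are assumed to be flattened
    to equal-length sequences of 0 (background) and 1 (wound).
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")

    # Count the four confusion-matrix cells pixel by pixel.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)

    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, return 0 when any marginal count is zero.
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


if __name__ == "__main__":
    reference = [1, 0, 0, 0]
    predicted = [1, 1, 0, 0]
    print(round(mcc(predicted, reference), 3))
```

MCC ranges from -1 (complete disagreement) to 1 (perfect agreement) and accounts for all four confusion-matrix cells, which makes it informative when the wound occupies only a small fraction of the image.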

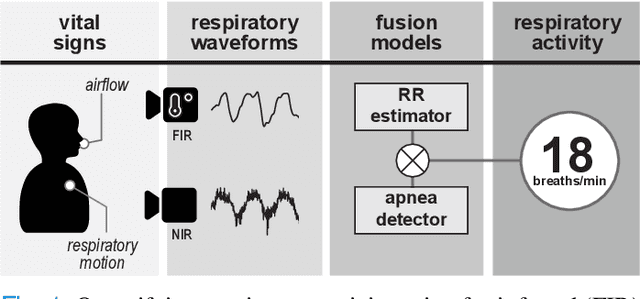

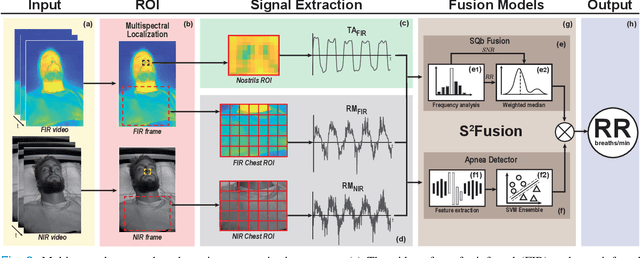

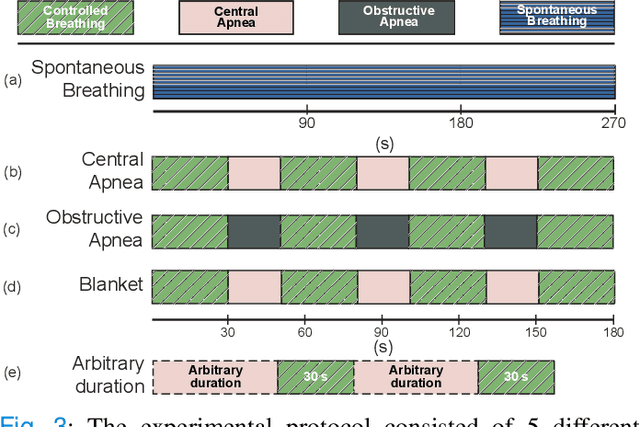

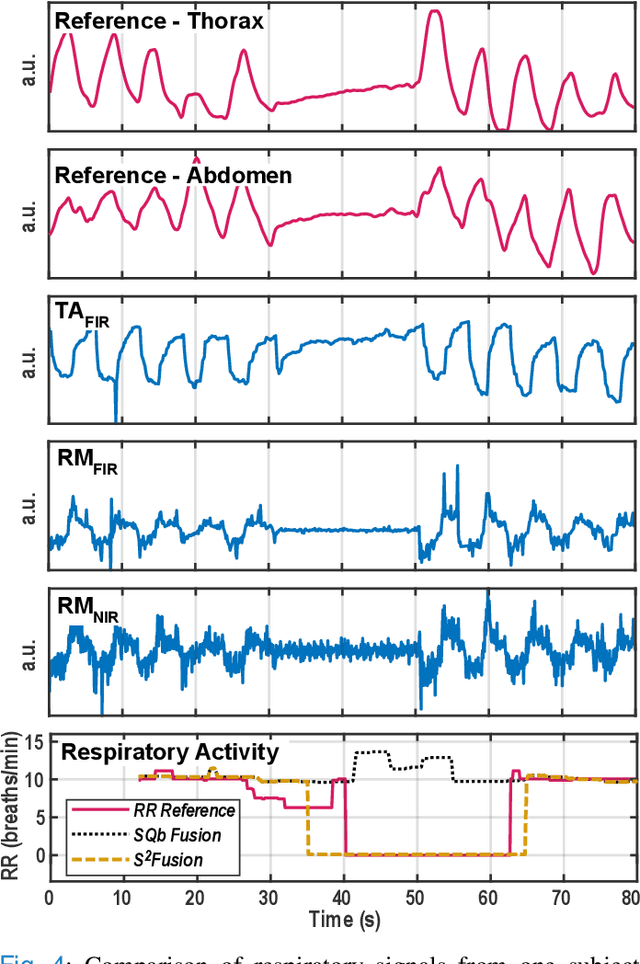

Multispectral Video Fusion for Non-contact Monitoring of Respiratory Rate and Apnea

Apr 21, 2020

Abstract: Continuous monitoring of respiratory activity is desirable in many clinical applications to detect respiratory events. Non-contact monitoring of respiration can be achieved with near- and far-infrared spectrum cameras. However, current technologies are not sufficiently robust to be used in clinical applications. For example, they fail to estimate an accurate respiratory rate (RR) during apnea. We present a novel algorithm based on multispectral data fusion that aims at estimating RR also during apnea. The algorithm independently addresses the RR estimation and apnea detection tasks. Respiratory information is extracted from multiple sources and fed into an RR estimator and an apnea detector whose results are fused into a final respiratory activity estimation. We evaluated the system retrospectively using data from 30 healthy adults who performed diverse controlled breathing tasks while lying supine in a dark room and reproduced central and obstructive apneic events. Combining multiple sources of respiratory information from multispectral cameras reduced the root mean square error (RMSE) of the RR estimation from up to 4.64 breaths/min with monospectral data to 1.60 breaths/min. The median F1 scores for classifying obstructive (0.75 to 0.86) and central apnea (0.75 to 0.93) also improved. Furthermore, the independent consideration of apnea detection led to a more robust system (RMSE of 4.44 vs. 7.96 breaths/min). Our findings may represent a step towards the use of cameras for vital sign monitoring in medical applications.
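The idea of handling RR estimation and apnea detection separately and then fusing the two outputs can be illustrated with a small sketch. The abstract does not specify the fusion rule, so the rule used here (report 0 breaths/min for any window flagged as apnea) is an assumption, as are the function names `rmse` and `fuse` and the toy numbers.

```python
import math


def rmse(estimates, references):
    """Root mean square error between two equal-length sequences."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))


def fuse(rr_estimates, apnea_flags):
    """Hypothetical fusion rule: override the RR estimate with 0 during apnea.

    rr_estimates: per-window respiratory rate in breaths/min.
    apnea_flags:  per-window booleans from a separate apnea detector.
    """
    return [0.0 if apnea else rr for rr, apnea in zip(rr_estimates, apnea_flags)]


if __name__ == "__main__":
    # Toy data: the last two windows are apneic, so the true rate is 0.
    reference = [12.0, 12.0, 0.0, 0.0]
    rr_only = [12.0, 13.0, 11.0, 10.0]      # estimator keeps reporting a rate
    apnea = [False, False, True, True]      # detector flags the apneic windows

    print(rmse(rr_only, reference))               # RR estimator alone
    print(rmse(fuse(rr_only, apnea), reference))  # after fusion
```

In this toy example the estimator alone keeps reporting a plausible rate during apnea, which inflates the error; a separate detector that overrides those windows removes that source of error.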

Beat by Beat: Classifying Cardiac Arrhythmias with Recurrent Neural Networks

Oct 24, 2017

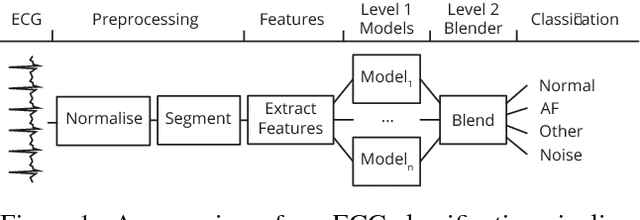

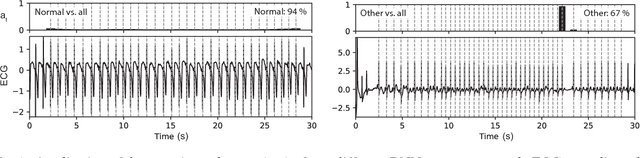

Abstract: With tens of thousands of electrocardiogram (ECG) records processed by mobile cardiac event recorders every day, heart rhythm classification algorithms are an important tool for the continuous monitoring of patients at risk. We utilise an annotated dataset of 12,186 single-lead ECG recordings to build a diverse ensemble of recurrent neural networks (RNNs) that is able to distinguish between normal sinus rhythms, atrial fibrillation, other types of arrhythmia and signals that are too noisy to interpret. In order to ease learning over the temporal dimension, we introduce a novel task formulation that harnesses the natural segmentation of ECG signals into heartbeats to drastically reduce the number of time steps per sequence. Additionally, we extend our RNNs with an attention mechanism that enables us to reason about which heartbeats our RNNs focus on to make their decisions. Through the use of attention, our model maintains a high degree of interpretability, while also achieving state-of-the-art classification performance with an average F1 score of 0.79 on an unseen test set (n=3,658).
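A rough sketch of the beat-level formulation described above: instead of feeding every ECG sample to a recurrent model, the recording is split at heartbeat boundaries and each beat becomes one time step. The code below is illustrative only and is not the authors' pipeline. It assumes R-peak positions are already available, and the per-beat features (`rr_interval_s`, `peak_to_peak`), the function names, and the sampling rate in the example are all hypothetical. The softmax at the end shows how per-beat attention scores can be normalised into weights that indicate which beats a model attends to.

```python
import math


def beats_from_peaks(signal, r_peaks, fs):
    """Turn a sampled ECG into one feature dict per heartbeat.

    signal:  list of samples.
    r_peaks: sorted sample indices of detected R peaks (assumed given).
    fs:      sampling rate in Hz.
    """
    beats = []
    # Each beat spans from one R peak to the next.
    for start, end in zip(r_peaks, r_peaks[1:]):
        segment = signal[start:end]
        beats.append({
            "rr_interval_s": (end - start) / fs,
            "peak_to_peak": max(segment) - min(segment),
        })
    return beats


def attention_weights(scores):
    """Softmax over per-beat scores, giving weights that sum to 1."""
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    fs = 300  # hypothetical sampling rate
    signal = [0.0] * 1000
    r_peaks = [100, 400, 700]
    for idx in r_peaks:
        signal[idx] = 1.0  # crude stand-in for an R peak

    beats = beats_from_peaks(signal, r_peaks, fs)
    print(len(signal), "samples ->", len(beats), "beat-level time steps")
    print(attention_weights([0.2, 1.5]))
```

The sequence length drops from the number of samples to the number of beats, which is the reduction in time steps the abstract refers to.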
