Giulia Da Poian

Benchmarking real-time algorithms for in-phase auditory stimulation of low amplitude slow waves with wearable EEG devices during sleep

Mar 04, 2022

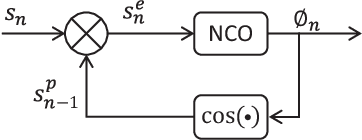

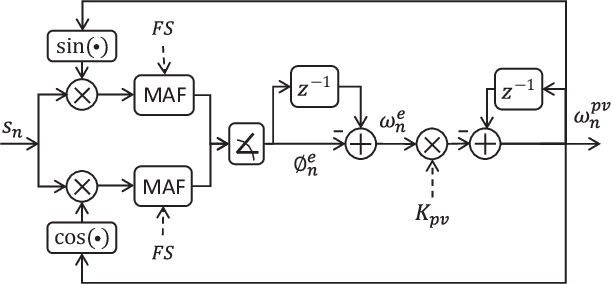

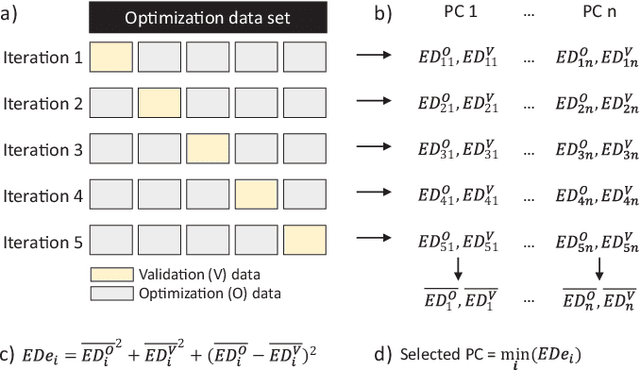

Abstract: Auditory stimulation of EEG slow waves (SW) during non-rapid eye movement (NREM) sleep has been shown to improve cognitive function when it is delivered at the up-phase of SW. SW enhancement is particularly desirable in subjects with low-amplitude SW, such as older adults or patients suffering from neurodegeneration such as Parkinson's disease (PD). However, existing algorithms to estimate the up-phase suffer from poor phase accuracy at low EEG amplitudes and when SW frequencies are not constant. We introduce two novel algorithms for real-time EEG phase estimation on autonomous wearable devices. The algorithms were based on a phase-locked loop (PLL) and, for the first time, a phase vocoder (PV). We compared these phase tracking algorithms with a simple amplitude threshold approach. The optimized algorithms were benchmarked for phase accuracy and for the capacity to estimate phase at SW amplitudes between 20 and 60 µV and at SW frequencies above 1 Hz, on 324 recordings from healthy older adults and PD patients. Furthermore, the algorithms were implemented on a wearable device, and their computational efficiency and performance were evaluated on simulated sleep EEG as well as prospectively during a recording with a PD patient. All three algorithms delivered more than 70% of the stimulation triggers during the SW up-phase. The PV showed the highest capacity for targeting low-amplitude SW and SW with frequencies above 1 Hz. The testing on real-time hardware revealed that both PV and PLL have a marginal impact on microcontroller load, while the efficiency of the PV was 4% lower than that of the PLL. Active auditory stimulation did not influence the phase tracking. This work demonstrated that phase-accurate auditory stimulation can be delivered during home-based sleep interventions with a wearable device, including in populations with low-amplitude SW.
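The up-phase figure quoted in the abstract (more than 70% of triggers) is a fraction of stimulation triggers whose SW phase falls inside a target window. The sketch below illustrates how such a fraction could be computed offline. It is not the authors' evaluation code: the function names, the phase convention (0 rad at the positive SW peak), and the window of -π/2 to π/2 are all assumptions made for illustration, since the abstract does not define them.

```python
import math


def wrap_phase(phase):
    """Wrap a phase in radians into the interval [-pi, pi)."""
    return (phase + math.pi) % (2 * math.pi) - math.pi


def up_phase_fraction(trigger_phases, lo=-math.pi / 2, hi=math.pi / 2):
    """Fraction of triggers whose phase lies in [lo, hi].

    Assumption: phase 0 is the positive SW peak, so [-pi/2, pi/2]
    stands in for the "up-phase". The paper may use another window.
    """
    if not trigger_phases:
        raise ValueError("no trigger phases given")
    hits = sum(1 for p in trigger_phases if lo <= wrap_phase(p) <= hi)
    return hits / len(trigger_phases)


def circular_mean(phases):
    """Mean direction of a set of phases (radians), via the resultant vector."""
    s = sum(math.sin(p) for p in phases)
    c = sum(math.cos(p) for p in phases)
    return math.atan2(s, c)


# Hypothetical trigger phases in radians, invented for this example only.
example_phases = [0.0, 0.5, -1.0, 3.0]
fraction = up_phase_fraction(example_phases)
```

Reporting the circular mean alongside the fraction is a common way to summarize whether triggers cluster before or after the target phase; whether the paper reports it this way is not stated in the abstract.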

Multispectral Video Fusion for Non-contact Monitoring of Respiratory Rate and Apnea

Apr 21, 2020

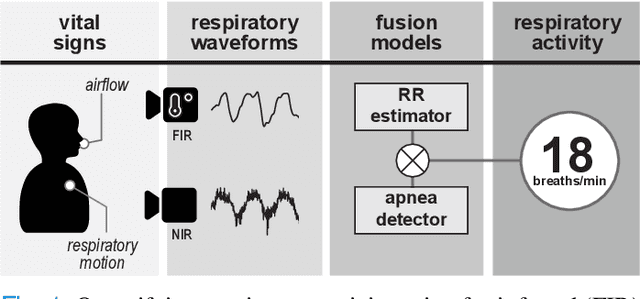

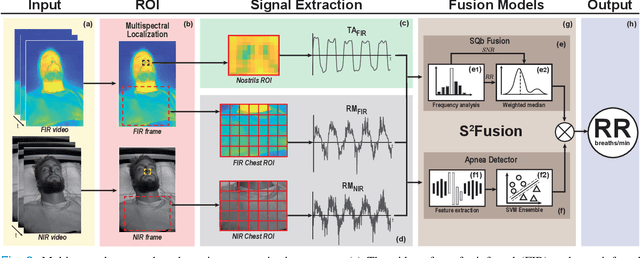

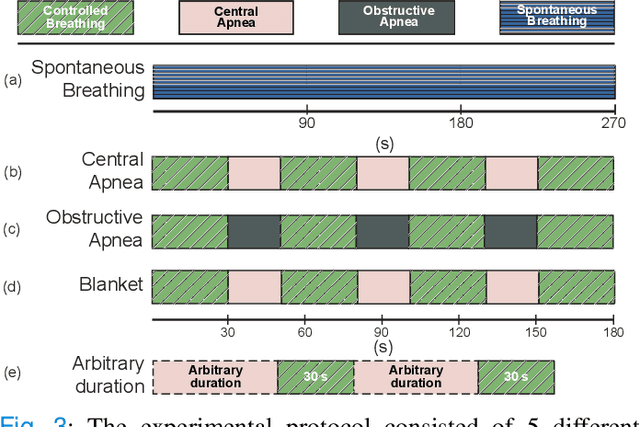

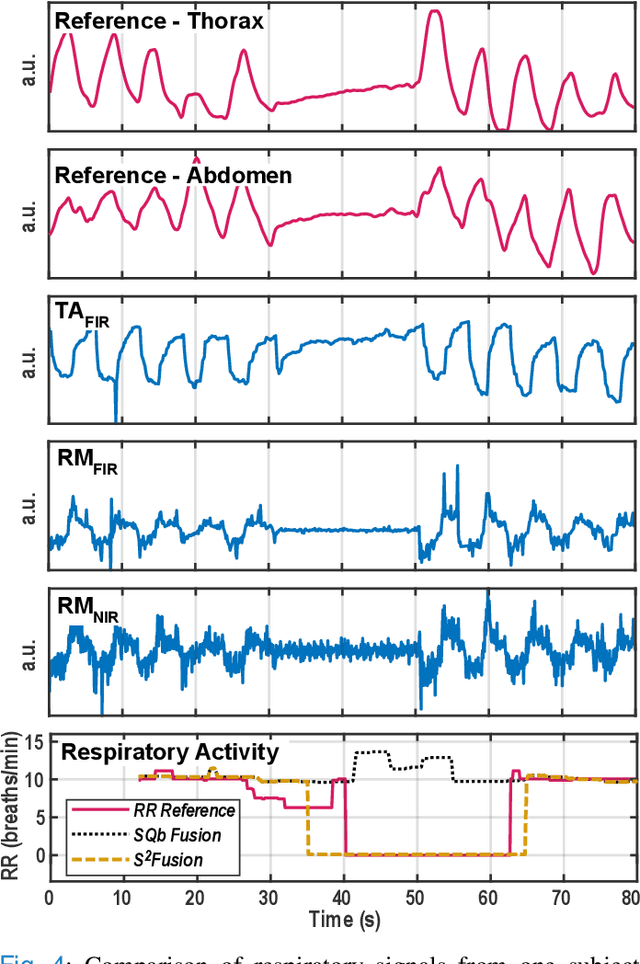

Abstract: Continuous monitoring of respiratory activity is desirable in many clinical applications to detect respiratory events. Non-contact monitoring of respiration can be achieved with near- and far-infrared spectrum cameras. However, current technologies are not sufficiently robust to be used in clinical applications. For example, they fail to estimate an accurate respiratory rate (RR) during apnea. We present a novel algorithm based on multispectral data fusion that aims to estimate RR during apnea as well. The algorithm addresses the RR estimation and apnea detection tasks independently. Respiratory information is extracted from multiple sources and fed into an RR estimator and an apnea detector, whose results are fused into a final respiratory activity estimation. We evaluated the system retrospectively using data from 30 healthy adults who performed diverse controlled breathing tasks while lying supine in a dark room and reproduced central and obstructive apneic events. Combining respiratory information from multispectral cameras reduced the root mean square error (RMSE) of the RR estimation from up to 4.64 breaths/min with monospectral data to 1.60 breaths/min. The median F1 scores for classifying obstructive (0.75 to 0.86) and central apnea (0.75 to 0.93) also improved. Furthermore, the independent consideration of apnea detection led to a more robust system (RMSE of 4.44 vs. 7.96 breaths/min). Our findings may represent a step towards the use of cameras for vital sign monitoring in medical applications.
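The abstract describes an RR estimator and an apnea detector whose outputs are fused, and it reports accuracy as RMSE in breaths/min. The sketch below shows one way such a fusion and its RMSE could look. The fusion rule used here (report 0 breaths/min whenever apnea is flagged) is an assumption for illustration, not the rule described in the paper, and the function names and sample values are hypothetical.

```python
import math


def fuse_rr_and_apnea(rr_estimates, apnea_flags):
    """Combine per-window RR estimates with per-window apnea flags.

    Assumed rule (not taken from the paper): when the apnea detector
    fires, the fused respiratory rate is set to 0 breaths/min.
    """
    if len(rr_estimates) != len(apnea_flags):
        raise ValueError("inputs must have the same length")
    return [0.0 if flagged else rr
            for rr, flagged in zip(rr_estimates, apnea_flags)]


def rmse(estimates, reference):
    """Root mean square error between two equal-length sequences."""
    if len(estimates) != len(reference) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical values in breaths/min, invented for this example only.
rr_only = [12.0, 11.0, 14.0, 13.0]       # RR estimator output
apnea = [False, True, False, False]      # apnea detector output
reference = [12.0, 0.0, 15.0, 13.0]      # ground truth, apnea in window 2

fused = fuse_rr_and_apnea(rr_only, apnea)
error_fused = rmse(fused, reference)
error_rr_only = rmse(rr_only, reference)
```

In this toy case the RR estimator alone keeps reporting a rate during the apneic window, which dominates its error, while the fused output does not. That mirrors the qualitative point of the abstract, but the numbers here carry no meaning beyond the illustration.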
