Tu Minh Phuong

SADL: An Effective In-Context Learning Method for Compositional Visual QA

Jul 02, 2024

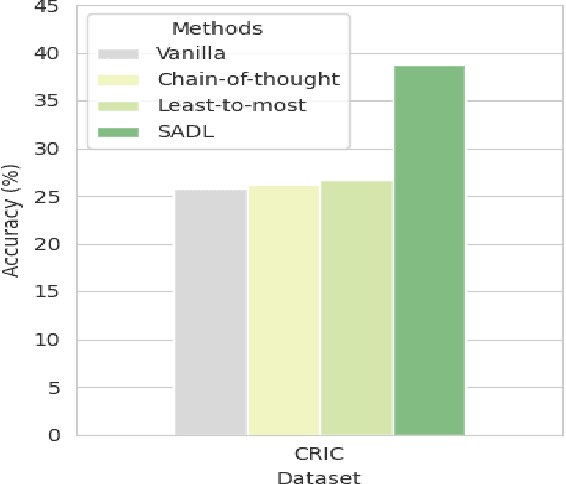

Abstract: Large vision-language models (LVLMs) offer a novel capability for performing in-context learning (ICL) in Visual QA. When prompted with a few demonstrations of image-question-answer triplets, LVLMs have demonstrated the ability to discern underlying patterns and transfer this latent knowledge to answer new questions about unseen images without the need for expensive supervised fine-tuning. However, designing effective vision-language prompts, especially for compositional questions, remains poorly understood. Adapting language-only ICL techniques may not necessarily work because we need to bridge the visual-linguistic semantic gap: symbolic concepts must be grounded in visual content, which does not share the syntactic linguistic structures. This paper introduces SADL, a new visual-linguistic prompting framework for the task. SADL revolves around three key components: SAmpling, Deliberation, and Pseudo-Labeling of image-question pairs. Given an image-question query, we sample image-question pairs from the training data that are in semantic proximity to the query. To address the compositional nature of questions, the deliberation step decomposes complex questions into a sequence of subquestions. Finally, the sequence is progressively annotated one subquestion at a time to generate a sequence of pseudo-labels. We investigate the behaviors of SADL under OpenFlamingo on large-scale Visual QA datasets, namely GQA, GQA-OOD, CLEVR, and CRIC. The evaluation demonstrates the critical roles of sampling in the neighborhood of the image, the decomposition of complex questions, and the accurate pairing of the subquestions and labels. These findings do not always align with those found in language-only ICL, suggesting fresh insights in vision-language settings.
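The sampling step described above can be illustrated with a nearest-neighbor lookup. This is only a minimal sketch: the abstract does not say how semantic proximity is measured, so the cosine similarity, the toy two-dimensional embeddings, and the names `cosine` and `sample_demonstrations` are all assumptions made for illustration, not the paper's implementation.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def sample_demonstrations(query_vec, train_vecs, k=2):
    """Return indices of the k training pairs most similar to the query.

    Assumption: each image-question pair is already encoded as a vector.
    """
    ranked = sorted(range(len(train_vecs)),
                    key=lambda i: cosine(query_vec, train_vecs[i]),
                    reverse=True)
    return ranked[:k]

# Toy embeddings standing in for encoded image-question pairs (hypothetical).
train_vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
query_vec = [1.0, 0.05]
neighbors = sample_demonstrations(query_vec, train_vecs, k=2)
```

The selected pairs would then be decomposed into subquestions and pseudo-labeled before being placed in the prompt; those two steps depend on the LVLM and are not sketched here.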

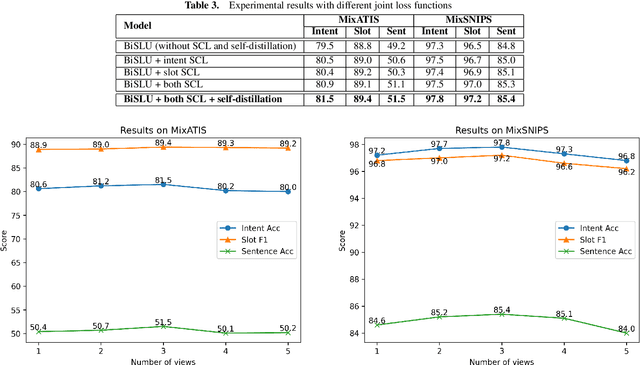

Joint Multiple Intent Detection and Slot Filling with Supervised Contrastive Learning and Self-Distillation

Aug 28, 2023

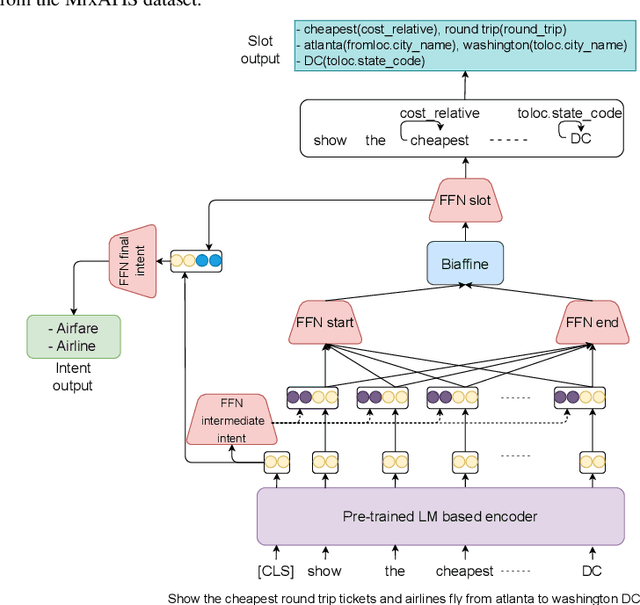

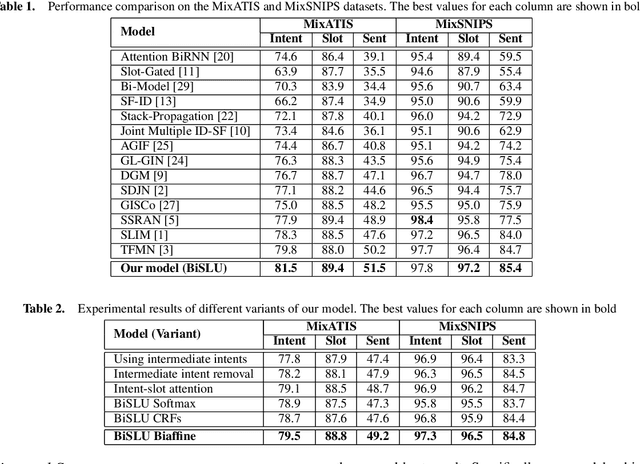

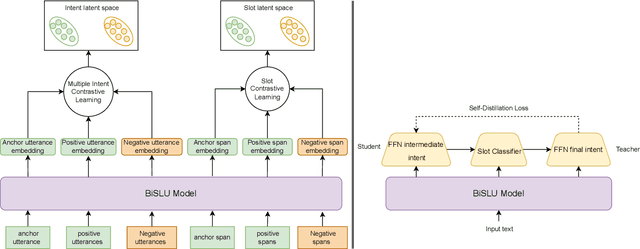

Abstract: Multiple intent detection and slot filling are two fundamental and crucial tasks in spoken language understanding. Motivated by the fact that the two tasks are closely related, joint models that can detect intents and extract slots simultaneously are preferred to individual models that perform each task independently. The accuracy of a joint model depends heavily on the ability of the model to transfer information between the two tasks so that the result of one task can correct the result of the other. In addition, since a joint model has multiple outputs, training the model effectively is also challenging. In this paper, we present a method for multiple intent detection and slot filling that addresses these challenges. First, we propose a bidirectional joint model that explicitly employs intent information to recognize slots and slot features to detect intents. Second, we introduce a novel method for training the proposed joint model using supervised contrastive learning and self-distillation. Experimental results on two benchmark datasets, MixATIS and MixSNIPS, show that our method outperforms state-of-the-art models in both tasks. The results also demonstrate the contributions of both the bidirectional design and the training method to the accuracy improvement. Our source code is available at https://github.com/anhtunguyen98/BiSLU

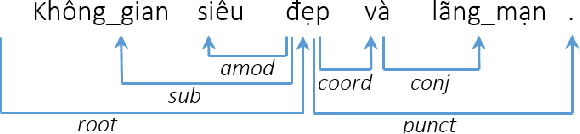

Analyzing Vietnamese Legal Questions Using Deep Neural Networks with Biaffine Classifiers

Apr 27, 2023

Abstract: In this paper, we propose using deep neural networks to extract important information from Vietnamese legal questions, a fundamental task towards building a question answering system in the legal domain. Given a legal question in natural language, the goal is to extract all the segments that contain the information needed to answer the question. We introduce a deep model that solves the task in three stages. First, our model leverages recent advanced autoencoding language models to produce contextual word embeddings, which are then combined with character-level and POS-tag information to form word representations. Next, bidirectional long short-term memory networks are employed to capture the relations among words and generate sentence-level representations. In the third stage, borrowing ideas from graph-based dependency parsing methods, which provide a global view of the input sentence, we use biaffine classifiers to estimate the probability that each pair of start-end words forms an important segment. Experimental results on a public Vietnamese legal dataset show that our model outperforms previous work by a large margin, achieving an F1 score of 94.79%. The results also demonstrate the effectiveness of using contextual features extracted from pre-trained language models combined with other types of features, such as character-level and POS-tag features, when training on a limited dataset.
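To make the third stage concrete, the following sketch scores every start-end word pair with a bilinear form and converts the score to a probability. It is an illustration under stated assumptions rather than the paper's model: the toy vectors, the weight matrix `U`, the sigmoid output, and the function name `biaffine_span_probs` are hypothetical, and in a real system the word vectors would come from the BiLSTM stage and `U` would be learned.

```python
import math

def biaffine_span_probs(starts, ends, U):
    """Score each (start, end) word pair as s_i^T U e_j, then apply a sigmoid.

    starts, ends: one vector per word, each with a trailing 1.0 appended as a
    bias feature. U: weight matrix. Pairs with end < start cannot form a
    segment and keep probability 0.
    """
    n = len(starts)
    probs = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            score = sum(starts[i][a] * U[a][b] * ends[j][b]
                        for a in range(len(U)) for b in range(len(U[0])))
            probs[i][j] = 1.0 / (1.0 + math.exp(-score))
    return probs

# Toy 3-word sentence with 1-dimensional features plus the bias term.
starts = [[2.0, 1.0], [0.0, 1.0], [-1.0, 1.0]]
ends = [[-1.0, 1.0], [0.0, 1.0], [2.0, 1.0]]
U = [[1.0, 0.0], [0.0, -1.0]]  # hand-picked weights, not learned
probs = biaffine_span_probs(starts, ends, U)

# The highest-probability pair is the most likely segment.
best = max(((i, j) for i in range(3) for j in range(3)),
           key=lambda p: probs[p[0]][p[1]])
```

Scoring all pairs at once is what gives the classifier a global view of the sentence, in contrast to tagging each word independently.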

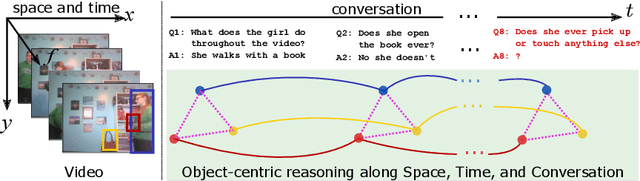

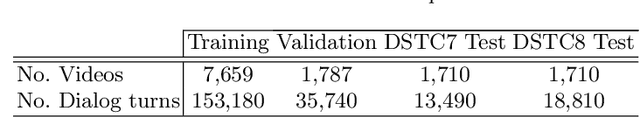

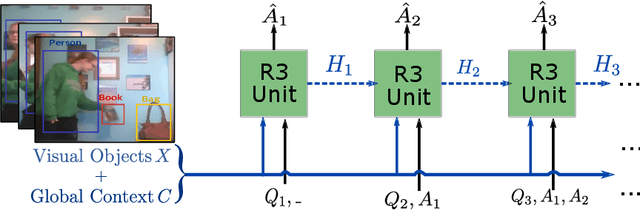

Video Dialog as Conversation about Objects Living in Space-Time

Jul 08, 2022

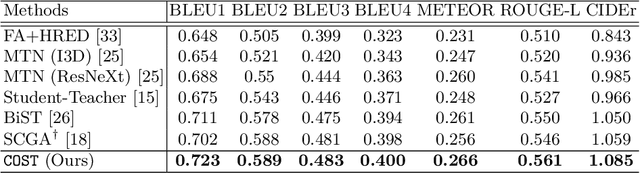

Abstract: It would be a technological feat to create a system that can hold a meaningful conversation with humans about what they watch. A setup toward that goal is presented as a video dialog task, where the system is asked to generate natural utterances in response to a question in an ongoing dialog. The task poses great visual, linguistic, and reasoning challenges that cannot be easily overcome without an appropriate representation scheme over video and dialog that supports high-level reasoning. To tackle these challenges, we present a new object-centric framework for video dialog that supports neural reasoning, dubbed COST (Conversation about Objects in Space-Time). Here, dynamic space-time visual content in videos is first parsed into object trajectories. Given this video abstraction, COST maintains and tracks object-associated dialog states, which are updated upon receiving new questions. Object interactions are dynamically and conditionally inferred for each question, and these serve as the basis for relational reasoning among them. COST also maintains a history of previous answers, which allows retrieval of relevant object-centric information to enrich the answer-forming process. Language production then proceeds in a step-wise manner, taking into account the context of the current utterance, the existing dialog, and the current question. We evaluate COST on the DSTC7 and DSTC8 benchmarks, demonstrating its competitiveness against state-of-the-art methods.

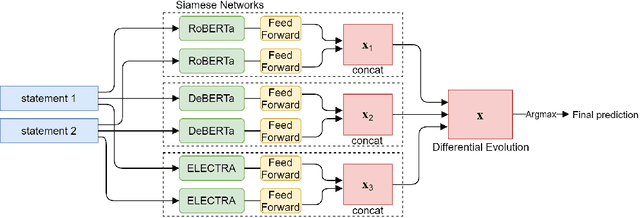

Autoencoding Language Model Based Ensemble Learning for Commonsense Validation and Explanation

Apr 07, 2022

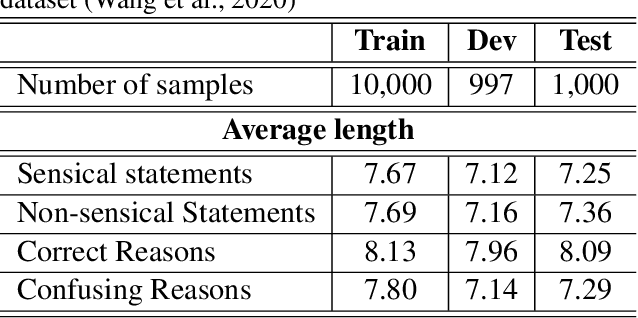

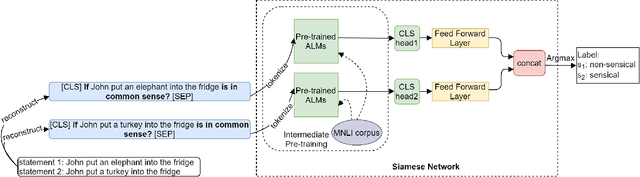

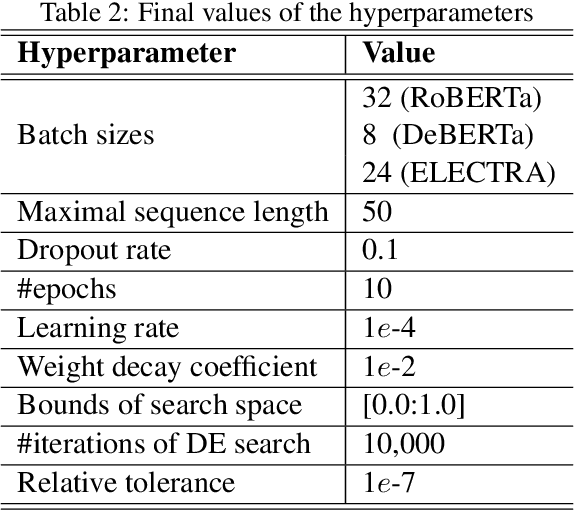

Abstract: An ultimate goal of artificial intelligence is to build computer systems that can understand human languages. Understanding commonsense knowledge about the world expressed in text is one of the foundational and challenging problems in creating such intelligent systems. As a step towards this goal, we present in this paper ALMEn, an Autoencoding Language Model based Ensemble learning method for commonsense validation and explanation. By ensembling several advanced pre-trained language models, including RoBERTa, DeBERTa, and ELECTRA, with Siamese neural networks, our method can distinguish natural language statements that are against commonsense (validation subtask) and correctly identify the reason why a statement is against commonsense (explanation selection subtask). Experimental results on the benchmark dataset of SemEval-2020 Task 4 show that our method outperforms state-of-the-art models, reaching accuracies of 97.9% and 95.4% on the validation and explanation selection subtasks, respectively.

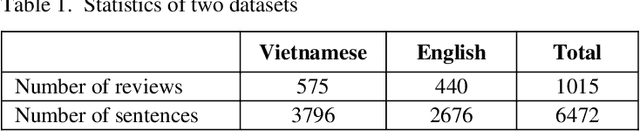

Leveraging Foreign Language Labeled Data for Aspect-Based Opinion Mining

Mar 15, 2020

Abstract: Aspect-based opinion mining is the task of identifying sentiment at the aspect level in opinionated text, which consists of two subtasks: aspect category extraction and sentiment polarity classification. While aspect category extraction aims to detect and categorize opinion targets such as product features, sentiment polarity classification assigns a sentiment label, i.e., positive, negative, or neutral, to each identified aspect. Supervised learning methods have been shown to deliver better accuracy for this task but they require labeled data, which is costly to obtain, especially for resource-poor languages like Vietnamese. To address this problem, we present a supervised aspect-based opinion mining method that utilizes labeled data from a foreign language (English in this case), which is translated to Vietnamese by an automated translation tool (Google Translate). Because aspects and opinions in different languages may be expressed by different words, we propose using word embeddings, in addition to other features, to reduce the vocabulary difference between the original and translated texts, thus improving the effectiveness of aspect category extraction and sentiment polarity classification processes. We also introduce an annotated corpus of aspect categories and sentiment polarities extracted from restaurant reviews in Vietnamese, and conduct a series of experiments on the corpus. Experimental results demonstrate the effectiveness of the proposed approach.

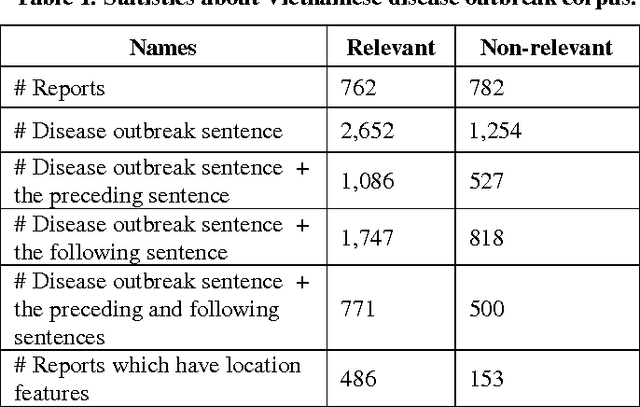

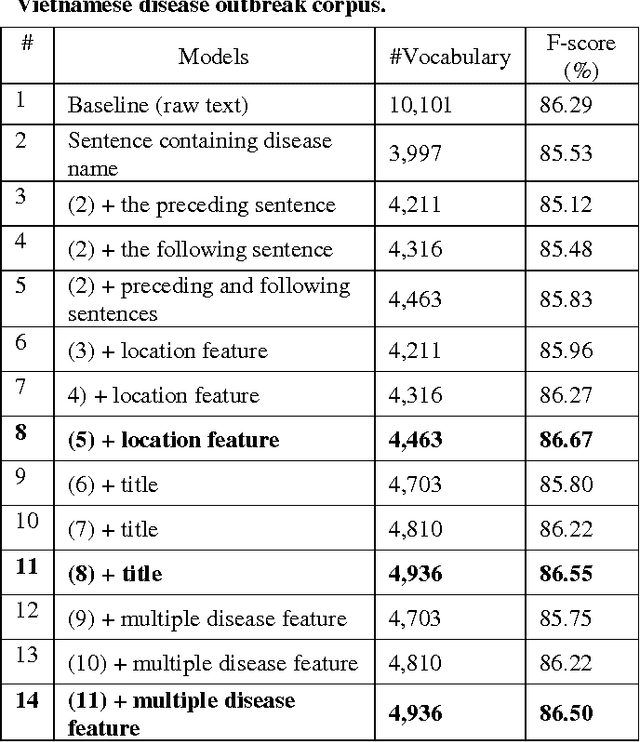

Classifying Vietnamese Disease Outbreak Reports with Important Sentences and Rich Features

Nov 22, 2019

Abstract: Text classification has been an important field of research since the mid-1990s. One of its many applications is in Web-based biosurveillance systems, which identify and summarize online disease outbreak reports. In this paper, we focus on classifying Vietnamese disease outbreak reports. We investigate important properties of disease outbreak reports, e.g., sentences containing the names of outbreak diseases or locations. Evaluation with 10 repetitions of 10-fold cross-validation using the Support Vector Machine algorithm shows that using sentences containing disease outbreak names together with their preceding and following sentences, in combination with location features, achieves the best F-score of 86.67%, an improvement of 0.38% over using all raw text. Our results suggest that using important sentences and rich features can improve the performance of Vietnamese disease outbreak text classification.

* 5 pages, 2 tables
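The evaluation protocol named in the abstract above, 10 repetitions of 10-fold cross-validation, can be sketched as follows. This is a generic illustration, not the authors' code: the function name `repeated_kfold`, the seed, and the sample count are hypothetical, the abstract does not say whether folds were stratified, and the SVM training itself is omitted.

```python
import random

def repeated_kfold(n_samples, n_splits=10, n_repeats=10, seed=0):
    """Yield (train_indices, test_indices) for repeated k-fold cross-validation."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        indices = list(range(n_samples))
        rng.shuffle(indices)  # a fresh random partition for each repetition
        folds = [indices[f::n_splits] for f in range(n_splits)]
        for f in range(n_splits):
            test = folds[f]
            train = [i for g, fold in enumerate(folds) if g != f for i in fold]
            yield train, test

# 10 repetitions x 10 folds gives 100 train/test splits over 50 toy documents.
splits = list(repeated_kfold(n_samples=50))
```

A classifier would be trained and scored on each split, and the reported F-score would be the average over all splits. Repeating the partitioning reduces the variance that comes from any single random assignment of documents to folds.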

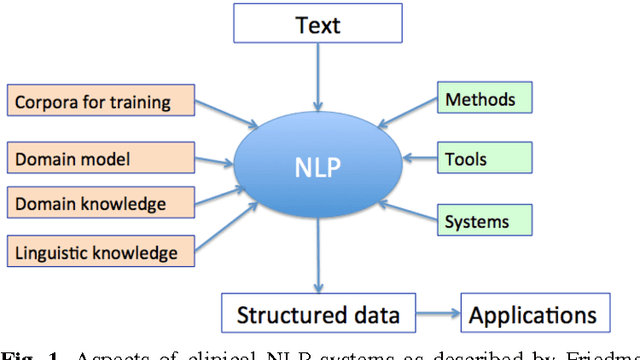

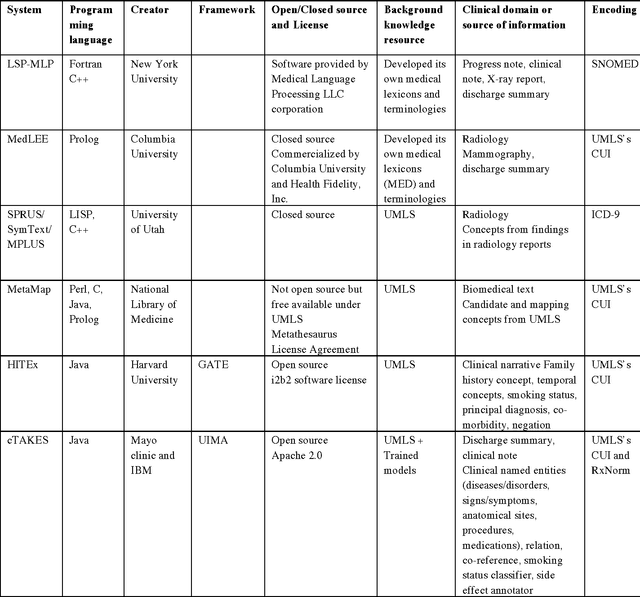

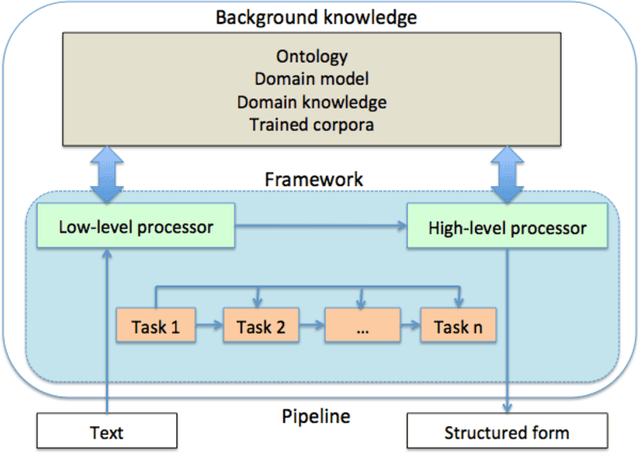

Natural Language Processing in Biomedicine: A Unified System Architecture Overview

Jan 08, 2014

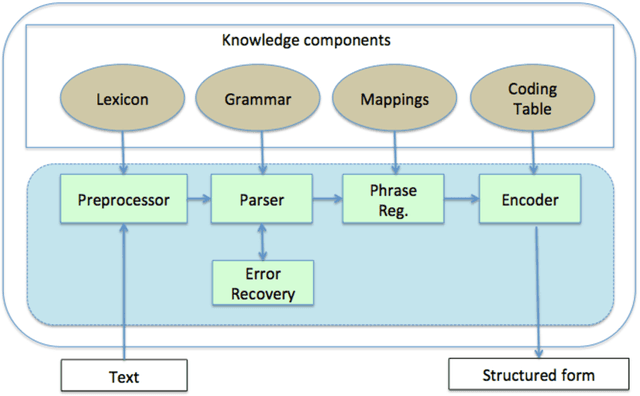

Abstract: In modern electronic medical records (EMR), much of the clinically important data (signs and symptoms, symptom severity, disease status, etc.) is not provided in structured data fields but is instead encoded in clinician-generated narrative text. Natural language processing (NLP) provides a means of "unlocking" this important data source for applications in clinical decision support, quality assurance, and public health. This chapter provides an overview of representative NLP systems in biomedicine based on a unified architectural view. The general architecture of an NLP system consists of two main components: background knowledge that includes biomedical knowledge resources, and a framework that integrates NLP tools to process text. Systems differ in both components, which we will review briefly. Additionally, challenges facing current research efforts in biomedical NLP include the paucity of large, publicly available annotated corpora, although initiatives that facilitate data sharing, system evaluation, and collaborative work between researchers in clinical NLP are starting to emerge.
