Torsten Bertram

Next-Best-Trajectory Planning of Robot Manipulators for Effective Observation and Exploration

Mar 28, 2025

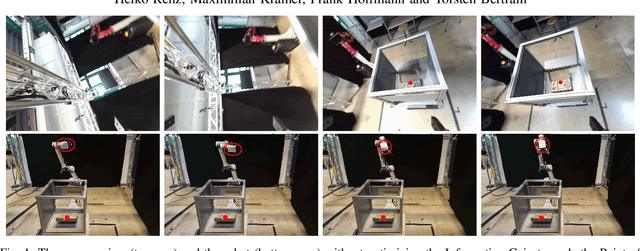

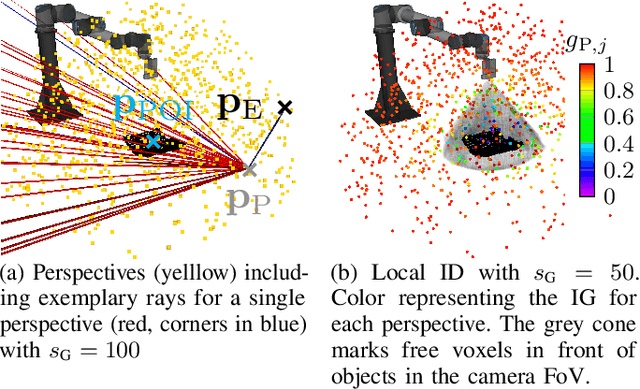

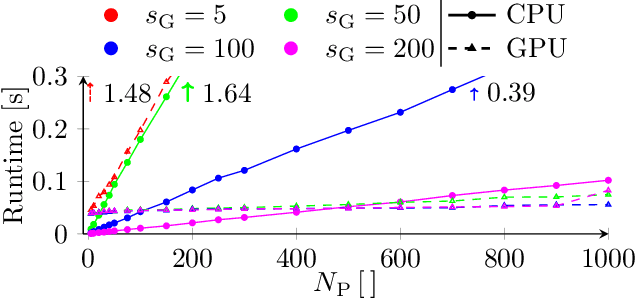

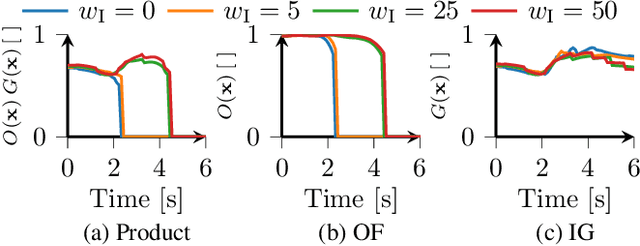

Abstract: Visual observation of objects is essential for many robotic applications, such as object reconstruction and manipulation, navigation, and scene understanding. Machine learning algorithms constitute the state of the art in many fields but require vast data sets, which are costly and time-intensive to collect. Automated strategies for observation and exploration are crucial to enhance the efficiency of data gathering. Therefore, a novel strategy utilizing the Next-Best-Trajectory principle is developed for a robot manipulator operating in dynamic environments. Local trajectories are generated to maximize the information gained from observations along the path while avoiding collisions. We employ a voxel map for environment modeling and utilize raycasting from perspectives around a point of interest to estimate the information gain. A global ergodic trajectory planner provides an optional reference trajectory to the local planner, improving exploration and helping to avoid local minima. To enhance computational efficiency, raycasting for estimating the information gain in the environment is executed in parallel on the graphics processing unit. Benchmark results confirm the efficiency of the parallelization, while real-world experiments demonstrate the strategy's effectiveness.
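The idea of estimating information gain by raycasting through a voxel map can be illustrated with a small sketch. This is not the authors' implementation: it is a serial, 2D, CPU-only simplification, and the cell states, the function name `information_gain`, and all parameters are assumptions made for illustration. Rays are stepped outward from a viewpoint, stop at occupied cells, and the distinct unknown cells they cross are counted.

```python
import math

# Hypothetical cell states for a 2D occupancy grid (assumption, not from the paper).
UNKNOWN, FREE, OCCUPIED = 0, 1, 2


def information_gain(grid, origin, n_rays=64, max_range=10.0, step=0.25):
    """Count distinct UNKNOWN cells reached by rays cast from `origin`.

    `grid[row][col]` holds a cell state, `origin` is (x, y) in cell units.
    A ray ends when it leaves the grid or hits an OCCUPIED cell.
    """
    rows, cols = len(grid), len(grid[0])
    seen = set()
    for k in range(n_rays):
        angle = 2.0 * math.pi * k / n_rays
        dx, dy = math.cos(angle), math.sin(angle)
        t = 0.0
        while t <= max_range:
            col = math.floor(origin[0] + t * dx)
            row = math.floor(origin[1] + t * dy)
            if not (0 <= row < rows and 0 <= col < cols):
                break  # ray left the map
            if grid[row][col] == OCCUPIED:
                break  # ray is blocked by an obstacle
            if grid[row][col] == UNKNOWN:
                seen.add((row, col))
            t += step
    return len(seen)
```

Each ray is independent of the others, which is what makes this kind of computation a natural candidate for the GPU parallelization mentioned in the abstract.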

LoRD: Adapting Differentiable Driving Policies to Distribution Shifts

Oct 15, 2024

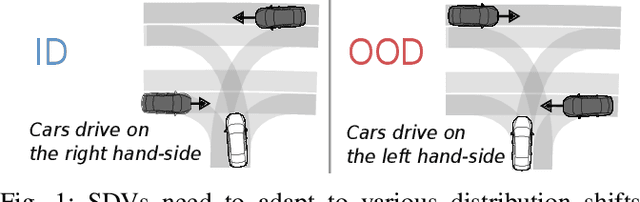

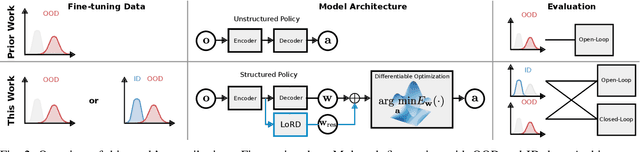

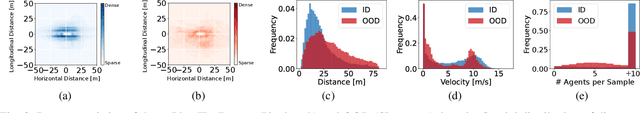

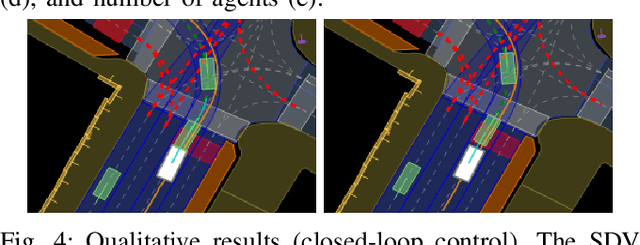

Abstract: Distribution shifts between operational domains can severely affect the performance of learned models in self-driving vehicles (SDVs). While this is a well-established problem, prior work has mostly explored naive solutions such as fine-tuning, focusing on the motion prediction task. In this work, we explore novel adaptation strategies for differentiable autonomy stacks consisting of prediction, planning, and control, evaluate them in closed loop, and investigate the often-overlooked issue of catastrophic forgetting. Specifically, we introduce two simple yet effective techniques: a low-rank residual decoder (LoRD) and multi-task fine-tuning. Through experiments across three models conducted on two real-world autonomous driving datasets (nuPlan, exiD), we demonstrate the effectiveness of our methods and highlight a significant performance gap between open-loop and closed-loop evaluation in prior approaches. Our approach reduces forgetting by up to 23.33% and improves the closed-loop OOD driving score by 8.83% in comparison to standard fine-tuning.
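The abstract names a low-rank residual decoder but does not describe its architecture. As a rough illustration of the general low-rank residual idea only, the sketch below adds a trainable rank-r correction `B (A x)` to a frozen linear map `W x`, in the spirit of low-rank adaptation. The function names and the exact placement of the residual are assumptions, not the LoRD design.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def low_rank_residual_forward(W, A, B, x):
    """Compute y = W x + B (A x).

    W (m x n) is the frozen pretrained weight. A (r x n) and B (m x r)
    form the trainable low-rank residual; with B = 0 the output equals
    the pretrained model's output.
    """
    base = matvec(W, x)
    residual = matvec(B, matvec(A, x))
    return [b + r for b, r in zip(base, residual)]


def trainable_parameters(m, n, r):
    """Return (full fine-tuning, low-rank residual) parameter counts."""
    return m * n, r * (m + n)
```

Because only `A` and `B` are updated, the pretrained weights stay intact, which is one plausible reason such residuals are associated with less forgetting than full fine-tuning.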

LEROjD: Lidar Extended Radar-Only Object Detection

Sep 09, 2024

Abstract: Accurate 3D object detection is vital for automated driving. While lidar sensors are well suited for this task, they are expensive and have limitations in adverse weather conditions. 3+1D imaging radar sensors offer a cost-effective, robust alternative but face challenges due to their low resolution and high measurement noise. Existing 3+1D imaging radar datasets include radar and lidar data, enabling cross-modal model improvements. Although lidar should not be used during inference, it can aid the training of radar-only object detectors. We explore two strategies to transfer knowledge from the lidar to the radar domain and radar-only object detectors: (1) multi-stage training with sequential lidar point cloud thin-out, and (2) cross-modal knowledge distillation. In the multi-stage process, three thin-out methods are examined. Our results show significant performance gains of up to 4.2 percentage points in mean Average Precision with multi-stage training and up to 3.9 percentage points with knowledge distillation by initializing the student with the teacher's weights. The main benefit of these approaches is their applicability to other 3D object detection networks without altering their architecture, as we show by evaluating them on two different object detectors. Our code is available at https://github.com/rst-tu-dortmund/lerojd

Multi-Object Tracking based on Imaging Radar 3D Object Detection

Jun 03, 2024

Abstract: Effective tracking of surrounding traffic participants allows for an accurate state estimation as a necessary ingredient for prediction of future behavior and therefore adequate planning of the ego vehicle trajectory. One approach for detecting and tracking surrounding traffic participants is the combination of a learning-based object detector with a classical tracking algorithm. Learning-based object detectors have been shown to work adequately on lidar and camera data, while learning-based object detectors using standard radar data input have proven to be inferior. Recently, with the improvements to radar sensor technology in the form of imaging radars, the object detection performance on radar has greatly improved but is still limited compared to lidar sensors due to the sparsity of the radar point cloud. This presents a unique challenge for the task of multi-object tracking. The tracking algorithm must overcome the limited detection quality while generating consistent tracks. To this end, a comparison between different multi-object tracking methods on imaging radar data is required to investigate its potential for downstream tasks. The work at hand compares multiple approaches and analyzes their limitations when applied to imaging radar data. Furthermore, enhancements to the presented approaches in the form of probabilistic association algorithms are considered for this task.
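To make the detector-plus-tracker pipeline more concrete, the following sketch shows one simple association step of the kind a classical tracker needs: gated, greedy nearest-neighbour matching between predicted track positions and new detections. It is an illustrative baseline chosen here, not one of the methods compared in the paper; the function name `associate` and the `gate` threshold are assumptions.

```python
import math


def associate(tracks, detections, gate=2.0):
    """Greedily match track positions to detection positions.

    `tracks` and `detections` are lists of (x, y) positions. Pairs are
    accepted in order of increasing distance, and pairs farther apart
    than `gate` are never matched.
    Returns (matches, unmatched_track_ids, unmatched_detection_ids).
    """
    pairs = sorted(
        (math.dist(t, d), ti, di)
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    )
    matches, used_tracks, used_dets = [], set(), set()
    for distance, ti, di in pairs:
        if distance > gate:
            break  # all remaining pairs are even farther apart
        if ti in used_tracks or di in used_dets:
            continue
        matches.append((ti, di))
        used_tracks.add(ti)
        used_dets.add(di)
    unmatched_tracks = [i for i in range(len(tracks)) if i not in used_tracks]
    unmatched_dets = [i for i in range(len(detections)) if i not in used_dets]
    return matches, unmatched_tracks, unmatched_dets
```

Unmatched detections would typically spawn new tracks and unmatched tracks would be coasted or deleted. Hard assignments like this are sensitive to missed and noisy detections, which is the kind of weakness probabilistic association aims to address.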

Energy-based Potential Games for Joint Motion Forecasting and Control

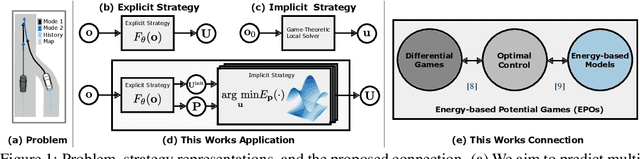

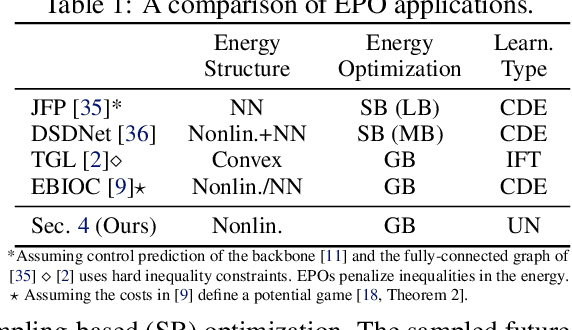

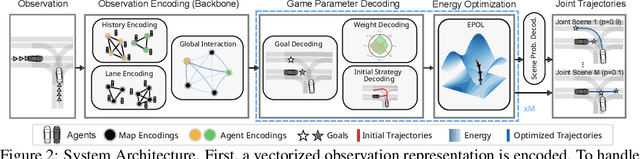

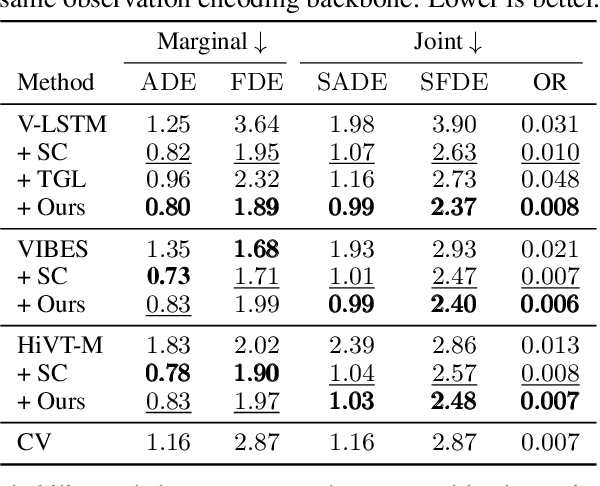

Dec 04, 2023

Abstract: This work uses game theory as a mathematical framework to address interaction modeling in multi-agent motion forecasting and control. Despite its interpretability, applying game theory to real-world robotics, like automated driving, faces challenges such as unknown game parameters. To tackle these, we establish a connection between differential games, optimal control, and energy-based models, demonstrating how existing approaches can be unified under our proposed Energy-based Potential Game formulation. Building upon this, we introduce a new end-to-end learning application that combines neural networks for game-parameter inference with a differentiable game-theoretic optimization layer, acting as an inductive bias. The analysis provides empirical evidence that the game-theoretic layer adds interpretability and improves the predictive performance of various neural network backbones using two simulations and two real-world driving datasets.

Time-Optimal Trajectory Planning in Highway Scenarios using Basis-Spline Parameterization

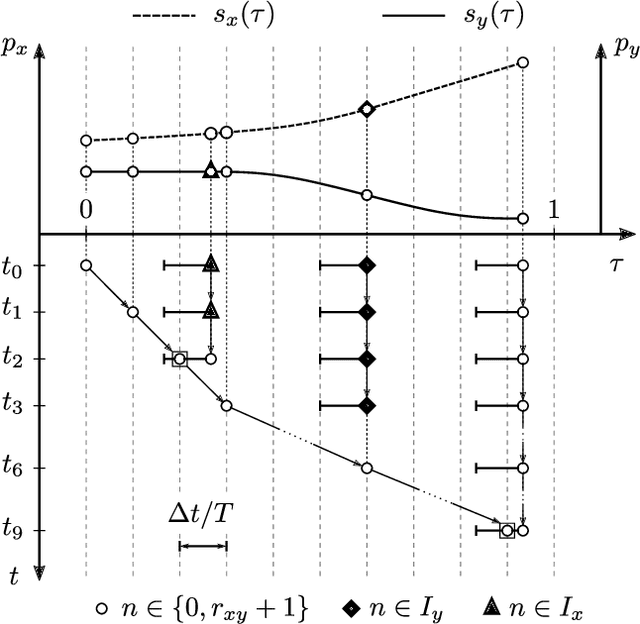

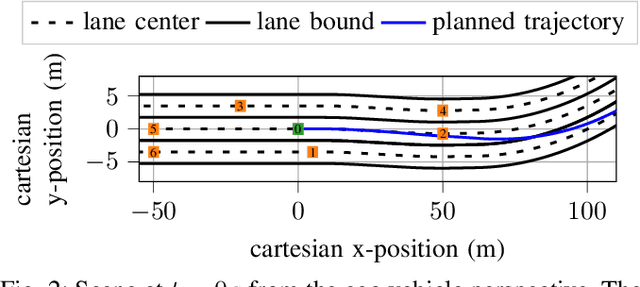

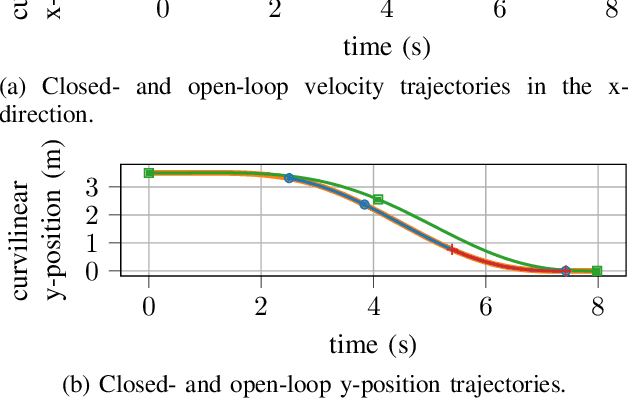

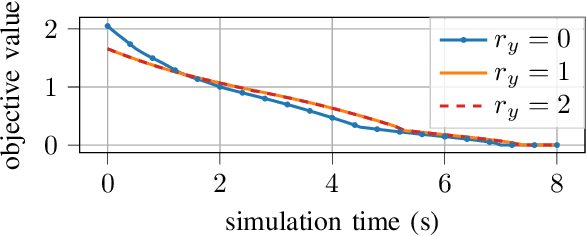

Oct 05, 2023

Abstract: Basis splines enable a time-continuous feasibility check with a finite number of constraints. Constraints apply to the whole trajectory for motion planning applications that require a collision-free and dynamically feasible trajectory. Existing motion planners that rely on gradient-based optimization apply time scaling to implement a shrinking planning horizon. They neither guarantee a recursively feasible trajectory nor enable reaching two terminal manifold parts at different time scales. This paper proposes a nonlinear optimization problem that addresses the drawbacks of existing approaches. To this end, the spline breakpoints are included in the optimization variables. Transformations between spline bases are implemented so that a sparse problem formulation is achieved. A strategy for breakpoint removal enables the convergence into a terminal manifold. The evaluation in an overtaking scenario shows the influence of the breakpoint number on the solution quality and the time required for optimization.
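The first sentence of the abstract rests on a standard B-spline property: basis functions are non-negative and sum to one, so the curve lies in the convex hull of its control points, and bounding the finitely many control points bounds the curve at every instant. The sketch below checks this numerically with the Cox-de Boor recursion. The knot vector, degree, and control values are arbitrary examples, not taken from the paper.

```python
def bspline_basis(i, p, knots, t):
    """Cox-de Boor recursion for the i-th B-spline basis of degree p."""
    if p == 0:
        return 1.0 if knots[i] <= t < knots[i + 1] else 0.0
    left = right = 0.0
    span_left = knots[i + p] - knots[i]
    if span_left > 0:
        left = (t - knots[i]) / span_left * bspline_basis(i, p - 1, knots, t)
    span_right = knots[i + p + 1] - knots[i + 1]
    if span_right > 0:
        right = ((knots[i + p + 1] - t) / span_right
                 * bspline_basis(i + 1, p - 1, knots, t))
    return left + right


def evaluate(control, p, knots, t):
    """Evaluate a scalar B-spline at t (valid for t below the last knot)."""
    return sum(c * bspline_basis(i, p, knots, t) for i, c in enumerate(control))


# Example data (assumptions): cubic spline, clamped knots, 6 control points.
DEGREE = 3
KNOTS = [0, 0, 0, 0, 1, 2, 3, 3, 3, 3]
CONTROL = [0.0, 2.0, -1.0, 4.0, 3.0, 1.0]

samples = [evaluate(CONTROL, DEGREE, KNOTS, 3.0 * k / 100) for k in range(100)]
inside = all(min(CONTROL) - 1e-9 <= s <= max(CONTROL) + 1e-9 for s in samples)
```

Here `inside` confirms that every sampled value stays between the smallest and largest control point, so a box constraint on six numbers covers the whole continuous trajectory. The same argument applies to derivatives, which are again B-splines with their own control points.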

On a Connection between Differential Games, Optimal Control, and Energy-based Models for Multi-Agent Interactions

Aug 31, 2023

Abstract: Game theory offers an interpretable mathematical framework for modeling multi-agent interactions. However, its applicability in real-world robotics applications is hindered by several challenges, such as unknown agents' preferences and goals. To address these challenges, we show a connection between differential games, optimal control, and energy-based models and demonstrate how existing approaches can be unified under our proposed Energy-based Potential Game formulation. Building upon this formulation, this work introduces a new end-to-end learning application that combines neural networks for game-parameter inference with a differentiable game-theoretic optimization layer, acting as an inductive bias. The experiments using simulated mobile robot pedestrian interactions and real-world automated driving data provide empirical evidence that the game-theoretic layer improves the predictive performance of various neural network backbones.

Ego-Motion Estimation and Dynamic Motion Separation from 3D Point Clouds for Accumulating Data and Improving 3D Object Detection

Aug 29, 2023

Abstract: New 3+1D high-resolution radar sensors are gaining importance for 3D object detection in the automotive domain due to their relative affordability and improved detection compared to classic low-resolution radar sensors. One limitation of high-resolution radar sensors, compared to lidar sensors, is the sparsity of the generated point cloud. This sparsity could be partially overcome by accumulating radar point clouds of subsequent time steps. This contribution analyzes limitations of accumulating radar point clouds on the View-of-Delft dataset. By employing different ego-motion estimation approaches, the dataset's inherent constraints and possible solutions are analyzed. Additionally, a learning-based instance motion estimation approach is deployed to investigate the influence of dynamic motion on the accumulated point cloud for object detection. Experiments document an improved object detection performance by applying an ego-motion estimation and dynamic motion correction approach.
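Accumulating point clouds across time steps requires expressing older points in the current sensor frame, which is where ego-motion enters. The following is a minimal planar sketch under stated assumptions: motion is 2D, the ego displacement `(ego_dx, ego_dy, ego_dyaw)` is given in the previous frame, and the function names are hypothetical. It is not the estimation approach from the paper, only the compensation step that any ego-motion estimate would feed.

```python
import math


def to_current_frame(points_prev, ego_dx, ego_dy, ego_dyaw):
    """Express points from the previous sensor frame in the current one.

    The ego vehicle moved by (ego_dx, ego_dy) and rotated by ego_dyaw
    (radians, counter-clockwise), both measured in the previous frame.
    Each point is shifted by the translation, then rotated by -ego_dyaw.
    """
    c, s = math.cos(ego_dyaw), math.sin(ego_dyaw)
    transformed = []
    for x, y in points_prev:
        px, py = x - ego_dx, y - ego_dy
        transformed.append((c * px + s * py, -s * px + c * py))
    return transformed


def accumulate(points_prev, points_now, ego_dx, ego_dy, ego_dyaw):
    """Merge the compensated previous scan with the current scan."""
    return to_current_frame(points_prev, ego_dx, ego_dy, ego_dyaw) + list(points_now)
```

This compensation is exact only for static points. A moving object is displaced between scans by its own motion, so its accumulated points smear unless a per-object motion correction is also applied, which is the effect the abstract investigates.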

Reviewing 3D Object Detectors in the Context of High-Resolution 3+1D Radar

Aug 10, 2023

Abstract: Recent developments and the beginning market introduction of high-resolution imaging 4D (3+1D) radar sensors have initiated deep learning-based radar perception research. We investigate deep learning-based models operating on radar point clouds for 3D object detection. 3D object detection on lidar point cloud data is a mature area of 3D vision. Many different architectures have been proposed, each with strengths and weaknesses. Due to similarities between 3D lidar point clouds and 3+1D radar point clouds, those existing 3D object detectors are a natural basis to start deep learning-based 3D object detection on radar data. Thus, the first step is to analyze the detection performance of the existing models on the new data modality and evaluate them in depth. In order to apply existing 3D point cloud object detectors developed for lidar point clouds to the radar domain, they need to be adapted first. While some detectors, such as PointPillars, have already been adapted to be applicable to radar data, we have adapted others, e.g., Voxel R-CNN, SECOND, PointRCNN, and PV-RCNN. To this end, we conduct a cross-model validation (evaluating a set of models on one particular data set) as well as a cross-data set validation (evaluating all models in the model set on several data sets). The high-resolution radar data used are the View-of-Delft and Astyx data sets. Finally, we evaluate several adaptations of the models and their training procedures. We also discuss major factors influencing the detection performance on radar data and propose possible solutions indicating potential future research avenues.

UMBRELLA: Uncertainty-Aware Model-Based Offline Reinforcement Learning Leveraging Planning

Nov 23, 2021

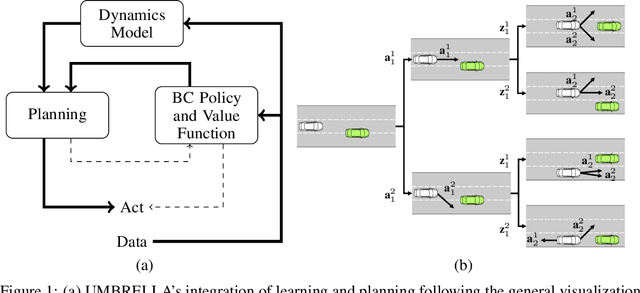

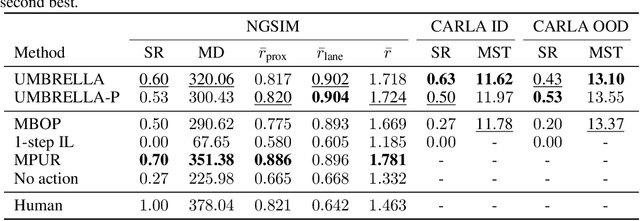

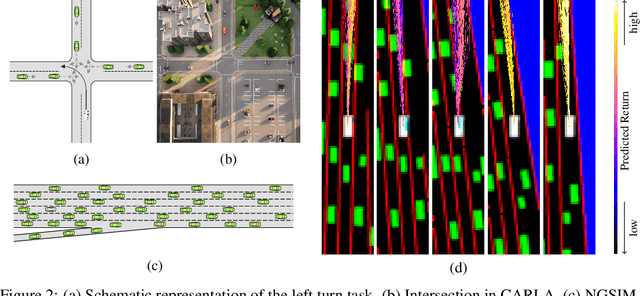

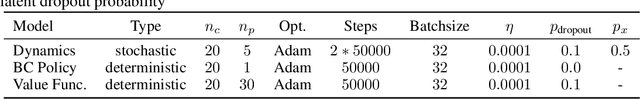

Abstract: Offline reinforcement learning (RL) provides a framework for learning decision-making from offline data and therefore constitutes a promising approach for real-world applications such as automated driving. Self-driving vehicles (SDVs) learn a policy, which potentially even outperforms the behavior in the sub-optimal data set. Especially in safety-critical applications such as automated driving, explainability and transferability are key to success. This motivates the use of model-based offline RL approaches, which leverage planning. However, current state-of-the-art methods often neglect the influence of aleatoric uncertainty arising from the stochastic behavior of multi-agent systems. This work proposes a novel approach for Uncertainty-aware Model-Based Offline REinforcement Learning Leveraging plAnning (UMBRELLA), which solves the prediction, planning, and control problem of the SDV jointly in an interpretable learning-based fashion. A trained action-conditioned stochastic dynamics model captures distinctively different future evolutions of the traffic scene. The analysis provides empirical evidence for the effectiveness of our approach in challenging automated driving simulations and based on a real-world public dataset.
