Frank Hoffmann

Freie Universität Berlin, Germany

MTevent: A Multi-Task Event Camera Dataset for 6D Pose Estimation and Moving Object Detection

May 16, 2025

Abstract:Mobile robots are reaching unprecedented speeds, with platforms like the Unitree B2 and Fraunhofer O3dyn achieving maximum speeds between 5 and 10 m/s. However, effectively utilizing such speeds remains a challenge due to the limitations of RGB cameras, which suffer from motion blur and fail to provide real-time responsiveness. Event cameras, with their asynchronous operation and low-latency sensing, offer a promising alternative for high-speed robotic perception. In this work, we introduce MTevent, a dataset designed for 6D pose estimation and moving object detection in highly dynamic environments with large detection distances. Our setup consists of a stereo-event camera and an RGB camera, capturing 75 scenes, each averaging 16 seconds, and featuring 16 unique objects under challenging conditions such as extreme viewing angles, varying lighting, and occlusions. MTevent is the first dataset to combine high-speed motion, long-range perception, and real-world object interactions, making it a valuable resource for advancing event-based vision in robotics. To establish a baseline, we evaluate the task of 6D pose estimation using NVIDIA's FoundationPose on RGB images, achieving an Average Recall of 0.22 with ground-truth masks, highlighting the limitations of RGB-based approaches in such dynamic settings. With MTevent, we provide a novel resource to improve perception models and foster further research in high-speed robotic vision. The dataset is available for download at https://huggingface.co/datasets/anas-gouda/MTevent

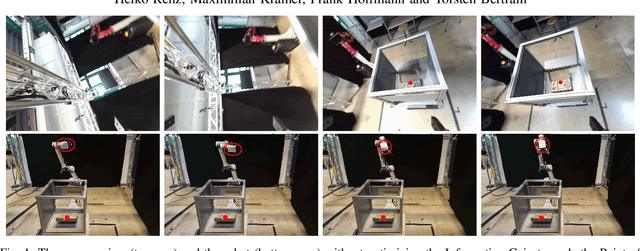

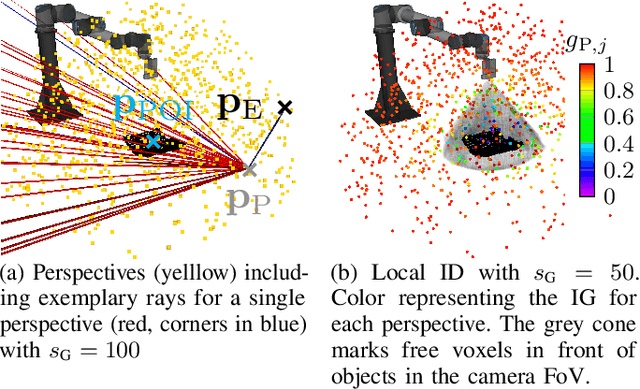

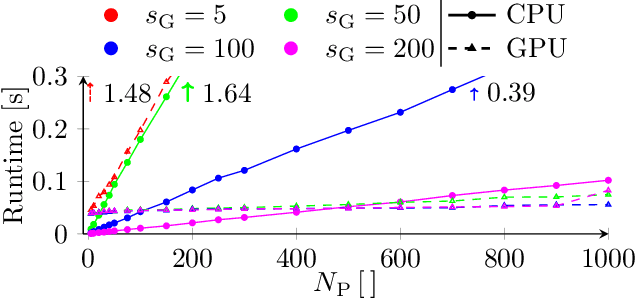

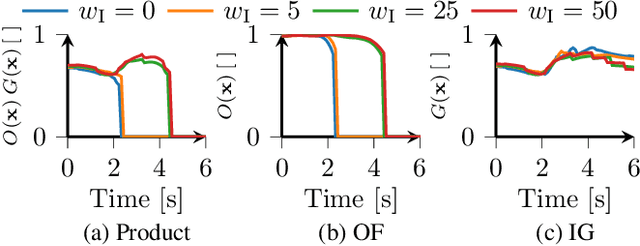

Next-Best-Trajectory Planning of Robot Manipulators for Effective Observation and Exploration

Mar 28, 2025

Abstract:Visual observation of objects is essential for many robotic applications, such as object reconstruction and manipulation, navigation, and scene understanding. Machine learning algorithms constitute the state-of-the-art in many fields but require vast data sets, which are costly and time-intensive to collect. Automated strategies for observation and exploration are crucial to enhance the efficiency of data gathering. Therefore, a novel strategy utilizing the Next-Best-Trajectory principle is developed for a robot manipulator operating in dynamic environments. Local trajectories are generated to maximize the information gained from observations along the path while avoiding collisions. We employ a voxel map for environment modeling and utilize raycasting from perspectives around a point of interest to estimate the information gain. A global ergodic trajectory planner provides an optional reference trajectory to the local planner, improving exploration and helping to avoid local minima. To enhance computational efficiency, raycasting for estimating the information gain in the environment is executed in parallel on the graphics processing unit. Benchmark results confirm the efficiency of the parallelization, while real-world experiments demonstrate the strategy's effectiveness.
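The raycasting-based information gain mentioned in this abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the voxel map is a plain dictionary, the names `cast_ray` and `information_gain` are hypothetical, rays are sampled at a fixed step rather than traversed exactly, and the loop runs sequentially on the CPU, whereas the abstract describes a parallel GPU implementation.

```python
import math

# Hypothetical voxel states for this sketch.
UNKNOWN, FREE, OCCUPIED = 0, 1, 2


def cast_ray(grid, origin, direction, max_range, step=0.5):
    """Return the unknown voxels a ray crosses before it is blocked.

    grid maps integer (i, j, k) indices to a voxel state; an index that
    is missing from grid is treated as lying outside the map.
    Fixed-step sampling is a simplification and can miss voxels that
    the ray only clips at a corner.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    unit = tuple(d / norm for d in direction)
    seen = set()
    t = 0.0
    while t <= max_range:
        voxel = tuple(int(math.floor(o + t * u)) for o, u in zip(origin, unit))
        state = grid.get(voxel)
        if state is None or state == OCCUPIED:
            break  # left the map or hit an obstacle
        if state == UNKNOWN:
            seen.add(voxel)
        t += step
    return seen


def information_gain(grid, viewpoint, directions, max_range):
    """Count the distinct unknown voxels visible from one viewpoint."""
    seen = set()
    for direction in directions:
        seen |= cast_ray(grid, viewpoint, direction, max_range)
    return len(seen)
```

In this simplified model, a planner would score each candidate viewpoint along a trajectory with `information_gain` and prefer trajectories whose viewpoints reveal more unknown voxels. Because each ray is independent of the others, the loop over `directions` is the natural part to parallelize.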

UMBRELLA: Uncertainty-Aware Model-Based Offline Reinforcement Learning Leveraging Planning

Nov 23, 2021

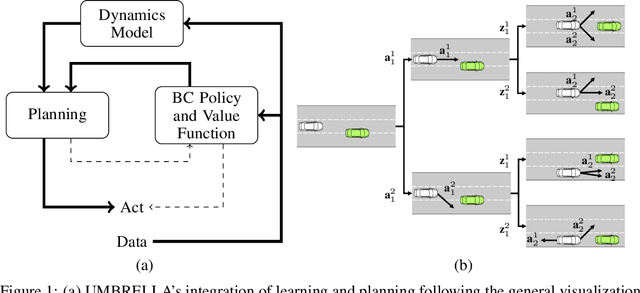

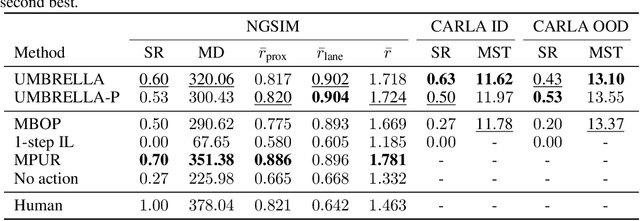

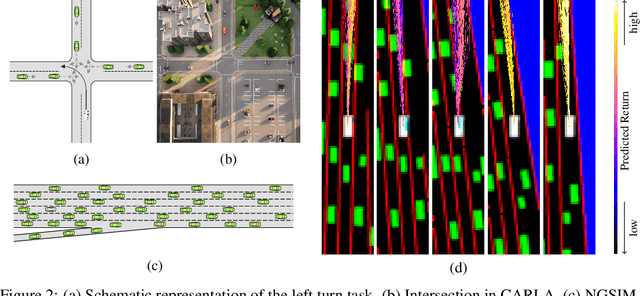

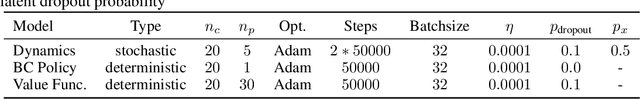

Abstract:Offline reinforcement learning (RL) provides a framework for learning decision-making from offline data and therefore constitutes a promising approach for real-world applications such as automated driving. Self-driving vehicles (SDVs) learn a policy that can potentially outperform the behavior in the sub-optimal data set. Especially in safety-critical applications such as automated driving, explainability and transferability are key to success. This motivates the use of model-based offline RL approaches, which leverage planning. However, current state-of-the-art methods often neglect the influence of aleatoric uncertainty arising from the stochastic behavior of multi-agent systems. This work proposes a novel approach for Uncertainty-aware Model-Based Offline REinforcement Learning Leveraging plAnning (UMBRELLA), which solves the prediction, planning, and control problem of the SDV jointly in an interpretable learning-based fashion. A trained action-conditioned stochastic dynamics model captures distinctly different future evolutions of the traffic scene. The analysis provides empirical evidence for the effectiveness of our approach in challenging automated driving simulations and on a real-world public dataset.

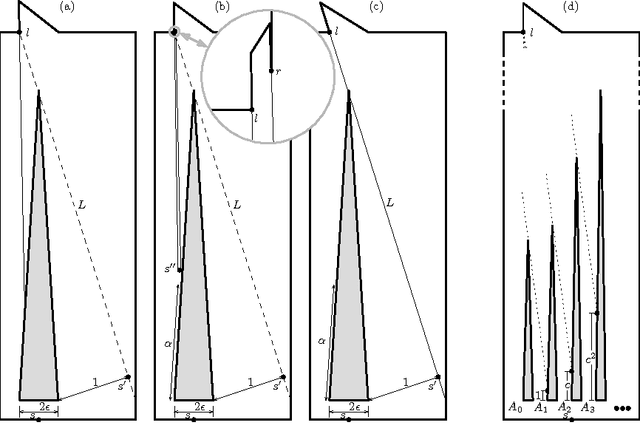

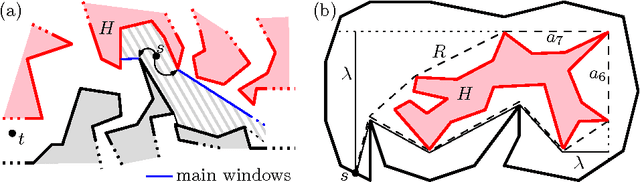

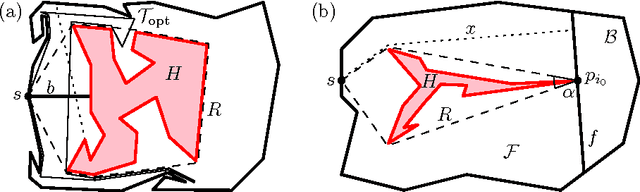

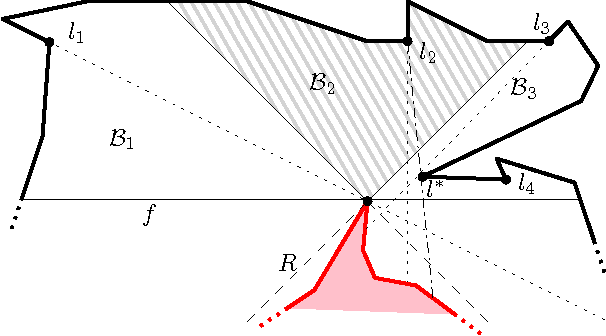

Online Exploration of Polygons with Holes

Jul 01, 2012

Abstract:We study online strategies for autonomous mobile robots with vision to explore unknown polygons with at most h holes. Our main contribution is an (h+c_0)!-competitive strategy for such polygons under the assumption that each hole is marked with a special color, where c_0 is a universal constant. The strategy is based on a new hybrid approach. Furthermore, we give a new lower bound construction for small h.
