Moritz Roidl

Technical University of Dortmund

MTevent: A Multi-Task Event Camera Dataset for 6D Pose Estimation and Moving Object Detection

May 16, 2025

Abstract: Mobile robots are reaching unprecedented speeds, with platforms like Unitree B2 and Fraunhofer O3dyn achieving maximum speeds between 5 and 10 m/s. However, effectively utilizing such speeds remains a challenge due to the limitations of RGB cameras, which suffer from motion blur and fail to provide real-time responsiveness. Event cameras, with their asynchronous operation and low-latency sensing, offer a promising alternative for high-speed robotic perception. In this work, we introduce MTevent, a dataset designed for 6D pose estimation and moving object detection in highly dynamic environments with large detection distances. Our setup consists of a stereo-event camera and an RGB camera, capturing 75 scenes, each 16 seconds long on average, and featuring 16 unique objects under challenging conditions such as extreme viewing angles, varying lighting, and occlusions. MTevent is the first dataset to combine high-speed motion, long-range perception, and real-world object interactions, making it a valuable resource for advancing event-based vision in robotics. To establish a baseline, we evaluate the task of 6D pose estimation using NVIDIA's FoundationPose on RGB images, achieving an Average Recall of 0.22 with ground-truth masks, highlighting the limitations of RGB-based approaches in such dynamic settings. With MTevent, we provide a novel resource to improve perception models and foster further research in high-speed robotic vision. The dataset is available for download at https://huggingface.co/datasets/anas-gouda/MTevent

On the Effectiveness of Heterogeneous Ensemble Methods for Re-identification

Mar 19, 2024

Abstract: In this contribution, we introduce a novel ensemble method for the re-identification of industrial entities, using images of chipwood pallets and galvanized metal plates as dataset examples. Our algorithms replace commonly used, complex siamese neural networks with an ensemble of simplified, rudimentary models, providing wider applicability, especially in hardware-restricted scenarios. Each ensemble sub-model uses different types of extracted features of the given data as its input, allowing for the creation of effective ensembles in a fraction of the training duration needed for more complex state-of-the-art models. We reach state-of-the-art performance at our task, with a Rank-1 accuracy of over 77% and a Rank-10 accuracy of over 99%. We also introduce five distinct feature extraction approaches and study their combination using different ensemble methods.
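As a reading aid for the Rank-1 and Rank-10 figures above, the following is a minimal sketch of how Rank-k accuracy is commonly computed for re-identification. The function name, the ID strings, and the toy rankings are hypothetical and are not taken from the paper.

```python
def rank_k_accuracy(ranked_ids, true_ids, k):
    """Fraction of queries whose true identity appears among the top-k candidates."""
    hits = sum(
        1 for ranking, truth in zip(ranked_ids, true_ids) if truth in ranking[:k]
    )
    return hits / len(true_ids)


# Hypothetical toy data: three queries, each with gallery IDs ranked best-first.
rankings = [
    ["p7", "p2", "p9"],
    ["p4", "p1", "p3"],
    ["p5", "p8", "p6"],
]
truths = ["p7", "p1", "p6"]

rank1 = rank_k_accuracy(rankings, truths, k=1)  # only the first query is a top-1 hit
rank3 = rank_k_accuracy(rankings, truths, k=3)  # every true ID is within the top 3
```

Rank-k accuracy can only grow with k, which is why a Rank-10 score is always at least as high as the Rank-1 score on the same data.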

Object Pose Estimation Annotation Pipeline for Multi-view Monocular Camera Systems in Industrial Settings

Oct 23, 2023

Abstract: Object localization, and more specifically object pose estimation, in large industrial spaces such as warehouses and production facilities, is essential for material flow operations. Traditional approaches rely on artificial artifacts installed in the environment or on excessively expensive equipment that is not suitable at scale. A more practical approach is to utilize existing cameras in such spaces in order to address the underlying pose estimation problem and to localize objects of interest. In order to leverage state-of-the-art methods in deep learning for object pose estimation, large amounts of data need to be collected and annotated. In this work, we provide an approach to the annotation of large datasets of monocular images without the need for manual labor. Our approach localizes cameras in space, unifies their location with a motion capture system, and uses a set of linear mappings to project 3D models of objects of interest at their ground truth 6D pose locations. We test our pipeline on a custom dataset collected from a system of eight cameras in an industrial setting that mimics the intended area of operation. Our approach was able to provide consistent quality annotations for our dataset with 26,482 object instances at a fraction of the time required by human annotators.
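The abstract does not spell out the mappings it uses. As an illustration only, the sketch below assumes a standard pinhole camera model: a model point is moved from the object frame to the world frame with the ground-truth pose, then into the camera frame with the camera extrinsics, and finally onto the image with the intrinsic matrix. All names and numeric values are hypothetical.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def project_point(point_obj, R_obj, t_obj, R_cam, t_cam, K):
    """Project a 3D model point (object frame) to pixel coordinates.

    R_obj, t_obj: object-to-world pose (assumed to come from motion capture).
    R_cam, t_cam: world-to-camera extrinsics (assumed known from camera localization).
    K: 3x3 camera intrinsic matrix.
    """
    p_world = [a + b for a, b in zip(mat_vec(R_obj, point_obj), t_obj)]
    p_cam = [a + b for a, b in zip(mat_vec(R_cam, p_world), t_cam)]
    u, v, w = mat_vec(K, p_cam)
    return u / w, v / w  # perspective divide


# Hypothetical values: identity rotations, object 0.5 m to the side, camera 2 m away.
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]

u, v = project_point([0.0, 0.0, 0.0], I3, [0.5, 0.0, 0.0], I3, [0.0, 0.0, 2.0], K)
```

Projecting every vertex of an object's 3D model this way yields its silhouette in each camera image, which is the kind of annotation that would otherwise be drawn by hand.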

Event Camera as Region Proposal Network

May 01, 2023

Abstract: The human eye consists of two types of photoreceptors, rods and cones. Rods are responsible for monochrome vision, and cones for color vision. The number of rods is much higher than the number of cones, which means that most human vision processing is done in monochrome. An event camera reports the change in pixel intensity and is analogous to rods. Event and color cameras in computer vision are like rods and cones in human vision. Humans can notice objects moving in the peripheral vision (far right and left), but we cannot classify them (think of someone passing by on your far left or far right; this can trigger your attention without knowing who they are). Thus, rods act as a region proposal network (RPN) in human vision. Therefore, an event camera can act as a region proposal network in deep learning. Two-stage object detectors in deep learning, such as Mask R-CNN, consist of a backbone for feature extraction and an RPN. Currently, the RPN uses a brute-force method, trying out all the possible bounding boxes to detect an object. This requires much computation time to generate region proposals, making two-stage detectors inconvenient for fast applications. This work replaces the RPN in the Mask R-CNN of detectron2 with an event camera for generating proposals for moving objects, saving time and reducing computational cost. The proposed approach is faster than the two-stage detectors with comparable accuracy.
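To make the idea of events as region proposals concrete, here is a deliberately simplified sketch: it wraps all events from one time window in a single padded bounding box. This is not the paper's implementation and does not use detectron2; the function name, the padding, and the event-count threshold are assumptions for illustration.

```python
def propose_box(events, width, height, pad=2, min_events=5):
    """Return (x_min, y_min, x_max, y_max) enclosing the events, or None if too few.

    events: list of (x, y) pixel coordinates that fired within one time window.
    pad and min_events are hypothetical tuning parameters.
    """
    if len(events) < min_events:
        return None  # too little motion to justify a proposal
    xs = [x for x, _ in events]
    ys = [y for _, y in events]
    return (
        max(min(xs) - pad, 0),
        max(min(ys) - pad, 0),
        min(max(xs) + pad, width - 1),
        min(max(ys) + pad, height - 1),
    )


# Hypothetical events from a small moving object on a 64x48 sensor.
events = [(10, 20), (12, 22), (11, 25), (15, 21), (13, 24)]
box = propose_box(events, width=64, height=48)
```

A practical system would need to separate several moving objects, for example by clustering events, before emitting one box per cluster; the single-box version only shows why proposals derived from motion are cheap to compute.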

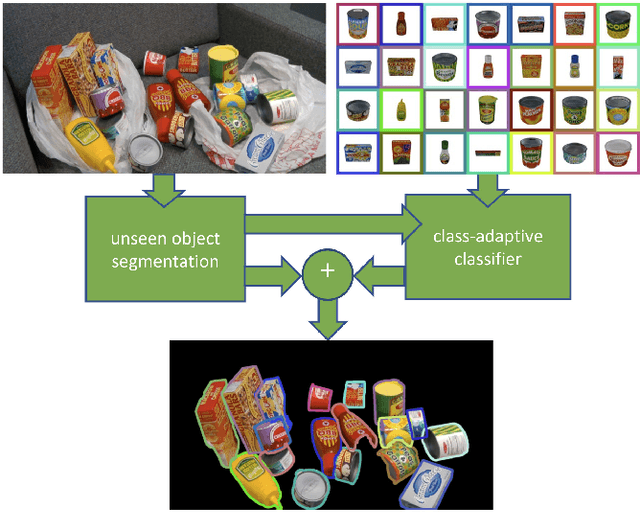

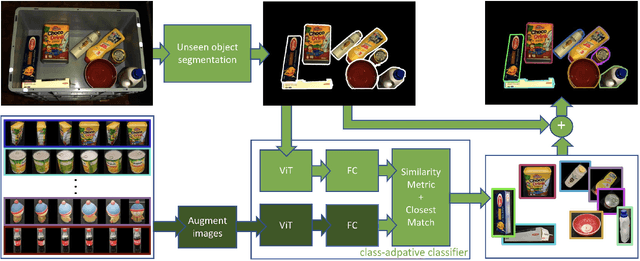

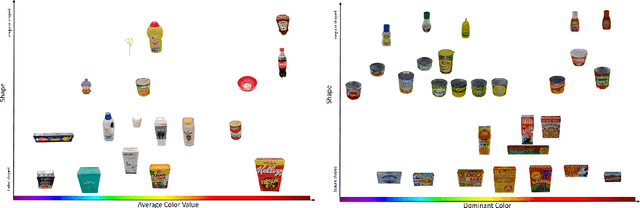

DoUnseen: Zero-Shot Object Detection for Robotic Grasping

Apr 06, 2023

Abstract: How can we segment varying numbers of objects where each specific object represents its own separate class? To make the problem even more realistic, how can we add and delete classes on the fly without retraining? This is the case in robotic applications where no datasets of the objects exist, or in applications that include thousands of objects (e.g., in logistics) where it is impossible to train a single model to learn all of the objects. Most current research on object segmentation for robotic grasping focuses on class-level object segmentation (e.g., box, cup, bottle), closed sets (specific objects of a dataset; for example, the YCB dataset), or deep learning-based template matching. In this work, we are interested in open sets where the number of classes is unknown and varying, and where there is no prior knowledge about the objects' types. We consider each specific object as its own separate class. Our goal is to develop a zero-shot object detector that requires no training and can add any object as a class just by capturing a few images of the object. Our main idea is to break the segmentation pipeline into two steps by cascading unseen object segmentation networks with zero-shot classifiers. We evaluate our zero-shot object detector on unseen datasets and compare it to a trained Mask R-CNN on those datasets. The results show that the performance varies from practical to unsuitable depending on the environment setup and the objects being handled. The code is available in our DoUnseen library repository.

Semi-Automated Computer Vision based Tracking of Multiple Industrial Entities -- A Framework and Dataset Creation Approach

Apr 03, 2023

Abstract: This contribution presents the TOMIE framework (Tracking Of Multiple Industrial Entities), a framework for the continuous tracking of industrial entities (e.g., pallets, crates, barrels) over a network of, in this example, six RGB cameras. The framework makes use of multiple sensors, data pipelines, and data annotation procedures, and is described in detail in this contribution. With the vision of a fully automated tracking system for industrial entities in mind, it enables researchers to efficiently capture high-quality data in an industrial setting. Using this framework, an image dataset, the TOMIE dataset, is created, which at the same time is used to gauge the framework's validity. This dataset contains annotation files for 112,860 frames and 640,936 entity instances that are captured from a set of six cameras that perceive a large indoor space. This dataset out-scales comparable datasets by a factor of four and is made up of scenarios drawn from industrial applications in the warehousing sector. Three tracking algorithms, namely ByteTrack, Bot-Sort and SiamMOT, are applied to this dataset, serving as a proof-of-concept and providing tracking results that are comparable to the state of the art.

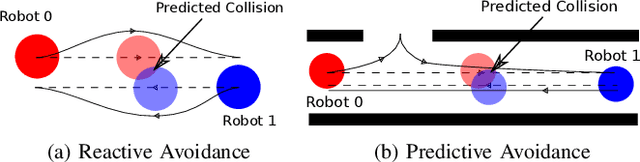

Distributed Timed Elastic Band (DTEB) Planner: Trajectory Sharing and Collision Prediction for Multi-Robot Systems

Mar 20, 2023

Abstract: Autonomous navigation of mobile robots is a well-studied problem in robotics. However, the navigation task becomes challenging when multi-robot systems have to cooperatively navigate dynamic environments with deadlock-prone layouts. We present a Distributed Timed Elastic Band (DTEB) Planner that combines Prioritized Planning with the online TEB trajectory planner in order to extend the capabilities of the latter to multi-robot systems. The proposed planner is able to reactively avoid imminent collisions as well as predictively resolve potential deadlocks among a team of robots while navigating in a complex environment. The results of our simulation demonstrate the reliable performance and the versatility of the planner in different environment settings. The code and tests for our approach are available online.

* Published in the International Conference on Robotics and Automation (ICRA) - 2022 https://ieeexplore.ieee.org/document/9811762

Comparing statistical and machine learning methods for time series forecasting in data-driven logistics -- A simulation study

Mar 13, 2023

Abstract: Many planning and decision activities in logistics and supply chain management are based on forecasts of multiple time-dependent factors. Therefore, the quality of planning depends on the quality of the forecasts. We compare various forecasting methods in terms of out-of-the-box forecasting performance on a broad set of simulated time series. We simulate various linear and non-linear time series and look at the one-step forecast performance of statistical learning methods.
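One-step forecast performance is typically measured by repeatedly predicting the next value from the history so far and averaging the errors. The sketch below illustrates that evaluation loop with two simple baseline forecasters and mean absolute error; the forecasters, the error metric, and the toy series are assumptions for illustration and are not the methods or data compared in the study.

```python
def one_step_mae(series, forecaster, warmup=3):
    """Mean absolute error of one-step-ahead forecasts after a warm-up period."""
    errors = []
    for t in range(warmup, len(series)):
        prediction = forecaster(series[:t])  # only past values are visible
        errors.append(abs(series[t] - prediction))
    return sum(errors) / len(errors)


def naive(history):
    """Predict that the next value equals the last observed value."""
    return history[-1]


def moving_average(history, window=3):
    """Predict the mean of the most recent `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


# Hypothetical linear trend; a simulation study would generate many such series.
series = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
mae_naive = one_step_mae(series, naive)
mae_ma = one_step_mae(series, moving_average)
```

On this trending series the naive forecast lags by one step while the moving average lags by two, so the naive method scores the lower error; on noisy series without a trend the ordering can reverse.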

A Grid-based Sensor Floor Platform for Robot Localization using Machine Learning

Dec 09, 2022

Abstract: Wireless Sensor Network (WSN) applications reshape the trend of warehouse monitoring systems, allowing them to track and locate massive numbers of logistic entities in real time. To support these tasks, classic Radio Frequency (RF)-based localization approaches (e.g., triangulation and trilateration) confront challenges due to multi-path fading and signal loss in noisy warehouse environments. In this paper, we investigate machine learning methods using a new grid-based WSN platform called Sensor Floor that can overcome these issues. Sensor Floor consists of 345 nodes installed across the floor of our logistic research hall with dual-band RF and Inertial Measurement Unit (IMU) sensors. Our goal is to localize all logistic entities; for this study we use a mobile robot. We record distributed sensing measurements of Received Signal Strength Indicator (RSSI) and IMU values as the dataset, and position tracking from a Vicon system as the ground truth. The asynchronously collected data is pre-processed and used to train a Random Forest and a Convolutional Neural Network (CNN). The CNN model with regularization outperforms the Random Forest in terms of localization accuracy, at approximately 15 cm. Moreover, the CNN architecture can be configured flexibly depending on the scenario in the warehouse. The hardware, software, and CNN architecture of the Sensor Floor are open-source under https://github.com/FLW-TUDO/sensorfloor.

UAVs for Industries and Supply Chain Management

Dec 06, 2022

Abstract: This work aims at showing that it is feasible and safe to use a swarm of Unmanned Aerial Vehicles (UAVs) indoors alongside humans. UAVs are increasingly being integrated under the Industry 4.0 framework. UAV swarms are primarily deployed outdoors in civil and military applications, but the opportunities for using them in manufacturing and supply chain management are immense. There is extensive research on UAV technology, e.g., localization, control, and computer vision, but less research on the practical application of UAVs in industry. UAV technology could improve data collection and monitoring, enhance decision-making in an Internet of Things framework, and automate time-consuming and redundant tasks in the industry. However, there is a gap between the technological developments of UAVs and their integration into the supply chain. Therefore, this work focuses on automating the task of transporting packages utilizing a swarm of small UAVs operating alongside humans. A MoCap system, ROS, and Unity are used for localization, inter-process communication, and visualization. Multiple experiments are performed with the UAVs in wander and swarm mode in a warehouse-like environment.
