Emmanuel Müller

On Uniformly Scaling Flows: A Density-Aligned Approach to Deep One-Class Classification

Oct 10, 2025

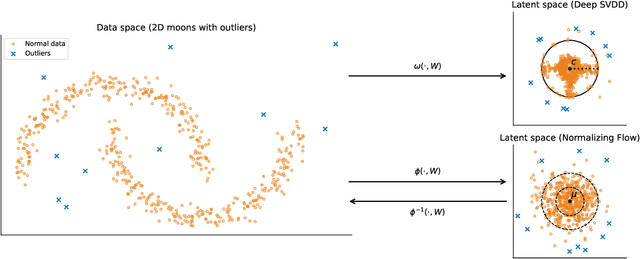

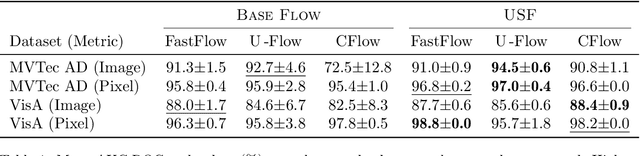

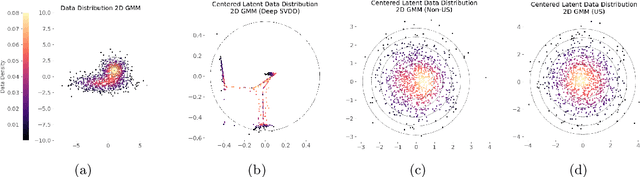

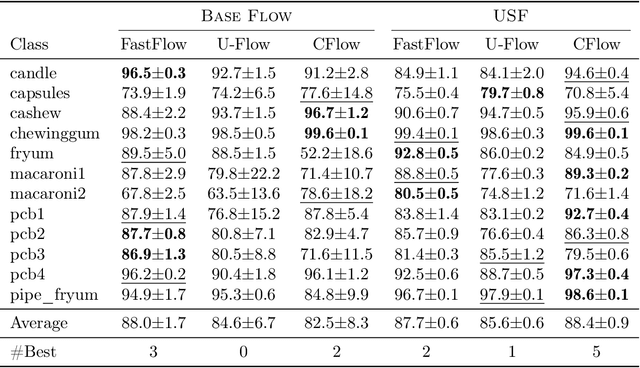

Abstract: Unsupervised anomaly detection is often framed around two widely studied paradigms. Deep one-class classification, exemplified by Deep SVDD, learns compact latent representations of normality, while density estimators realized by normalizing flows directly model the likelihood of nominal data. In this work, we show that uniformly scaling flows (USFs), normalizing flows with a constant Jacobian determinant, precisely connect these approaches. Specifically, we prove that training a USF via maximum likelihood reduces to a Deep SVDD objective with a unique regularization that inherently prevents representational collapse. This theoretical bridge implies that USFs inherit both the density faithfulness of flows and the distance-based reasoning of one-class methods. We further demonstrate that USFs induce a tighter alignment between negative log-likelihood and latent norm than either Deep SVDD or non-USFs, and show how recent hybrid approaches combining one-class objectives with VAEs can be naturally extended to USFs. Consequently, we advocate using USFs as a drop-in replacement for non-USFs in modern anomaly detection architectures. Empirically, this substitution yields consistent performance gains and substantially improved training stability across multiple benchmarks and model backbones for both image-level and pixel-level detection. These results unify two major anomaly detection paradigms, advancing both theoretical understanding and practical performance.
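To illustrate the link between likelihood and latent norm described in this abstract, here is a minimal sketch using a toy two-dimensional flow, a rotation followed by a global scaling, whose Jacobian determinant is the same for every input. This is not the architecture or the proof from the paper; the function names and the `scale` and `angle` parameters are hypothetical and chosen only for illustration. With a standard normal base density, the negative log-likelihood and half the squared latent norm differ by a constant, so both scores rank points identically.

```python
import math

def usf_forward(x, scale, angle):
    """Toy 2-D flow z = scale * R(angle) @ x (hypothetical example).

    The Jacobian is scale * R(angle), so log|det J| = 2 * log(scale)
    for every input x: the flow is uniformly scaling.
    """
    c, s = math.cos(angle), math.sin(angle)
    z = (scale * (c * x[0] - s * x[1]), scale * (s * x[0] + c * x[1]))
    log_det = 2.0 * math.log(scale)
    return z, log_det

def latent_score(x, scale, angle):
    """Half the squared distance of z to the origin (a Deep SVDD-style score)."""
    z, _ = usf_forward(x, scale, angle)
    return 0.5 * (z[0] ** 2 + z[1] ** 2)

def nll(x, scale, angle):
    """Negative log-likelihood under a standard normal base density in 2-D."""
    _, log_det = usf_forward(x, scale, angle)
    return latent_score(x, scale, angle) + math.log(2.0 * math.pi) - log_det

if __name__ == "__main__":
    points = [(0.0, 0.0), (1.0, 2.0), (-3.0, 0.5)]
    for p in points:
        gap = nll(p, 0.5, 0.7) - latent_score(p, 0.5, 0.7)
        print(p, "NLL minus latent score:", gap)
```

The printed gap is identical for all points because the log-determinant term does not depend on the input. For a flow whose Jacobian determinant varies with the input, this gap would vary as well, and the two scores could rank points differently.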

Uncertainty Awareness and Trust in Explainable AI- On Trust Calibration using Local and Global Explanations

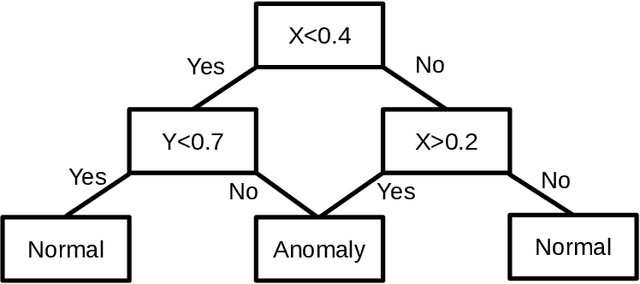

Sep 10, 2025

Abstract: Explainable AI has become a common term in the literature, scrutinized by computer scientists and statisticians and highlighted by psychological or philosophical researchers. A major effort among researchers is the construction of general guidelines for XAI schemes; we derive such guidelines from our study. While some areas of XAI are well studied, we focus on uncertainty explanations and consider global explanations, which are often left out. We chose an algorithm that covers various concepts simultaneously, such as uncertainty, robustness, and global XAI, and tested its ability to calibrate trust. We then checked whether an algorithm that aims to provide a more intuitive visual understanding, despite being complicated to understand, can provide higher user satisfaction and human interpretability.

Rare anomalies require large datasets: About proving the existence of anomalies

Aug 13, 2025

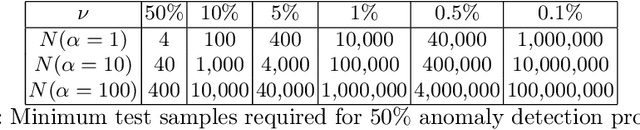

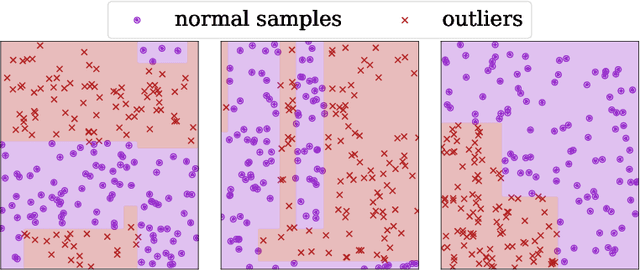

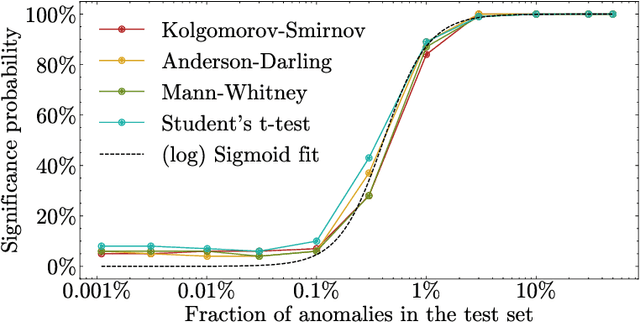

Abstract: Detecting whether any anomalies exist within a dataset is crucial for effective anomaly detection, yet it remains surprisingly underexplored in the anomaly detection literature. This paper presents a comprehensive study that addresses the fundamental question: When can we conclusively determine that anomalies are present? Through extensive experimentation involving over three million statistical tests across various anomaly detection tasks and algorithms, we identify a relationship between the dataset size, contamination rate, and an algorithm-dependent constant $\alpha_{\text{algo}}$. Our results demonstrate that, for an unlabeled dataset of size $N$ and contamination rate $\nu$, the condition $N \ge \frac{\alpha_{\text{algo}}}{\nu^2}$ represents a lower bound on the number of samples required to confirm anomaly existence. This threshold implies a limit to how rare anomalies can be before proving their existence becomes infeasible.
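As a rough worked example of the bound stated in this abstract, the sketch below computes the smallest integer $N$ satisfying $N \ge \alpha_{\text{algo}} / \nu^2$. The helper name and the value used for `ALPHA_ALGO` are assumptions made purely for illustration; the abstract gives no concrete value for the constant, which depends on the algorithm.

```python
import math

def min_samples_to_confirm(alpha_algo: float, nu: float) -> int:
    """Smallest integer N with N >= alpha_algo / nu**2 (hypothetical helper)."""
    if alpha_algo <= 0:
        raise ValueError("alpha_algo must be positive")
    if not 0 < nu <= 1:
        raise ValueError("nu must lie in (0, 1]")
    return math.ceil(alpha_algo / nu ** 2)

# Placeholder constant, NOT a value reported in the abstract.
ALPHA_ALGO = 1.0

if __name__ == "__main__":
    for nu in (0.5, 0.25, 0.125):
        n = min_samples_to_confirm(ALPHA_ALGO, nu)
        print(f"contamination rate {nu}: at least {n} samples")
```

Because the bound scales with $1/\nu^2$, halving the contamination rate quadruples the required dataset size, which is why very rare anomalies quickly become infeasible to confirm.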

Polyra Swarms: A Shape-Based Approach to Machine Learning

Jun 16, 2025

Abstract: We propose Polyra Swarms, a novel machine-learning approach that approximates shapes instead of functions. Our method enables general-purpose learning with very low bias. In particular, we show that, depending on the task, Polyra Swarms can be preferable to neural networks, especially for tasks like anomaly detection. We further introduce an automated abstraction mechanism that significantly reduces the complexity of a Polyra Swarm, enhancing both its generalization and transparency. Since Polyra Swarms operate on fundamentally different principles than neural networks, they open up new research directions with distinct strengths and limitations.

Do you see what I see? An Ambiguous Optical Illusion Dataset exposing limitations of Explainable AI

May 27, 2025

Abstract: From uncertainty quantification to real-world object detection, we recognize the importance of machine learning algorithms, particularly in safety-critical domains such as autonomous driving or medical diagnostics. Ambiguous data plays an important role in various machine learning domains. Optical illusions present a compelling area of study in this context, as they offer insight into the limitations of both human and machine perception. Despite this relevance, optical illusion datasets remain scarce. In this work, we introduce a novel dataset of optical illusions featuring intermingled animal pairs designed to evoke perceptual ambiguity. We identify generalizable visual concepts, particularly gaze direction and eye cues, as subtle yet impactful features that significantly influence model accuracy. By confronting models with perceptual ambiguity, our findings underscore the importance of concepts in visual learning and provide a foundation for studying bias and alignment between human and machine vision. To make this dataset useful for general purposes, we generate optical illusions systematically with different concepts discussed in our bias mitigation section. The dataset is accessible on Kaggle via https://kaggle.com/datasets/693bf7c6dd2cb45c8a863f9177350c8f9849a9508e9d50526e2ffcc5559a8333. Our source code can be found at https://github.com/KDD-OpenSource/Ambivision.git.

Unsupervised Surrogate Anomaly Detection

Apr 29, 2025

Abstract: In this paper, we study unsupervised anomaly detection algorithms that learn a neural network representation, i.e., regular patterns of normal data from which anomalies deviate. Inspired by a similar concept in engineering, we refer to our methodology as surrogate anomaly detection. We formalize the concept of surrogate anomaly detection into a set of axioms required for optimal surrogate models and propose a new algorithm, named DEAN (Deep Ensemble ANomaly detection), designed to fulfill these criteria. We evaluate DEAN on 121 benchmark datasets, demonstrating its competitive performance against 19 existing methods, as well as the scalability and reliability of our method.

A Cautionary Tale About "Neutrally" Informative AI Tools Ahead of the 2025 Federal Elections in Germany

Feb 21, 2025

Abstract: In this study, we examine the reliability of AI-based Voting Advice Applications (VAAs) and large language models (LLMs) in providing objective political information. Our analysis is based upon a comparison with party responses to 38 statements of the Wahl-O-Mat, a well-established German online tool that helps inform voters by comparing their views with political party positions. For the LLMs, we identify significant biases. They exhibit a strong alignment (over 75% on average) with left-wing parties and a substantially lower alignment with center-right (below 50%) and right-wing parties (around 30%). Furthermore, for the VAAs, intended to objectively inform voters, we found substantial deviations from the parties' stated positions in Wahl-O-Mat: While one VAA deviated in 25% of cases, another VAA showed deviations in more than 50% of cases. For the latter, we even observed that simple prompt injections led to severe hallucinations, including false claims of non-existent connections between political parties and right-wing extremism.

Exploring the Impact of Outlier Variability on Anomaly Detection Evaluation Metrics

Sep 24, 2024

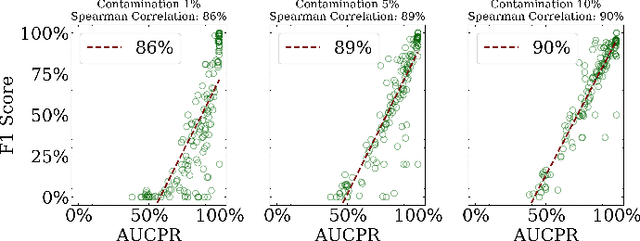

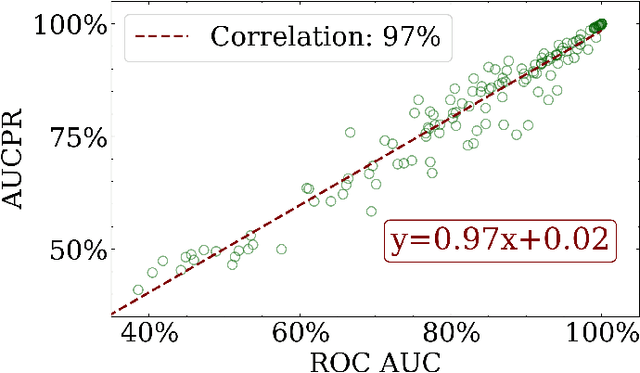

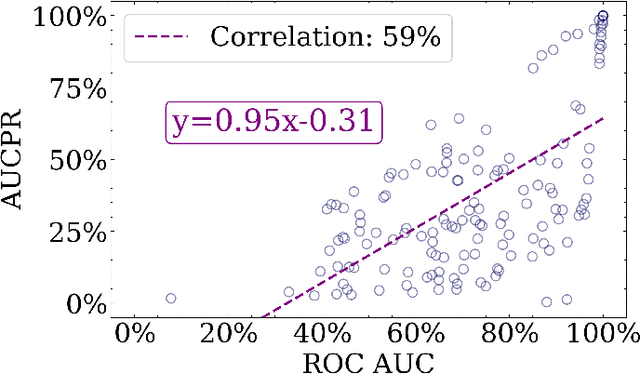

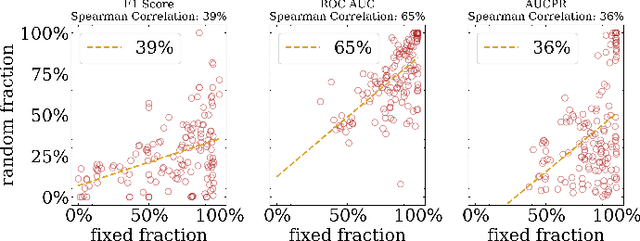

Abstract: Anomaly detection is a dynamic field, in which the evaluation of models plays a critical role in understanding their effectiveness. The selection and interpretation of the evaluation metrics are pivotal, particularly in scenarios with varying amounts of anomalies. This study focuses on examining the behaviors of three widely used anomaly detection metrics under different conditions: F1 score, Receiver Operating Characteristic Area Under Curve (ROC AUC), and Precision-Recall Curve Area Under Curve (AUCPR). Our study critically analyzes the extent to which these metrics provide reliable and distinct insights into model performance, especially considering varying levels of outlier fractions and contamination thresholds in datasets. Through a comprehensive experimental setup involving widely recognized algorithms for anomaly detection, we present findings that challenge the conventional understanding of these metrics and reveal nuanced behaviors under varying conditions. We demonstrate that while the F1 score and AUCPR are sensitive to outlier fractions, the ROC AUC maintains consistency and is unaffected by such variability. Additionally, under conditions of a fixed outlier fraction in the test set, we observe an alignment between ROC AUC and AUCPR, indicating that the choice between these two metrics may be less critical in such scenarios. The results of our study contribute to a more refined understanding of metric selection and interpretation in anomaly detection, offering valuable insights for both researchers and practitioners in the field.
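The sensitivity difference described in this abstract can be illustrated with a small pure-Python sketch. It is not the experimental setup of the paper: the scores are made up, average precision is used as a common estimator of AUCPR, and the outlier fraction is lowered simply by replicating the inlier scores, which is an assumption chosen so that the score distributions of both classes stay unchanged.

```python
def roc_auc(scores, labels):
    """ROC AUC as the probability that an outlier outscores an inlier (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(scores, labels):
    """Mean precision at the rank of each outlier (assumes no outlier/inlier score ties)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank
    return total / hits

def make_dataset(inlier_copies):
    """Made-up anomaly scores; more inlier copies means a lower outlier fraction."""
    inliers = [0.1, 0.2, 0.3, 0.4, 0.6, 0.8] * inlier_copies
    outliers = [0.5, 0.7, 0.9]
    scores = inliers + outliers
    labels = [0] * len(inliers) + [1] * len(outliers)
    return scores, labels

if __name__ == "__main__":
    for copies in (1, 5, 25):
        scores, labels = make_dataset(copies)
        fraction = sum(labels) / len(labels)
        print(f"outlier fraction {fraction:.3f}: "
              f"ROC AUC {roc_auc(scores, labels):.3f}, "
              f"AP {average_precision(scores, labels):.3f}")
```

ROC AUC compares outlier/inlier pairs, so duplicating inliers leaves it unchanged, whereas average precision depends on how many inliers rank above each outlier and therefore drops as the outlier fraction shrinks.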

About Test-time training for outlier detection

Apr 04, 2024

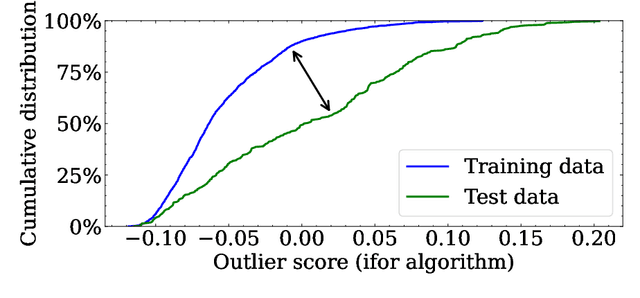

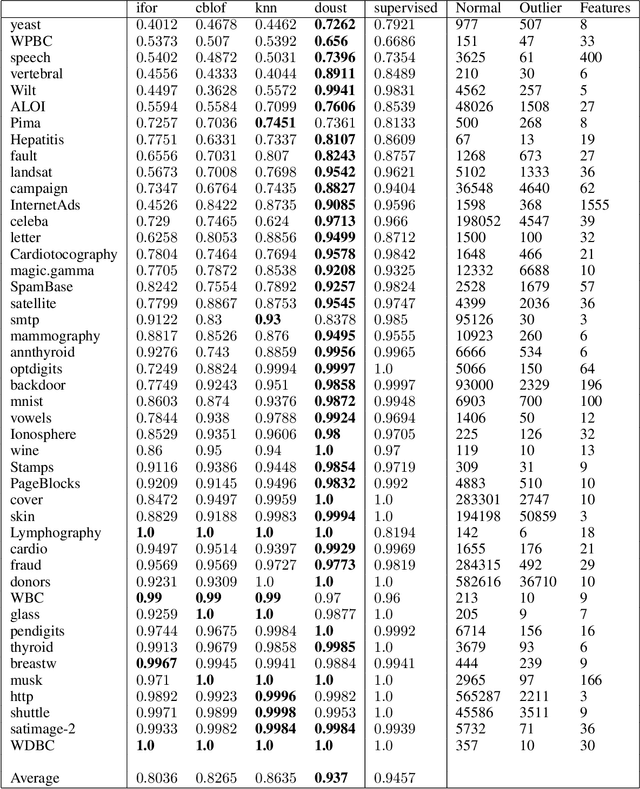

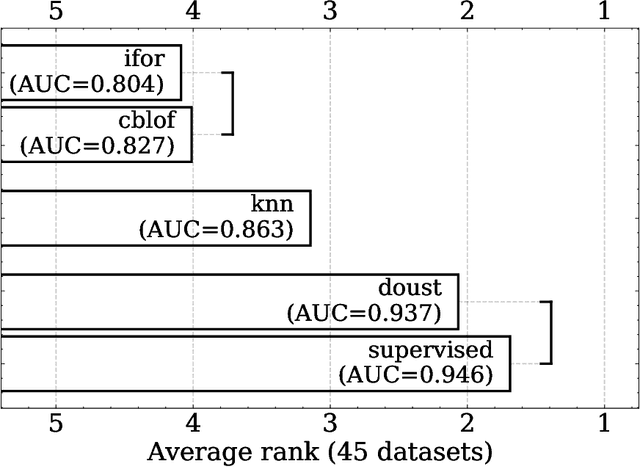

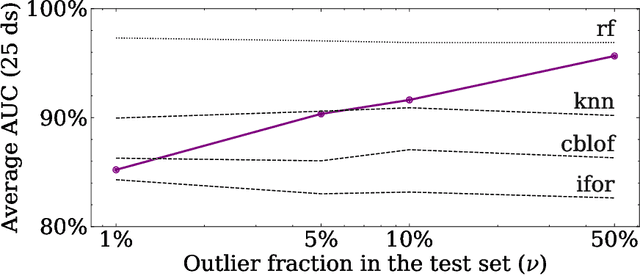

Abstract: In this paper, we introduce DOUST, our method applying test-time training for outlier detection, significantly improving the detection performance. After thoroughly evaluating our algorithm on common benchmark datasets, we discuss a common problem and show that it disappears with a large enough test set. Thus, we conclude that under reasonable conditions, our algorithm can reach almost supervised performance even when no labeled outliers are given.

On the Effectiveness of Heterogeneous Ensemble Methods for Re-identification

Mar 19, 2024

Abstract: In this contribution, we introduce a novel ensemble method for the re-identification of industrial entities, using images of chipwood pallets and galvanized metal plates as dataset examples. Our algorithms replace commonly used, complex siamese neural networks with an ensemble of simplified, rudimentary models, providing wider applicability, especially in hardware-restricted scenarios. Each ensemble sub-model uses different types of extracted features of the given data as its input, allowing for the creation of effective ensembles in a fraction of the training duration needed for more complex state-of-the-art models. We reach state-of-the-art performance at our task, with a Rank-1 accuracy of over 77% and a Rank-10 accuracy of over 99%. We also introduce five distinct feature extraction approaches and study their combination using different ensemble methods.
