Titinunt Kitrungrotsakul

Falcon: A Remote Sensing Vision-Language Foundation Model

Mar 14, 2025

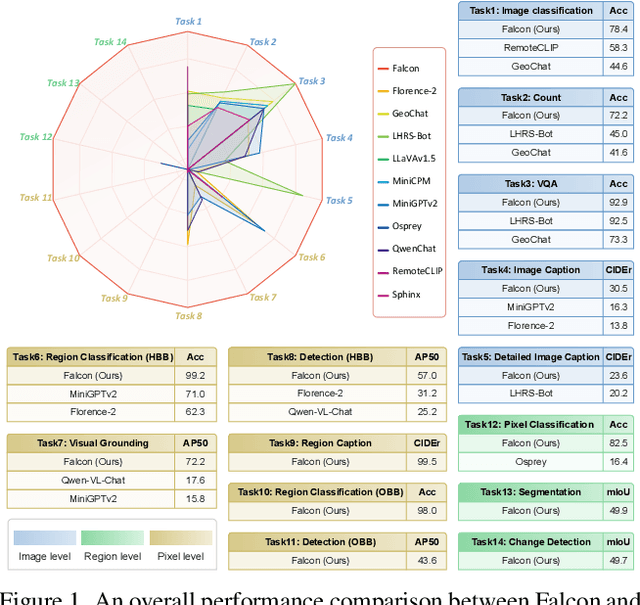

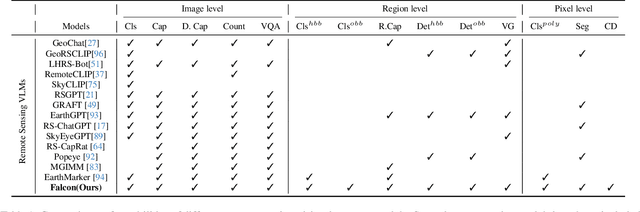

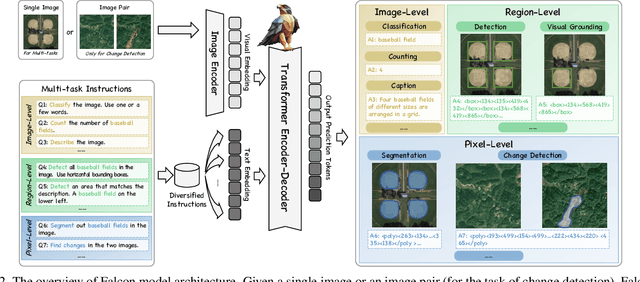

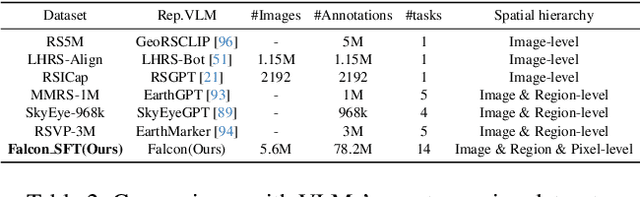

Abstract: This paper introduces Falcon, a holistic vision-language foundation model tailored for remote sensing. Falcon offers a unified, prompt-based paradigm that effectively executes comprehensive and complex remote sensing tasks, and it demonstrates powerful understanding and reasoning abilities at the image, region, and pixel levels. Specifically, given simple natural language instructions and remote sensing images, Falcon can produce impressive results in text form across 14 distinct tasks, e.g., image classification, object detection, segmentation, and image captioning. To facilitate Falcon's training and empower its representation capacity to encode rich spatial and semantic information, we developed Falcon_SFT, a large-scale, multi-task, instruction-tuning dataset in the field of remote sensing. The Falcon_SFT dataset consists of approximately 78 million high-quality data samples, covering 5.6 million multi-spatial resolution and multi-view remote sensing images with diverse instructions. It features hierarchical annotations and undergoes manual sampling verification to ensure high data quality and reliability. Extensive comparative experiments verify that Falcon achieves remarkable performance over 67 datasets and 14 tasks, despite having only 0.7B parameters. We release the complete dataset, code, and model weights at https://github.com/TianHuiLab/Falcon, hoping to help further develop the open-source community.

Interactive Deep Refinement Network for Medical Image Segmentation

Jun 27, 2020

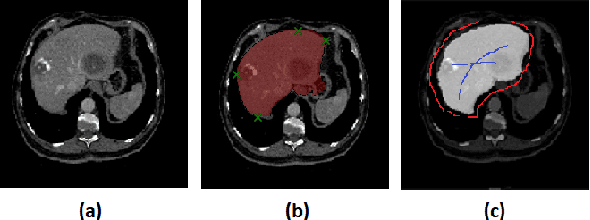

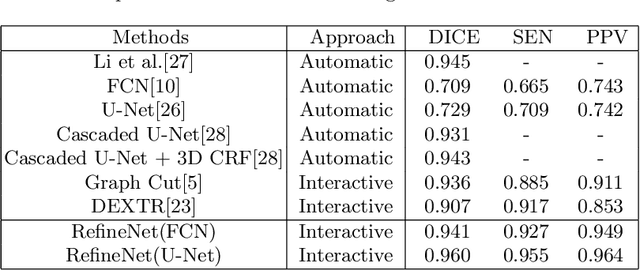

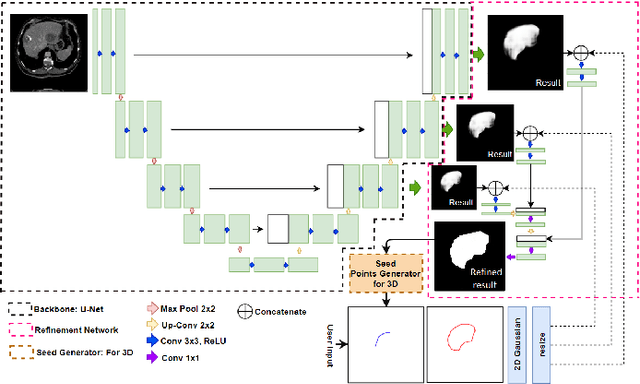

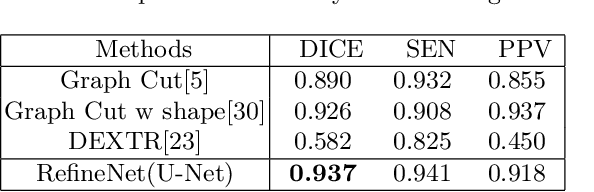

Abstract: Deep learning techniques have been successfully employed in numerous computer vision tasks, including image segmentation. The techniques have also been applied to medical image segmentation, one of the most critical tasks in computer-aided diagnosis. Compared with natural images, medical images are typically gray-scale with low contrast (some parts may even be invisible). Because some organs have intensity and texture similar to those of neighboring organs, there is usually a need to refine automatic segmentation results. In this paper, we propose an interactive deep refinement framework to improve traditional semantic segmentation networks such as U-Net and fully convolutional networks. In the proposed framework, we add a refinement network to the traditional segmentation network to refine the segmentation results. Experimental results on a public dataset show that the proposed method achieves higher accuracy than other state-of-the-art methods.

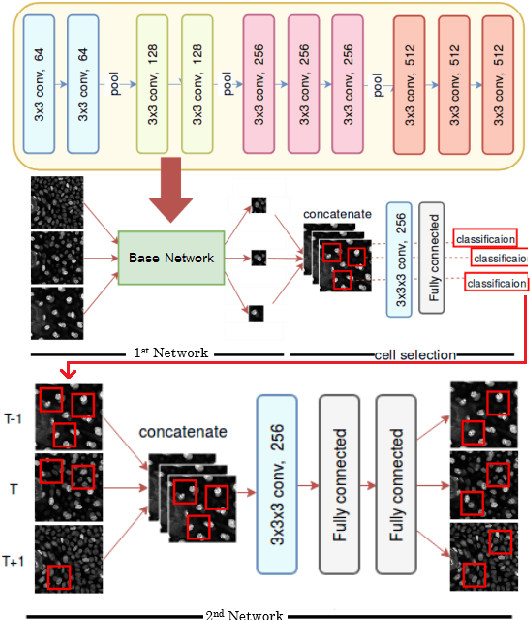

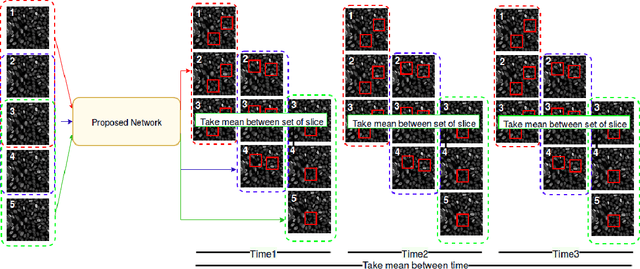

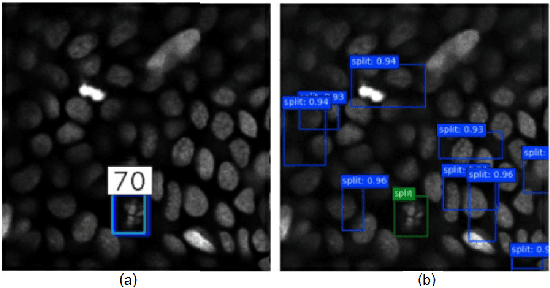

A Cascade of 2.5D CNN and LSTM Network for Mitotic Cell Detection in 4D Microscopy Image

Jun 04, 2018

Abstract: In recent years, intravital skin imaging has been used in mammalian skin research to investigate cell behaviors. Mitotic cell (cell division) detection is a fundamental step of this investigation. Due to the complex backgrounds (normal cells), most existing methods produce many false positives. In this paper, we propose a 2.5-dimensional (2.5D) cascaded end-to-end network (CasDetNet_LSTM) for accurate automatic mitotic cell detection in 4D microscopic images with less training data. CasDetNet_LSTM consists of two 2.5D networks. The first is a 2.5D fast region-based convolutional neural network (2.5D Fast R-CNN), which detects candidate cells using only volume information. The second is a long short-term memory (LSTM) network embedded with temporal information, which reduces false positives and recovers mitotic cells that were missed in the first step. The experimental results show that CasDetNet_LSTM achieves higher precision and recall than other state-of-the-art methods.
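The two-stage idea in this abstract (per-volume candidate scoring followed by a temporal stage that suppresses false positives and recovers misses) can be illustrated with a toy sketch. This is not the authors' CasDetNet_LSTM: a simple moving average stands in for the LSTM, the per-frame scores are assumed to come from some stage-one detector, and the names `temporal_rescore` and `cascade_detect`, the window size, and the threshold are all hypothetical.

```python
def temporal_rescore(scores, window=3):
    """Average each frame's score with its temporal neighbours.

    Stand-in for the temporal (LSTM) stage; a moving average is an
    assumption made for illustration, not the paper's method.
    """
    half = window // 2
    smoothed = []
    for t in range(len(scores)):
        lo = max(0, t - half)
        hi = min(len(scores), t + half + 1)
        smoothed.append(sum(scores[lo:hi]) / (hi - lo))
    return smoothed


def cascade_detect(scores, threshold=0.5, window=3):
    """Return frame indices whose temporally rescored value passes the threshold.

    `scores` holds hypothetical stage-one confidences for one candidate
    location, one value per time frame.
    """
    smoothed = temporal_rescore(scores, window)
    return [t for t, s in enumerate(smoothed) if s >= threshold]


# An isolated one-frame spike has no temporal support and is rejected.
spike = [0.1, 0.1, 0.9, 0.1, 0.1]
# Frame 2 scores below the threshold on its own, but its neighbours are
# strong, so the temporal stage recovers it.
dip = [0.1, 0.8, 0.4, 0.9, 0.1]

print(cascade_detect(spike))
print(cascade_detect(dip))
```

The point of the sketch is only the division of labour: the first stage can be permissive because the second stage judges each candidate in its temporal context. A moving average also weakens strong detections at the edges of an event, which is one reason a learned sequence model is a more plausible choice in practice.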
