Xiong Wei

A Cascade of 2.5D CNN and LSTM Network for Mitotic Cell Detection in 4D Microscopy Image

Jun 04, 2018

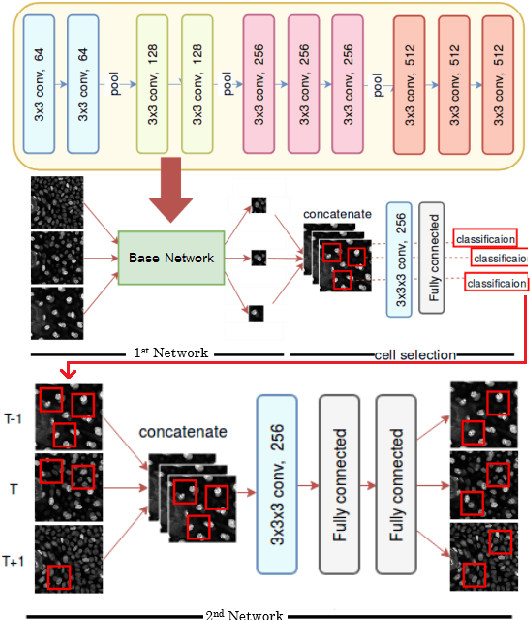

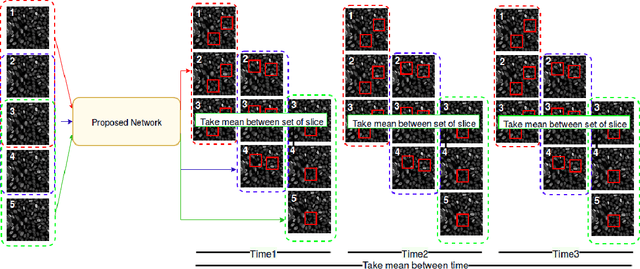

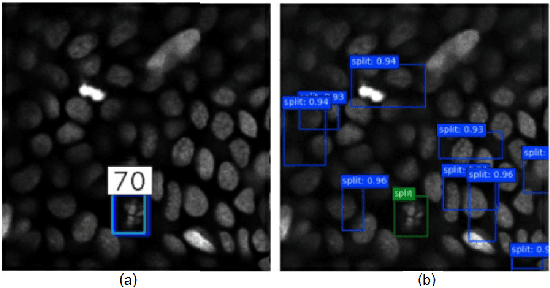

Abstract: In recent years, intravital skin imaging has been used in mammalian skin research to investigate cell behaviors. Mitotic cell (cell division) detection is a fundamental step in this investigation. Complex backgrounds (normal cells) cause most existing methods to produce many false positives. In this paper, we propose a 2.5-dimensional (2.5D) cascaded end-to-end network (CasDetNet_LSTM) for accurate automatic mitotic cell detection in 4D microscopy images with less training data. CasDetNet_LSTM consists of two 2.5D networks. The first is a 2.5D fast region-based convolutional neural network (2.5D Fast R-CNN), which detects candidate cells using only volume information. The second is a long short-term memory (LSTM) network embedded with temporal information, which reduces false positives and recovers mitotic cells missed in the first step. Experimental results show that CasDetNet_LSTM achieves higher precision and recall than other state-of-the-art methods.
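The following is a minimal sketch of the two ideas in the abstract. It rests on two assumptions. First, "2.5D" is taken to mean feeding one z-slice together with its neighbouring slices as image channels. Second, the second stage is taken to score each candidate by running an LSTM over per-time-point feature vectors. The function names `make_2p5d_input` and `lstm_score`, the gate ordering, the feature size and the random (untrained) weights are all hypothetical. This is not the authors' CasDetNet_LSTM implementation, and the Fast R-CNN candidate detector is omitted entirely.

```python
import numpy as np


def make_2p5d_input(volume: np.ndarray, z: int, k: int = 1) -> np.ndarray:
    """Stack slice z and its k neighbours on each side as channels.

    volume: (D, H, W) array for a single time point (hypothetical layout).
    Indices past the volume border are clamped, so edge slices repeat.
    Returns an array of shape (2k+1, H, W).
    """
    depth = volume.shape[0]
    idx = np.clip(np.arange(z - k, z + k + 1), 0, depth - 1)
    return volume[idx]


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_score(seq, W, U, b, w_out, b_out):
    """Run a single-layer LSTM over seq (T, F); return a probability in (0, 1).

    W: (4n, F), U: (4n, n), b: (4n,) hold the gate parameters stacked in the
    assumed order [input, forget, candidate, output]; n is the hidden size.
    """
    n = U.shape[1]
    h = np.zeros(n)
    c = np.zeros(n)
    for x_t in seq:
        gates = W @ x_t + U @ h + b
        i = sigmoid(gates[0:n])          # input gate
        f = sigmoid(gates[n:2 * n])      # forget gate
        g = np.tanh(gates[2 * n:3 * n])  # candidate cell state
        o = sigmoid(gates[3 * n:4 * n])  # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
    # Logistic read-out of the final hidden state: "is this candidate mitotic?"
    return float(sigmoid(w_out @ h + b_out))


# Usage with synthetic data and untrained random weights (illustration only).
rng = np.random.default_rng(0)
frame = rng.random((10, 64, 64))        # one time point: 10 z-slices of 64x64
x = make_2p5d_input(frame, z=0, k=1)    # (3, 64, 64); slice 0 repeated at the border

n_feat, n_hidden = 8, 16
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_feat))
U = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden))
b = np.zeros(4 * n_hidden)
w_out = rng.normal(scale=0.1, size=n_hidden)
features = rng.random((5, n_feat))      # hypothetical features of one candidate over 5 time points
p = lstm_score(features, W, U, b, w_out, 0.0)
```

In a cascade of this kind, candidates whose temporal score falls below a threshold would be discarded as false positives. The abstract's claim that the second stage also recovers missed cells implies that it examines more than the first stage's accepted detections. How it does so is not specified here.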

Depth Not Needed - An Evaluation of RGB-D Feature Encodings for Off-Road Scene Understanding by Convolutional Neural Network

Jan 04, 2018

Abstract: Scene understanding for autonomous vehicles is a challenging computer vision task, with recent advances in convolutional neural networks (CNNs) achieving results that notably surpass prior traditional feature-driven approaches. However, little work investigates the application of such methods either within the highly unstructured off-road environment or to RGBD input data. In this work, we take an existing CNN architecture designed to perform semantic segmentation of RGB images of urban road scenes, then adapt and retrain it to perform the same task with multichannel RGBD images obtained under a range of challenging off-road conditions. We compare two different stereo matching algorithms and five different methods of encoding depth information, including disparity, local normal orientation and HHA (horizontal disparity, height above ground plane, angle with gravity). Together these create ten experimental variations of our dataset, each of which is used to train and test a CNN so that classification performance can be evaluated against a CNN trained using standard RGB input.
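The sketch below shows how a stereo disparity map might be turned into an extra input channel alongside RGB. It uses the standard rectified-stereo relation $Z = fB/d$ (depth from focal length in pixels, baseline, and disparity). The helper names, the min-max scaling to 0..255, and the camera parameters in the usage lines are illustrative assumptions, not the paper's encoding pipeline. Local normal orientation and HHA are omitted because they require surface-normal and ground-plane/gravity estimation.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m, eps=1e-6):
    """Metric depth Z = f * B / d; pixels without valid disparity (d <= eps) become 0."""
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.zeros_like(disparity)
    valid = disparity > eps
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def to_uint8(channel):
    """Min-max normalise one channel to 0..255 so it shares the RGB value range."""
    lo, hi = float(channel.min()), float(channel.max())
    if hi - lo < 1e-12:
        return np.zeros(channel.shape, dtype=np.uint8)
    return np.round(255.0 * (channel - lo) / (hi - lo)).astype(np.uint8)


def make_rgbd(rgb, depth_channel):
    """Append one encoded depth channel to an (H, W, 3) uint8 image -> (H, W, 4)."""
    return np.concatenate([rgb, to_uint8(depth_channel)[..., None]], axis=-1)


# Usage with a tiny synthetic example (hypothetical camera: f = 400 px, B = 0.2 m).
rgb = np.zeros((2, 2, 3), dtype=np.uint8)
disp = np.array([[0.0, 10.0], [20.0, 40.0]])
depth = disparity_to_depth(disp, focal_px=400.0, baseline_m=0.2)
rgbd_disparity = make_rgbd(rgb, disp)   # encoding 1: raw disparity as the 4th channel
rgbd_depth = make_rgbd(rgb, depth)      # encoding 2: metric depth as the 4th channel
```

Each encoding yields a differently scaled fourth channel from the same stereo output. Training one network per encoding, as the abstract describes, is what lets the encodings be compared against an RGB-only baseline.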
