Timo Speith

Saarland University, Germany

What Makes for a Good Saliency Map? Comparing Strategies for Evaluating Saliency Maps in Explainable AI (XAI)

Apr 23, 2025

Abstract:Saliency maps are a popular approach for explaining classifications of (convolutional) neural networks. However, it remains an open question as to how best to evaluate saliency maps, with three families of evaluation methods commonly being used: subjective user measures, objective user measures, and mathematical metrics. We examine three of the most popular saliency map approaches (viz., LIME, Grad-CAM, and Guided Backpropagation) in a between-subjects study (N=166) across these families of evaluation methods. We test 1) for subjective measures, if the maps differ with respect to user trust and satisfaction; 2) for objective measures, if the maps increase users' abilities and thus understanding of a model; 3) for mathematical metrics, which map achieves the best ratings across metrics; and 4) whether the mathematical metrics can be associated with objective user measures. To our knowledge, our study is the first to compare several saliency maps across all these evaluation methods, with the finding that they do not agree in their assessment (i.e., there was no difference concerning trust and satisfaction, Grad-CAM improved users' abilities best, and Guided Backpropagation had the most favorable mathematical metrics). Additionally, we show that some mathematical metrics were associated with user understanding, although this relationship was often counterintuitive. We discuss these findings in light of general debates concerning the complementary use of user studies and mathematical metrics in the evaluation of explainable AI (XAI) approaches.

Mapping the Potential of Explainable Artificial Intelligence (XAI) for Fairness Along the AI Lifecycle

Apr 30, 2024

Abstract:The widespread use of artificial intelligence (AI) systems across various domains is increasingly highlighting issues related to algorithmic fairness, especially in high-stakes scenarios. Thus, critical considerations of how fairness in AI systems might be improved, and what measures are available to aid this process, are overdue. Many researchers and policymakers see explainable AI (XAI) as a promising way to increase fairness in AI systems. However, there is a wide variety of XAI methods and fairness conceptions expressing different desiderata, and the precise connections between XAI and fairness remain largely nebulous. Besides, different measures to increase algorithmic fairness might be applicable at different points throughout an AI system's lifecycle. Yet, there currently is no coherent mapping of fairness desiderata along the AI lifecycle. In this paper, we set out to bridge both these gaps: We distill eight fairness desiderata, map them along the AI lifecycle, and discuss how XAI could help address each of them. We hope to provide orientation for practical applications and to inspire XAI research specifically focused on these fairness desiderata.

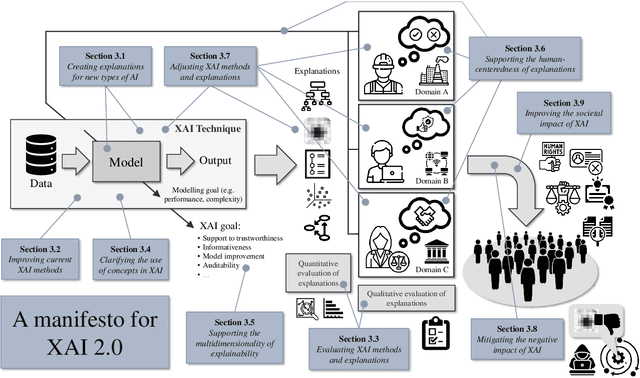

Explainable Artificial Intelligence (XAI) 2.0: A Manifesto of Open Challenges and Interdisciplinary Research Directions

Oct 30, 2023

Abstract:As systems based on opaque Artificial Intelligence (AI) continue to flourish in diverse real-world applications, understanding these black box models has become paramount. In response, Explainable AI (XAI) has emerged as a field of research with practical and ethical benefits across various domains. This paper not only highlights the advancements in XAI and its application in real-world scenarios but also addresses the ongoing challenges within XAI, emphasizing the need for broader perspectives and collaborative efforts. We bring together experts from diverse fields to identify open problems, striving to synchronize research agendas and accelerate XAI in practical applications. By fostering collaborative discussion and interdisciplinary cooperation, we aim to propel XAI forward, contributing to its continued success. Our goal is to put forward a comprehensive proposal for advancing XAI. To achieve this goal, we present a manifesto of 27 open problems categorized into nine categories. These challenges encapsulate the complexities and nuances of XAI and offer a road map for future research. For each problem, we provide promising research directions in the hope of harnessing the collective intelligence of interested stakeholders.

Revisiting the Performance-Explainability Trade-Off in Explainable Artificial Intelligence (XAI)

Jul 26, 2023

Abstract:Within the field of Requirements Engineering (RE), the increasing significance of Explainable Artificial Intelligence (XAI) in aligning AI-supported systems with user needs, societal expectations, and regulatory standards has garnered recognition. In general, explainability has emerged as an important non-functional requirement that impacts system quality. However, the supposed trade-off between explainability and performance challenges the presumed positive influence of explainability. If meeting the requirement of explainability entails a reduction in system performance, then careful consideration must be given to which of these quality aspects takes precedence and how to compromise between them. In this paper, we critically examine the alleged trade-off. We argue that it is best approached in a nuanced way that incorporates resource availability, domain characteristics, and considerations of risk. By providing a foundation for future research and best practices, this work aims to advance the field of RE for AI.

Sources of Opacity in Computer Systems: Towards a Comprehensive Taxonomy

Jul 26, 2023

Abstract:Modern computer systems are ubiquitous in contemporary life yet many of them remain opaque. This poses significant challenges in domains where desiderata such as fairness or accountability are crucial. We suggest that the best strategy for achieving system transparency varies depending on the specific source of opacity prevalent in a given context. Synthesizing and extending existing discussions, we propose a taxonomy consisting of eight sources of opacity that fall into three main categories: architectural, analytical, and socio-technical. For each source, we provide initial suggestions as to how to address the resulting opacity in practice. The taxonomy provides a starting point for requirements engineers and other practitioners to understand contextually prevalent sources of opacity, and to select or develop appropriate strategies for overcoming them.

A New Perspective on Evaluation Methods for Explainable Artificial Intelligence

Jul 26, 2023

Abstract:Within the field of Requirements Engineering (RE), the increasing significance of Explainable Artificial Intelligence (XAI) in aligning AI-supported systems with user needs, societal expectations, and regulatory standards has garnered recognition. In general, explainability has emerged as an important non-functional requirement that impacts system quality. However, the supposed trade-off between explainability and performance challenges the presumed positive influence of explainability. If meeting the requirement of explainability entails a reduction in system performance, then careful consideration must be given to which of these quality aspects takes precedence and how to compromise between them. In this paper, we critically examine the alleged trade-off. We argue that it is best approached in a nuanced way that incorporates resource availability, domain characteristics, and considerations of risk. By providing a foundation for future research and best practices, this work aims to advance the field of RE for AI.

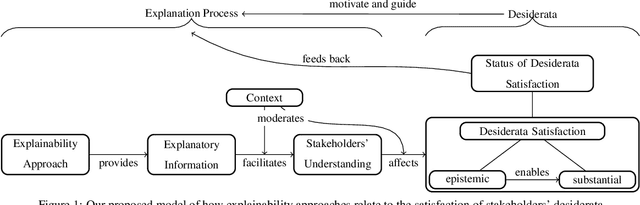

What Do We Want From Explainable Artificial Intelligence? -- A Stakeholder Perspective on XAI and a Conceptual Model Guiding Interdisciplinary XAI Research

Feb 15, 2021

Abstract:Previous research in Explainable Artificial Intelligence (XAI) suggests that a main aim of explainability approaches is to satisfy specific interests, goals, expectations, needs, and demands regarding artificial systems (we call these stakeholders' desiderata) in a variety of contexts. However, the literature on XAI is vast, spreads out across multiple largely disconnected disciplines, and it often remains unclear how explainability approaches are supposed to achieve the goal of satisfying stakeholders' desiderata. This paper discusses the main classes of stakeholders calling for explainability of artificial systems and reviews their desiderata. We provide a model that explicitly spells out the main concepts and relations necessary to consider and investigate when evaluating, adjusting, choosing, and developing explainability approaches that aim to satisfy stakeholders' desiderata. This model can serve researchers from the variety of different disciplines involved in XAI as a common ground. It emphasizes where there is interdisciplinary potential in the evaluation and the development of explainability approaches.

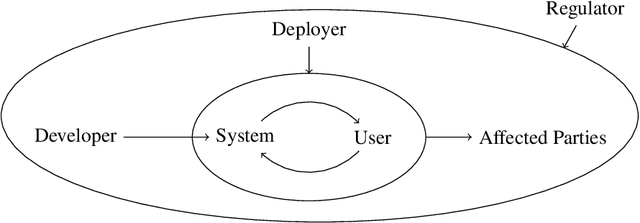

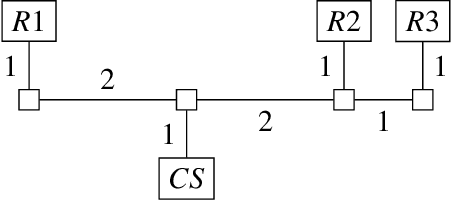

Towards a Framework Combining Machine Ethics and Machine Explainability

Jan 03, 2019

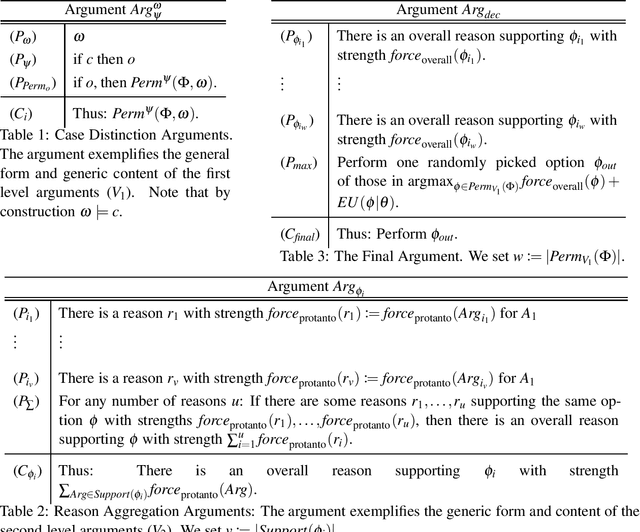

Abstract:We find ourselves surrounded by a rapidly increasing number of autonomous and semi-autonomous systems. Two grand challenges arise from this development: Machine Ethics and Machine Explainability. Machine Ethics, on the one hand, is concerned with behavioral constraints for systems, so that morally acceptable, restricted behavior results; Machine Explainability, on the other hand, enables systems to explain their actions and argue for their decisions, so that human users can understand and justifiably trust them. In this paper, we try to motivate and work towards a framework combining Machine Ethics and Machine Explainability. Starting from a toy example, we detect various desiderata of such a framework and argue why they should and how they could be incorporated in autonomous systems. Our main idea is to apply a framework of formal argumentation theory both for decision-making under ethical constraints and for the task of generating useful explanations given only limited knowledge of the world. The result of our deliberations can be described as a first version of an ethically motivated, principle-governed framework combining Machine Ethics and Machine Explainability.

* In Proceedings CREST 2018, arXiv:1901.00073
