Towards a Framework Combining Machine Ethics and Machine Explainability

Paper and Code

Jan 03, 2019

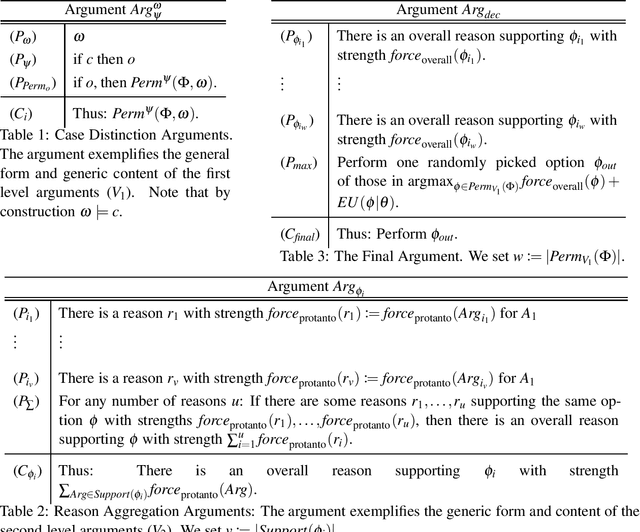

We find ourselves surrounded by a rapidly increasing number of autonomous and semi-autonomous systems. Two grand challenges arise from this development: Machine Ethics and Machine Explainability. Machine Ethics, on the one hand, is concerned with behavioral constraints for systems, so that morally acceptable, restricted behavior results; Machine Explainability, on the other hand, enables systems to explain their actions and argue for their decisions, so that human users can understand and justifiably trust them. In this paper, we try to motivate and work towards a framework combining Machine Ethics and Machine Explainability. Starting from a toy example, we detect various desiderata of such a framework and argue why they should and how they could be incorporated in autonomous systems. Our main idea is to apply a framework of formal argumentation theory both, for decision-making under ethical constraints and for the task of generating useful explanations given only limited knowledge of the world. The result of our deliberations can be described as a first version of an ethically motivated, principle-governed framework combining Machine Ethics and Machine Explainability

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge