Tien Pham

DeGuV: Depth-Guided Visual Reinforcement Learning for Generalization and Interpretability in Manipulation

Sep 05, 2025

Abstract:Reinforcement learning (RL) agents can learn to solve complex tasks from visual inputs, but generalizing these learned skills to new environments remains a major challenge in RL applications, especially robotics. While data augmentation can improve generalization, it often compromises sample efficiency and training stability. This paper introduces DeGuV, an RL framework that enhances both generalization and sample efficiency. Specifically, we leverage a learnable masker network that produces a mask from the depth input, preserving only critical visual information while discarding irrelevant pixels. Through this, we ensure that our RL agents focus on essential features, improving robustness under data augmentation. In addition, we incorporate contrastive learning and stabilize Q-value estimation under augmentation to further enhance sample efficiency and training stability. We evaluate our proposed method on the RL-ViGen benchmark using the Franka Emika robot and demonstrate its effectiveness in zero-shot sim-to-real transfer. Our results show that DeGuV outperforms state-of-the-art methods in both generalization and sample efficiency while also improving interpretability by highlighting the most relevant regions in the visual input.

Pay Attention to What and Where? Interpretable Feature Extractor in Vision-based Deep Reinforcement Learning

Apr 14, 2025

Abstract:Current approaches in Explainable Deep Reinforcement Learning are limited in that the attention mask is displaced from the objects in the visual input. This work addresses a spatial problem within traditional Convolutional Neural Networks (CNNs). We propose the Interpretable Feature Extractor (IFE) architecture, aimed at generating an accurate attention mask to illustrate both "what" and "where" the agent concentrates on in the spatial domain. Our design incorporates a Human-Understandable Encoding module to generate a fully interpretable attention mask, followed by an Agent-Friendly Encoding module to enhance the agent's learning efficiency. These two components together form the Interpretable Feature Extractor for vision-based deep reinforcement learning to enable the model's interpretability. The resulting attention mask is consistent, highly understandable by humans, accurate in the spatial dimension, and effectively highlights important objects or locations in the visual input. The Interpretable Feature Extractor is integrated into the Fast and Data-efficient Rainbow framework, and evaluated on 57 ATARI games to show the effectiveness of the proposed approach on Spatial Preservation, Interpretability, and Data-efficiency. Finally, we showcase the versatility of our approach by incorporating the IFE into the Asynchronous Advantage Actor-Critic Model.

FlowMP: Learning Motion Fields for Robot Planning with Conditional Flow Matching

Mar 08, 2025

Abstract:Prior flow matching methods in robotics have primarily learned velocity fields to morph one distribution of trajectories into another. In this work, we extend flow matching to capture second-order trajectory dynamics, incorporating acceleration effects either explicitly in the model or implicitly through the learning objective. Unlike diffusion models, which rely on a noisy forward process and iterative denoising steps, flow matching trains a continuous transformation (flow) that directly maps a simple prior distribution to the target trajectory distribution without any denoising procedure. By modeling trajectories with second-order dynamics, our approach ensures that generated robot motions are smooth and physically executable, avoiding the jerky or dynamically infeasible trajectories that first-order models might produce. We empirically demonstrate that this second-order conditional flow matching yields superior performance on motion planning benchmarks, achieving smoother trajectories and higher success rates than baseline planners. These findings highlight the advantage of learning acceleration-aware motion fields, as our method outperforms existing motion planning methods in terms of trajectory quality and planning success.
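
For readers unfamiliar with the first-order setting that the abstract contrasts against, the following is a minimal sketch of the commonly used straight-line conditional flow matching target. It is not the paper's second-order method; the function names `cfm_pair` and `euler_integrate` are hypothetical, and the linear interpolation path is an assumption chosen for simplicity.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def cfm_pair(x0: Vector, x1: Vector, t: float) -> Tuple[Vector, Vector]:
    """Return (x_t, u_t) for the straight-line path x_t = (1 - t) * x0 + t * x1.

    u_t = x1 - x0 is the velocity a first-order model would be regressed onto.
    This is an illustrative assumption, not the second-order objective.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    u_t = [b - a for a, b in zip(x0, x1)]  # constant along the straight line
    return x_t, u_t


def euler_integrate(
    x0: Vector, velocity: Callable[[Vector, float], Vector], steps: int
) -> Vector:
    """Push a prior sample x0 through a velocity field from t = 0 to t = 1."""
    x = list(x0)
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


if __name__ == "__main__":
    prior_sample = [0.0, 1.0]    # stand-in for a sample from the simple prior
    target_sample = [2.0, -1.0]  # stand-in for a point on a target trajectory
    x_t, u_t = cfm_pair(prior_sample, target_sample, 0.25)
    print(x_t, u_t)
    # Integrating the exact target velocity carries the prior sample to the target.
    print(euler_integrate(prior_sample, lambda x, t: u_t, steps=4))
```

In practice a neural network would replace the lambda, trained to match `u_t` at randomly drawn `t`; sampling then needs only the integration loop, with no denoising steps.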

Gaussian Process Upper Confidence Bounds in Distributed Point Target Tracking over Wireless Sensor Networks

Sep 11, 2024

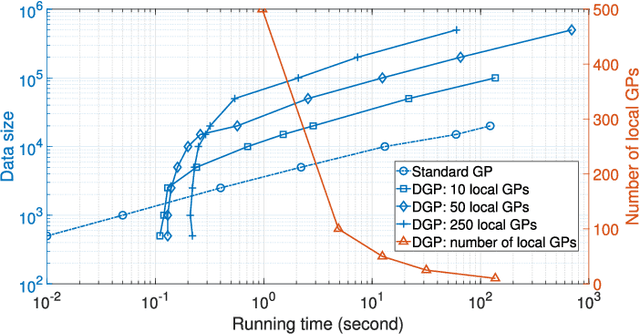

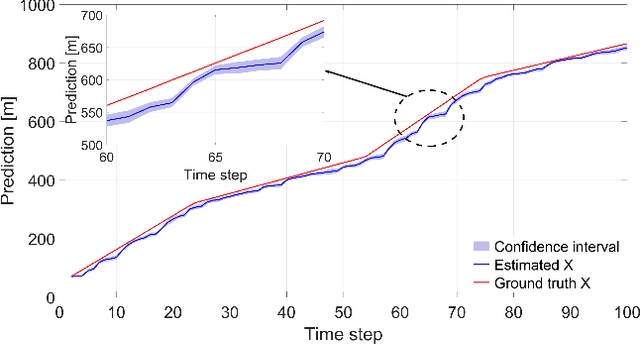

Abstract:Uncertainty quantification plays a key role in the development of autonomous systems, decision-making, and tracking over wireless sensor networks (WSNs). However, there is a need to provide uncertainty confidence bounds, especially for distributed machine learning-based tracking, dealing with different volumes of data collected by sensors. This paper aims to fill in this gap and proposes a distributed Gaussian process (DGP) approach for point target tracking and derives upper confidence bounds (UCBs) of the state estimates. A unique contribution of this paper includes the derived theoretical guarantees on the proposed approach and its maximum accuracy for tracking with and without clutter measurements. Particularly, the developed approaches with uncertainty bounds are generic and can provide trustworthy solutions with an increased level of reliability. A novel hybrid Bayesian filtering method is proposed to enhance the DGP approach by adopting a Poisson measurement likelihood model. The proposed approaches are validated over a WSN case study, where sensors have limited sensing ranges. Numerical results demonstrate the tracking accuracy and robustness of the proposed approaches. The derived UCBs constitute a tool for trustworthiness evaluation of DGP approaches. The simulation results reveal that the proposed UCBs successfully encompass the true target states with 88% and 42% higher probability in X and Y coordinates, respectively, when compared to the confidence interval-based method.
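
As a rough illustration of what "encompassing the true target states" means, the sketch below builds a generic band of the form mean ± sqrt(beta · variance) around posterior estimates and measures how often the true state falls inside it. This is the textbook GP-UCB style band, not the bounds derived in the paper; the names `confidence_band` and `coverage`, the scaling factor `beta`, and all numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def confidence_band(
    mean: Sequence[float], var: Sequence[float], beta: float
) -> List[Tuple[float, float]]:
    """Return (lower, upper) bounds mean -/+ sqrt(beta * var) per time step.

    `beta` is an assumed scaling factor; the paper derives its own bounds.
    """
    if beta < 0 or any(v < 0 for v in var):
        raise ValueError("beta and variances must be non-negative")
    half_widths = [math.sqrt(beta * v) for v in var]
    return [(m - h, m + h) for m, h in zip(mean, half_widths)]


def coverage(band: Sequence[Tuple[float, float]], truth: Sequence[float]) -> float:
    """Fraction of time steps at which the true state lies inside the band."""
    hits = sum(1 for (lo, hi), x in zip(band, truth) if lo <= x <= hi)
    return hits / len(truth)


if __name__ == "__main__":
    # Made-up posterior estimates for one coordinate of a target track.
    posterior_mean = [0.0, 1.0, 2.0]
    posterior_var = [0.25, 0.25, 0.25]
    true_state = [0.5, 2.5, 2.0]
    band = confidence_band(posterior_mean, posterior_var, beta=4.0)
    print(band, coverage(band, true_state))
```

Comparing the coverage of two competing bands on the same track, per coordinate, is one simple way to express the kind of comparison the abstract reports.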

Explainable AI for Intelligence Augmentation in Multi-Domain Operations

Oct 16, 2019

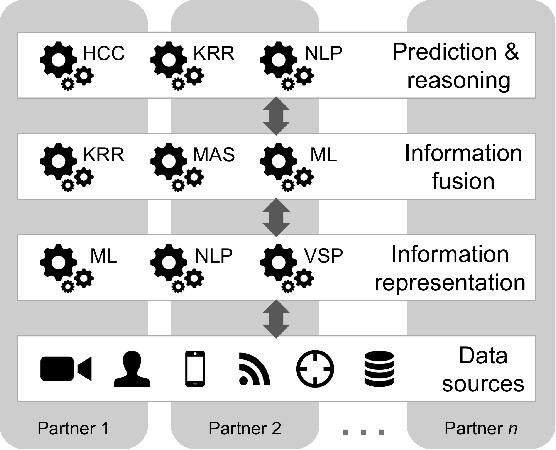

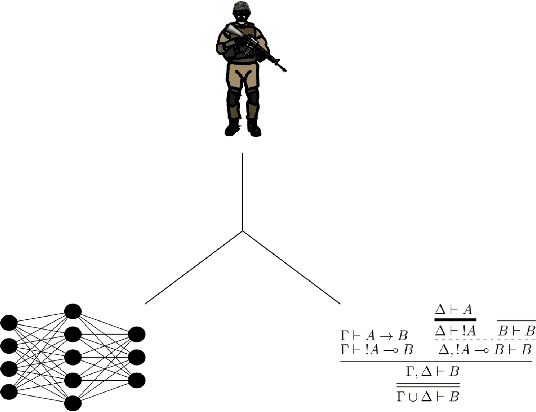

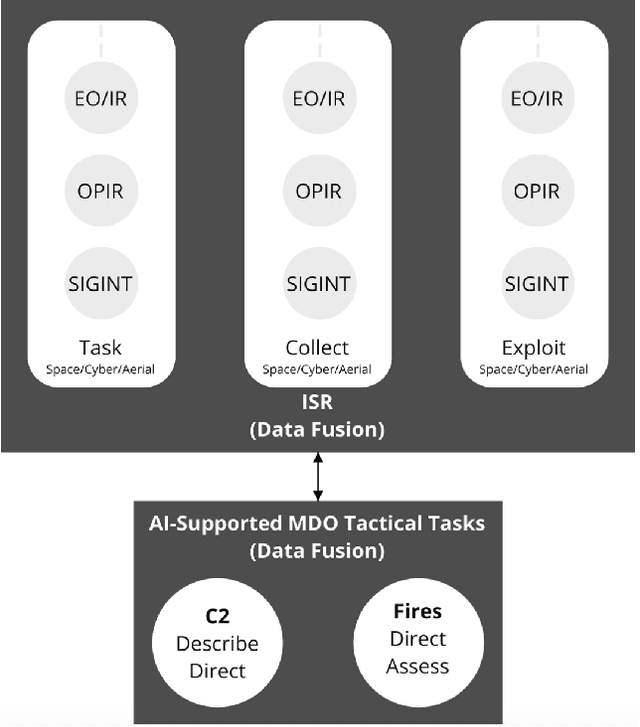

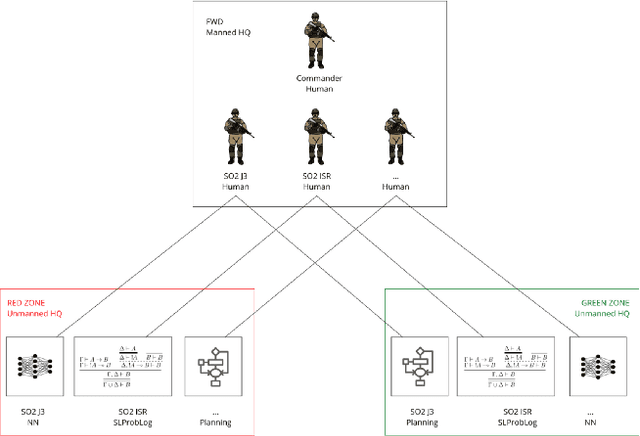

Abstract:Central to the concept of multi-domain operations (MDO) is the utilization of an intelligence, surveillance, and reconnaissance (ISR) network consisting of overlapping systems of remote and autonomous sensors, and human intelligence, distributed among multiple partners. Realising this concept requires advancement in both artificial intelligence (AI) for improved distributed data analytics and intelligence augmentation (IA) for improved human-machine cognition. The contribution of this paper is threefold: (1) we map the coalition situational understanding (CSU) concept to MDO ISR requirements, paying particular attention to the need for assured and explainable AI to allow robust human-machine decision-making where assets are distributed among multiple partners; (2) we present illustrative vignettes for AI and IA in MDO ISR, including human-machine teaming, dense urban terrain analysis, and enhanced asset interoperability; (3) we appraise the state-of-the-art in explainable AI in relation to the vignettes with a focus on human-machine collaboration to achieve more rapid and agile coalition decision-making. The union of these three elements is intended to show the potential value of a CSU approach in the context of MDO ISR, grounded in three distinct use cases, highlighting how the need for explainability in the multi-partner coalition setting is key.
