Tianyu Wo

Eq.Bot: Enhance Robotic Manipulation Learning via Group Equivariant Canonicalization

Nov 19, 2025

Abstract:Robotic manipulation systems are increasingly deployed across diverse domains. Yet existing multi-modal learning frameworks lack inherent guarantees of geometric consistency, struggling to handle spatial transformations such as rotations and translations. While recent works attempt to introduce equivariance through bespoke architectural modifications, these methods suffer from high implementation complexity, computational cost, and poor portability. Inspired by human cognitive processes in spatial reasoning, we propose Eq.Bot, a universal canonicalization framework grounded in SE(2) group equivariant theory for robotic manipulation learning. Our framework transforms observations into a canonical space, applies an existing policy, and maps the resulting actions back to the original space. As a model-agnostic solution, Eq.Bot aims to endow models with spatial equivariance without requiring architectural modifications. Extensive experiments demonstrate the superiority of Eq.Bot under both CNN-based (e.g., CLIPort) and Transformer-based (e.g., OpenVLA-OFT) architectures over existing methods on various robotic manipulation tasks, where the most significant improvement can reach 50.0%.
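The canonicalize, apply, map-back pattern described in the Eq.Bot abstract can be illustrated with a small sketch. This is not the authors' implementation: the observation is assumed to be a set of 2D points, and `estimate_pose`, `canonicalized_policy`, and `toy_policy` are hypothetical names introduced only for illustration. The canonical frame is assumed to be defined by the centroid and the direction to the farthest point.

```python
import numpy as np

def rot(theta):
    """2D rotation matrix for angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def estimate_pose(points):
    """Hypothetical canonicalizer: centroid plus direction to the farthest point."""
    center = points.mean(axis=0)
    offsets = points - center
    far = offsets[np.argmax(np.linalg.norm(offsets, axis=1))]
    theta = np.arctan2(far[1], far[0])
    return center, theta

def canonicalized_policy(policy, points):
    """Wrap an arbitrary policy so that its output is SE(2)-equivariant."""
    center, theta = estimate_pose(points)
    R = rot(theta)
    # Row-vector form of R^T (p - center): move the scene into the canonical frame.
    canonical = (points - center) @ R
    action = policy(canonical)      # the policy only ever sees canonical inputs
    return R @ action + center      # map the action back to the original frame

def toy_policy(points):
    """Stand-in for a non-equivariant policy: depends on the absolute x axis."""
    return points[np.argmax(points[:, 0])] + np.array([0.1, 0.0])

pts = np.array([[0.0, 0.0], [2.0, 0.5], [1.0, 3.0], [-1.0, 1.0]])
phi, t = 0.7, np.array([3.0, -2.0])
moved = pts @ rot(phi).T + t        # apply g = (phi, t) to every point

out = canonicalized_policy(toy_policy, pts)
out_moved = canonicalized_policy(toy_policy, moved)
print(np.allclose(out_moved, rot(phi) @ out + t))
```

Because the wrapped policy always receives the same canonical input regardless of how the scene is rotated or translated, the equivariance comes from the wrapper rather than from the policy architecture, which is the model-agnostic property the abstract emphasizes.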

MathSE: Improving Multimodal Mathematical Reasoning via Self-Evolving Iterative Reflection and Reward-Guided Fine-Tuning

Nov 10, 2025

Abstract:Multimodal large language models (MLLMs) have demonstrated remarkable capabilities in vision-language answering tasks. Despite their strengths, these models often struggle with complex reasoning tasks such as mathematical problem-solving. Previous works have focused on fine-tuning on specialized mathematical datasets. However, these datasets are typically distilled directly from teacher models, which capture only static reasoning patterns and leave substantial gaps compared to student models. This reliance on fixed teacher-derived datasets not only restricts the model's ability to adapt to novel or more intricate questions that extend beyond the confines of the training data, but also lacks the iterative depth needed for robust generalization. To overcome these limitations, we propose MathSE, a Mathematical Self-Evolving framework for MLLMs. In contrast to traditional one-shot fine-tuning paradigms, MathSE iteratively refines the model through cycles of inference, reflection, and reward-based feedback. Specifically, we leverage iterative fine-tuning by incorporating correct reasoning paths derived from previous-stage inference and integrating reflections from a specialized Outcome Reward Model (ORM). To verify the effectiveness of MathSE, we evaluate it on a suite of challenging benchmarks, demonstrating significant performance gains over backbone models. Notably, our experimental results on MathVL-test surpass the leading open-source multimodal mathematical reasoning model QVQ. Our code and models are available at https://zheny2751-dotcom.github.io/MathSE.github.io/.

Motion Forecasting for Autonomous Vehicles: A Survey

Feb 10, 2025

Abstract:In recent years, the field of autonomous driving has attracted increasingly significant public interest. Accurately forecasting the future behavior of various traffic participants is essential for the decision-making of Autonomous Vehicles (AVs). In this paper, we focus on both scenario-based and perception-based motion forecasting for AVs. We propose a formal problem formulation for motion forecasting and summarize the main challenges confronting this area of research. We also detail representative datasets and evaluation metrics pertinent to this field. Furthermore, this study classifies recent research into two main categories: supervised learning and self-supervised learning, reflecting the evolving paradigms in both scenario-based and perception-based motion forecasting. In the context of supervised learning, we thoroughly examine and analyze each key element of the methodology. For self-supervised learning, we summarize commonly adopted techniques. The paper concludes and discusses potential research directions, aiming to propel progress in this vital area of AV technology.

RobotDiffuse: Motion Planning for Redundant Manipulator based on Diffusion Model

Dec 27, 2024

Abstract:Redundant manipulators, with their higher Degrees of Freedom (DOFs), offer enhanced kinematic performance and versatility, making them suitable for applications like manufacturing, surgical robotics, and human-robot collaboration. However, motion planning for these manipulators is challenging due to increased DOFs and complex, dynamic environments. While traditional motion planning algorithms struggle with high-dimensional spaces, deep learning-based methods often face instability and inefficiency in complex tasks. This paper introduces RobotDiffuse, a diffusion model-based approach for motion planning in redundant manipulators. By integrating physical constraints with a point cloud encoder and replacing the U-Net structure with an encoder-only transformer, RobotDiffuse improves the model's ability to capture temporal dependencies and generate smoother, more coherent motion plans. We validate the approach using a complex simulator, and release a new dataset with 35M robot poses and 0.14M obstacle avoidance scenarios. Experimental results demonstrate the effectiveness of RobotDiffuse and the promise of diffusion models for motion planning tasks. The code can be accessed at https://github.com/ACRoboT-buaa/RobotDiffuse.

Deep Contrastive One-Class Time Series Anomaly Detection

Jul 04, 2022

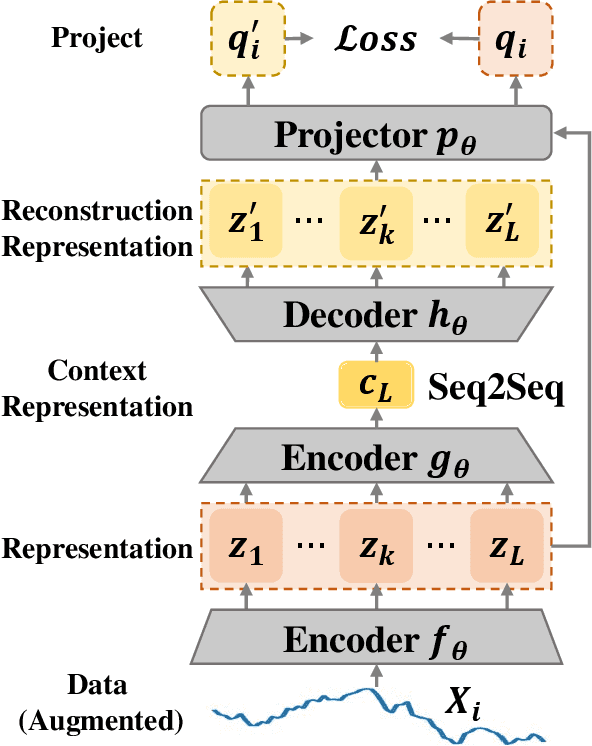

Abstract:The accumulation of time series data and the absence of labels make time-series Anomaly Detection (AD) a self-supervised deep learning task. Methods based on a single normality assumption may capture only one aspect of normality, which is not sufficient to detect diverse anomalies. Among them, contrastive learning methods adopted for AD always push apart negative pairs in which both samples are normal, which runs counter to the purpose of AD. Existing multi-assumption-based methods are usually two-staged, first applying a pre-training process whose target may differ from AD, so the performance is limited by the pre-trained representations. This paper proposes a deep Contrastive One-Class Anomaly detection method of time series (COCA), which combines the normality assumptions of contrastive learning and one-class classification. The key idea is to treat the representation and reconstructed representation as the positive pair of negative-samples-free contrastive learning, and we name it sequence contrast. Then we apply a contrastive one-class loss function composed of invariance and variance terms, the former optimizing the loss of the two assumptions simultaneously, and the latter preventing hypersphere collapse. Extensive experiments conducted on four real-world time-series datasets show that the proposed method achieves state-of-the-art performance. The code is publicly available at https://github.com/ruiking04/COCA.
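A loss built from an invariance term and a variance term, as the COCA abstract describes, might look roughly like the sketch below. The exact form is an assumption, not taken from the paper or its repository: the invariance term is assumed to be a cosine distance between each representation and a one-class center, and the variance term is assumed to be a hinge on the per-dimension standard deviation. All function names and the `weight`, `eps`, and `target` parameters are hypothetical.

```python
import numpy as np

def unit(x):
    """L2-normalize along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def invariance_term(z, z_rec, center):
    """Assumed form: pull both views toward the one-class center (cosine distance)."""
    c = unit(center)
    return np.mean((1.0 - unit(z) @ c) + (1.0 - unit(z_rec) @ c))

def variance_term(z, eps=1e-4, target=1.0):
    """Assumed form: penalize dimensions whose batch std falls below `target`."""
    std = np.sqrt(z.var(axis=0) + eps)
    return np.mean(np.maximum(0.0, target - std))

def coca_style_loss(z, z_rec, center, weight=1.0):
    """Invariance plus weighted variance terms for both representations."""
    var = variance_term(z) + variance_term(z_rec)
    return invariance_term(z, z_rec, center) + weight * var

center = np.array([1.0, 0.0, 0.0, 0.0])
collapsed = np.tile(center, (8, 1))                       # every sample sits on the center
spread = np.random.default_rng(0).normal(size=(64, 4)) * 2.0

print(invariance_term(collapsed, collapsed, center))      # close to zero
print(variance_term(collapsed), variance_term(spread))    # collapse is penalized
```

In this sketch a fully collapsed batch minimizes the invariance term but pays the largest variance penalty, which is the trade-off the abstract attributes to the two terms.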

Passenger Mobility Prediction via Representation Learning for Dynamic Directed and Weighted Graph

Jan 04, 2021

Abstract:In recent years, ride-hailing services have been increasingly prevalent as they provide huge convenience for passengers. As a fundamental problem, the timely prediction of passenger demands in different regions is vital for effective traffic flow control and route planning. As both spatial and temporal patterns are indispensable for passenger demand prediction, relevant research has evolved from pure time series to graph-structured data for modeling historical passenger demand data, where a snapshot graph is constructed for each time slot by connecting region nodes via different relational edges (e.g., origin-destination relationship, geographical distance, etc.). Consequently, the spatiotemporal passenger demand records naturally carry dynamic patterns in the constructed graphs, where the edges also encode important information about the directions and volume (i.e., weights) of passenger demands between two connected regions. However, existing graph-based solutions fail to simultaneously consider those three crucial aspects of dynamic, directed, and weighted (DDW) graphs, leading to limited expressiveness when learning graph representations for passenger demand prediction. Therefore, we propose a novel spatiotemporal graph attention network, namely Gallat (Graph prediction with all attention) as a solution. In Gallat, by comprehensively incorporating those three intrinsic properties of DDW graphs, we build three attention layers to fully capture the spatiotemporal dependencies among different regions across all historical time slots. Moreover, the model employs a subtask to conduct pretraining so that it can obtain accurate results more quickly. We evaluate the proposed model on real-world datasets, and our experimental results demonstrate that Gallat outperforms the state-of-the-art approaches.
