Deep Contrastive One-Class Time Series Anomaly Detection

Jul 04, 2022

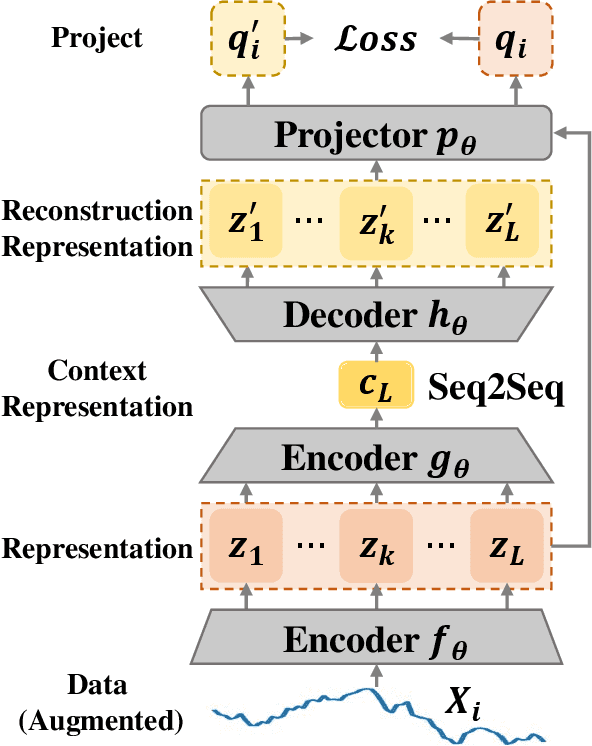

The accumulation of time series data and the absence of labels make time-series Anomaly Detection (AD) a self-supervised deep learning task. Methods based on a single normality assumption may capture only one aspect of normality, which is not sufficient to detect diverse anomalies. Among them, contrastive learning methods adopted for AD always push apart negative pairs in which both samples are normal, which runs counter to the purpose of AD. Existing multi-assumption methods are usually two-stage: they first apply a pre-training process whose objective may differ from AD, so their performance is limited by the pre-trained representations. This paper proposes a deep Contrastive One-Class Anomaly detection method for time series (COCA), which combines the normality assumptions of contrastive learning and one-class classification. The key idea is to treat the representation and the reconstructed representation as the positive pair in negative-sample-free contrastive learning, which we name sequence contrast. We then apply a contrastive one-class loss function composed of an invariance term and a variance term: the former optimizes the losses of the two assumptions simultaneously, and the latter prevents hypersphere collapse. Extensive experiments on four real-world time-series datasets show that the proposed method achieves state-of-the-art performance. The code is publicly available at https://github.com/ruiking04/COCA.
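
The loss described in the abstract, an invariance term plus a variance term, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation (see the repository linked above for that). The function names, the cosine-distance form of the invariance term, the hinge-on-standard-deviation form of the variance term, and the parameters `var_weight`, `target_std`, and `eps` are all assumptions chosen for illustration.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Scale vectors along the last axis to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def contrastive_one_class_loss(z, z_hat, center,
                               var_weight=1.0, target_std=1.0, eps=1e-4):
    """Illustrative loss with an invariance and a variance term.

    z       : (N, D) representations of the input windows
    z_hat   : (N, D) reconstructed representations (the positive pair)
    center  : (D,)   one-class center
    All names and the exact form of both terms are assumptions.
    """
    c = l2_normalize(center)
    # Invariance term (assumed form): cosine distance of BOTH members of
    # the positive pair to the shared center, so one term serves the
    # contrastive and the one-class assumption at the same time.
    inv = np.mean(1.0 - l2_normalize(z) @ c) \
        + np.mean(1.0 - l2_normalize(z_hat) @ c)
    # Variance term (assumed form): hinge on the per-dimension standard
    # deviation across the batch, which penalizes all samples collapsing
    # onto a single point.
    std_z = np.sqrt(z.var(axis=0) + eps)
    std_zh = np.sqrt(z_hat.var(axis=0) + eps)
    var = np.mean(np.maximum(0.0, target_std - std_z)) \
        + np.mean(np.maximum(0.0, target_std - std_zh))
    return inv + var_weight * var, inv, var


def anomaly_score(z, z_hat, center):
    """Per-sample score (assumed): summed cosine distance to the center."""
    c = l2_normalize(center)
    return (1.0 - l2_normalize(z) @ c) + (1.0 - l2_normalize(z_hat) @ c)
```

In this sketch, a batch whose representations all equal the center has an invariance term near zero but a large variance term, which is the collapse case the variance term is meant to discourage.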
