Xiaohui Guo

A novel Multi to Single Module for small object detection

Mar 27, 2023

Abstract: Small object detection presents a significant challenge in computer vision and object detection. The performance of small object detectors is often compromised by a lack of pixels and less significant features. This issue stems from information misalignment caused by variations in feature scale and from information loss during feature processing. In response to this challenge, this paper proposes a novel Multi to Single Module (M2S), which enhances a specific layer by improving feature extraction and refining features. Specifically, M2S includes the proposed Cross-scale Aggregation Module (CAM) and the explored Dual Relationship Module (DRM) to improve information extraction and feature refinement. Moreover, this paper enhances the accuracy of small object detection by using M2S to generate an additional detection head. The effectiveness of the proposed method is evaluated on two datasets, VisDrone2021-DET and SeaDronesSeeV2. The experimental results demonstrate improved performance compared with existing methods. Compared to the baseline model (YOLOv5s), M2S improves the accuracy by about 1.1% on the VisDrone2021-DET testing dataset and 15.68% on the SeaDronesSeeV2 validation set.

Deep Contrastive One-Class Time Series Anomaly Detection

Jul 04, 2022

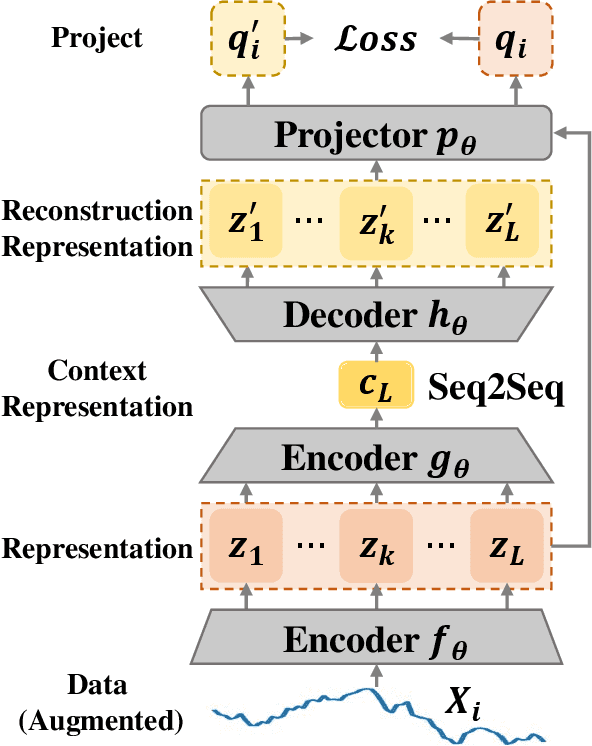

Abstract: The accumulation of time series data and the absence of labels make time-series Anomaly Detection (AD) a self-supervised deep learning task. Single-assumption-based methods may capture only one aspect of normality, which is not sufficient to detect various anomalies. Among them, contrastive learning methods adopted for AD always push apart negative pairs whose members are both normal, which runs counter to the purpose of AD. Existing multi-assumption-based methods are usually two-staged: they first apply a pre-training process whose target may differ from AD, so the performance is limited by the pre-trained representations. This paper proposes a deep Contrastive One-Class Anomaly detection method of time series (COCA), which combines the normality assumptions of contrastive learning and one-class classification. The key idea is to treat the representation and the reconstructed representation as the positive pair of negative-samples-free contrastive learning, which we name sequence contrast. We then apply a contrastive one-class loss function composed of invariance and variance terms, the former optimizing the loss of the two assumptions simultaneously and the latter preventing hypersphere collapse. Extensive experiments conducted on four real-world time-series datasets show that the proposed method achieves state-of-the-art performance. The code is publicly available at https://github.com/ruiking04/COCA.
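
The abstract does not give the loss formula, so the following is only a minimal sketch of what an invariance-plus-variance loss of this kind could look like. It assumes cosine similarity to a single center vector for the invariance term and a hinge on the per-dimension batch standard deviation for the variance term. The function names, `var_weight`, `target_std`, and `tiny` are hypothetical and are not taken from the COCA repository.

```python
import numpy as np

def cosine_to_center(z, center):
    """Cosine similarity between each row of z and a single center vector."""
    z_unit = z / np.linalg.norm(z, axis=1, keepdims=True)
    c_unit = center / np.linalg.norm(center)
    return z_unit @ c_unit

def variance_penalty(z, target_std=1.0, tiny=1e-4):
    """Hinge on the per-dimension standard deviation across the batch.

    The penalty is large when all rows of z are (nearly) identical,
    which is the collapse the variance term is meant to discourage.
    """
    std = np.sqrt(z.var(axis=0) + tiny)
    return float(np.mean(np.maximum(0.0, target_std - std)))

def contrastive_one_class_loss(z, z_rec, center, var_weight=1.0):
    """Assumed form: pull z and its reconstruction z_rec towards one center
    (invariance) while keeping batch variance above a floor (variance)."""
    sim = 0.5 * (cosine_to_center(z, center) + cosine_to_center(z_rec, center))
    invariance = float(np.mean(1.0 - sim))
    variance = 0.5 * (variance_penalty(z) + variance_penalty(z_rec))
    return invariance + var_weight * variance, invariance, variance
```

In this sketch, pulling both members of the positive pair towards the same center serves the contrastive and one-class assumptions with a single term, while the variance term penalizes a batch whose representations have all collapsed to one point.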

Robust Regularization with Adversarial Labelling of Perturbed Samples

May 28, 2021

Abstract: Recent research has suggested that the predictive accuracy of a neural network may conflict with its adversarial robustness. This presents challenges in designing effective regularization schemes that also provide strong adversarial robustness. Revisiting Vicinal Risk Minimization (VRM) as a unifying regularization principle, we propose Adversarial Labelling of Perturbed Samples (ALPS) as a regularization scheme that aims to improve the generalization ability and adversarial robustness of the trained model. ALPS trains neural networks with synthetic samples formed by perturbing each authentic input sample towards another one, along with an adversarially assigned label. The ALPS regularization objective is formulated as a min-max problem, in which the outer problem minimizes an upper bound of the VRM loss and the inner problem is L$_1$-ball constrained adversarial labelling of the perturbed sample. The analytic solution to the induced inner maximization problem is derived in closed form, which enables computational efficiency. Experiments on the SVHN, CIFAR-10, CIFAR-100 and Tiny-ImageNet datasets show that ALPS achieves state-of-the-art regularization performance while also serving as an effective adversarial training scheme.
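
To make the inner problem concrete, here is a hedged sketch of one possible reading of it, not the paper's derivation. It assumes the perturbed sample is a linear interpolation between two inputs, that the nominal label is a probability vector, and that the adversary picks the label inside an L1-ball of radius `eps` around the nominal label (and on the probability simplex) that maximizes cross-entropy. Because cross-entropy is linear in the label, that maximization has a simple greedy solution. All names below are hypothetical.

```python
import numpy as np

def perturb_towards(x, x_other, lam):
    """Move x a fraction lam of the way towards x_other (assumed perturbation)."""
    return (1.0 - lam) * x + lam * x_other

def adversarial_label(y_nominal, log_probs, eps):
    """Maximize -sum(y * log_probs) over probability vectors y
    subject to ||y - y_nominal||_1 <= eps.

    Moving mass m from one class to another changes the L1 distance by 2m,
    so at most eps / 2 mass can be moved. The best move sends it to the
    class with the highest loss, taking it first from the lowest-loss classes.
    """
    loss_per_class = -np.asarray(log_probs, dtype=float)
    y = np.asarray(y_nominal, dtype=float).copy()
    worst = int(np.argmax(loss_per_class))
    budget = eps / 2.0
    for k in np.argsort(loss_per_class):  # lowest-loss classes first
        if budget <= 0:
            break
        if k == worst:
            continue
        moved = min(y[k], budget)
        y[k] -= moved
        y[worst] += moved
        budget -= moved
    return y
```

For example, with nominal label `[0.7, 0.3, 0.0]`, predicted probabilities `[0.6, 0.3, 0.1]`, and `eps = 0.4`, the sketch moves 0.2 of probability mass from the first class to the third, the class the model considers least likely.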
