Thomas Eboli

SING: A Plug-and-Play DNN Learning Technique

May 25, 2023

Abstract:We propose SING (StabIlized and Normalized Gradient), a plug-and-play technique that improves the stability and generalization of the Adam(W) optimizer. SING is straightforward to implement and has minimal computational overhead, requiring only a layer-wise standardization of the gradients fed to Adam(W) without introducing additional hyper-parameters. We support the effectiveness and practicality of the proposed approach by showing improved results on a wide range of architectures, problems (such as image classification, depth estimation, and natural language processing), and in combination with other optimizers. We provide a theoretical analysis of the convergence of the method, and we show that by virtue of the standardization, SING can escape local minima narrower than a threshold that is inversely proportional to the network's depth.
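The layer-wise standardization mentioned in the SING abstract can be illustrated with a minimal sketch. It assumes "standardization" means subtracting each layer's gradient mean and dividing by its standard deviation; the function name `standardize_layerwise`, the `eps` constant, and the flat Python lists standing in for gradient tensors are all hypothetical, and the paper's exact definition may differ.

```python
import math


def standardize_layerwise(grads, eps=1e-8):
    """Return a copy of `grads` where each layer has zero mean and unit std.

    `grads` maps a layer name to a flat list of gradient values.
    `eps` (an assumption, not from the abstract) guards against division by zero.
    """
    standardized = {}
    for name, g in grads.items():
        mean = sum(g) / len(g)
        var = sum((v - mean) ** 2 for v in g) / len(g)
        std = math.sqrt(var)
        standardized[name] = [(v - mean) / (std + eps) for v in g]
    return standardized


# Two layers whose raw gradients differ by several orders of magnitude.
grads = {
    "layer1.weight": [0.5, -1.5, 1.0, 2.0],
    "layer2.weight": [1e-4, 3e-4, -2e-4, 0.0],
}
standardized = standardize_layerwise(grads)
```

In this sketch, the standardized values would be handed to Adam(W) in place of the raw gradients, so every layer contributes gradients on a comparable scale regardless of its raw magnitude.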

Collaborative Blind Image Deblurring

May 25, 2023

Abstract:Blurry images usually exhibit similar blur at various locations across the image domain, a property barely exploited by current blind deblurring neural networks. We show that when extracting patches of similar underlying blur is possible, jointly processing the stack of patches yields higher accuracy than handling them separately. Our collaborative scheme is implemented in a neural architecture with a pooling layer on the stack dimension. We present three practical patch extraction strategies for image sharpening, camera shake removal and optical aberration correction, and validate the proposed approach on both synthetic and real-world benchmarks. For each blur instance, the proposed collaborative strategy yields significant quantitative and qualitative improvements.
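Pooling on the stack dimension can be sketched as follows. The abstract does not say which pooling operator is used or how the pooled result is fed back, so the element-wise max and the concatenation step below are assumptions, and the names `pool_over_stack` and `share_pooled_features` are hypothetical.

```python
def pool_over_stack(stack_features):
    """Element-wise max across the stack: one pooled vector for all patches."""
    return [max(column) for column in zip(*stack_features)]


def share_pooled_features(stack_features):
    """Append the pooled vector to each patch's own feature vector."""
    pooled = pool_over_stack(stack_features)
    return [features + pooled for features in stack_features]


# Three patches assumed to share the same blur, each with a 3-value feature vector.
stack = [
    [0.1, 0.9, 0.3],
    [0.4, 0.2, 0.8],
    [0.7, 0.5, 0.6],
]
pooled = pool_over_stack(stack)        # [0.7, 0.9, 0.8]
shared = share_pooled_features(stack)  # each patch now carries 6 values
```

Because the max is taken over the stack dimension, the pooled vector does not depend on the order of the patches, which is one reason such an operator suits an unordered collection of patches.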

Handheld Burst Super-Resolution Meets Multi-Exposure Satellite Imagery

Mar 10, 2023

Abstract:Image resolution is an important criterion for many applications based on satellite imagery. In this work, we adapt a state-of-the-art kernel regression technique for smartphone camera burst super-resolution to satellites. This technique leverages the local structure of the image to optimally steer the fusion kernels, limiting blur in the final high-resolution prediction, denoising the image, and recovering details up to a zoom factor of 2. We extend this approach to the multi-exposure case to predict a high-resolution, noise-free image from a sequence of multi-exposure low-resolution frames. Experiments on both single and multi-exposure scenarios show the merits of the approach. Since the fusion is learning-free, the proposed method is guaranteed not to hallucinate details, which is crucial for many remote sensing applications.

Fast Two-step Blind Optical Aberration Correction

Aug 01, 2022

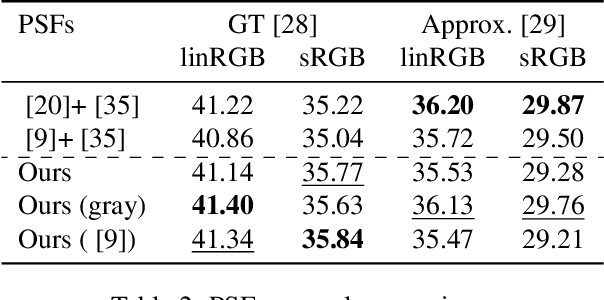

Abstract:The optics of any camera degrades the sharpness of photographs, which is a key visual quality criterion. This degradation is characterized by the point-spread function (PSF), which depends on the wavelengths of light and is variable across the imaging field. In this paper, we propose a two-step scheme to correct optical aberrations in a single raw or JPEG image, i.e., without any prior information on the camera or lens. First, we estimate local Gaussian blur kernels for overlapping patches and sharpen them with a non-blind deblurring technique. Based on the measurements of the PSFs of dozens of lenses, these blur kernels are modeled as RGB Gaussians defined by seven parameters. Second, we remove the remaining lateral chromatic aberrations (not contemplated in the first step) with a convolutional neural network, trained to minimize the red/green and blue/green residual images. Experiments on both synthetic and real images show that the combination of these two stages yields a fast state-of-the-art blind optical aberration compensation technique that competes with commercial non-blind algorithms.

High Dynamic Range and Super-Resolution from Raw Image Bursts

Jul 29, 2022

Abstract:Photographs captured by smartphones and mid-range cameras have limited spatial resolution and dynamic range, with noisy response in underexposed regions and color artefacts in saturated areas. This paper introduces the first approach (to the best of our knowledge) to the reconstruction of high-resolution, high-dynamic range color images from raw photographic bursts captured by a handheld camera with exposure bracketing. This method uses a physically-accurate model of image formation to combine an iterative optimization algorithm for solving the corresponding inverse problem with a learned image representation for robust alignment and a learned natural image prior. The proposed algorithm is fast, with low memory requirements compared to state-of-the-art learning-based approaches to image restoration, and features that are learned end to end from synthetic yet realistic data. Extensive experiments demonstrate its excellent performance with super-resolution factors of up to $\times 4$ on real photographs taken in the wild with hand-held cameras, and high robustness to low-light conditions, noise, camera shake, and moderate object motion.

Learning to Jointly Deblur, Demosaick and Denoise Raw Images

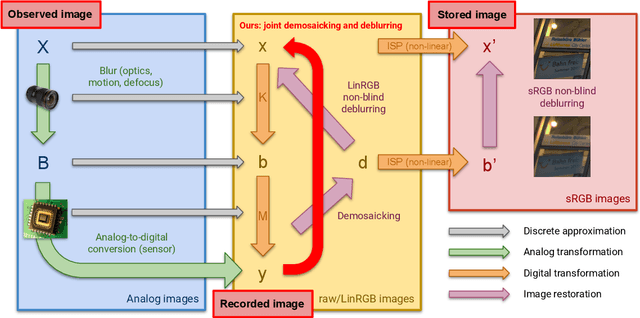

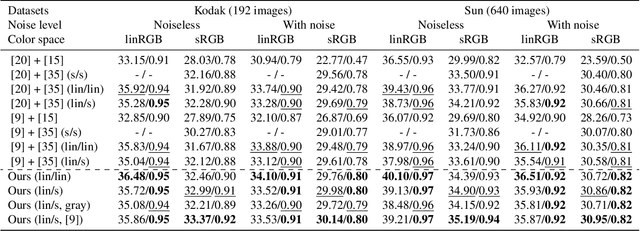

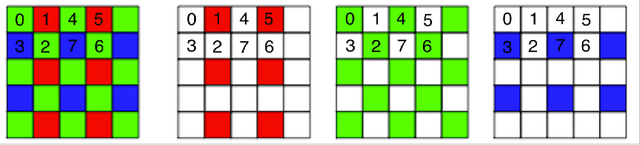

Apr 13, 2021

Abstract:We address the problem of non-blind deblurring and demosaicking of noisy raw images. We adapt an existing learning-based approach to RGB image deblurring to handle raw images by introducing a new interpretable module that jointly demosaicks and deblurs them. We train this model on RGB images converted into raw ones following a realistic invertible camera pipeline. We demonstrate the effectiveness of this model over two-stage approaches stacking demosaicking and deblurring modules on quantitative benchmarks. We also apply our approach to remove a camera's inherent blur (its color-dependent point-spread function) from real images, in essence deblurring sharp images.

End-to-end Interpretable Learning of Non-blind Image Deblurring

Jul 03, 2020

Abstract:Non-blind image deblurring is typically formulated as a linear least-squares problem regularized by natural priors on the corresponding sharp picture's gradients, which can be solved, for example, using a half-quadratic splitting method with Richardson fixed-point iterations for its least-squares updates and a proximal operator for the auxiliary variable updates. We propose to precondition the Richardson solver using approximate inverse filters of the (known) blur and natural image prior kernels. Using convolutions instead of a generic linear preconditioner allows extremely efficient parameter sharing across the image, and leads to significant gains in accuracy and/or speed compared to classical FFT and conjugate-gradient methods. More importantly, the proposed architecture is easily adapted to learning both the preconditioner and the proximal operator using CNN embeddings. This yields a simple and efficient algorithm for non-blind image deblurring which is fully interpretable, can be learned end to end, and whose accuracy matches or exceeds the state of the art, quite significantly, in the non-uniform case.
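The preconditioned Richardson iteration named in this abstract follows the generic update x ← x + C(b − Ax), where C approximates the inverse of A. The sketch below is only an illustration on a tiny dense system: the paper applies convolutions with approximate inverse filters, whereas the diagonal (Jacobi) preconditioner, the matrix `A`, and the function names here are stand-in assumptions.

```python
def matvec(A, x):
    """Dense matrix-vector product for small lists of lists."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def preconditioned_richardson(A, b, apply_preconditioner, num_iters=50):
    """Iterate x <- x + C (b - A x), with C given by `apply_preconditioner`."""
    x = [0.0] * len(b)
    for _ in range(num_iters):
        residual = [bi - yi for bi, yi in zip(b, matvec(A, x))]
        correction = apply_preconditioner(residual)
        x = [xi + ci for xi, ci in zip(x, correction)]
    return x


# Toy diagonally dominant system whose exact solution is [1, 1, 1].
A = [[4.0, 1.0, 0.0],
     [1.0, 4.0, 1.0],
     [0.0, 1.0, 4.0]]
b = [5.0, 6.0, 5.0]


def jacobi(residual):
    """Stand-in preconditioner: divide by the diagonal of A (an assumption)."""
    return [ri / A[i][i] for i, ri in enumerate(residual)]


x = preconditioned_richardson(A, b, jacobi)
```

The closer C is to the true inverse of A, the fewer iterations are needed, which is the motivation for using a good approximate inverse as the preconditioner.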

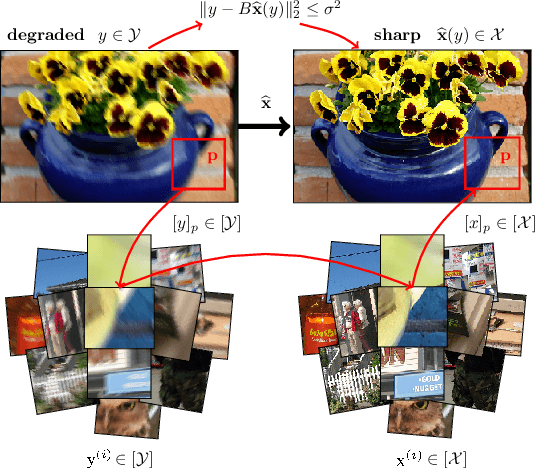

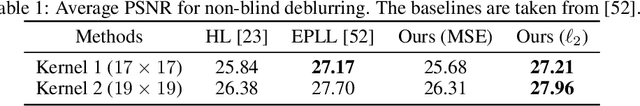

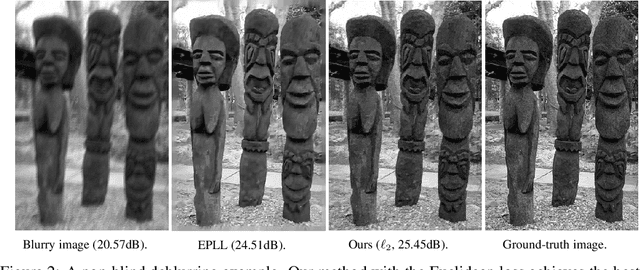

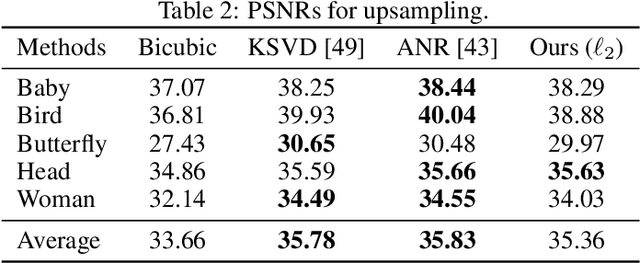

Structured and Localized Image Restoration

Jun 16, 2020

Abstract:We present a novel approach to image restoration that leverages ideas from localized structured prediction and non-linear multi-task learning. We optimize a penalized energy function regularized by a sum of terms measuring the distance between patches to be restored and clean patches from an external database gathered beforehand. The resulting estimator comes with strong statistical guarantees leveraging local dependency properties of overlapping patches. We derive the corresponding algorithms for energies based on the mean-squared and Euclidean norm errors. Finally, we demonstrate the practical effectiveness of our model on different image restoration problems using standard benchmarks.
