Tam Vu

QSM-RimDS: A highly sensitive paramagnetic rim lesion detection and segmentation tool for multiple sclerosis lesions

Dec 13, 2024

Abstract: Paramagnetic rim lesions (PRLs) are an imaging biomarker of the innate immune response in MS lesions. QSM-RimNet, a state-of-the-art tool for PRL detection on QSM, can identify PRLs but requires a precise QSM lesion mask and does not provide rim segmentation. The aims of this study are therefore to develop the QSM-RimDS algorithm to detect PRLs using the readily available FLAIR lesion mask and to provide rim segmentation for microglial quantification. QSM-RimDS, a deep-learning-based tool for joint PRL rim segmentation and PRL detection, has been developed. QSM-RimDS has obtained state-of-the-art performance in PRL detection and therefore has the potential to be used in clinical practice as a tool to assist human readers with the time-consuming PRL detection and segmentation task. QSM-RimDS is publicly available (https://github.com/kennyha85/QSM_RimDS).

UR2M: Uncertainty and Resource-Aware Event Detection on Microcontrollers

Feb 17, 2024

Abstract: Traditional machine learning techniques are prone to generating inaccurate predictions when confronted with shifts in the distribution of data between the training and testing phases. This vulnerability can lead to severe consequences, especially in applications such as mobile healthcare. Uncertainty estimation has the potential to mitigate this issue by assessing the reliability of a model's output. However, existing uncertainty estimation techniques often require substantial computational resources and memory, making them impractical for implementation on microcontrollers (MCUs). This limitation hinders the feasibility of many important on-device wearable event detection (WED) applications, such as heart attack detection. In this paper, we present UR2M, a novel Uncertainty and Resource-aware event detection framework for MCUs. Specifically, we (i) develop an uncertainty-aware WED based on evidential theory for accurate event detection and reliable uncertainty estimation; (ii) introduce a cascade ML framework to achieve efficient model inference via early exits, by sharing shallower model layers among different event models; and (iii) optimize the deployment of the model and MCU library for system efficiency. We conducted extensive experiments and compared UR2M to traditional uncertainty baselines using three wearable datasets. Our results demonstrate that UR2M achieves up to 864% faster inference, 857% energy savings for uncertainty estimation, 55% memory savings on two popular MCUs, and a 22% improvement in uncertainty quantification performance. UR2M can be deployed on a wide range of MCUs, significantly expanding real-time and reliable WED applications.
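As a rough illustration of the two ideas named in the abstract above (evidential uncertainty and early exits), the sketch below uses the common Dirichlet formulation of evidential learning, in which non-negative evidence per class yields an uncertainty mass of K / sum(alpha). This is an assumption about the general technique, not the UR2M implementation; the function names, the `stages` callables, and the `threshold` value are all hypothetical.

```python
def evidential_uncertainty(logits):
    """Return (class probabilities, uncertainty mass) from raw model outputs.

    Assumed formulation (not taken from the abstract):
    evidence = ReLU(logits), alpha = evidence + 1, uncertainty = K / sum(alpha).
    """
    evidence = [max(float(z), 0.0) for z in logits]
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)
    probs = [a / strength for a in alpha]
    uncertainty = len(alpha) / strength
    return probs, uncertainty


def cascade_predict(x, stages, threshold=0.3):
    """Run exits from shallow to deep and stop at the first confident one.

    `stages` is a hypothetical list of callables, each mapping the input to
    logits; deeper stages are only evaluated when earlier ones are uncertain.
    Returns (predicted class index, exit index, uncertainty).
    """
    if not stages:
        raise ValueError("at least one stage is required")
    for i, stage in enumerate(stages):
        probs, u = evidential_uncertainty(stage(x))
        is_last = i == len(stages) - 1
        if u <= threshold or is_last:
            predicted = max(range(len(probs)), key=probs.__getitem__)
            return predicted, i, u


# Toy usage: a weak shallow exit followed by a more confident deep exit.
shallow = lambda x: [0.5, 0.0]
deep = lambda x: [8.0, 0.0]
print(cascade_predict(None, [shallow, deep]))
```

With little evidence the uncertainty mass stays close to 1, so the cascade falls through to the deeper stage; with strong evidence for one class it drops and the shallow exit can answer alone, which is where the compute savings of an early-exit design would come from.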

An Unobtrusive and Lightweight Ear-worn System for Continuous Epileptic Seizure Detection

Jan 01, 2024

Abstract: Epilepsy is one of the most common neurological diseases, affecting around 50 million people worldwide. Fortunately, up to 70 percent of people with epilepsy could live seizure-free if properly diagnosed and treated, and a reliable technique to monitor the onset of seizures could improve the quality of life of patients who constantly face the fear of random seizure attacks. The scalp-based EEG test, despite being the gold standard for diagnosing epilepsy, is costly, necessitates hospitalization, demands skilled professionals for operation, and is uncomfortable for users. In this paper, we propose EarSD, a novel lightweight, unobtrusive, and socially acceptable ear-worn system to detect epileptic seizure onsets by measuring the physiological signals from behind the user's ears. EarSD includes an integrated custom-built sensing, computing, and communication PCB to collect and amplify the signals of interest, remove noise caused by motion artifacts and environmental effects, and stream the data wirelessly to a nearby computer or mobile phone, where data are uploaded to the host computer for further processing. We conducted both in-lab and in-hospital experiments with epileptic seizure patients who were hospitalized for seizure studies. The preliminary results confirm that EarSD can detect seizures with up to 95.3 percent accuracy using only classical machine learning algorithms.

A pilot study of the Earable device to measure facial muscle and eye movement tasks among healthy volunteers

Feb 01, 2022

Abstract: Many neuromuscular disorders impair function of cranial nerve-innervated muscles. Clinical assessment of cranial muscle function has several limitations. Clinician rating of symptoms suffers from inter-rater variation, qualitative or semi-quantitative scoring, and limited ability to capture infrequent or fluctuating symptoms. Patient-reported outcomes are limited by recall bias and poor precision. Current tools to measure orofacial and oculomotor function are cumbersome, difficult to implement, and non-portable. Here, we show how Earable, a wearable device, can discriminate certain cranial muscle activities such as chewing, talking, and swallowing. Using data from a pilot study of 10 healthy participants, we demonstrate how Earable can be used to measure features from EMG, EEG, and EOG waveforms from subjects performing mock Performance Outcome Assessments (mock-PerfOs), which are widely used in clinical research. Our analysis pipeline provides a framework for how to computationally process and statistically rank features from the Earable device. Finally, we demonstrate that Earable data may be used to classify these activities. Our results, obtained in a pilot study of healthy participants, enable a more comprehensive strategy for the design, development, and analysis of wearable sensor data for investigating clinical populations. Additionally, the results from this study support further evaluation of Earable or similar devices as tools to objectively measure cranial muscle activity in a clinical research setting. Future work will be conducted in clinical disease populations, with a focus on detecting disease signatures as well as monitoring intra-subject treatment responses. Readily available quantitative metrics from wearable sensor devices like Earable support strategies for the development of novel digital endpoints, a hallmark goal of clinical research.

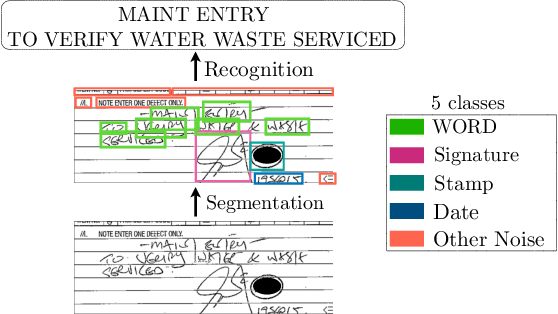

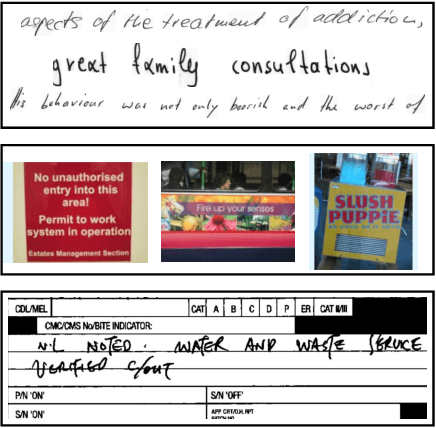

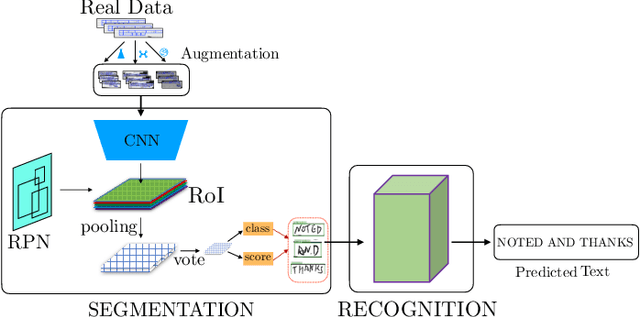

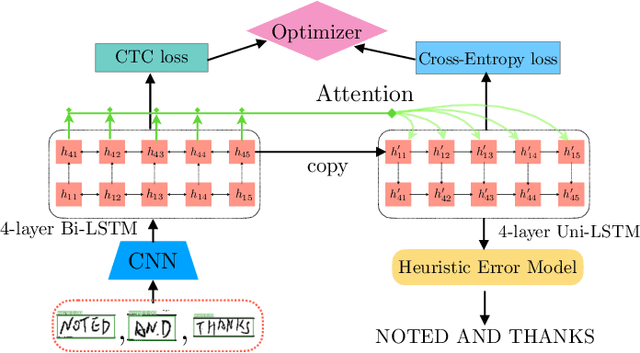

Robust Handwriting Recognition with Limited and Noisy Data

Aug 18, 2020

Abstract: Despite the advent of deep learning in computer vision, the general handwriting recognition problem is far from solved. Most existing approaches focus on handwriting datasets that have clearly written text and carefully segmented labels. In this paper, we instead focus on learning handwritten characters from maintenance logs, a constrained setting where data is very limited and noisy. We break the problem into two consecutive stages, word segmentation and word recognition, and use data augmentation techniques to train both stages. Extensive comparisons with popular baselines for scene-text detection and word recognition show that our system achieves a lower error rate and is better suited to handling noisy and difficult documents.
